May 19, 2015

Quite often recently, Twitch VoDs are just unplayable for me through the Flash player - no idea what happens on the backend there, but it buffers endlessly at any quality level and that's it.

I also need to skip to some arbitrary part of the 7-hour stream (last WCS SC2 Ro32), as I've watched half of it live, which turns out to complicate things a bit.

So the solution is to download the thing, which goes something like this:

It's just a video, right? Let's grab whatever stream Flash is playing (with e.g. the FlashGot FF addon).

Doesn't work easily, since the video is heavily chunked. It used to be 30-min flv chunks, which are kinda OK, but these days it's forced 4s chunks - apparently the backend doesn't even allow downloading more than 4s per request.

Fine, youtube-dl it is.

Nope. It doesn't allow seeking to a specific time in the stream. The livestreamer wrapper around the thing doesn't allow it either. Tried the ?t=3h30m URL parameter - doesn't work, sadly.

mpv supports youtube-dl and seeking, so use that.

Kinda works, but only for super-short seeks. Seeking beyond e.g. 1 hour takes AGES, and every seek after that (even skipping a few seconds ahead) takes longer and longer.

youtube-dl --get-url gets the m3u8 playlist link, so use ffmpeg -ss <pos> with it.

Apparently works exactly the same as mpv above - takes like 20-30 min to seek to 3:30:00 (3.5-hour offset). Dunno if it downloads and checks every chunk in the playlist for length sequentially... sounds dumb, but no clue why it's that slow otherwise; apparently ffmpeg is just not good with these playlists.

Grab the m3u8 playlist, change all relative links there into full URLs, remove a bunch of them from the start to emulate a seek, and play that with ffmpeg | mpv.

Works at first, but gets totally stuck a few seconds/minutes into the video, with ffmpeg doing bitrates of ~10 KiB/s.

youtube-dl apparently gets stuck in a similar fashion, as it does the same ffmpeg-on-a-playlist trick (just without changing the playlist).
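The playlist-rewriting trick from above might look like this in Python; a minimal sketch that assumes a plain VoD m3u8 where each chunk URI is preceded by its own #EXTINF tag (absolutize_playlist and the example URL are made up for illustration):

```python
from urllib.parse import urljoin


def absolutize_playlist(text, base_url, skip_chunks=0):
    """Rewrite an m3u8 VoD playlist: make chunk URIs absolute and drop
    the first `skip_chunks` chunks (a crude way to "seek")."""
    out, extinf, seen = [], None, 0
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#EXTINF"):
            extinf = line  # describes the next URI line
        elif line.startswith("#"):
            out.append(line)  # playlist-level tags are kept as-is
        else:
            if seen >= skip_chunks:
                if extinf:
                    out.append(extinf)
                # relative "index-N.ts?..." becomes a full URL next to the playlist
                out.append(urljoin(base_url, line))
            extinf, seen = None, seen + 1
    return "\n".join(out) + "\n"
```

E.g. absolutize_playlist(text, "http://example.com/vod/index.m3u8", skip_chunks=900) would drop roughly the first hour of ~4s chunks.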

Fine! Just download all the damn links with curl.

grep '^http:' pls.m3u8 | xargs -n50 curl -s | pv -rb -i5 > video.mp4

Makes it painfully obvious why the Flash player and ffmpeg/youtube-dl get stuck - eventually curl stumbles upon a chunk that downloads at a few KiB/s.

This "stumbling chunk" appears to be a random one, unrelated to local bandwidth limitations, and just re-trying it fixes the issue.

Assemble a list of links and use some more advanced downloader that can do parallel downloads, plus detect and retry super-low speeds.

Naturally, it's aria2, but with all the parallelism it appears to be impossible to tell which output file will be which with just the cli. Mostly due to links having the same url-path, e.g. index-0000000014-O7tq.ts?start_offset=955228&end_offset=2822819 with different offsets (pity that the backend doesn't seem to allow grabbing a range of that *.ts file longer than 4s) - aria2 just does file.ts, file.ts.1, file.ts.2, etc, which are not in playlist order due to all the parallel stuff.

Finally, as acceptance dawns, go and write your own youtube-dl/aria2 wrapper to properly seek to the necessary offset (according to playlist tags) and download/resume files from there, in a parallel yet ordered and controlled fashion.

This is done by using the --on-download-complete hook and assigning ordered "gid" numbers to each chunk URL, which are then passed to the hook along with the resulting path (and the hook renames the file to prefix + sequence number).
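aria2 runs the --on-download-complete command with three arguments: GID, number of files and the file path. A Python stand-in for such a rename hook might look like this; it assumes GIDs are a 6-digit sequence number right-padded with zeros to the 16 hex chars aria2 requires (which is what the GID in the error log further down looks like), and PREFIX plus the naming pattern are hypothetical:

```python
import os
import sys

PREFIX = "my_output_prefix"  # hypothetical output prefix


def chunk_name(gid, prefix=PREFIX):
    # assumed GID scheme: "003509" + "0000000000" -> sequence number 3509
    return "{}.{:06d}.ts".format(prefix, int(gid[:6]))


def main(argv):
    # aria2 passes: GID, number of files, path of the downloaded file
    gid, _num_files, path = argv[1:4]
    os.rename(path, os.path.join(os.path.dirname(path), chunk_name(gid)))


if __name__ == "__main__":
    main(sys.argv)
```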

Ended up with the chunk of the stream I wanted, locally (online playback lag never goes away!), downloaded super-fast and seekable.

The resulting script is twitch_vod_fetch (script source link).

Update 2017-06-01: I've rewritten it using python3/asyncio since then, so stuff related to specific implementation details here is only relevant for the old py2 version (which can be pulled from git history, if necessary).

aria2c magic bits in the script:

```python
import os
import subprocess

# port, key (RPC secret), ua (user-agent string) and hook (path to the
# rename command) are set up earlier in the script
aria2c = subprocess.Popen([
    'aria2c',
    '--stop-with-process={}'.format(os.getpid()),
    '--enable-rpc=true',
    '--rpc-listen-port={}'.format(port),
    '--rpc-secret={}'.format(key),
    '--no-netrc', '--no-proxy',
    '--max-concurrent-downloads=5',
    '--max-connection-per-server=5',
    '--max-file-not-found=5',
    '--max-tries=8',
    '--timeout=15',
    '--connect-timeout=10',
    '--lowest-speed-limit=100K',  # drop connections stuck below 100K/s
    '--user-agent={}'.format(ua),
    '--on-download-complete={}'.format(hook),
], close_fds=True)
```

Didn't bother adding extra options for tweaking these via cli, but it might be a good idea to adjust timeouts and limits for a particular use-case (see also the massive "man aria2c").

Seeking in the playlist is easy, as it's essentially a VoD playlist, and every ~4s chunk is preceded by a tag like #EXTINF:3.240, with its exact length, so the script just skips these as necessary to satisfy the --start-pos / --length parameters.
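That seek boils down to summing #EXTINF durations; a minimal sketch, assuming start/length in seconds and keeping every chunk that overlaps the requested window (select_chunks is a hypothetical name, not the script's function):

```python
def select_chunks(playlist_text, start_pos=0.0, length=None):
    """Return (offset, uri) for chunks overlapping [start_pos, start_pos + length)."""
    end = None if length is None else start_pos + length
    chunks, pos, duration = [], 0.0, None
    for line in playlist_text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            # "#EXTINF:3.240," -> 3.24, anything after the comma is a title
            duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#"):
            if duration is None:
                raise ValueError("chunk without #EXTINF: " + line)
            chunk_end = pos + duration
            if chunk_end > start_pos and (end is None or pos < end):
                chunks.append((pos, line))
            pos, duration = chunk_end, None
    return chunks
```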

Queueing all downloads, each with its own particular gid, is done via JSON-RPC, as it seems to be impossible to:

- Specify both link and gid in the --input-file for aria2c.
- Pass an actual download URL or any sequential number to the --on-download-complete hook (except for gid).

So each gid is just generated as "000001", "000002", etc, and the hook script is a one-liner "mv" command.
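Queueing one chunk over aria2's JSON-RPC interface could look like the sketch below: aria2.addUri takes the "token:<secret>" string (matching --rpc-secret), a list of URIs and an options dict, where "gid" and "out" are regular aria2 options. The GID scheme, output naming and function names are assumptions for illustration:

```python
import json
import urllib.request


def make_gid(seq):
    # aria2 wants exactly 16 hex chars; assumed scheme: "000001" + zeros
    return "{:06d}".format(seq).ljust(16, "0")


def add_uri_call(secret, url, seq, prefix):
    """JSON-RPC request body queueing one chunk with a fixed GID and filename."""
    return {
        "jsonrpc": "2.0",
        "id": make_gid(seq),
        "method": "aria2.addUri",
        "params": [
            "token:" + secret,  # same value as --rpc-secret
            [url],
            {"gid": make_gid(seq), "out": "{}.{:06d}.ts".format(prefix, seq)},
        ],
    }


def queue_chunks(port, secret, urls, prefix):
    """Send one addUri call per chunk to aria2c started with --enable-rpc."""
    endpoint = "http://localhost:{}/jsonrpc".format(port)
    gids = []
    for seq, url in enumerate(urls, 1):
        req = urllib.request.Request(
            endpoint,
            data=json.dumps(add_uri_call(secret, url, seq, prefix)).encode(),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req) as resp:
            gids.append(json.load(resp)["result"])  # addUri returns the GID
    return gids
```

Passing a distinct "out" for every download also keeps aria2 from picking the same file.ts name for different chunks.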

Since everything in the script is kinda lengthy time-wise - e.g. youtube-dl --get-url takes a while, then the actual downloads, then concatenation, ... - it's designed to be Ctrl+C'able at any point.

Every step just generates a state-file like "my_output_prefix.m3u8", and the next one goes on from there.

Restarting the script doesn't repeat these steps, and these files can be freely mangled or removed to force re-doing a step (or to adjust behavior in whatever way).
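The "skip a step if its state-file is already there" logic can be sketched like this; run_step and the callable in the usage line are hypothetical, and writing via a temp file plus os.replace is an extra precaution so that a Ctrl+C mid-write doesn't leave a half-written state-file behind:

```python
import os


def run_step(state_file, produce):
    """Return the cached result from `state_file`, or run `produce()` and save it."""
    if os.path.exists(state_file):
        with open(state_file) as f:
            return f.read()
    data = produce()
    tmp = state_file + ".tmp"
    with open(tmp, "w") as f:
        f.write(data)
    os.replace(tmp, state_file)  # atomic rename on POSIX filesystems
    return data
```

E.g. url = run_step(prefix + ".m3u8.url", get_playlist_url), where get_playlist_url would wrap youtube-dl --get-url.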

An example of a useful restart might be removing the *.m3u8.url and *.m3u8 files if Twitch starts giving 404s due to expired links in there.

That won't force re-downloading any chunks - it will only grab the still-missing ones and assemble the resulting file.

The end result is one my_output_prefix.mp4 file with the specified video chunk (or the full video, if not specified), plus all the intermediate litter (to be able to restart the process from any point).

One issue I've spotted with the initial version:

```
05/19 22:38:28 [ERROR] CUID#77 - Download aborted. URI=...
Exception: [AbstractCommand.cc:398] errorCode=1 URI=...
-> [RequestGroup.cc:714] errorCode=1 Download aborted.
-> [DefaultBtProgressInfoFile.cc:277] errorCode=1 total length mismatch. expected: 1924180, actual: 1789572
05/19 22:38:28 [NOTICE] Download GID#0035090000000000 not complete: ...
```

There seem to be a few of these mismatches (like 5 out of 10k chunks), which don't get retried, as aria2 doesn't seem to consider these to be transient errors (which is probably fair).

Probably a Twitch bug, as it clearly breaks HTTP there, and browsers shouldn't accept such responses either.

Can be fixed by one more hook, I guess - either --on-download-error (to make the script retry the URL with that gid), or the one using websocket and getting a JSON notification there.

In any case, just running the same command again to download the few still-missing chunks and finish the process works around the issue.

Update 2015-05-22: The issue clearly persists for VoDs from different channels, so I fixed it via a simple "retry all failed chunks a few times" loop at the end.
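Such a loop only needs to re-queue whatever is still missing; a minimal sketch, where download is a hypothetical callable returning True on success (e.g. a wrapper around the aria2 RPC calls):

```python
def fetch_with_retries(chunks, download, rounds=3):
    """Try every chunk once, then re-try only the failed ones up to `rounds` times.
    Returns the chunks that are still missing."""
    pending = list(chunks)
    for _ in range(1 + rounds):
        if not pending:
            break
        pending = [c for c in pending if not download(c)]
    return pending
```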

Update 2015-05-23: Apparently it's due to aria2 reusing the same files for different URLs and trying to resume downloads; fixed by passing --out for each download queued over the API.

[script source link]

Apr 11, 2015

Did a kinda-overdue migration of a desktop machine to amd64 a few days ago.

Exherbo has multiarch support there, but I didn't see much point in keeping (and maintaining in various ways) a full-blown set of 32-bit libs just for Skype, which I found that I still need occasionally.

The solution I've used before (documented in a past entry), grabbing the 32-bit Skype binary and the full set of libs it needs from whatever distro, still works and applies here, not-so-surprisingly.

What I ended up doing is:

- Grab the latest Fedora "32-bit workstation" iso (Fedora-Live-Workstation-i686-21-5.iso).
- Install/run it on a virtual machine (plain qemu-kvm).
- Download the "Dynamic" Skype version (distro-independent tar.gz with files) from the Skype site to/on the VM, "tar -xf" it.
- Run ldd skype-4.3.0.37/skype | grep 'not found' to see which dependency-libs are missing.
- Install the missing libs - yum install qtwebkit libXScrnSaver
- scp build_skype_env.bash (from the skype-space repo that I have from the old days of using skype + bitlbee) to the VM and run it on the skype-dir - e.g. ./build_skype_env.bash skype-4.3.0.37. It should finish successfully and produce a "skype_env" dir in the current path.
- Copy that "skype_env" dir with all the libs back to the pure-amd64 system.
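The ldd ... | grep 'not found' step from the list above can also be scripted, e.g. to re-check things on the VM after installing packages; a small Python sketch that just parses ldd's text output (function names are made up):

```python
import subprocess


def missing_libs(ldd_output):
    """Sonames that ldd marks as missing, e.g. "libQtWebKit.so.4 => not found"."""
    missing = []
    for line in ldd_output.splitlines():
        line = line.strip()
        if line.endswith("not found"):
            missing.append(line.split("=>", 1)[0].strip())
    return missing


def check_binary(path):
    """Run ldd on `path` and return the list of unresolved libs."""
    out = subprocess.run(["ldd", path], capture_output=True, text=True).stdout
    return missing_libs(out)
```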

Since the skype binary has "/lib/ld-linux.so.2" as a hardcoded interpreter (as it should), and a pure-amd64 system shouldn't have one (not to mention the missing multiarch prefix), patch it in the binary with patchelf:

patchelf --set-interpreter ./ld-linux.so.2 skype
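To check which interpreter a binary points at (before or after patching), patchelf --print-interpreter skype does the job. The same value can also be read straight from the ELF PT_INTERP program header, e.g. with this minimal Python sketch (handles 32/64-bit and both byte orders, does no other validation; elf_interpreter is a made-up name):

```python
import struct

PT_INTERP = 3  # program header type holding the interpreter path


def elf_interpreter(data):
    """Return the PT_INTERP path from raw ELF bytes, or None if there's none."""
    if data[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    is64 = data[4] == 2  # EI_CLASS: 1 = 32-bit, 2 = 64-bit
    e = "<" if data[5] == 1 else ">"  # EI_DATA: 1 = little-endian
    if is64:
        (phoff,) = struct.unpack_from(e + "Q", data, 0x20)
        phentsize, phnum = struct.unpack_from(e + "HH", data, 0x36)
    else:
        (phoff,) = struct.unpack_from(e + "I", data, 0x1C)
        phentsize, phnum = struct.unpack_from(e + "HH", data, 0x2A)
    for i in range(phnum):
        ph = phoff + i * phentsize
        (p_type,) = struct.unpack_from(e + "I", data, ph)
        if p_type != PT_INTERP:
            continue
        if is64:  # p_offset / p_filesz positions differ between ELF classes
            (offset,) = struct.unpack_from(e + "Q", data, ph + 8)
            (size,) = struct.unpack_from(e + "Q", data, ph + 32)
        else:
            (offset,) = struct.unpack_from(e + "I", data, ph + 4)
            (size,) = struct.unpack_from(e + "I", data, ph + 16)
        return data[offset:offset + size].rstrip(b"\0").decode()
    return None
```

Usage would be something like elf_interpreter(open("skype", "rb").read()), which should return "./ld-linux.so.2" after the patchelf call above.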

Run it (from that env dir with all the libs):

LD_LIBRARY_PATH=. ./skype --resources=.

Should "just work" \o/

One big caveat is that I don't care about any features there except for simple text messaging, which is probably not how most people use Skype, so I didn't test if e.g. audio would work there.

Don't think sound should be a problem though, especially since iirc modern Skype can use pulseaudio (or even uses it by default?).

Given that Skype itself is a huge opaque binary, I do have an AppArmor profile for the thing (uses the "~/.Skype/env/" dir for bin/libs) - home.skype.

Mar 28, 2015

It probably won't be a surprise to anyone that Bluetooth has profiles to carry regular network traffic, and BlueZ has supported these since forever, but the setup process has changed quite a bit between the 2.X - 4.X - 5.X BlueZ versions, so here's my summary of it with 5.29 (latest of the fives atm).

The first step is to get BlueZ itself, and make sure it's 5.X (at least for the purposes of this post), as some enterprisey distros probably still have 4.X if not earlier series, which, again, have different tools and interfaces.

Should be easy enough to check with bluetoothctl --version, and if BlueZ doesn't have "bluetoothctl", it's definitely some earlier one.

Hardware in my case was two Linux machines with similar USB dongles; one of them was an RPi (wanted to set up a wireless link for that), but that shouldn't really matter.

The aforementioned bluetoothctl allows to easily pair both dongles from the cli interactively (and with nice colors!); that's what should be done first, and it serves as authentication, as far as I can tell.

--- machine-1

```
% bluetoothctl
[NEW] Controller 00:02:72:XX:XX:XX malediction [default]
[bluetooth]# power on
Changing power on succeeded
[CHG] Controller 00:02:72:XX:XX:XX Powered: yes
[bluetooth]# discoverable on
Changing discoverable on succeeded
[CHG] Controller 00:02:72:XX:XX:XX Discoverable: yes
[bluetooth]# agent on
...
```

--- machine-2 (snipped)

```
% bluetoothctl
[NEW] Controller 00:02:72:YY:YY:YY rpbox [default]
[bluetooth]# power on
[bluetooth]# scan on
[bluetooth]# agent on
[bluetooth]# pair 00:02:72:XX:XX:XX
[bluetooth]# trust 00:02:72:XX:XX:XX
...
```

Not sure if the "trust" bit is really necessary, and what it does - probably allows setting up agent-less connections or something.

As I needed to connect a small ARM board to what amounts to an access point, "NAP" was the BT-PAN mode of choice for me, but there are also "ad-hoc" modes in BT like PANU and GN, which seem to only need a different topology (who connects to whom) and a different UUID parameter passed to BlueZ over dbus.

For setting up a PAN network with BlueZ 5.X, essentially just two dbus calls are needed (described in "doc/network-api.txt"), and basic cli tools to do these are bundled in the "test/" dir of BlueZ sources.

Given that these aren't very suited for day-to-day use (hence the "test" dir) and are fairly trivial, I rewrote them as a single script, more suited for my purposes - bt-pan.

Update 2015-12-10: There's also the "bneptest" tool, which comes as part of e.g. the "bluez-utils" package on Arch and seems to do the same thing with its "-s" and "-c" options; I just hadn't found it at the time (or maybe it's a more recent addition).

The general idea is that one side (the access point in NAP topology) sets up a bridge and calls "org.bluez.NetworkServer1.Register()", while the other ("client") does "org.bluez.Network1.Connect()".

On the server side (which also applies to GN mode, I think), bluetoothd expects a bridge interface to be set up and configured, to which it adds the individual "bnepX" interfaces it creates for each client.

Such a bridge gets created with "brctl" (from bridge-utils) and should be assigned the server IP and such; then the server itself can be started, passing the name of that bridge to BlueZ over dbus in the "org.bluez.NetworkServer1.Register()" call:

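```bash
#!/bin/bash
br=bnep

# relies on "brctl show" printing an error (to stderr) for a missing bridge:
# non-empty captured stderr means the bridge has to be created and configured
[[ -n "$(brctl show $br 2>&1 1>/dev/null)" ]] && {
  brctl addbr $br
  brctl setfd $br 0
  brctl stp $br off
  ip addr add 10.1.2.3/24 dev $br
  ip link set $br up
}

exec bt-pan --debug server $br
```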

(as mentioned above, the bt-pan script is from the fgtk github repo)

Update 2015-12-10: This is the "net.bnep" script, as referred to in the "net-bnep.service" unit just below.

Update 2015-12-10: These days, systemd can easily create and configure the bridge, forwarding and all the interfaces involved, even running a built-in DHCP server there - see "man systemd.netdev" and "man systemd.network" for how to do that, esp. the examples at the end of both.

Just running this script will then set up a proper "bluetooth access point", and if done from systemd, it should probably be a part of bluetooth.target and get stopped along with bluetoothd (as it doesn't make any sense running without it):

```ini
[Unit]
After=bluetooth.service
PartOf=bluetooth.service

[Service]
ExecStart=/usr/local/sbin/net.bnep

[Install]
WantedBy=bluetooth.target
```

Update 2015-12-10: Put this into e.g. /etc/systemd/system/net-bnep.service and enable it to start with "bluetooth.target" (see "man systemd.special") by running systemctl enable net-bnep.service.

On the client side, it's even simpler - BlueZ will just create a "bnepX" device and won't need any bridge, as it's just a single connection:

```ini
[Unit]
After=bluetooth.service
PartOf=bluetooth.service

[Service]
ExecStart=/usr/local/bin/bt-pan client --wait 00:02:72:XX:XX:XX

[Install]
WantedBy=bluetooth.target
```

Update 2015-12-10: This can be /etc/systemd/system/net-bnep-client.service; don't forget to enable it (creates a symlink in "bluetooth.target.wants"), same as the other unit above (which should be running on the other machine).

Update 2015-12-10: The created "bnepX" device is also trivial to set up with systemd on the client side, see e.g. "Example 2" at the end of "man systemd.network".
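For reference, the two dbus calls behind all this (NetworkServer1.Register() on the access point, Network1.Connect() on the client) look roughly like the following with dbus-python. It's a minimal sketch assuming the default "hci0" adapter and BlueZ 5 object paths, not the bt-pan script itself; BlueZ likely drops the registration or connection once the calling process disconnects from the bus, so a real tool has to keep running afterwards (e.g. in a GLib main loop).

```python
import re

# PAN role UUIDs: 16-bit service class IDs expanded with the Bluetooth base UUID.
# BlueZ also accepts the short names "panu", "nap", "gn" (doc/network-api.txt).
PAN_UUIDS = {
    "panu": "00001115-0000-1000-8000-00805f9b34fb",
    "nap": "00001116-0000-1000-8000-00805f9b34fb",
    "gn": "00001117-0000-1000-8000-00805f9b34fb",
}


def device_path(mac, adapter="hci0"):
    """BlueZ 5 object path of a remote device, e.g. /org/bluez/hci0/dev_00_02_72_..."""
    if not re.fullmatch(r"(?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}", mac):
        raise ValueError("bad MAC address: " + mac)
    return "/org/bluez/{}/dev_{}".format(adapter, mac.upper().replace(":", "_"))


def _iface(path, name):
    import dbus  # dbus-python, only needed for the actual calls
    return dbus.Interface(dbus.SystemBus().get_object("org.bluez", path), name)


def serve(bridge, role="nap", adapter="hci0"):
    # bluetoothd will add a bnepX interface per client to this bridge
    _iface("/org/bluez/" + adapter, "org.bluez.NetworkServer1").Register(role, bridge)


def connect(mac, role="nap", adapter="hci0"):
    # returns the name of the created interface, e.g. "bnep0"
    return _iface(device_path(mac, adapter), "org.bluez.Network1").Connect(role)
```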

On top of the "bnepX" device on the client, some DHCP client should probably be running, which systemd-networkd will probably handle by default on systemd-enabled linuxes, and some dhcpd on the server side (I used udhcpd from busybox for that).

Enabling the units on both machines makes them set up the AP and connect on boot, or as soon as the BT dongles get plugged in/detected.

Fairly trivial setup for a wireless one, especially wrt authentication, and it seems to work reliably so far.

Update 2015-12-10: Tried to clarify a few things above, where noted, for people not very familiar with systemd. See systemd docs for more info on all this.

In case something doesn't work in such a rosy scenario, which kinda happens often, the first place to look is probably the debug info of bluetoothd itself, which can be enabled with systemd via systemctl edit bluetooth: add a [Service] section with an empty ExecStart= line (resetting the original command, as systemd refuses two ExecStart= lines for a regular service) followed by an override like ExecStart=/usr/lib/bluetooth/bluetoothd -d, then do a daemon-reload and restart the unit.

This should already produce a ton of debug output, but I generally find something like bluetoothd[363]: src/device.c:device_bonding_failed() status 14 and bluetoothd[363]: plugins/policy.c:disconnect_cb() reason 3 in there, which is not super-helpful by itself.

The "btmon" tool, which also comes with BlueZ, provides much more useful output with all the stuff decoded from the air, even colorized for convenience (though you won't see it here):

```
...
> ACL Data RX: Handle 11 flags 0x02 dlen 20 [hci0] 17.791382
      L2CAP: Information Response (0x0b) ident 2 len 12
        Type: Fixed channels supported (0x0003)
        Result: Success (0x0000)
        Channels: 0x0000000000000006
          L2CAP Signaling (BR/EDR)
          Connectionless reception
> HCI Event: Number of Completed Packets (0x13) plen 5 [hci0] 17.793368
        Num handles: 1
        Handle: 11
        Count: 2
> ACL Data RX: Handle 11 flags 0x02 dlen 12 [hci0] 17.794006
      L2CAP: Connection Request (0x02) ident 3 len 4
        PSM: 15 (0x000f)
        Source CID: 64
< ACL Data TX: Handle 11 flags 0x00 dlen 16 [hci0] 17.794240
      L2CAP: Connection Response (0x03) ident 3 len 8
        Destination CID: 64
        Source CID: 64
        Result: Connection pending (0x0001)
        Status: Authorization pending (0x0002)
> HCI Event: Number of Completed Packets (0x13) plen 5 [hci0] 17.939360
        Num handles: 1
        Handle: 11
        Count: 1
< ACL Data TX: Handle 11 flags 0x00 dlen 16 [hci0] 19.137875
      L2CAP: Connection Response (0x03) ident 3 len 8
        Destination CID: 64
        Source CID: 64
        Result: Connection refused - security block (0x0003)
        Status: No further information available (0x0000)
> HCI Event: Number of Completed Packets (0x13) plen 5 [hci0] 19.314509
        Num handles: 1
        Handle: 11
        Count: 1
> HCI Event: Disconnect Complete (0x05) plen 4 [hci0] 21.302722
        Status: Success (0x00)
        Handle: 11
        Reason: Remote User Terminated Connection (0x13)
@ Device Disconnected: 00:02:72:XX:XX:XX (0) reason 3
...
```

That at least makes it clear what the decoded error message is, on which protocol layer, and which requests it follows - enough stuff to dig into.

BlueZ also includes a crapton of cool tools for all sorts of diagnostics and manipulation, which - alas - seem to be missing on some distros, but can be built along with the package using the --enable-tools --enable-experimental configure options (all under the "tools" dir).

I had to resort to these tricks briefly when trying to set up PANU/GN-mode connections, but as I didn't really need these, I gave up fairly soon on that "Connection refused - security block" error (from that "policy.c" plugin) - no idea why BlueZ throws it in this context, and google doesn't seem to help much; maybe a polkit thing, idk.

Didn't need these modes though, so whatever.

Mar 25, 2015

Was looking at a weird what-looks-like-a-memleak issue somewhere in the system

on changing desktop background (somewhat surprisingly complex operation btw) and

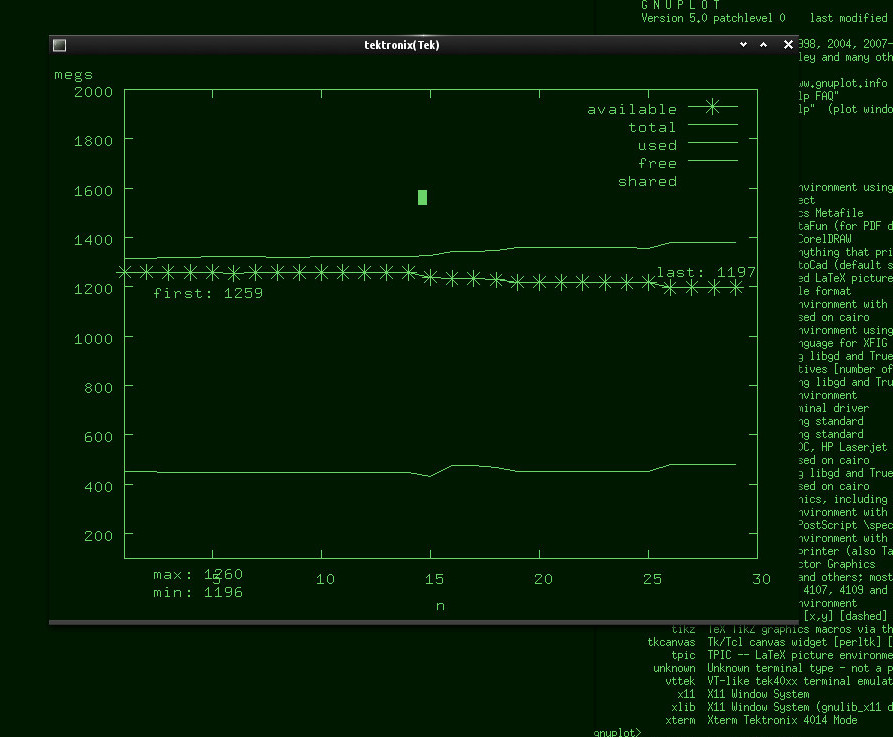

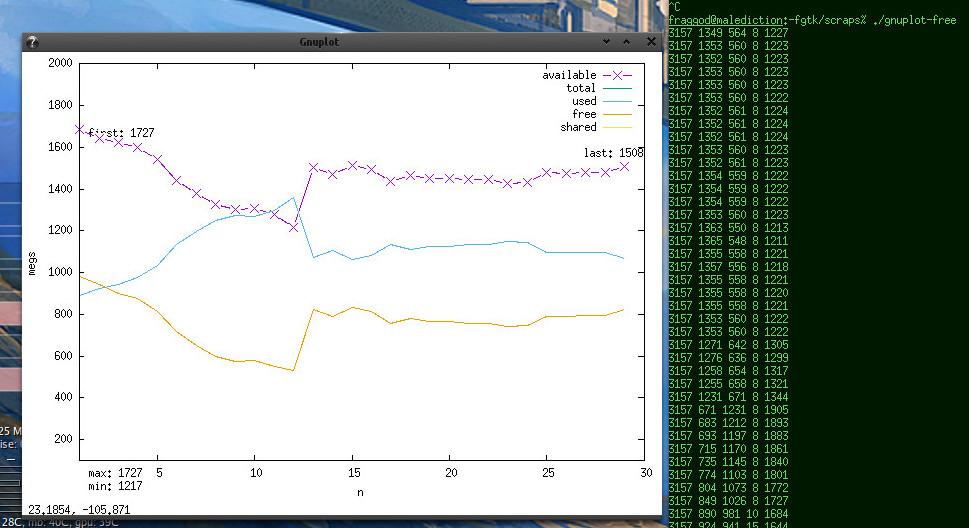

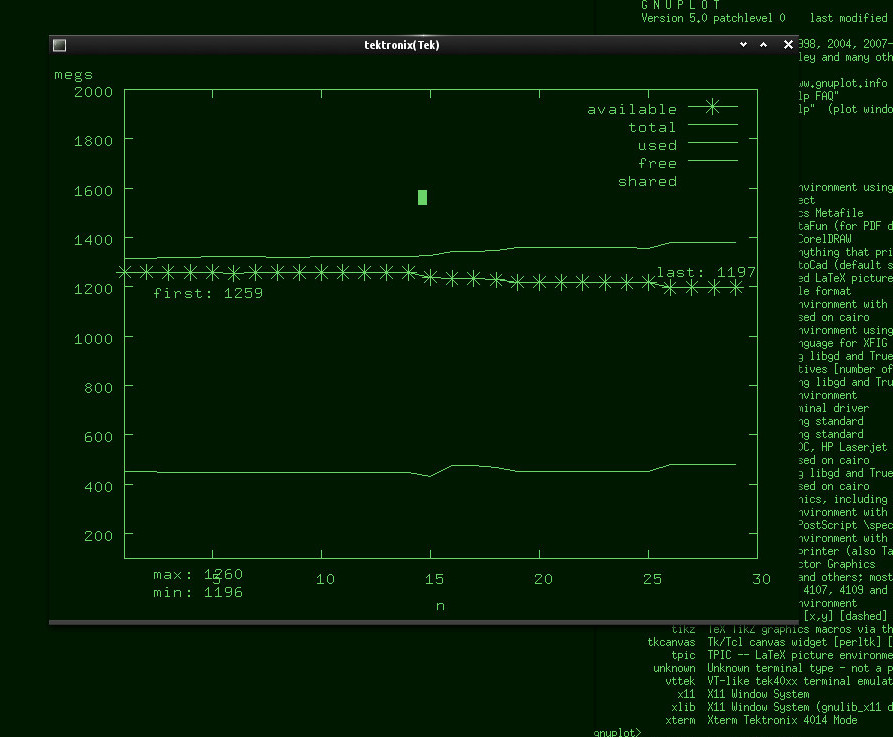

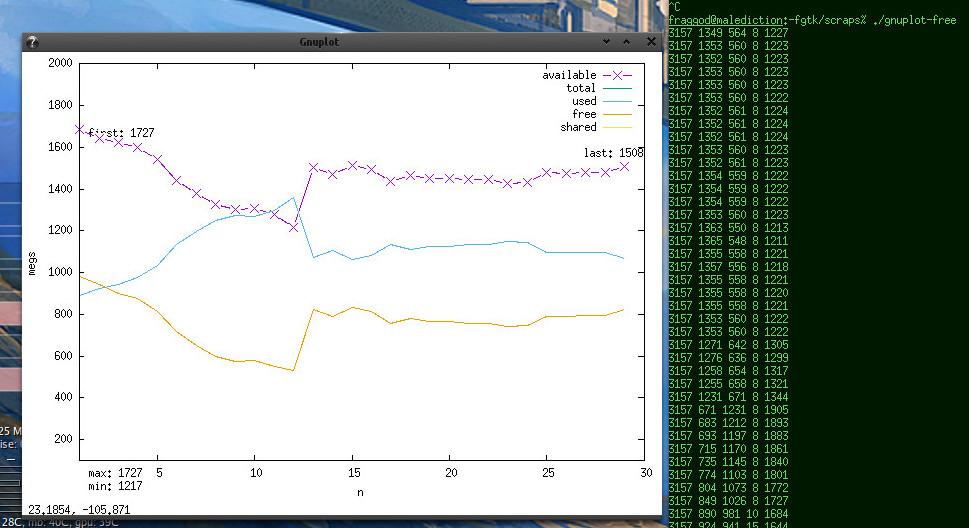

wanted to get a nice graph of "last 30s of free -m output", with some labels

and easy access to data.

A simple enough task for gnuplot, but resulting in a somewhat complicated solution, as neither "free" nor gnuplot are perfect tools for the job.

The first thing is that free -m -s1 doesn't actually give machine-readable data, and I was too lazy to find something better (should've used sysstat and sar!) and thought "let's just parse that with awk":

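```sh
# $interval (seconds between samples) and $points (how many to plot)
# are shell variables set earlier in the script
free -m -s $interval |
awk '
BEGIN {
  exports="total used free shared available"
  agg="free_plot.datz"
  dst="free_plot.dat" }
$1=="total" {
  for (n=1;n<=NF;n++)
    if (index(exports,$n)) headers[n+1]=$n }
$1=="Mem:" {
  first=1
  printf "" >dst
  # "for (n in headers)" order is unspecified in awk, so walk columns in order
  for (n=2;n<=NF;n++) {
    if (!(n in headers)) continue
    if (!first) {
      printf " " >>agg
      printf " " >>dst }
    printf "%d", $n >>agg
    printf "%s", headers[n] >>dst
    first=0 }
  printf "\n" >>agg
  printf "\n" >>dst
  fflush(agg)
  close(dst)
  system("tail -n '$points' " agg " >>" dst) }'
```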

That might be more awk than one ever wants to see, but I imagine there'd not be too much room to wiggle around it, as gnuplot is also somewhat picky about its input (either that, or you can write the same scripts there).
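For comparison, the same "pick a few columns out of free -m" parsing is a few lines in Python; this is a swapped-in alternative to the awk above, not what the script uses, and assumes the procps-ng output layout (a header row without a label column, then a "Mem:" row):

```python
import subprocess


def parse_free(text, fields=("total", "used", "free", "shared", "available")):
    """Map free(1) column names to values from its "Mem:" row."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    header = next(r for r in rows if r[0] == "total")
    mem = next(r for r in rows if r[0] == "Mem:")
    # the header row has no label column, so column i pairs with mem[i + 1]
    values = dict(zip(header, (int(v) for v in mem[1:])))
    return {k: values[k] for k in fields if k in values}


def sample_free():
    out = subprocess.run(["free", "-m"], capture_output=True, text=True, check=True).stdout
    return parse_free(out)
```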

I thought that visualizing a "live" stream of data/measurements would be a kinda typical task for any graphing/visualization solution, but meh, apparently not so much for gnuplot, as I haven't found a better way to do it than the "reread" command.

To be fair, that command seems to do what I want, just not in a very obvious way, seamlessly updating the output in the same single window.

The next surprising quirk was "how to plot only the last 30 points from a big file", as it seems to be all-or-nothing with gnuplot, and googling around, I only found that people do it via the usual "tail" before plotting.

Whatever, added that "tail" hack right to the awk script (as seen above); need column headers there anyway.

Then I also want nice labels - i.e.:

- How much available memory was there at the start of the graph.

- How much of it is at the end.

- Min for that parameter on the graph.

- Same, but max.

stats won't give first/last values apparently, unless I missed those in the PDF (the only available format for up-to-date docs, le sigh), so one solution I came up with is to do a dry-run plot command with set terminal unknown and "grab first value" / "grab last value" functions to "plot".

Which is not really a huge deal, as it's just a preprocessed batch of 30 points, not a huge array of data.
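The dry-run trick just collects first/last/min/max over the plotted window; the same logic in Python (an illustration of what the labels end up showing, not part of the gnuplot script), with collections.deque keeping only the newest N points like the tail hack does:

```python
from collections import deque


def label_stats(values, points=30):
    """first/last/min/max over the last `points` samples."""
    window = deque(values, maxlen=points)  # older items fall off the left end
    if not window:
        raise ValueError("no data")
    return {"first": window[0], "last": window[-1],
            "min": min(window), "max": max(window)}
```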

Ok, so without further ado...

```gnuplot
src='free_plot.dat'
y0=100; y1=2000;
set xrange [1:30]
set yrange [y0:y1]

# --------------------
# dry run: no output, just collect stats and first/last values
set terminal unknown
stats src using 5 name 'y' nooutput
is_NaN(v) = v+0 != v
y_first=0
grab_first_y(y) = y_first = y_first!=0 && !is_NaN(y_first) ? y_first : y
grab_last_y(y) = y_last = y
plot src u (grab_first_y(grab_last_y($5)))
x_first=GPVAL_DATA_X_MIN
x_last=GPVAL_DATA_X_MAX

# --------------------
set label 1 sprintf('first: %d', y_first) at x_first,y_first left offset 5,-1
set label 2 sprintf('last: %d', y_last) at x_last,y_last right offset 0,1
set label 3 sprintf('min: %d', y_min) at 0,y0-(y1-y0)/15 left offset 5,0
set label 4 sprintf('max: %d', y_max) at 0,y0-(y1-y0)/15 left offset 5,1

# --------------------
set terminal x11 nopersist noraise enhanced
set xlabel 'n'
set ylabel 'megs'
set style line 1 lt 1 lw 1 pt 2 pi -1 ps 1.5
set pointintervalbox 2
plot\
  src u 5 w linespoints linestyle 1 t columnheader,\
  src u 1 w lines title columnheader,\
  src u 2 w lines title columnheader,\
  src u 3 w lines title columnheader,\
  src u 4 w lines title columnheader

# --------------------
pause 1
reread
```

Probably the most complex gnuplot script I've composed to date.

Yeah, maybe I should've just googled around for an app that does the same thing, though I like how this lore potentially gives the ability to plot whatever other stuff in a similar fashion.

That, and I love all the weird stuff gnuplot can do.

For instance, xterm apparently has some weird "plotter" interface that hardware terminals had in the past:

And there's also the famous "dumb" terminal for pseudographics too.

Regular x11 output looks nice and clean enough though:

It updates smoothly, with the line crawling left-to-right from the start and then neatly flowing through. There's a lot of styling one can do to make it prettier, but I think I've spent enough time on such a trivial thing.

Didn't really help much with debugging though. Oh well...

Full "free | awk | gnuplot" script is here on github.

Mar 11, 2015

Most Firefox addons add a toolbar button that does something when clicked, or you can add such a button by dragging it via the Customize Firefox interface.

For example, I have a button for the (awesome) Column Reader extension on the right of the FF menu bar (which I keep always visible):

But as far as I can tell, most simple extensions don't bother with a custom hotkey-adding interface, so there seems to be no obvious way to "click" that button by pressing a hotkey.

In the case of Column Reader, this is more important because pressing its button is akin to "inspect element" in Firebug or FF Developer Tools - it allows picking any box of text on the page, so it would be especially nice to call via hotkey + click (as you'd do with Ctrl+Shift+C + click).

As I did struggle with binding hotkeys for specific extensions before (in their own quirky ways), this time I found one sure-fire way to do exactly what you'd get on click - by simulating the click event itself (upon pressing the hotkey).

The whole process can be split into several steps:

Install Keyconfig or a similar extension that allows binding/running arbitrary JavaScript code on hotkeys.

One important note here is that such code should run in the JS context of the extension itself, not just some page, as JS from a page obviously won't be allowed to send events to the Firefox UI.

Keyconfig is very simple and seems to work perfectly for this purpose - just "Add a new key" there and it'll pop up a window where any privileged JS can be typed/pasted in.

Install DOM Inspector extension (from AMO).

This one will be useful to get the button element's "id" (similar to the DOM elements' "id" attribute, but for XUL).

It should be available (probably after an FF restart) under "Tools -> Web Developer -> DOM Inspector".

Run DOM Inspector and find the element-to-be-hotkeyed there.

Under "File", select "Inspect Chrome Document" and the first document there - this should update the "URL bar" in the inspector window to "chrome://browser/content/browser.xul".

Now click the "Find a node by clicking" button on the left (or "Edit -> Select Element by Click"), and then just click on the desired UI button/element - it doesn't really have to be an extension button.

It might be necessary to set "View -> Document Viewer -> DOM Nodes" to see XUL nodes on the left, if it's not selected already.

There it'd be easy to see all the neighbor elements and this button element.

Any element in that DOM Inspector frame can be right-clicked, and there's a "Blink Element" option to show exactly where it is in the UI.

The "id" of any box where the click should land will do (highlighted with red in my case on the image above).

Write/paste JavaScript that would "click" on the element into Keyconfig (or whatever other hotkey addon).

I did try HTML-specific ways to trigger events, but none seem to have worked with XUL elements, so the JS below uses the nsIDOMWindowUtils XPCOM interface, which seems to be designed specifically with such "simulation" stuff in mind (likely for things like Selenium WebDriver).

JS for my case:

```javascript
// "columnsreader" is the XUL element id found via DOM Inspector
var el_box = document.getElementById('columnsreader').boxObject;
var domWindowUtils =
  window.QueryInterface(Components.interfaces.nsIInterfaceRequestor)
    .getInterface(Components.interfaces.nsIDOMWindowUtils);
// args: event type, x, y, button (0 = left), click count, modifiers (0 = none)
domWindowUtils.sendMouseEvent('mousedown', el_box.x, el_box.y, 0, 1, 0);
domWindowUtils.sendMouseEvent('mouseup', el_box.x, el_box.y, 0, 1, 0);
```

"columnsreader" there is the "id" of the element-to-be-clicked, and should be replaced with whatever id was found in the previous step.

There doesn't seem to be a "click" event, so "mousedown" + "mouseup" it is.

The "0, 1, 0" stuff is: left button, single click (not sure what it does here), no modifiers.

If anything goes wrong in that JS, the usual "Tools -> Web Developer -> Browser Console" (Ctrl+Shift+J) window should show errors.

It should be possible to adjust the click position by adding/subtracting pixels from el_box.x / el_box.y, but the top-left corner seems to work fine for buttons.

Save time and frustration by not dragging the stupid mouse anymore, using a trusty hotkey instead \o/

Wish there was some standard "click on whatever to bind it to the specified hotkey" UI option in FF (like there is in e.g. Claws Mail), but I haven't seen one so far (FF 36).

Maybe someone should write an addon for that!

Jan 30, 2015

Important: This way was pretty much made obsolete by Device Tree overlays, which have returned (as expected) in 3.19 kernels - it'd probably be easier to use these in most cases.

The BeagleBone Black board has three i2c buses, two of which are available by default on the Linux kernel with patches from RCN (Robert Nelson).

There are plenty of links on how to enable i2c1 on old-ish (by now) 3.8-series kernels, which had "Device Tree overlays" patches, but these do not apply to 3.9-3.18, though it looks like they might make a comeback in the future (LWN).

Probably it's just a bit too specific a task to expect an easy answer for.

Overlays in pre-3.9 kernels allowed writing a "patch" for a Device Tree, compiling it and then loading it at runtime, which is not possible without them, but the same result is perfectly possible and kinda easy to get by compiling a dtb and loading it on boot.

It'd be great if there was a prebuilt dtb file in Linux with just i2c1 enabled (not just for some cape, bundled with other settings), but unfortunately, as of patched 3.18.4, there doesn't seem to be one, hence the following patching process.

For that, getting the kernel sources (ideally whichever were used to build the kernel) is necessary.

In Arch Linux ARM (which I tend to use with such boards), this can be done by grabbing the PKGBUILD dir, editing the "PKGBUILD" file, uncommenting the "return 1" under the "stop here - this is useful to configure the kernel" comment and running makepkg -sf (from the "base-devel" package set on arch) there.

That will just unpack the kernel sources, put the appropriate .config file there and run make prepare on them.

With kernel sources unpacked, the file that you'd want to patch is "arch/arm/boot/dts/am335x-boneblack.dts" (or whichever other dtb you're loading via uboot):

```diff
--- am335x-boneblack.dts.bak	2015-01-29 18:20:29.547909768 +0500
+++ am335x-boneblack.dts	2015-01-30 20:56:43.129213998 +0500
@@ -23,6 +23,14 @@
 };
 
 &ocp {
+	/* i2c */
+	P9_17_pinmux {
+		status = "disabled";
+	};
+	P9_18_pinmux {
+		status = "disabled";
+	};
+
 	/* clkout2 */
 	P9_41_pinmux {
 		status = "disabled";
@@ -33,6 +41,13 @@
 	};
 };
 
+&i2c1 {
+	status = "okay";
+	pinctrl-names = "default";
+	pinctrl-0 = <&i2c1_pins>;
+	clock-frequency = <100000>;
+};
+
 &mmc1 {
 	vmmc-supply = <&vmmcsd_fixed>;
 };
```

Then "make dtbs" can be used to build only the dtb files, and not the whole kernel (which would take a while on a BBB).

The resulting *.dtb (e.g. "am335x-boneblack.dtb" for "am335x-boneblack.dts", in the same dir) can be put into "dtbs" on the boot partition and loaded from uEnv.txt (or whatever uboot configs are included from there).

Reboot, and i2cdetect -l should show i2c-1:

```
# i2cdetect -l
i2c-0 i2c OMAP I2C adapter I2C adapter
i2c-1 i2c OMAP I2C adapter I2C adapter
i2c-2 i2c OMAP I2C adapter I2C adapter
```
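To check for that from a script (e.g. before starting something that needs the bus), the i2cdetect -l output is easy to parse; a minimal Python sketch, assuming the "i2c-N" name is the first whitespace-separated field, as in the output above (function names are made up):

```python
import subprocess


def i2c_buses(text):
    """Bus numbers from `i2cdetect -l` output lines like "i2c-1  i2c  OMAP I2C adapter ..."."""
    buses = []
    for line in text.splitlines():
        fields = line.split()
        if fields and fields[0].startswith("i2c-") and fields[0][4:].isdigit():
            buses.append(int(fields[0][4:]))
    return buses


def has_bus(n):
    out = subprocess.run(["i2cdetect", "-l"], capture_output=True, text=True, check=True).stdout
    return n in i2c_buses(out)
```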

As I've already mentioned, this might not be the optimal way to enable the thing in kernels 3.19 and beyond, if the "device tree overlays" patches land there - it should be possible to just load some patch on-the-fly, without all the extra hassle described above.

Update 2015-03-19: Device Tree overlays did land in 3.19, but if migrating to them is too much hassle for now, here's a patch for 3.19.1-bone4 am335x-bone-common.dtsi to enable i2c1 and i2c2 on boot (applies in the same way: make dtbs, copy am335x-boneblack.dtb to /boot/dtbs).

Jan 28, 2015

There seems to be a surprising lack of python code on the net for this particular device, except for this nice pi-ras blog post, in Japanese.

So, to give google some more food and add a bit of commentary in English to that post - here goes.

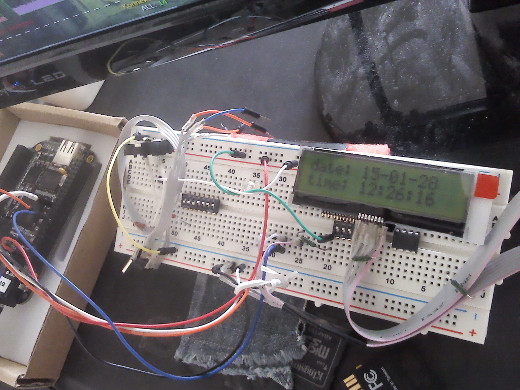

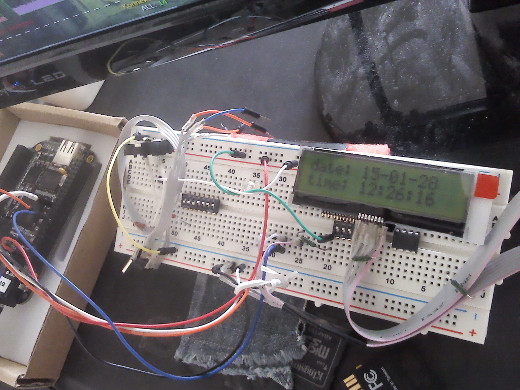

I'm using a Midas MCCOG21605C6W-SPTLYI 2x16 chars LCD panel, connected to 5V VDD and the 3.3V BeagleBone Black I2C bus:

Code for the above LCD clock "app" (python 2.7):

```python
import smbus, time

class ST7032I(object):

    def __init__(self, addr, i2c_chan, **init_kws):
        self.addr, self.bus = addr, smbus.SMBus(i2c_chan)
        self.init(**init_kws)

    def _write(self, data, cmd=0, delay=None):
        # cmd is the control byte: 0x00 - instruction(s), 0x40 - display data
        self.bus.write_i2c_block_data(self.addr, cmd, list(data))
        if delay: time.sleep(delay)

    def init(self, contrast=0x10, icon=False, booster=False):
        assert contrast < 0x40 # 6 bits only, probably not used on most lcds
        pic_high = 0b0111 << 4 | (contrast & 0x0f) # c3 c2 c1 c0
        pic_low = ( 0b0101 << 4 |
            icon << 3 | booster << 2 | ((contrast >> 4) & 0x03) ) # c5 c4
        self._write([0x38, 0x39, 0x14, pic_high, pic_low, 0x6c], delay=0.01)
        self._write([0x0c, 0x01, 0x06], delay=0.01)

    def move(self, row=0, col=0):
        assert 0 <= row <= 1 and 0 <= col <= 15, [row, col]
        self._write([0b1000 << 4 | (0x40 * row + col)]) # set DDRAM address

    def addstr(self, chars, pos=None):
        if pos is not None:
            row, col = (pos, 0) if isinstance(pos, int) else pos
            self.move(row, col)
        self._write(map(ord, chars), cmd=0x40)

    def clear(self):
        # clear-display takes about a millisecond to execute, so wait a bit
        self._write([0x01], delay=0.002)

if __name__ == '__main__':
    lcd = ST7032I(0x3e, 2)
    while True:
        ts_tuple = time.localtime()
        lcd.clear()
        lcd.addstr(time.strftime('date: %y-%m-%d', ts_tuple), 0)
        lcd.addstr(time.strftime('time: %H:%M:%S', ts_tuple), 1)
        time.sleep(1)
```

Note the constants in the "init" function - these are all from the "INITIALIZE(5V)" sequence on page 8 of the Midas LCD datasheet, setting up things like the voltage follower circuit, OSC frequency, contrast (not used on my panel), modes and such.

The actual reference on what all these instructions do and how they're decoded can be found on page 20 there.
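As a worked example of how init() and move() above pack those fields into instruction bytes, here is the same arithmetic pulled out into pure functions with no I2C access. The helper names are hypothetical. The point is just to make the resulting bytes easy to check against the instruction table in the datasheet.

```python
def contrast_bytes(contrast=0x10, icon=False, booster=False):
    """Return (contrast_set, power_icon_contrast) instruction bytes.

    Same bit layout as ST7032I.init() above:
    0111 c3 c2 c1 c0  and  0101 Ion Bon c5 c4 (extended instruction set).
    """
    assert 0 <= contrast < 0x40, contrast  # 6-bit contrast value
    pic_high = 0b0111 << 4 | (contrast & 0x0f)
    pic_low = (0b0101 << 4 | int(icon) << 3 | int(booster) << 2
               | ((contrast >> 4) & 0x03))
    return pic_high, pic_low


def set_ddram_addr(row, col):
    """Return the "Set DDRAM address" byte, as sent by ST7032I.move() above."""
    assert 0 <= row <= 1 and 0 <= col <= 15, (row, col)
    return 0b1000 << 4 | (0x40 * row + col)


if __name__ == "__main__":
    print(["0x%02x" % b for b in contrast_bytes()])  # ['0x70', '0x51']
    print("0x%02x" % set_ddram_addr(1, 0))           # 0xc0
```

So the default contrast=0x10 ends up entirely in the c5/c4 bits of the second byte, which is easy to miss when tweaking it.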

Even with the same exact display, but connected to 3.3V, these numbers should probably be a bit different - check the datasheet (e.g. page 7 there).

Also note the "addr" and "i2c_chan" values (0x3E and 2) - these should be taken from your particular board and wiring.

"i2c_chan" is the number of the device (X) in /dev/i2c-X, of which there usually seem to be more than one on ARM boards like RPi or BBB.

For instance, BeagleBone Black has three I2C buses, two of which are available on the expansion headers (with proper dtbs loaded).

And the address is easy to get from the datasheet (the LCD I have uses only one static slave address), or to detect via i2cdetect -r -y <i2c_chan>, e.g.:

```
# i2cdetect -r -y 2
     0  1  2  3  4  5  6  7  8  9  a  b  c  d  e  f
00:          -- -- -- -- -- -- -- -- -- -- -- -- --
10: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
20: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
30: -- -- -- -- -- -- -- -- -- -- -- -- -- -- 3e --
40: -- -- -- -- -- -- -- -- -- -- -- -- -- -- -- --
50: -- -- -- -- UU UU UU UU -- -- -- -- -- -- -- --
60: -- -- -- -- -- -- -- -- 68 -- -- -- -- -- -- --
70: -- -- -- -- -- -- -- --
```

Here I have a DS1307 RTC on 0x68 and an LCD panel on the 0x3E address (again, also specified in the datasheet).
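When picking addresses out of such a table in a script, it can be parsed directly. Below is a minimal sketch (parse_i2cdetect is a hypothetical name). It assumes unmodified i2cdetect output, where every cell is three characters wide, "--" means nothing answered, blank cells were not probed at all, and "UU" means probing was skipped because a kernel driver already uses that address.

```python
import re


def parse_i2cdetect(output):
    """Parse "i2cdetect -y" table output.

    Returns (responding, in_use): 7-bit addresses that answered the probe,
    and ones marked "UU", both in ascending order.
    """
    responding, in_use = [], []
    for line in output.splitlines():
        m = re.match(r"([0-7]0):(.*)$", line)
        if not m:
            continue  # header line with column numbers, or anything else
        row_base = int(m.group(1), 16)
        cells = m.group(2)
        for col in range(16):
            # every cell is 3 chars wide (" xx"), all-blank ones were not probed
            cell = cells[col * 3:col * 3 + 3].strip()
            if cell in ("", "--"):
                continue  # not probed, or nothing answered
            (in_use if cell == "UU" else responding).append(row_base + col)
    return responding, in_use
```

For the table above, this should give [0x3e, 0x68] as responding addresses and 0x54-0x57 as "UU" ones.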

On Arch or source-based distros these all come with the "i2c-tools" package, but on e.g. Debian, the python module seems to be split into "python-smbus".

Plugging the bus number and the address for your particular hardware into the script above, and maybe adjusting the values there for your LCD panel modes, should make the clock show up and tick every second.

In general, upon seeing a tutorial on some random blog (like this one), please take it with a grain of salt, because it's highly likely that it was written by a fairly incompetent person (like me), since engineers who deal with these things every day don't see the above steps as any kind of accomplishment - it's a boring no-brainer routine for them, and they aren't likely to even think about it, much less write tutorials on it (all trivial and obvious, after all).

Nevertheless, I hope this post might be useful to someone as a pointer on where to look to get such a device started, if nothing else.

Jan 12, 2015

Needed to implement a thing that would react to a USB flash drive being inserted (into an autonomous BBB device) - to get the device name, mount the fs there, rsync stuff to it, unmount.

To avoid any concurrency issues (i.e. multiple things screwing with the device in parallel), and to get proper error logging and other startup things, the most obvious thing is to wrap the script in a systemd oneshot service.

The only non-immediately-obvious problem for me here was how to pass the device to such a service properly.

With a bit of digging through google results (and even finding one post here somehow among them), eventually found the "Pairing udev's SYSTEMD_WANTS and systemd's templated units" resolved thread, where what seems to be the current best approach is specified.

Adapting it for my case and pairing it with generic patterns for device-instantiated services resulted in the following configuration.

99-sync-sensor-logs.rules:

```
SUBSYSTEM=="block", ACTION=="add", ENV{ID_TYPE}=="disk", ENV{DEVTYPE}=="partition",\
PROGRAM="/usr/bin/systemd-escape -p --template=sync-sensor-logs@.service $env{DEVNAME}",\
ENV{SYSTEMD_WANTS}+="%c"
```

sync-sensor-logs@.service:

```
[Unit]
BindTo=%i.device
After=%i.device

[Service]
Type=oneshot
TimeoutStartSec=300
ExecStart=/usr/local/sbin/sync-sensor-logs /%I
```

This makes things stop if the script runs for too long or if the device vanishes (due to BindTo=), and properly delays script start until the device is ready.

The "sync-sensor-logs" script at the end gets passed the original unescaped device name as an argument.

It doesn't need things like systemctl invocation or a manual re-implementation of systemd escaping either, though running "systemd-escape" still seems to be a necessary evil there.
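To show what that escaping actually does with the device path (and why ExecStart= uses "/%I"), here is a rough Python re-implementation of the "-p" path rules as described in systemd.unit(5). It is for illustration only, and all function names are hypothetical. The real systemd-escape may handle more edge cases, so the udev rule should keep calling the actual tool.

```python
import string

# Characters kept as-is when escaping (besides "/" which becomes "-")
_KEEP = set(string.ascii_letters + string.digits + ":_.")


def escape_path(path):
    """Rough sketch of "systemd-escape -p PATH".

    Drops duplicate/leading/trailing slashes, turns "/" into "-" and
    C-escapes everything outside [a-zA-Z0-9:_.] (plus a leading ".").
    """
    parts = [p for p in path.split("/") if p]
    if not parts:
        return "-"  # the root directory
    out = []
    for n, ch in enumerate("/".join(parts)):
        if ch == "/":
            out.append("-")
        elif ch in _KEEP and not (n == 0 and ch == "."):
            out.append(ch)
        else:
            out.extend("\\x%02x" % b for b in ch.encode("utf-8"))
    return "".join(out)


def unescape_instance(name):
    """Undo escape_path(), roughly what %I expands to in a unit file.

    Like %I, no leading "/" is added back - hence "/%I" in ExecStart=.
    """
    raw, i = bytearray(), 0
    while i < len(name):
        if name.startswith("\\x", i):
            raw.append(int(name[i + 2:i + 4], 16))
            i += 4
        else:
            raw.extend(b"/" if name[i] == "-" else name[i].encode("utf-8"))
            i += 1
    return raw.decode("utf-8")


def template_instance(template, path):
    """E.g. ("sync-sensor-logs@.service", "/dev/sdb1")
    -> "sync-sensor-logs@dev-sdb1.service", like --template= does."""
    prefix, suffix = template.split("@", 1)
    return "%s@%s%s" % (prefix, escape_path(path), suffix)
```

So udev asks systemd to start "sync-sensor-logs@dev-sdb1.service", %i in the unit is "dev-sdb1" (matching the "dev-sdb1.device" unit for BindTo=/After=), and "/%I" turns back into "/dev/sdb1" for the script.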

The systemd-less alternative seems to be a script that does per-device flock, timeout logic and a lot more checks for whether the device is ready and/or still there, so this approach looks way saner and clearer, with the caveat that one should probably be familiar with all these systemd features.
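For comparison, here is a minimal sketch of just the first two parts of that systemd-less approach: a per-device flock plus a hard timeout. It uses a hypothetical run_locked helper, with /run/lock as an assumed lock dir. It does not do any of the "is the device ready / still there" checks, which is where most of the extra work would go.

```python
import fcntl
import os
import subprocess


def run_locked(device, cmd, timeout=300, lock_dir="/run/lock"):
    """Run cmd + [device] unless another run for the same device holds the lock.

    Returns the command's exit code, -1 if it was killed on timeout,
    or None if another instance is already handling this device.
    """
    lock_path = os.path.join(
        lock_dir, "sync-%s.lock" % device.strip("/").replace("/", "_"))
    with open(lock_path, "w") as lock:
        try:
            # flock locks are per open file, so this also conflicts within one process
            fcntl.flock(lock, fcntl.LOCK_EX | fcntl.LOCK_NB)
        except BlockingIOError:
            return None
        try:
            return subprocess.run(cmd + [device], timeout=timeout).returncode
        except subprocess.TimeoutExpired:
            return -1  # subprocess.run() kills the child before raising this
    # lock is released when the file gets closed on leaving "with"
```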

Dec 23, 2014

I've been using emacs for a while now, and am always on the lookout for features that'd be nice to have there.

Accumulated quite a number of these in my emacs-setup repo as a result.

Most of these features start from ideas in other editors or tools (e.g. music players, irc clients, etc - emacs seems to be best for a lot of stuff), or a simplistic proof-of-concept implementation of something similar.

I usually add these to my emacs due to the sheer fun of coding in lisps, compared to pretty much any other language family I know of.

Recently added two of these, and wanted to share/log the ideas here, in case

someone else might find these useful.

"Remote" based on emacsclient tool

As I use laptop and desktop machines for coding and procrastination interchangeably, I can have e.g. an irc client (ERC - seriously the best irc client I've seen by far) running on either of these.

But even with a ZNC bouncer setup (and easy log-reading tools for it), it's still a lot of hassle to connect to the same irc from another machine and catch up on chan history there.

Or sometimes there are unsaved buffer changes, or whatever other stuff happening, or just stuff you want to do in a remote emacs instance, which would be easy if you could just go turn on the monitor, shrug off screen blanking, sometimes disable the screen lock, then switch to emacs and press a few hotkeys there... yeah, it doesn't look that easy even when I'm at home and close to the thing.

emacs has the "emacsclient" tool, which allows you to eval arbitrary elisp code on a remote emacs instance, but it's way too cumbersome to use for such simple tasks without some convenient wrappers.

And these remote invocation wrappers are what this idea is all about.

Consider the terminal dump below, from an ssh session or over tcp to a remote emacs server (and I'd strongly suggest having (server-start) right in ~/.emacs, though maybe not on a tcp socket, for security reasons):

```
% ece b
* 2014-12-23.a_few_recent_emacs_features_-_remote_and_file_colors.rst
fg_remote.el
rpc.py
* fg_erc.el
utils.py
#twitter_bitlbee
#blazer
#exherbo
...

% ece b remote
*ERROR*: Failed to uniquely match buffer by `remote', matches:
2014-12-23.a_few_recent_emacs_features_-_remote_and_file_colors.rst,
fg_remote.el

--- whoops... lemme try again

% ece b fg_rem
...(contents of the buffer, matched by unique name part)...

% ece erc
004 #twitter_bitlbee
004 #blazer
002 #bordercamp

--- Showing last (unchecked) irc activity, same as erc-track-mode does (but nicer)

% ece erc twitter | t
[13:36:45]<fijall> hint - you can use gc.garbage in Python to store any sort of ...
[14:57:59]<veorq> Going shopping downtown. Pray for me.
[15:48:59]<mitsuhiko> I like how if you google for "London Bridge" you get ...
[17:15:15]<simonw> TIL the Devonshire word "frawsy" (or is it "frawzy"?) - a ...
[17:17:04] *** -------------------- ***
[17:24:01]<veorq> RT @collinrm: Any opinions on VeraCrypt?
[17:33:31]<veorq> insightful comment by @jmgosney about the Ars Technica hack ...
[17:35:36]<veorq> .@jmgosney as you must know "iterating" a hash is in theory ...
[17:51:50]<veorq> woops #31c3 via @joernchen ...
~erc/#twitter_bitlbee%

--- "t" above is an alias for "tail" that I use in all shells, lines snipped jic

% ece h
Available commands:
buffer (aliases: b, buff)
buffer-names
erc
erc-mark
get-socket-type
help (aliases: h, rtfm, wat)
log
switch-sockets

% ece h erc-mark
(fg-remote-erc-mark PATTERN)
Put /mark to a specified ERC chan and reset its activity track.

--- Whole "help" thing is auto-generated, see "fg-remote-help" in fg_remote.el
```
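The "b remote" vs "b fg_rem" exchange in the dump above boils down to matching by a unique name part. Below is a hypothetical Python stand-in for that logic, not a port of the actual `fg-get-useful-buffer`, whose exact rules may differ. The "exact name wins" shortcut is an assumption added so that a buffer whose full name is also part of another one can still be picked.

```python
def match_unique(pattern, names):
    """Return the only name containing pattern, or raise LookupError.

    An exact name match is returned right away, even if other names
    also contain it as a substring.
    """
    if pattern in names:
        return pattern
    matches = [n for n in names if pattern in n]
    if len(matches) != 1:
        raise LookupError(
            "Failed to uniquely match buffer by `%s', matches: %s"
            % (pattern, ", ".join(matches)))
    return matches[0]
```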

And so on - anything is trivial to implement as an elisp few-liner.

For instance, a missing "buffer-save" command would be:

```elisp
(defun fg-remote-buffer-save (pattern)
  "Saves specified buffer, matched via `fg-get-useful-buffer'."
  (with-current-buffer (fg-get-useful-buffer pattern) (save-buffer)))
(defalias 'fg-remote-bs 'fg-remote-buffer-save)
```

Both the "buffer-save" command and its "bs" alias will instantly appear in "help" and be available for calling via emacsclient.

Hell, you can "implement" this stuff from a terminal and eval it on a remote emacs (i.e. just pass the code above to emacsclient -e), extending its API in an ad-hoc fashion right there.

The "ece" script above is a thin wrapper around "emacsclient", which saves typing that long binary name and the "-e" flag with a set of parentheses every time; it can be found in the root of the emacs-setup repo.
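The actual ece script lives in that repo and may well work differently. Below is a hypothetical Python sketch of such a wrapper. It assumes that "ece CMD ARG..." maps onto (fg-remote-CMD "ARG" ...), which the fg-remote- names in the dump suggest but which is not confirmed here. Arguments are quoted as elisp strings, so spaces and quotes in them don't break the expression.

```python
import subprocess


def elisp_string(s):
    """Quote s as an elisp string literal (escaping backslashes and double quotes)."""
    return '"%s"' % s.replace("\\", "\\\\").replace('"', '\\"')


def build_expr(cmd, args):
    """E.g. ("b", ["fg_rem"]) -> '(fg-remote-b "fg_rem")' (assumed naming)."""
    return "(%s)" % " ".join(["fg-remote-" + cmd] + [elisp_string(a) for a in args])


def ece(cmd, *args):
    """Eval the expression in a running emacs server via "emacsclient -e".

    emacsclient prints the value of the expression, so a string result
    comes back in its quoted elisp form.
    """
    return subprocess.check_output(
        ["emacsclient", "-e", build_expr(cmd, args)], universal_newlines=True)
```

With an emacs server running, ece("b", "fg_rem") would then eval (fg-remote-b "fg_rem") there.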

So it's easier than ever to procrastinate in bed the whole morning with a laptop.

Yup, that's the real point of the whole thing.

Unique per-file buffer colors

Stumbled upon this idea in a deliberate-software blog entry recently.

There, the author suggests static per-code-project colors, but I thought - why not have slight (and automatic) per-file-path color alterations for the buffer background?

Doing that makes file buffers (or any non-file ones too) recognizable, i.e. you don't need to look at the path or code inside anymore to instantly know that it's that exact file you want (or don't want) to edit - the eye/brain picks it up automatically.

emacs' color.el already has all the cool stuff for colors - tools for conversion to/from the L*a*b* colorspace (humane "perceptual" numbers), CIEDE2000 color diffs (JUST LOOK AT THIS THING), and so on - it's easy to use these for the task.

The result is the "fg-color-tweak" function that I now use for slight changes to buffer bg, based on an md5 hash of the file path, and for reliably-contrasting irc nicknames (also based on the hash; I used a way worse and unreliable "simple" thing for this in the past):

```
(fg-color-tweak COLOR &optional SEED MIN-SHIFT MAX-SHIFT (CLAMP-RGB-AFTER 20)
  (LAB-RANGES ...))

Adjust COLOR based on (md5 of-) SEED and MIN-SHIFT / MAX-SHIFT lists.

COLOR can be provided as a three-value (0-1 float)
R G B list, or a string suitable for `color-name-to-rgb'.

MIN-SHIFT / MAX-SHIFT can be:
 * three-value list (numbers) of min/max offset on L*a*b* in either direction
 * one number - min/max cie-de2000 distance
 * four-value list of offsets and distance, combining both options above
 * nil for no-limit

SEED can be number, string or nil.
Empty string or nil passed as SEED will return the original color.

CLAMP-RGB-AFTER defines how many attempts to make in picking
L*a*b* color with random offset that translates to non-imaginary sRGB color.
When that number is reached, last color will be `color-clamp'ed to fit into sRGB.

Returns color plus/minus offset as a hex string.
Resulting color offset should be uniformly distributed between min/max shift limits.
```

It's a bit complicated under the hood, parsing all the options and limits, making sure the resulting color is not an "imaginary" L*a*b* one and converts to RGB without clamping (if possible), while maintaining the requested min/max distances, doing several hashing rounds if necessary, with fallbacks... etc.

The actual end result is simple though - deterministic and instantly recognizable color-coding for anything you can think of: just pass the attribute to base coding on and the desired min/max contrast levels, get back the hex color to use, apply it.

Should you use something like that, I highly suggest taking a moment to look at the L*a*b* and HSL color spaces, to understand how colors can be easily tweaked along certain parameters.

For example, passing '(0 a b) as min/max-shift to the function above will produce color variants with the same "lightness", which is super-useful to control, making sure you won't ever get out-of-whack colors for e.g. light/dark backgrounds.

To summarize...

Coding lispy stuff is super-fun, just for the sake of it ;)

Actually, speaking of fun, I can't recommend installing magnars' s.el and dash.el highly enough right now, unless you have these already.

They make coding elisp stuff so much more fun and trivial, to a degree that'd be hard to describe, so please at least try coding something with these.

All the stuff mentioned above is in (also linked here already) emacs-setup repo.

Cheers!

Oct 05, 2014

Experimenting with all kinds of ARM boards lately (nyms above stand for Raspberry Pi, BeagleBone Black and Cubieboard), I can't help but feel a bit sorry for the microsd cards in each one of them.

These are even worse for non-bulk writes than SSDs, having fewer erase cycles plus larger blocks, and yet when used for all fs needs of the board, even typing "ls" into the shell will usually emit a write (unless the shell doesn't keep history, which sucks).

A great explanation of how they work can be found on LWN (as usual).

An easy and relatively hassle-free way to fix the issue is to use aufs, but as doing it for the whole rootfs requires initramfs (which is not needed here otherwise), it's a lot easier to only use it for commonly-writable parts - i.e. /var and /home in most cases.

Home for the "root" user is usually /root, so to make it aufs material as well, it's better to move that to /home (which probably shouldn't be a separate fs on these devices), leaving /root as a symlink to that.

It seems to be impossible to do while logged in as root (mv will fail with EBUSY), but it's trivial from any other machine:

```
# mount /dev/sdb2 /mnt # mount microsd
# cd /mnt
# mv root home/
# ln -s home/root
# cd
# umount /mnt
```

As aufs2 is already built into the Arch Linux ARM kernel, the only thing that's left is to add an early-boot systemd unit for mounting it, e.g. /etc/systemd/system/aufs.service:

```
[Unit]
DefaultDependencies=false

[Install]
WantedBy=local-fs-pre.target

[Service]
Type=oneshot
RemainAfterExit=true

# Remount /home and /var as aufs
ExecStart=/bin/mount -t tmpfs tmpfs /aufs/rw
ExecStart=/bin/mkdir -p -m0755 /aufs/rw/var /aufs/rw/home
ExecStart=/bin/mount -t aufs -o br:/aufs/rw/var=rw:/var=ro none /var
ExecStart=/bin/mount -t aufs -o br:/aufs/rw/home=rw:/home=ro none /home

# Mount "pure" root to /aufs/ro for syncing changes
ExecStart=/bin/mount --bind / /aufs/ro
ExecStart=/bin/mount --make-private /aufs/ro
```

And then create the dirs used there and enable the unit:

```
# mkdir -p /aufs/{rw,ro}
# systemctl enable aufs
```

Now, upon rebooting the board, you'll get aufs mounts for /home and /var, making all the writes there go to the respective /aufs/rw dirs on tmpfs, while still reading all the contents from the underlying rootfs.
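A quick way to confirm after reboot that the unit actually did its job is to look for "aufs" entries in /proc/mounts. Below is a minimal sketch, with hypothetical helper names, that reports which of the expected mountpoints are not aufs. It handles the octal escapes (e.g. "\040" for a space) that /proc/mounts uses in paths.

```python
import re


def _unescape(field):
    # /proc/mounts encodes space, tab, newline and backslash as octal \NNN
    return re.sub(r"\\([0-7]{3})", lambda m: chr(int(m.group(1), 8)), field)


def aufs_mountpoints(mounts_text):
    """Return mountpoints with fstype "aufs" from /proc/mounts-style text."""
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()
        # fields: device, mountpoint, fstype, options, dump, pass
        if len(fields) >= 3 and fields[2] == "aufs":
            points.append(_unescape(fields[1]))
    return points


def missing_aufs(expected=("/var", "/home"), mounts_path="/proc/mounts"):
    """Return the expected mountpoints that are NOT currently aufs."""
    with open(mounts_path) as f:
        found = set(aufs_mountpoints(f.read()))
    return [p for p in expected if p not in found]
```

An empty list from missing_aufs() means both /var and /home are on aufs.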

To make sure systemd doesn't waste extra tmpfs space thinking it can sync logs to /var/log/journal, I'd also suggest doing this (before rebooting with aufs mounts):

```
# rm -rf /var/log/journal
# ln -s /dev/null /var/log/journal
```

This can also be done via journald.conf with Storage=volatile.

One obvious caveat with aufs is, of course, how to deal with things that do expect to have permanent storage in /var - examples can be pacman (the Arch package manager) on system updates, postfix or any db.

For stock Arch Linux ARM though, it's only pacman on manual updates.

And depending on the app and how "ok" the loss of this data might be, the app dir in /var (e.g. /var/lib/pacman) can be either moved + symlinked to /srv, or synced before shutdown or after the app is done writing (for manual oneshot apps like pacman).

For moving stuff back to the permanent fs, aubrsync from aufs2-util.git can be used like this:

```
# aubrsync move /var/ /aufs/rw/var/ /aufs/ro/var/
```

As even pulling that from shell history can be a bit tedious, I've made a simpler ad-hoc wrapper - aufs_sync - that can be used (with mountpoints similar to those presented above) like this:

```
# aufs_sync
Usage: aufs_sync { copy | move | check } [module]
Example (flushes /var): aufs_sync move var

# aufs_sync check
/aufs/rw
/aufs/rw/home
/aufs/rw/home/root
/aufs/rw/home/root/.histfile
/aufs/rw/home/.wh..wh.orph
/aufs/rw/home/.wh..wh.plnk
/aufs/rw/home/.wh..wh.aufs
/aufs/rw/var
/aufs/rw/var/.wh..wh.orph
/aufs/rw/var/.wh..wh.plnk
/aufs/rw/var/.wh..wh.aufs
--- ... just does "find /aufs/rw"

# aufs_sync move
--- does "aubrsync move" for all dirs in /aufs/rw
```
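Since most of that "check" output is aufs bookkeeping, a slightly smarter check could filter it out. Below is a hypothetical Python sketch of that idea. It lists the files actually written to the tmpfs branch, skips aufs-internal ".wh..wh.*" entries (like the orph/plnk/aufs ones above, and opaque-dir markers), and reports ".wh.<name>" whiteouts as files deleted on the aufs mount. This follows the usual aufs whiteout naming, but aufs_sync itself does nothing of the sort.

```python
import os

WH_PREFIX = ".wh."    # whiteout: ".wh.<name>" hides <name> from lower branches
WH_META = ".wh..wh."  # aufs-internal entries: .wh..wh.orph, .wh..wh.plnk, ...


def pending_changes(rw_root="/aufs/rw"):
    """Return (changed, deleted) paths, relative to rw_root.

    changed - files written on the aufs mounts (now only on tmpfs),
    deleted - files removed there, hidden by whiteouts in the rw branch.
    """
    changed, deleted = [], []
    for dirpath, dirnames, filenames in os.walk(rw_root):
        # skip aufs-internal dirs, sort the rest for stable output
        dirnames[:] = sorted(d for d in dirnames if not d.startswith(WH_META))
        rel_dir = os.path.relpath(dirpath, rw_root)
        for name in sorted(filenames):
            if name.startswith(WH_META):
                continue
            rel = os.path.normpath(os.path.join(rel_dir, name))
            if name.startswith(WH_PREFIX):
                deleted.append(os.path.join(
                    os.path.dirname(rel), name[len(WH_PREFIX):]))
            else:
                changed.append(rel)
    return changed, deleted
```

For the "check" listing above, this would report only home/root/.histfile as changed.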

Just be sure to check whether any new apps might write something important there (right after installing them), and make symlinks (to something like /srv) for their dirs, as even having "aufs_sync copy" on shutdown definitely won't prevent data loss for these on e.g. a sudden power blackout or any crash.