Sep 23, 2014

Update 2015-11-08: No longer necessary (or even supported in 2.1) - tmux's new "backoff" rate-limiting approach works like a charm with the defaults \o/

Had the issue of a spammy binary locking up the terminal for a long time, but never bothered to do something about it... until now.

Happens with any terminal I've seen - just run something like this in the shell there:

```sh
# for n in {1..500000}; do echo "$spam $n"; done
```

And for at least several seconds the terminal is totally unresponsive, no matter how many screen or tmux instances are running there.

It's usually faster to kill the terminal window via the WM and re-attach to whatever was inside from a new one.

xterm seems to be one of the most resistant *terms to this, while e.g. terminology is much less so, which I guess just means that it's fancier and hence slower at drawing millions of output lines.

Anyhow, tmuxrc magic:

```
set -g c0-change-trigger 150
set -g c0-change-interval 100
```

"man tmux" says that 250/100 are the defaults, but that doesn't seem to be true, as just setting these "defaults" explicitly here fixes the issue, which exists with the default configuration.

The fix just limits the rate of tmux output to basically 150 newlines (which is like twice my terminal height anyway) per 100 ms, so xterm won't get overflowed with a "rendering megs of text" backlog, remaining apparently unresponsive (to any other output) for a while.
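To make the trigger/interval idea a bit more concrete, here's a toy Python model of that kind of rate limiting. It is a simplified sketch under assumptions, not tmux's actual algorithm: the class name, the set of counted characters and the "draw"/"redraw"/"skip" actions are all made up for illustration, and time is passed in explicitly (in ms) so it can be driven by a test.

```python
class C0RateLimiter:
    """Toy model of trigger/interval rate limiting for one pane (hypothetical).

    Counts newline-like C0 characters per time window and, once a window
    exceeds `trigger`, stops drawing output directly and only allows one
    full redraw every `interval_ms` milliseconds.
    """

    C0_CHARS = "\r\n\b"  # carriage return, line feed, backspace

    def __init__(self, trigger=150, interval_ms=100):
        self.trigger = trigger
        self.interval_ms = interval_ms
        self.window_start = None  # start of the current counting window (ms)
        self.count = 0            # C0 chars seen in the current window
        self.limited = False      # True once the trigger was exceeded
        self.last_redraw = None   # time of the last full redraw (ms)

    def feed(self, data, now_ms):
        """Account for `data` arriving at `now_ms`, return the action taken."""
        if self.window_start is None or now_ms - self.window_start >= self.interval_ms:
            # New window: reset the counter and leave limited mode
            self.window_start, self.count, self.limited = now_ms, 0, False
        self.count += sum(data.count(c) for c in self.C0_CHARS)
        if self.count > self.trigger:
            self.limited = True
        if not self.limited:
            return "draw"  # pass output straight through to the terminal
        if self.last_redraw is None or now_ms - self.last_redraw >= self.interval_ms:
            self.last_redraw = now_ms
            return "redraw"  # one full-pane redraw instead of every line
        return "skip"  # output only updates the multiplexer's own screen state
```

Feeding it 1000 lines within one 100 ms window lets the first 150 through, triggers a single full redraw and swallows the rest, which is roughly the "no megs of text backlog" effect described above.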

Since I always run tmux as a top-level multiplexer in xterm, this totally solved the annoyance for me.

Just wish I'd done that much sooner - would've saved me a lot of time and probably some rage-burned neurons.

Jul 16, 2014

Tried to find any simple script to update tinydns (part of djbdns) zones that'd be better than ssh dns_update@remote_host update.sh, but failed - they all seem to be hacky php scripts, doomed to run behind httpds, send passwords in URLs, query random "myip" hosts or something like that.

What I want instead is something that won't be making http, tls or ssh connections (and stirring up all the crap behind these), but would rather just send udp or even icmp pings to remotes, which should be enough for an update, given the source IPs of these packets and some authentication payload.

So yep, wrote my own scripts for that - the tinydns-dynamic-dns-updater project.

The tool sends UDP packets with 100 bytes of "( key_id || timestamp ) || Ed25519_sig" from clients, and the server authenticates and distinguishes them by their signing keys ("key_id" there is to avoid iterating over all of them, checking which one matches the signature).
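A minimal Python sketch of such a 100-byte packet, assuming the non-signature part splits into a 28-byte key_id and an 8-byte big-endian timestamp (the post only gives the 100-byte total and the Ed25519 signature, which is always 64 bytes). Signing and verification are passed in as callables, so a real Ed25519 implementation (e.g. PyNaCl's nacl.signing module) can be plugged in; none of the names below come from the actual tinydns-dynamic-dns-updater code.

```python
import struct
from typing import Callable, Dict, Optional, Tuple

KEY_ID_LEN = 28   # assumption: size of the key_id field
TS_FMT = "!d"     # assumption: timestamp as an 8-byte big-endian double
TS_LEN = struct.calcsize(TS_FMT)
SIG_LEN = 64      # Ed25519 signatures are always 64 bytes
PACKET_LEN = KEY_ID_LEN + TS_LEN + SIG_LEN  # 100 bytes total


def build_packet(key_id: bytes, ts: float, sign: Callable[[bytes], bytes]) -> bytes:
    """Pack (key_id || timestamp) and append sign(payload)."""
    if len(key_id) != KEY_ID_LEN:
        raise ValueError("bad key_id length")
    payload = key_id + struct.pack(TS_FMT, ts)
    sig = sign(payload)
    if len(sig) != SIG_LEN:
        raise ValueError("signature must be 64 bytes")
    return payload + sig


def parse_packet(
    pkt: bytes, verifiers: Dict[bytes, Callable[[bytes, bytes], bool]]
) -> Optional[Tuple[bytes, float]]:
    """Return (key_id, ts) if key_id is known and its signature checks out."""
    if len(pkt) != PACKET_LEN:
        return None
    payload, sig = pkt[:-SIG_LEN], pkt[-SIG_LEN:]
    key_id = payload[:KEY_ID_LEN]
    verify = verifiers.get(key_id)  # direct lookup, no looping over all keys
    if verify is None or not verify(payload, sig):
        return None
    (ts,) = struct.unpack(TS_FMT, payload[KEY_ID_LEN:])
    return key_id, ts
```

Keying the verifier lookup on key_id is what avoids trying every known public key against each incoming packet.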

Server zone files can have "# dynamic: ts key1 key2 ..." comments before records (separated from static records after these by comments or empty lines), which say that the source IPs of any packets with correct signatures (and more recent timestamps) will be recorded in the A/AAAA records (depending on source AF) that follow, instead of what's already there, leaving anything else in the file intact.
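Here's a rough Python sketch of that zone-file rewrite, assuming tinydns-data "+fqdn:ip:ttl" / "=fqdn:ip:ttl" lines and integer timestamps in the "# dynamic:" comment. Only IPv4 is handled (stock tinydns-data has no dedicated AAAA line type, so that part is left out), and the function name and return convention are hypothetical rather than taken from the project.

```python
from typing import List, Tuple


def update_zone(lines: List[str], key: str, ip: str, ts: int) -> Tuple[List[str], bool]:
    """Point records under matching "# dynamic:" comments to `ip`.

    Returns (new_lines, changed), where `changed` is True only if some
    record's IP actually differs from what was there before.
    """
    out: List[str] = []
    changed = active = False
    for line in lines:
        s = line.strip()
        if s.startswith("# dynamic:"):
            fields = s[len("# dynamic:"):].split()
            keys = fields[1:]
            # Block applies only for a listed key and a newer timestamp
            active = key in keys and ts > int(fields[0])
            if active:
                line = "# dynamic: {} {}".format(ts, " ".join(keys))
        elif not s or s.startswith("#"):
            active = False  # blank line or any other comment ends the block
        elif active and s[0] in "+=":
            fields = s[1:].split(":")  # fqdn, ip, ttl, ...
            if len(fields) > 1 and fields[1] != ip:
                fields[1] = ip
                line = s[0] + ":".join(fields)
                changed = True
        out.append(line)
    return out, changed
```

The returned flag only goes up when some IP actually changed, so the caller can skip rewriting the zone file (and rebuilding data.cdb) otherwise; in this sketch the bumped timestamp in the comment only gets persisted along with an actual IP change.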

The zone file only gets replaced if something is actually updated, and it's possible to use a dynamic IP for the server as well, using a dynamic hostname on the client (which is resolved for each delayed packet).

The lossy nature of UDP can be easily mitigated by passing e.g. "-n5" to the client script, so it'd send 5 packets (with exponential delays by default, configurable via --send-delay), plus just running the thing at fairly regular intervals from crontab.
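A sketch of the client-side sending loop in Python, with a hypothetical delay schedule of 0, 1, 2, 4, 8... seconds (the actual --send-delay defaults aren't given here). The server hostname is re-resolved before every packet, so a dynamic address on the server side gets picked up too, and make_packet is called each time so every packet carries a fresh timestamp.

```python
import socket
import time


def send_delays(count, base=1.0, factor=2.0):
    """Delays (seconds) before each of `count` packets: 0, base, base*factor, ..."""
    if count < 1:
        return []
    return [0.0] + [base * factor ** i for i in range(count - 1)]


def send_packets(host, port, make_packet, count=5, sleep=time.sleep):
    """Send `count` UDP packets with exponentially growing delays (hypothetical)."""
    for delay in send_delays(count):
        sleep(delay)
        # Resolve again for every packet, in case the server's IP changed
        family, socktype, proto, _, addr = socket.getaddrinfo(
            host, port, type=socket.SOCK_DGRAM)[0]
        with socket.socket(family, socktype, proto) as sock:
            sock.sendto(make_packet(), addr)
```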

Putting the server script into a socket-activated systemd service file also makes all the daemon-specific pains like using privileged ports (and most other security/access things), startup/daemonization, restarts, auto-suspend timeout and logging woes just go away, so there's a --systemd flag for that too.
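For reference, systemd socket activation passes already-bound sockets starting at fd 3, announcing them via the LISTEN_PID and LISTEN_FDS environment variables (the protocol behind sd_listen_fds(3)), with the socket itself declared via e.g. ListenDatagram= in a .socket unit. A minimal Python sketch of picking that up, falling back to binding a socket by hand; the fallback address/port is a made-up placeholder, and this is not necessarily how the project's --systemd flag is implemented.

```python
import os
import socket

SD_LISTEN_FDS_START = 3  # first fd passed by systemd socket activation


def listen_fds(environ=None, pid=None):
    """Return fd numbers passed via socket activation, or [] if none."""
    environ = os.environ if environ is None else environ
    pid = os.getpid() if pid is None else pid
    try:
        if int(environ.get("LISTEN_PID", "")) != pid:
            return []  # variables were meant for some other process
        n = int(environ.get("LISTEN_FDS", ""))
    except ValueError:
        return []  # not socket-activated
    return list(range(SD_LISTEN_FDS_START, SD_LISTEN_FDS_START + n))


def get_udp_socket(bind_addr=("0.0.0.0", 5533)):  # placeholder fallback port
    fds = listen_fds()
    if fds:
        # Python 3.7+ detects family/type/proto from the passed fd
        return socket.socket(fileno=fds[0])
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(bind_addr)
    return sock
```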

Given how easy it is to run a djbdns/tinydns instance, there really doesn't seem to be any compelling reason not to use your own dynamic dns stuff for every single machine or device that can run simple python scripts.

Github link: tinydns-dynamic-dns-updater

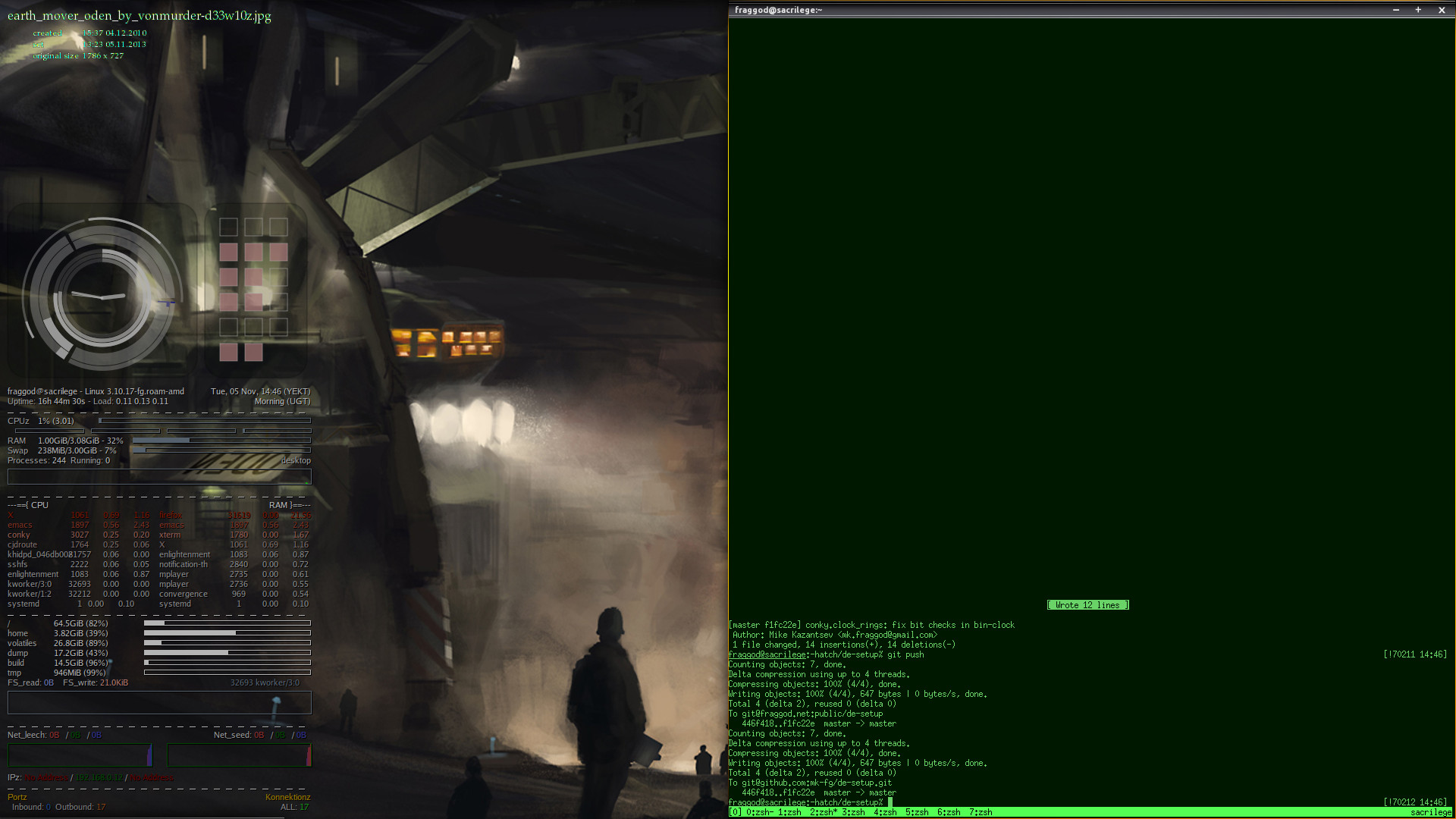

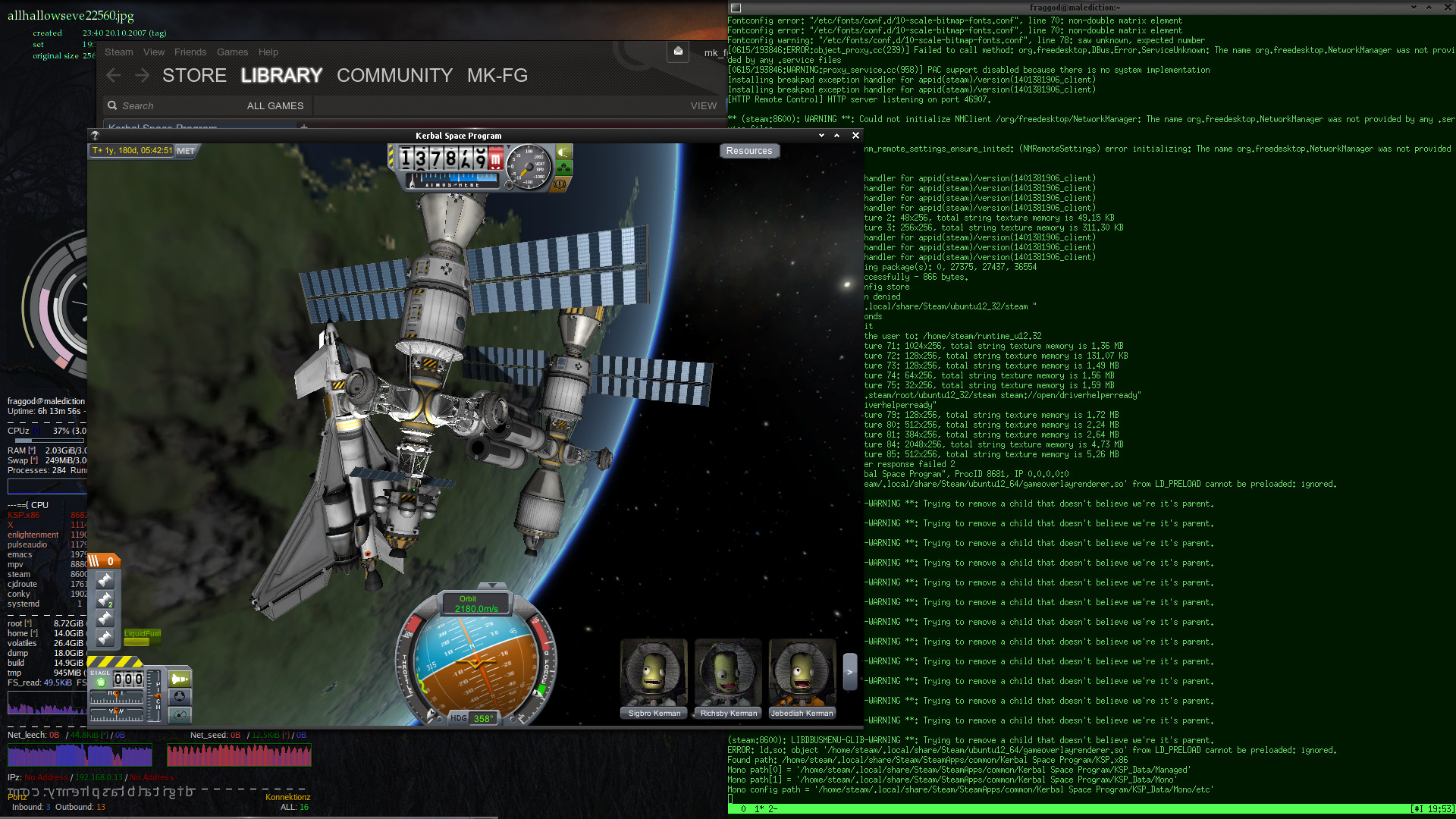

Jun 15, 2014

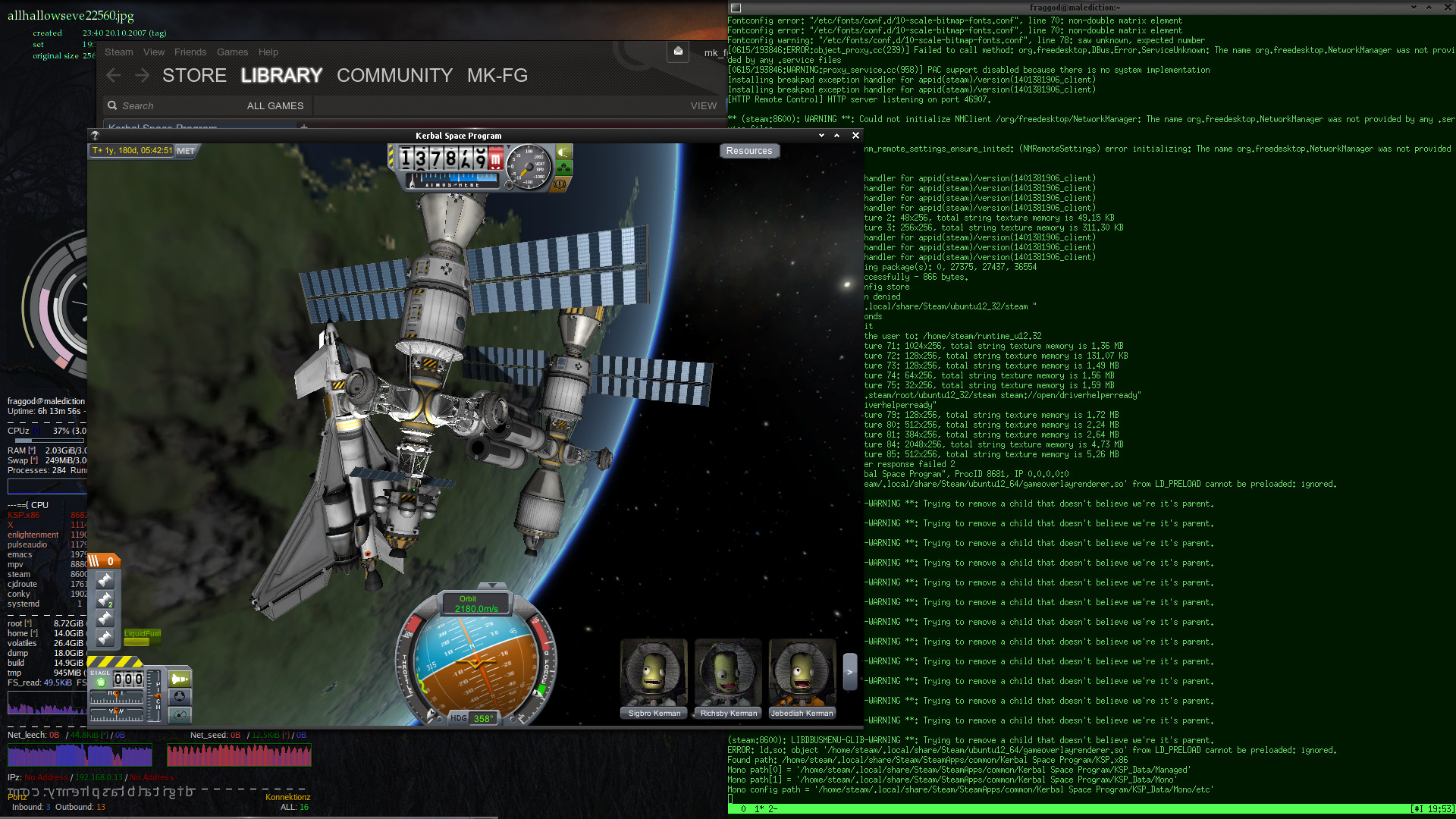

Finally got around to installing the Steam platform on a desktop linux machine.

Been using a Win7 instance here for games before, but as another fan in my laptop died, I've been too lazy to reboot into the dedicated games-os here.

Given that Steam is a closed-source proprietary DRM platform for mass software distribution, it seems to be either an ideal malware spread vector or just a recipe for disaster, so of course I'm not keen on giving it any access in a non-dedicated os.

I also feel a bit guilty about giving the thing any extra PR, as it's the worst kind of always-on DRM crap in principle, and has already pretty much monopolized the PC gaming market.

These days even many game critics push for filtering and essentially abuse of that immense leverage - not a good sign at all.

To its credit, of course, Steam is nice and convenient to use, as such things (e.g. google, fb, droids, apple, etc) tend to be.

So, isolation:

To avoid having Steam and any games anywhere near $HOME, giving it a separate UID is a good way to go.

That should also allow it to run in a separate desktop session - i.e. have its own cgroup, to easily contain, control and set limits for games:

```
% loginctl user-status steam
steam (1001)
           Since: Sun 2014-06-15 18:40:34 YEKT; 31s ago
           State: active
        Sessions: *7
            Unit: user-1001.slice
                  └─session-7.scope
                    ├─7821 sshd: steam [priv]
                    ├─7829 sshd: steam@notty
                    ├─7830 -zsh
                    ├─7831 bash /usr/bin/steam
                    ├─7841 bash /home/steam/.local/share/Steam/steam.sh
                    ├─7842 tee /tmp/dumps/steam_stdout.txt
                    ├─7917 /home/steam/.local/share/Steam/ubuntu12_32/steam
                    ├─7942 dbus-launch --autolaunch=e52019f6d7b9427697a152348e9f84ad ...
                    └─7943 /usr/bin/dbus-daemon --fork --print-pid 5 ...
```

AppArmor should allow further isolating processes from having any access beyond what's absolutely necessary for them to run, warning when they try to do strange things, and just restricting them from doing outright stupid things.

Given a separate UID and cgroup, network access from all Steam apps can be easily controlled via e.g. iptables, to avoid Steam and games scanning and abusing other things in the LAN, for example.

Creating the steam user should be as simple as useradd steam, but then switching to that UID from within a running DE should still allow it to access the same X server and start a systemd session for it, plus not have any extra env, permissions, dbus access, fds and such from the main session.

By far the easiest way to do that I've found is to just ssh steam@localhost, putting a proper pubkey into ~steam/.ssh/authorized_keys first, of course.

That should ensure that nothing leaks from the DE but whatever ssh passes, and it's a rather paranoid security-oriented tool, so it can be trusted with that.

It should allow both bootstrapping and installing stuff as well as running it, yet not allow steam to poke too much into other shared dirs or processes.

To allow access to X, xhost or the ~/.Xauthority cookie can be used along with some extra env in e.g. ~/.zshrc:

```sh
export DISPLAY=':1.0'
```

In a similar fashion to ssh, I've used pulseaudio network streaming to the main DE's sound daemon on localhost for sound (also in ~/.zshrc):

```sh
export PULSE_SERVER='{e52019f6d7b9427697a152348e9f84ad}tcp6:malediction:4713'
export PULSE_COOKIE="$HOME"/.pulse-cookie
```

(I have pulse network streaming set up anyway, for sharing sound from desktop to laptop - to e.g. play videos on a big screen there yet hear sound from the laptop's headphones)

Running Steam will also start its own dbus session (maybe it's the pulse client lib doing that, didn't check), but it doesn't seem to be used for anything, so there seems to be no need to share it with the main DE.

That should allow starting Steam after ssh'ing to steam@localhost, but the process can be made much easier (and more foolproof) with e.g. ~/bin/steam as:

```bash
#!/bin/bash

cmd=$1
shift

steam_wait_exit() {
  for n in {0..10}; do
    pgrep -U steam -x steam >/dev/null || return 0
    sleep 0.1
  done
  return 1
}

case "$cmd" in
  '')
    ssh steam@localhost <<EOF
source .zshrc
exec steam "$@"
EOF
    loginctl user-status steam ;;
  s*) loginctl user-status steam ;;
  k*)
    steam_exited=
    pgrep -U steam -x steam >/dev/null
    [[ $? -ne 0 ]] && steam_exited=t
    [[ -z "$steam_exited" ]] && {
      ssh steam@localhost <<EOF
source .zshrc
exec steam -shutdown
EOF
      steam_wait_exit
      [[ $? -eq 0 ]] && steam_exited=t
    }
    sudo loginctl kill-user steam
    [[ -z "$steam_exited" ]] && {
      steam_wait_exit || sudo loginctl -s KILL kill-user steam
    } ;;
  *) echo >&2 "Usage: $(basename "$0") [ status | kill ]"
esac
```

Now just running steam in the main DE will run the thing in its own $HOME.

For further convenience, there's steam status and steam kill to easily monitor or shut down a running Steam session from the terminal.

Note the complicated shutdown thing - Steam doesn't react to INT or TERM signals cleanly, passing these to the running games instead, so it should be terminated via its own cli option (and the rest can then be killed off too).

With this setup, iptables rules for outgoing connections can use a user-slice cgroup match (in 3.14 at least) or "-m owner --uid-owner steam" matches for the socket owner uid.

The only non-WAN things Steam connects to here are DNS servers and the aforementioned pulseaudio socket on localhost; the rest can be safely firewalled.

Finally, running KSP there on Exherbo, I quickly discovered that the sound libs and plugins (alsa and pulse) in the ubuntu "runtime" that steam bootstrap sets up don't work well - either there's no sound or the game fails to load at all.

An easy fix is to copy the runtime it uses (the 32-bit one for me) and clean up the alien stuff from there for what's already present in the system, i.e.:

```sh
% cp -R .steam/bin32/steam-runtime my-runtime
% find my-runtime -type f \
    \( -path '*asound*' -o -path '*alsa*' -o -path '*pulse*' \) -delete
```

And then add something like this to ~steam/.zshrc:

```sh
steam() { STEAM_RUNTIME="$HOME"/my-runtime command steam "$@"; }
```

That should keep all of the known-working Ubuntu libs that the steam bootstrap gets away from the rest of the system (where stuff like Mono just isn't needed, and others would cause trouble), while allowing to remove any of them from the runtime to use the same thing from the system instead.

And yay - Kerbal Space Program seems to work here way faster than on Win7.

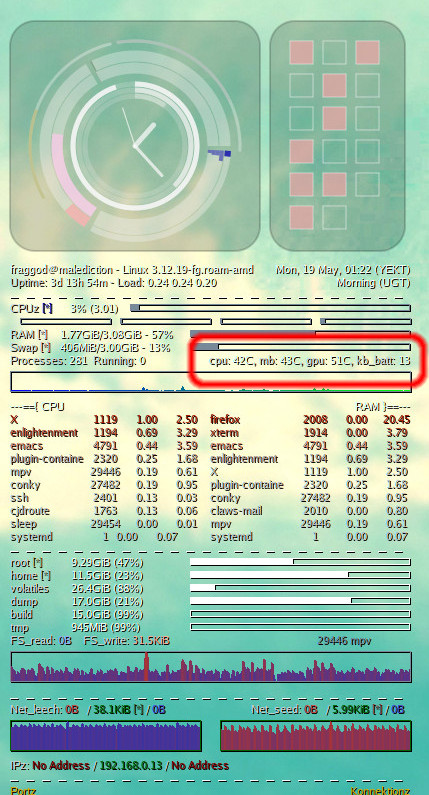

May 19, 2014

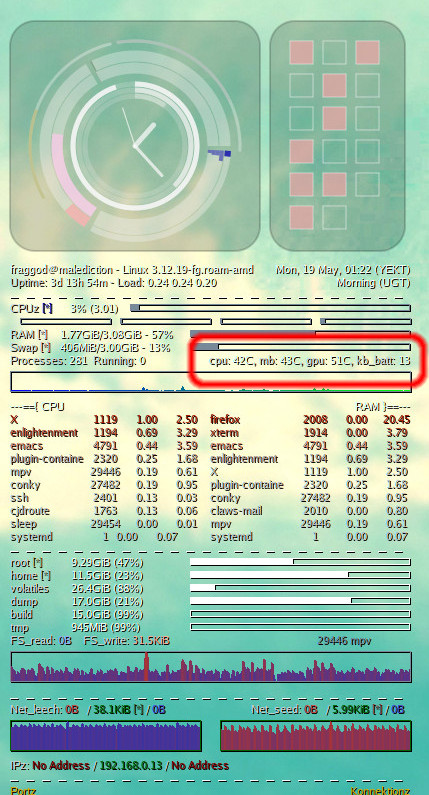

Conky sure has a ton of sensor-related hw-monitoring options, but they still don't seem to be enough to represent even just the temperatures from this "sensors" output:

```
atk0110-acpi-0
Adapter: ACPI interface
Vcore Voltage:      +1.39 V  (min =  +0.80 V, max =  +1.60 V)
+3.3V Voltage:      +3.36 V  (min =  +2.97 V, max =  +3.63 V)
+5V Voltage:        +5.08 V  (min =  +4.50 V, max =  +5.50 V)
+12V Voltage:      +12.21 V  (min = +10.20 V, max = +13.80 V)
CPU Fan Speed:     2008 RPM  (min =  600 RPM, max = 7200 RPM)
Chassis Fan Speed:    0 RPM  (min =  600 RPM, max = 7200 RPM)
Power Fan Speed:      0 RPM  (min =  600 RPM, max = 7200 RPM)
CPU Temperature:    +42.0°C  (high = +60.0°C, crit = +95.0°C)
MB Temperature:     +43.0°C  (high = +45.0°C, crit = +75.0°C)

k10temp-pci-00c3
Adapter: PCI adapter
temp1:        +30.6°C  (high = +70.0°C)
                       (crit = +90.0°C, hyst = +88.0°C)

radeon-pci-0400
Adapter: PCI adapter
temp1:        +51.0°C
```

Given the summertime and faulty noisy cooling fans, decided that it'd be nice to have an idea about what kind of temperatures the hw operates at under all sorts of routine tasks.

Conky is extensible via lua, which, among other awesome things, allows coding caches for expensive operations (and not just repeating them every other second) and parsing the output of whatever tools efficiently (i.e. without forking five extra binaries plus perl).

The output of "sensors", though, is not only kinda expensive to get, but also hardly parseable, likely unstable, and the tool doesn't seem to have any "machine data" option.

lm_sensors includes libsensors, which still doesn't seem possible to call from conky-lua directly (would need some kind of ffi), but it's easy to write a wrapper around it - i.e. this sens.c 50-liner, to dump info in a useful way:

```
atk0110-0-0__in0_input 1.392000
atk0110-0-0__in0_min 0.800000
atk0110-0-0__in0_max 1.600000
atk0110-0-0__in1_input 3.360000
...
atk0110-0-0__in3_max 13.800000
atk0110-0-0__fan1_input 2002.000000
atk0110-0-0__fan1_min 600.000000
atk0110-0-0__fan1_max 7200.000000
atk0110-0-0__fan2_input 0.000000
...
atk0110-0-0__fan3_max 7200.000000
atk0110-0-0__temp1_input 42.000000
atk0110-0-0__temp1_max 60.000000
atk0110-0-0__temp1_crit 95.000000
atk0110-0-0__temp2_input 43.000000
atk0110-0-0__temp2_max 45.000000
atk0110-0-0__temp2_crit 75.000000
k10temp-0-c3__temp1_input 31.500000
k10temp-0-c3__temp1_max 70.000000
k10temp-0-c3__temp1_crit 90.000000
k10temp-0-c3__temp1_crit_hyst 88.000000
radeon-0-400__temp1_input 51.000000
```

That's all lm_sensors seems to know about the hw, in a simple key-value form.
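As a Python sketch of how easy that key-value format is to consume, the following groups "chip__feature value" lines into nested dicts and flags readings above their own _max limit. Function names are hypothetical; lines that don't split into exactly two fields (like the "..." elisions above) are skipped.

```python
from typing import Dict, List


def parse_sens(text: str) -> Dict[str, Dict[str, float]]:
    """Group "chip__feature value" lines into {chip: {feature: value}}."""
    chips: Dict[str, Dict[str, float]] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 2 or "__" not in parts[0]:
            continue  # skip anything that isn't a "key value" pair
        chip, feature = parts[0].split("__", 1)
        chips.setdefault(chip, {})[feature] = float(parts[1])
    return chips


def over_limit(chips: Dict[str, Dict[str, float]]) -> List[str]:
    """List "chip__featN" names whose _input reading exceeds their _max."""
    hot = []
    for chip, feats in chips.items():
        for feat, val in feats.items():
            if feat.endswith("_input"):
                base = feat[:-len("_input")]
                limit = feats.get(base + "_max")
                if limit is not None and val > limit:
                    hot.append(chip + "__" + base)
    return hot
```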

Still not keen on running that on every conky tick, hence the lua cache:

```lua
sensors = {
  values=nil,
  cmd="sens",
  ts_read_i=120, ts_read=0,
}

function conky_sens_read(name, precision)
  local ts = os.time()
  if os.difftime(ts, sensors.ts_read) > sensors.ts_read_i then
    local sh = io.popen(sensors.cmd, 'r')
    sensors.values = {}
    for p in string.gmatch(sh:read('*a'), '(%S+ %S+)\n') do
      local n = string.find(p, ' ')
      sensors.values[string.sub(p, 0, n-1)] = string.sub(p, n)
    end
    sh:close()
    sensors.ts_read = ts
  end
  if sensors.values[name] then
    local fmt = string.format('%%.%sf', precision or 0)
    return string.format(fmt, sensors.values[name])
  end
  return ''
end
```

This runs the actual "sens" command at most every 120s, which is perfectly fine with me, since I don't consider conky to be an "early warning" system, but more of a "have an idea of what's the norm here" one.

Config-wise, it'd be just cpu temp: ${lua sens_read atk0110-0-0__temp1_input}C, or a fancier template version with a flashing warning, hidden for missing sensors:

```
template3 ${color lightgrey}${if_empty ${lua sens_read \2}}${else}\
${if_match ${lua sens_read \2} > \3}${color red}\1: ${lua sens_read \2}C${blink !!!}\
${else}\1: ${color}${lua sens_read \2}C${endif}${endif}
```

It can then be used simply as ${template3 cpu atk0110-0-0__temp1_input 60} or ${template3 gpu radeon-0-400__temp1_input 80}, with 60 and 80 being manually-specified thresholds beyond which the indicator turns red and gets a blinking "!!!" to attract more attention.

Overall result in my case is something like this:

sens.c (plus a Makefile with gcc -Wall -lsensors for it) and my conky config where it's used can all be found in the de-setup repo on github (or my git mirror, ofc).

May 18, 2014

Just had an epic moment wrt how to fail at kinda-basic math, which seems to be quite representative of how people fail wrt homebrew crypto code (and what everyone and their mom warns against).

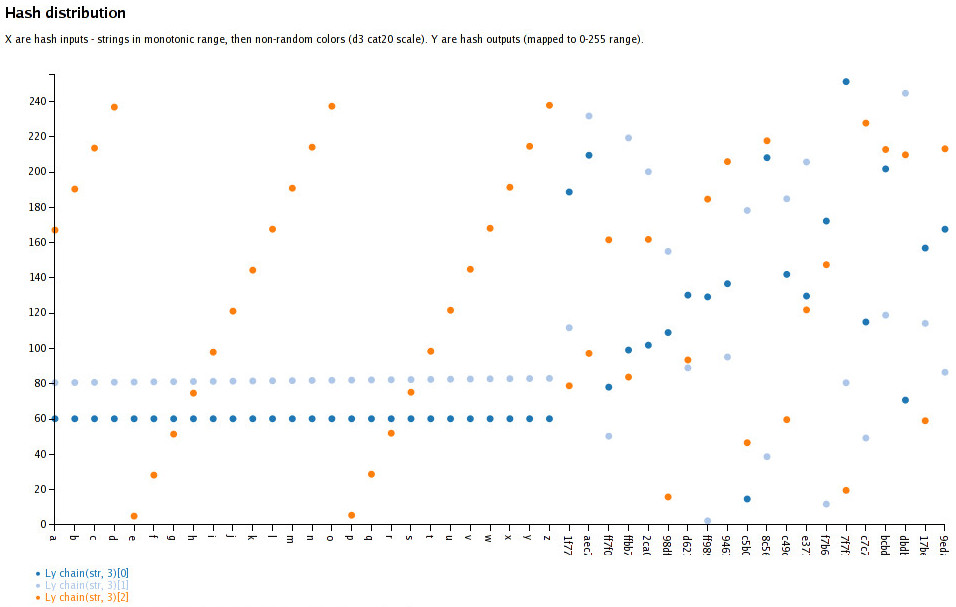

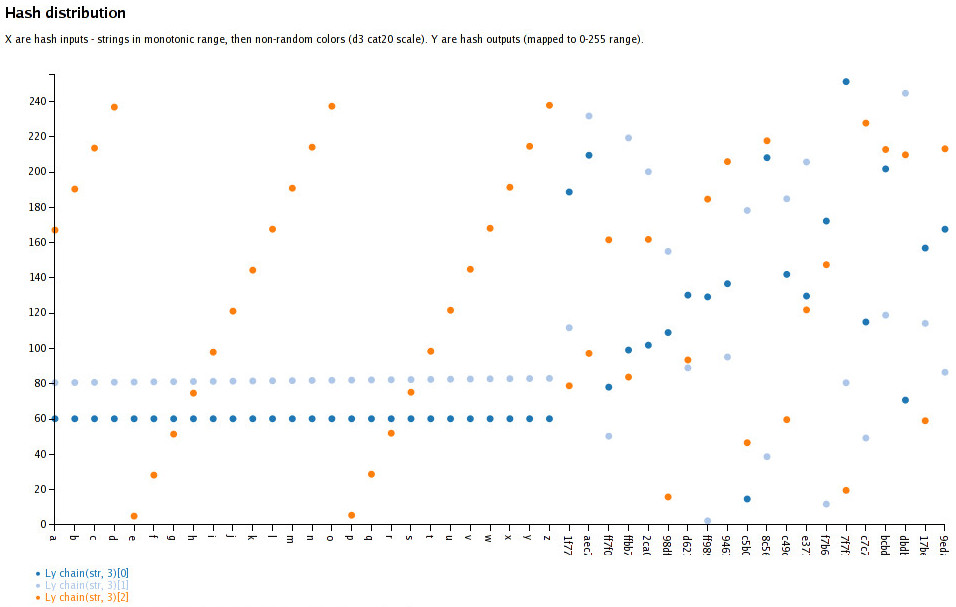

So, anyhow, on a d3 vis, I wanted to get pseudorandom colors for text blobs, but with reasonably same luminosity on the HSL scale (Hue - Saturation - Luminosity/Lightness/Level), so that changing opacity on a light/dark bg can be seen clearly as a change of L in the resulting color.

There are text items like (literally, in this example) "thing1", "thing2", "thing3" - these should all have distinct and constant colors, ideally.

So how do you pick H and S components in HSL from a text tag?

Just use hash, right?

As JS doesn't have any hashes yet (WebCryptoAPI is in the works) and I don't really need "crypto" here, just some str-to-num shuffler for color, decided that I might as well just roll out a simple one-liner non-crypto hash func implementation.

There are plenty of those around, e.g. this list.

Didn't want much bias wrt which range of colors gets picked, so there are these test results - link1, link2 - on how these functions work, e.g. performance and distribution of output values over the uint32 range.

Picked a random "ok" one - Ly hash, with fairly even output distribution, implemented as this:

```coffeescript
hashLy_max = 4294967296 # uint32

hashLy = (str, seed=0) ->
  for n in [0..(str.length-1)]
    c = str.charCodeAt(n)
    while c > 0
      seed = ((seed * 1664525) + (c & 0xff) + 1013904223) % hashLy_max
      c >>= 8
  seed
```

The c >>= 8 line and the inner loop are there because JS has unicode strings, so it's a trivial (non-standard) encoding.

But given any "thing1" string, I need two 0-255 values, H and S, not one 0-(2^32-1).

So let's map output to a 0-255 range and just call it twice:

```coffeescript
hashLy_chain = (str, count=2, max=255) ->
  [hash, hashes] = [0, []]
  scale = d3.scale.linear()
    .range([0, max]).domain([0, hashLy_max])
  for n in [1..count]
    hash = hashLy(str, hash)
    hashes.push(scale(hash))
  hashes
```

Note how, to produce the second hash output, "hashLy" just gets called with a "seed" value equal to the first hash - essentially hash(hash(value) || value).

People do that with md5, sha*, and their ilk all the time, right?

Getting the values from this func, noticed that they don't look random at all, which is not what I came to expect from hash functions, being quite used to dealing with crypto hashes, which are really easy to get in any lang but JS.

So, sure, given that I'm playing around with d3 anyway, let's just plot the outputs:

"Wat?... Oh, right, makes sense."

Especially with sequential items, it's hilarious how non-random, and even constant, the output there is.

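And it totally makes sense, of course - it's just a "(k1*seed + c + k2) mod 2^32" step per byte, i.e. a linear congruential generator (1664525 and 1013904223 are the classic Numerical Recipes LCG constants).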

It's meant for hash tables, where seq-in/seq-out is fine, and the results of the "chain(3)[0]" and "chain(3)[1]" calls are so close on 0-255 that they map to the same int value.
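The effect is easy to reproduce with a Python port of the two functions above (d3's linear scale replaced by plain arithmetic). Since the last step is (1664525*seed + c + 1013904223) mod 2^32, strings differing only by +1 in their last single-byte character get hashes exactly 1 apart, which is nothing after scaling to 0-255. For contrast, hs_bytes shows one possible alternative, taking H and S from an md5 digest; that's an illustration, not what the post ended up using.

```python
import hashlib

HASH_LY_MAX = 2 ** 32  # uint32 range, same as hashLy_max


def hash_ly(s, seed=0):
    """Port of hashLy: one LCG-style step per byte of each char code."""
    for ch in s:
        c = ord(ch)  # JS charCodeAt gives UTF-16 units; same value for BMP chars
        while c > 0:
            seed = (seed * 1664525 + (c & 0xFF) + 1013904223) % HASH_LY_MAX
            c >>= 8
    return seed


def hash_ly_chain(s, count=2, max_val=255):
    """Port of hashLy_chain: each hash is seeded with the previous one."""
    h, hashes = 0, []
    for _ in range(count):
        h = hash_ly(s, h)
        hashes.append(h * max_val / HASH_LY_MAX)  # linear scale to [0, max_val]
    return hashes


def hs_bytes(s):
    """Alternative (not from the post): take H and S bytes from an md5 digest."""
    digest = hashlib.md5(s.encode("utf-8")).digest()
    return digest[0], digest[1]
```

With hash_ly_chain, "thing1" and "thing2" get first values only 255/2^32 apart, i.e. the same H for all practical purposes.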

Plus, of course, the results are anything but "random-looking", even for the non-sequential strings of the d3.scale.category20() range.

Lesson learned - know what you're dealing with, be super-careful rolling your own math from primitives you don't really understand, stop and think about wth you're doing for a second - don't just rely on "intuition" (associated with e.g. the "hash" word).

Now I totally get how people start with AES and SHA1 funcs, mix them into their own crypto protocol and somehow get something analogous to ROT13 (or even double-ROT13, for extra hilarity) as a result.

May 12, 2014

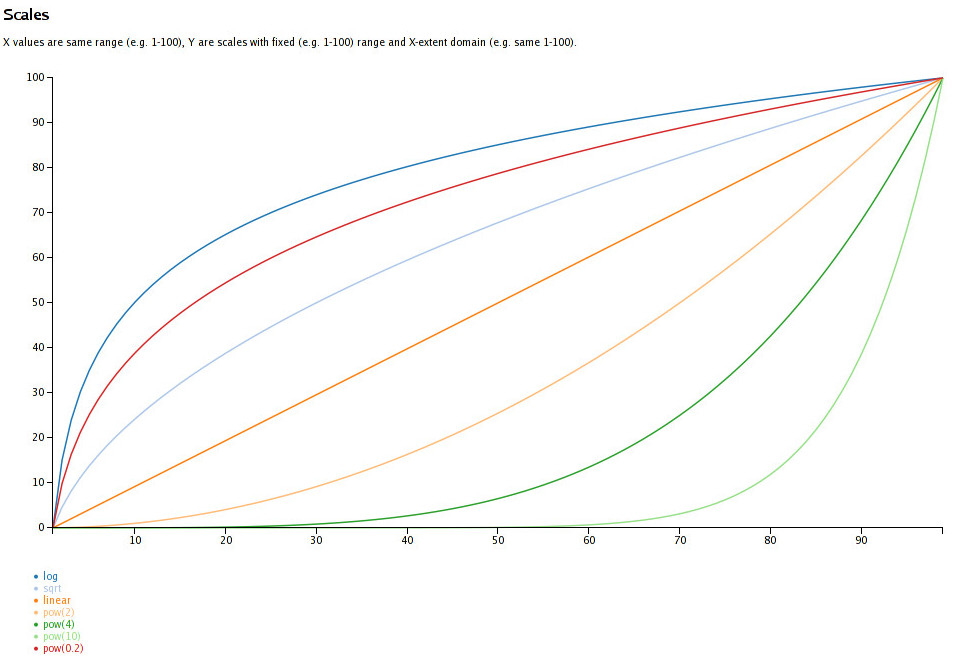

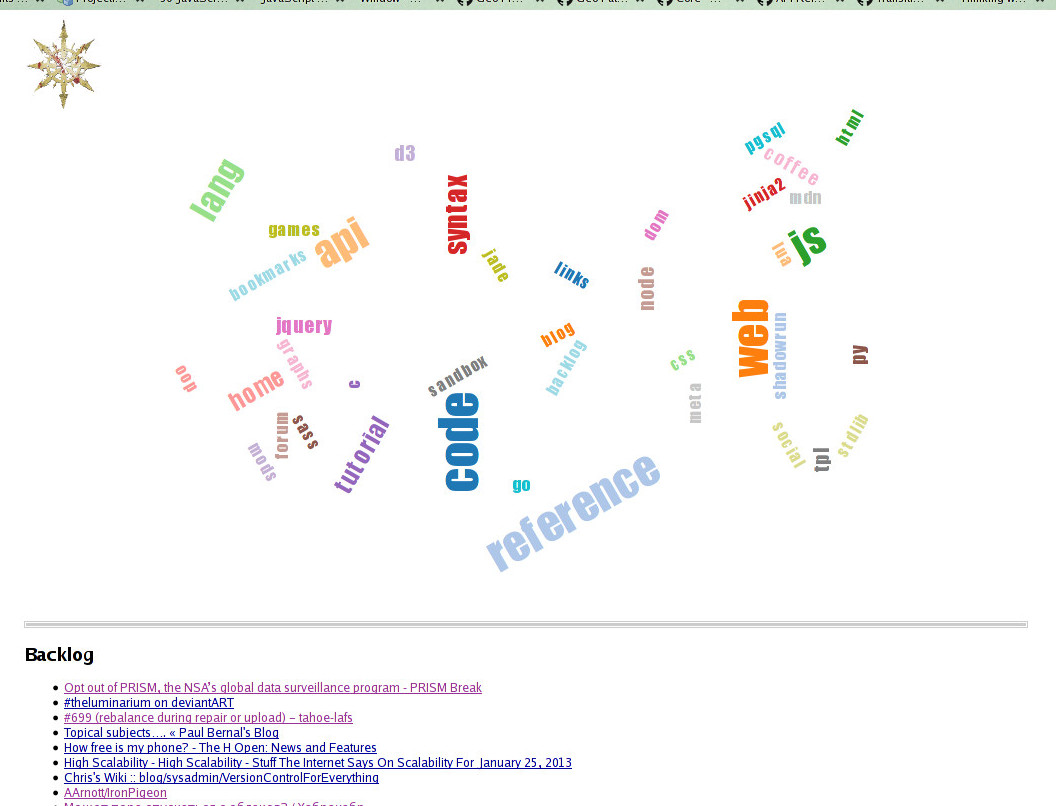

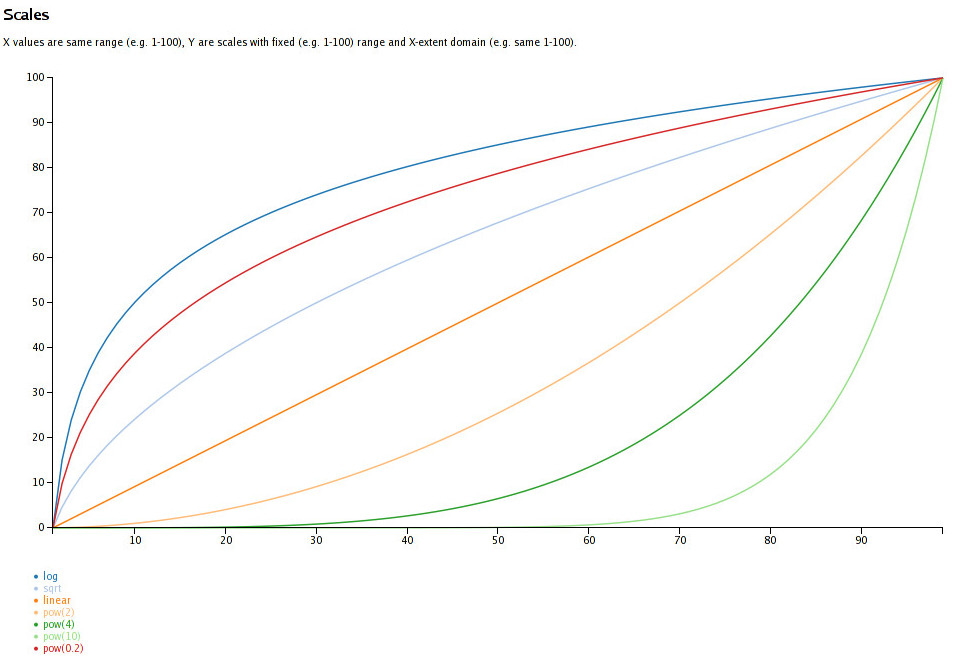

As I was working on a small d3-heavy project (my weird firefox homepage), I used d3 scales for things like the opacity of an item depending on its relevance, and found them a bit counter-intuitive, with no readily-available demo (i.e. X-Y graphs of scales with the same fixed domain/range) of how they actually work.

Basically, I needed this:

I'll be the first to admit that I'm no data scientist and not particularly good at math, but from what memories on the subject I have, intuition tells me that e.g. "d3.scale.pow().exponent(4)" should rise waaaay faster from the very start than "d3.scale.log()", but with fixed domain + range values, that's exactly the opposite of the truth!

So, a bit confused about the weird results I was getting, I just wrote a simple script to plot these charts for all basic d3 scales.

And, of course, once I saw a graph, it was fairly clear how that works.

Here, it's obvious that if you want to pick something that mildly favors higher X values, you'd pick pow(2), and not sqrt.
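A small Python sketch of what those graphs show: like d3's pow and log scales, it transforms both the domain and the input, then interpolates linearly into the range. This is a simplified model assuming positive domains (no clamping, no negative-value handling), not d3's actual code. With the same domain [1, 10], pow(4) is still below 0.1 at the midpoint while log is already past 0.7.

```python
import math


def make_scale(transform, domain=(0.0, 1.0), rng=(0.0, 1.0)):
    """Transform domain and input, then interpolate linearly (d3-style)."""
    t0, t1 = transform(domain[0]), transform(domain[1])

    def scale(x):
        t = (transform(x) - t0) / (t1 - t0)  # position within transformed domain
        return rng[0] + t * (rng[1] - rng[0])

    return scale


def pow_scale(k, **kw):
    """Rough analog of d3.scale.pow().exponent(k); k=0.5 is sqrt."""
    return make_scale(lambda x: x ** k, **kw)


def log_scale(**kw):
    """Rough analog of d3.scale.log() (base doesn't matter after normalizing)."""
    return make_scale(math.log, **kw)


p4 = pow_scale(4, domain=(1, 10))
lg = log_scale(domain=(1, 10))
for x in (1, 2.5, 5.5, 8, 10):
    print("x={:<4} pow(4)={:.3f} log={:.3f}".format(x, p4(x), lg(x)))
```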

Feel like such a chart should be in the docs, but don't feel qualified enough to add it, and maybe it's counter-intuitive just for me, as I don't dabble with data visualizations much and/or might be too much of a visually inclined person.

In case someone needs the script to do the plotting (it's really trivial though): scales_graph.zip

May 12, 2014

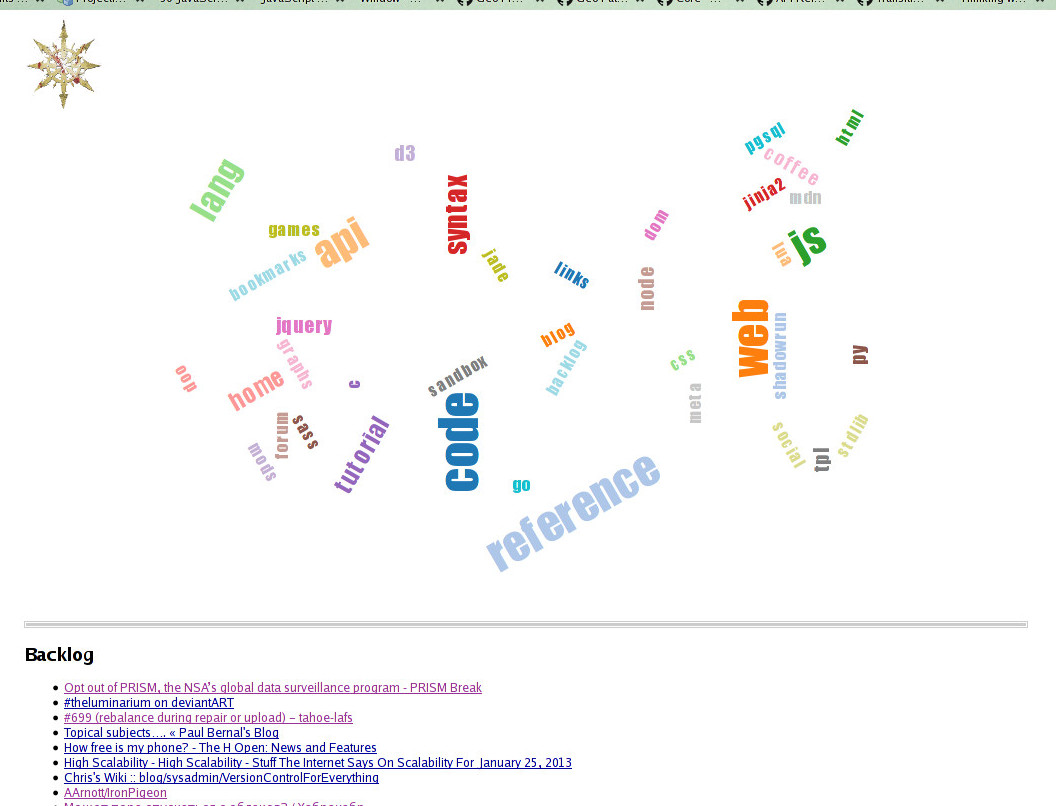

Wanted to have some sort of "homepage with my fav stuff, arranged as I want it" in firefox for a while, and finally got the resolve to do something about it - just finished the (first version of a) script to generate the thing - firefox-homepage-generator.

The default "grid of page screenshots" never worked for me, and while there are other projects that do other layouts for different stuff, they just aren't flexible enough to do whatever horrible thing I want.

In this particular case, I wanted to experiment with a chaotic tag cloud of bookmarks (so they won't ever be in the same place), a relations graph for these tags and random picks for "links to read" from the backlog.

The result is a dynamic d3 + d3.layout.cloud (interactive example of this layout) page without much style:

The "Mark of Chaos" button in the corner can fly/re-pack tags around.

Clicking a tag shows bookmarks tagged as such and fades all other tags out in proportion to how they're related to the clicked one (i.e. how many links share the tag with others).
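One plausible way to compute such fading, sketched in Python: count how many bookmarks share each pair of tags, then scale every other tag's opacity by its shared-link count with the clicked tag, relative to the most-related one. The min_opacity floor and the function names are assumptions for illustration; the actual generator does this in d3/JS and may weigh things differently.

```python
from collections import Counter
from itertools import combinations


def tag_cooccurrence(bookmarks):
    """Count how many bookmarks share each (sorted) pair of tags."""
    pairs = Counter()
    for tags in bookmarks:
        for a, b in combinations(sorted(set(tags)), 2):
            pairs[(a, b)] += 1
    return pairs


def tag_opacity(clicked, all_tags, pairs, min_opacity=0.1):
    """Opacity per tag, proportional to links shared with the clicked tag."""
    shared = {t: pairs[tuple(sorted((clicked, t)))] for t in all_tags if t != clicked}
    top = max(shared.values(), default=0) or 1  # avoid dividing by zero
    result = {clicked: 1.0}
    for t, n in shared.items():
        result[t] = min_opacity + (1.0 - min_opacity) * n / top
    return result
```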

Started using FF bookmarks again in a meaningful way only recently, so not much stuff there yet, but it does seem to help a lot, especially with these handy awesome bar tricks.

Not entirely sure how useful the cloud visualization or actually having a homepage will be, but it's a fun experiment and a nice place to collect any useful web-surfing-related stuff I might think of in the future.

Repo link: firefox-homepage-generator

Mar 19, 2014

Dragonfall is probably the best CRPG (and game, given that it's my fav genre) I've played in a few years.

Right mix of setting, world, characters, tone, choices and mechanics, and it only gets more amazing towards the end.

I think all the praise it gets is well deserved - for me it's up there with early Bioware and Black Isle games (BGs, PST) and Fallouts.

Didn't quite expect it to be, after an okay'ish first SRR experience with Dead Man's Switch, and hopefully there's more to come from HBS - it would even justify another kickstarter imo.

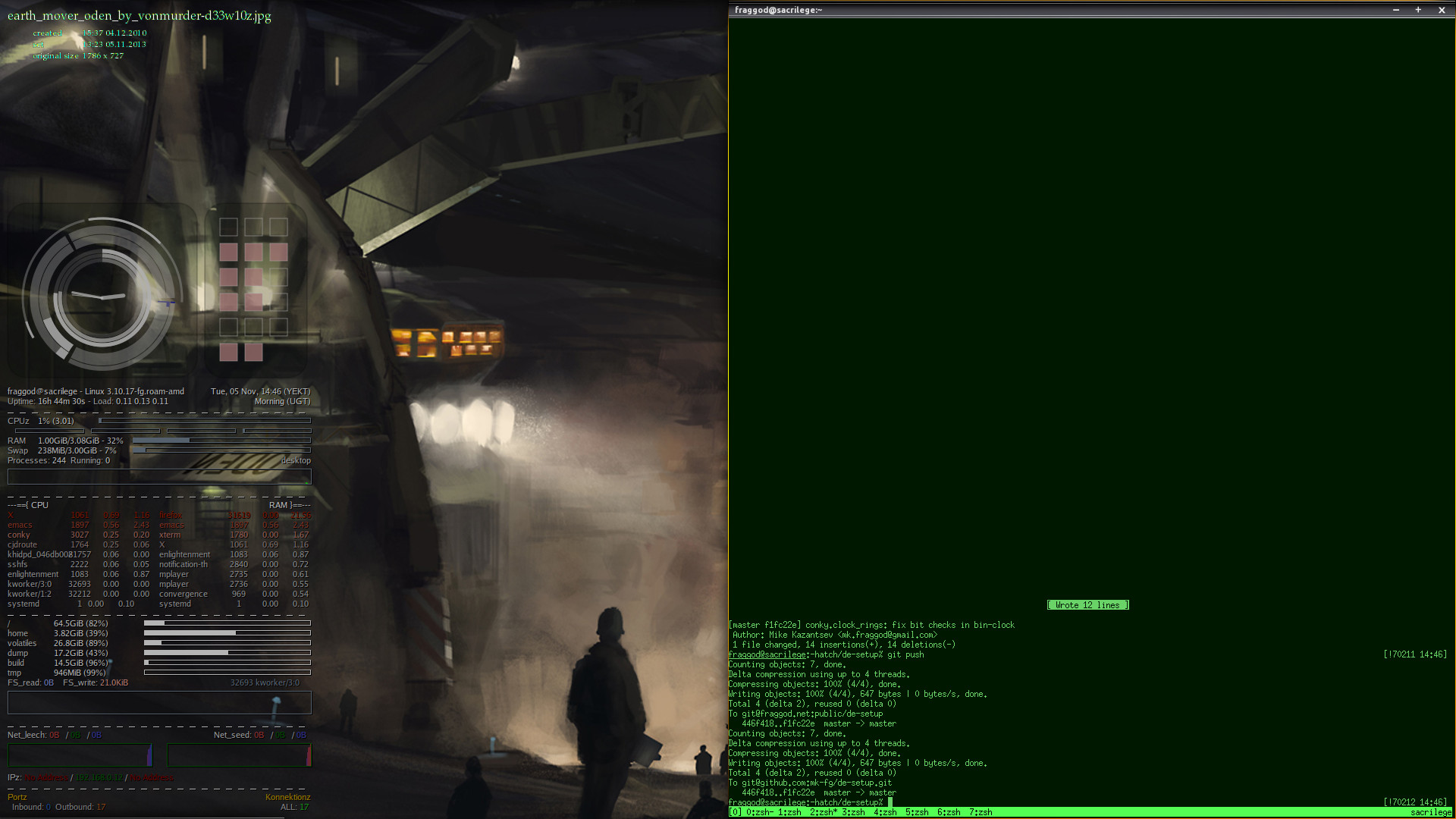

Nov 05, 2013

So my laptop broke anyway, but on the bright side - I've got a fairly large (certainly by my display standards) desktop fullhd screen now.

While restoring the OS there, decided to update ~/.conkyrc (see conky), as it was kinda small for this larger screen, so why not put some eye-candy there while at it?

The leftmost radial meters show (inner-to-outer) a clock with hands and rings right next to them, blue-ish cpu arcs (right-bottom; the outer one is the load summary, inner ones are per-core), used (non-cache) memory/swap (left), network traffic (top-right, green/red arcs for up/down) and / and /home df arcs (outer top).

On the right it's the good ol' binary clock.

All drawings are done by a lua script; all text and graphs below are conky's magic.

The whole script to draw these things can be found in the de-setup repo on gh, along with the full conkyrc I currently use.

Nov 01, 2013

Had a fan in my laptop dying for a few weeks now, but with international mail being universally bad (and me being too hopeful about the dying fan's lifetime), the replacement from ebay is still on its looong way.

Meanwhile, the thing started screeching like mad, causing strong vibration in the plastic and stopping/restarting every few seconds with an audible thunk.

Things not looking good, and me being too lazy to work hard enough to be able to afford a new laptop, I had to do something to postpone this one's imminent death.

Cleaning the dust and hairs out of the fan's propeller and heatsink and changing the thermal paste did make the thing a bit cooler, but given that it's a fairly slim Acer S3 ultrabook, no local repair shop was able to offer any immediate replacement for the fan, so no clean hw fix is in reach yet.

The interesting thing about broken fans, though, is that they seem to start vibrating madly out of control only beyond a certain speed, so one option was to slow the thing down while keeping the cpu cool somehow.

The cpupower tool that comes with the linux kernel can nicely downclock this i5 cpu to 800 MHz, but that's not really enough to keep the fan from spinning madly - some default BIOS code seems to be putting it to 100% at 50C.

Besides, from what I've seen, it seems to be quite counter-productive: it makes everything (e.g. opening a page in FF) take much longer and keeps the cpu at 100% of that lower rate all the time, which does heat it up slower, sure, but to the same or even higher level for the same task (e.g. opening that web page), with the side effect of also wasting time.

Luckily, found out that the fan on Acer laptops can be controlled using /dev/port registers, as described on the linlap wiki page.

50C doesn't seem to be high for these CPUs at all, and one previous laptop worked fine at 80C all the time, so making the threshold for killing the fan higher seems to be a good idea - it's not like there's much to lose anyway.

As the acers3fand script linked from the wiki was for a somewhat different purpose, I wrote my own (also lighter and more self-contained) script - fan_control - which only puts more than ~50% of power into the fan after the cpu goes beyond 60C, and warns if it heats up way more, without ever putting the fan into "wailing death" mode: max is at about 75% power, with the cpupower hack kicking in before that.
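The policy part of that can be sketched in Python as a simple temperature-to-power curve: flat ~50% up to 60C, then linear up to a 75% cap, plus a downclocking fallback and a warning at the hot end. The 75C and 80C points and the action names are hypothetical, and the actual /dev/port register writes from the linlap page are deliberately left out.

```python
def fan_policy(temp_c, t_low=60.0, d_low=50.0, t_high=75.0, d_high=75.0, warn_at=80.0):
    """Return (fan power %, extra actions) for a cpu temperature (hypothetical)."""
    if temp_c <= t_low:
        duty = d_low
    elif temp_c >= t_high:
        duty = d_high  # never go above this, to avoid the "wailing death" speed
    else:
        # Linear ramp from d_low at t_low to d_high at t_high
        duty = d_low + (d_high - d_low) * (temp_c - t_low) / (t_high - t_low)
    actions = []
    if temp_c >= t_high:
        actions.append("downclock")  # e.g. fall back to the cpupower trick
    if temp_c >= warn_at:
        actions.append("warn")
    return duty, actions
```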

Such manual control opens up the possibility of cpu overheating though, or otherwise doesn't help much when you run cpu-intensive stuff, and I kinda don't want to worry about some cronjob, stuck dev script or hung DE app killing the machine while I'm away, so one additional hack I could think of is to just throttle CPU bandwidth enough so that:

- short tasks complete at top performance, without delays.
- long cpu-intensive stuff gets throttled to a point where it can't generate enough heat, and the cpu stays at some 60C with slow fan speed.
- some known-to-be-intensive tasks like compilation get their own especially low limits.

So kinda like the cpupower trick, but more fine-grained and without fixed presets one can slow things down to (as the lowest bar there doesn't cut it).

Kernel Control Groups (cgroups) turned out to have the right thing for that - the "cpu" resource controller there has cpu.cfs_quota_us/cpu.cfs_period_us knobs to control cpu bandwidth for threads within a specific cgroup.

New enough systemd has the concept of "slices" to control resources for groups of services, which are applied automatically for all DE stuff as "user.slice" and its "user-<name>.slice" subslices, so all that had to be done is to echo the right values (which don't cause overheating or fan-fail) into that controller's /sys knobs.
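The arithmetic behind those knobs is simple: within every cpu.cfs_period_us window, the group may run for at most cpu.cfs_quota_us of cpu time, summed over all cpus (-1 means unlimited). A Python sketch that computes and writes them, assuming a cgroup-v1 "cpu" controller directory such as /sys/fs/cgroup/cpu,cpuacct/user.slice (the exact mount path varies between systems); the function names are hypothetical.

```python
import os


def cfs_quota_us(cpu_fraction, period_us=100000, ncpus=1):
    """Quota letting the group use `cpu_fraction` of `ncpus` cpus per period."""
    return int(round(cpu_fraction * ncpus * period_us))


def set_cpu_limit(cgroup_dir, cpu_fraction, period_us=100000, ncpus=1):
    """Write cgroup-v1 CFS bandwidth knobs in `cgroup_dir` (needs root)."""
    quota = cfs_quota_us(cpu_fraction, period_us, ncpus)
    for knob, value in (("cpu.cfs_period_us", period_us), ("cpu.cfs_quota_us", quota)):
        with open(os.path.join(cgroup_dir, knob), "w") as f:
            f.write("{}\n".format(value))
    return quota
```

E.g. set_cpu_limit("/sys/fs/cgroup/cpu,cpuacct/user.slice", 0.5, ncpus=4) would cap all DE stuff at the equivalent of two fully busy cores.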

Similar generic limitations are easy to apply to other services there by grouping them with the Slice= option.

For distinct limits on daemons started from the cli, there's the "systemd-run" tool these days, and for more proper interactive wrapping, I've had pet cgroup-tools scripts for a while now (though those were meant to limit cpu priority of heavier bg stuff like builds).

With that last tweak, the situation seems to be under control - no stray app can really kill the cpu, and the fan doesn't have to do all the hard work to prevent it either, seemingly solving that hardware fail with software measures for now.

Keeping the mobile i5 cpu around 50 degrees apparently needs the fan to spin only barely, yet seems to allow all the desktop stuff to function without noticeable slowdowns or difference.

Makes me wonder why Intel allowed those low-power ARM things to fly past it...

Now, if only the replacement fan got here before I drop off the nets even with these hacks.