Oct 02, 2019

Something like 8y ago, back in 2011, it was ok to manage cgroup-imposed resource

limits in parallel to systemd (e.g. via libcgroup or custom tools), but over

years cgroups moved increasingly towards "unified hierarchy" (for many good

reasons, outlined in kernel docs), joining controllers at first, and then

creating new unified cgroup-v2 interface for it.

Latter no longer allows to separate systemd process-tracking tree from ones for

controlling specific resources, so you can't put restrictions on pids without

either pulling them from hierarchy that systemd uses (making it lose track of

them - bad idea) or changing cgroup configuration in parallel to systemd,

potentially stepping on its toes (also bad idea).

So with systemd running in unified cgroup-v2 hierarchy mode (can be compiled-in

default, esp. in the future, or enabled via systemd.unified_cgroup_hierarchy

on cmdline), there are two supported options to manage custom resource limits:

- By using systemd, via its slices, scopes and services.

- By taking over control of some cgroup within systemd hierarchy

via Delegate= in units.

Delegation necessitates managing pids within that subtree outside of systemd

entirely, while first one is simpler of the two, where instead of libcgroup,

cgmanager or cgconf config file, you'd define all these limits in systemd

.slice unit-file hierarchy like this:

# apps.slice

[Slice]

CPUWeight=30

IOWeight=30

MemoryHigh=5G

MemoryMax=8G

MemorySwapMax=1G

# apps-browser.slice

[Slice]

CPUWeight=30

IOWeight=30

MemoryHigh=3G

# apps-misc.slice

[Slice]

MemoryHigh=700M

MemoryMax=1500M

These can reside under whatever pre-defined slices (see systemctl status for

full tree), including "systemd --user" slices, where users can set these up as well.

Running arbitrary desktop app under such slices can be done as a .service with

all Type=/ExecStart=/ExecStop= complications or just .scope as a bunch of

arbitrary unmanaged processes, using systemd-run:

% systemd-run -q --user --scope \

--unit chrome --slice apps-browser -- chromium

Scope will inherit all limits from specified slices, as composed into hierarchy

(with the usual hyphen-to-slash translation in unit names), and auto-start/stop

the scope (when all pids there exit) and all slices required.
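The hyphen-to-slash translation mentioned above can be sketched in a few lines of python. This is only an illustration of the naming rule, not code from systemd: `slice_cgroup_path` is a made-up helper name, it ignores unit-name escaping, and it returns a path relative to wherever the manager's own cgroup root is (for "systemd --user" that root is itself nested under the user's session service).

```python
def slice_cgroup_path(unit):
    """Return relative cgroup path for a slice unit name like 'a-b.slice'."""
    suffix = '.slice'
    if not unit.endswith(suffix):
        raise ValueError(f'not a slice unit name: {unit!r}')
    name = unit[:-len(suffix)]
    if name == '-':
        return ''  # "-.slice" is the root slice
    parts = name.split('-')
    # Each dash-separated prefix names one parent slice in the tree
    return '/'.join(
        '-'.join(parts[:n]) + suffix for n in range(1, len(parts) + 1))

print(slice_cgroup_path('apps-browser.slice'))
```

So apps-browser.slice ends up nested under apps.slice, which is why limits set on the parent apply to everything below it.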

So no extra scripts for mucking about in /sys/fs/cgroup are needed anymore,

whole subtree is visible and inspectable via systemd tools (e.g. systemctl

status apps.slice, systemd-cgls, systemd-cgtop and such), and can be

adjusted on the fly via e.g. systemctl set-property apps-misc.slice CPUWeight=30.

My old cgroup-tools provided few extra things for checking cgroup contents from

scripts easily (cgls), queueing apps from shell via cgroups and such (cgwait,

"cgrc -q"), which systemctl and systemd-run don't provide, but easy to implement

on top as:

% cg=$(systemctl -q --user show -p ControlGroup --value -- apps-browser.scope)

% procs=$( [ -z "$cg" ] ||

find /sys/fs/cgroup"$cg" -name cgroup.procs -exec cat '{}' + 2>/dev/null )
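Same pid-listing idea as the shell snippet above, as a rough python sketch for use from scripts. `cgroup_pids` is a hypothetical helper (not part of cgrc), and it assumes the unified hierarchy is mounted at /sys/fs/cgroup and that the `cg` value comes from the ControlGroup property, as in the systemctl call above.

```python
import os

def cgroup_pids(cg, root='/sys/fs/cgroup'):
    """Collect pids from all cgroup.procs files under root + cg, recursively."""
    pids = set()
    for path, dirs, files in os.walk(os.path.join(root, cg.lstrip('/'))):
        if 'cgroup.procs' not in files:
            continue
        try:
            with open(os.path.join(path, 'cgroup.procs')) as src:
                pids.update(int(line) for line in src if line.strip())
        except OSError:
            pass  # cgroup can vanish between listing and reading
    return sorted(pids)
```

A missing or already-removed cgroup simply yields an empty list, which matches the "empty" case of the shell version.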

Ended up wrapping long systemd-run commands along with these into cgrc wrapper

shell script in spirit of old tools:

% cgrc -h

Usage: cgrc [-x] { -l | -c | -q [opts] } { -u unit | slice }

cgrc [-x] [-q [-i N] [-t N]] [-u unit] slice -- cmd [args...]

Run command via 'systemd-run --scope'

within specified slice, or inspect slice/scope.

Slice should be pre-defined via .slice unit and starts/stops automatically.

--system/--user mode detected from uid (--system for root, --user otherwise).

Extra options:

-u name - scope unit name, derived from command basename by default.

Starting scope with same unit name as already running one will fail.

With -l/-c list/check opts, restricts check to that scope instead of slice.

-q - wait for all pids in slice/scope to exit before start (if any).

-i - delay between checks in seconds, default=7s.

-t - timeout for -q wait (default=none), exiting with code 36 afterwards.

-l - list all pids within specified slice recursively.

-c - same as -l, but for exit code check: 35 = pids exist, 0 = empty.

-x - 'set -x' debug mode.

% cgrc apps-browser -- chromium &

% cgrc -l apps | xargs ps u

...

% cgrc -c apps-browser || notify-send 'browser running'

% cgrc -q apps-browser ; notify-send 'browser exited'
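The -q/-i/-t waiting logic from the help output above boils down to a polling loop, roughly like the python sketch below. It is not how cgrc itself is written (that is a shell script); `wait_until_empty` and its parameters are made-up names, and the caller would map a False result to the exit code of its choice (cgrc uses 36 for a timeout).

```python
import time

def wait_until_empty(
        list_pids, interval=7.0, timeout=None,
        sleep=time.sleep, clock=time.monotonic):
    """Poll list_pids() until it returns nothing.
    Returns True when empty, False if timeout (seconds) expires first."""
    deadline = None if timeout is None else clock() + timeout
    while list_pids():
        if deadline is not None and clock() >= deadline:
            return False
        sleep(interval)
    return True
```

`list_pids` can be any callable returning the current pids of a slice or scope, e.g. a function reading cgroup.procs files.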

That + systemd slice units seem to replace all old resource-management cruft

with modern unified v2 tree nicely, and should probably work for another decade, at least.

Jul 17, 2019

Was finally annoyed enough by lack of one-hotkey way to swap arguments in stuff

like cat /some/log/path/to/file1 > /even/longer/path/to/file2, which is

commonly needed for "edit and copy back" or various rsync tasks.

Zsh has transpose-words widget (in zle terminology) bound to ESC-t, and its

transpose-words-match extension, but they all fail miserably for stuff above -

either transpose parts (e.g. path components, as they are "words"), wrong args

entirely or don't take stuff like ">" into account (should be ignored).

These widgets are just small zsh funcs to edit/set BUFFER and CURSOR though,

and binding/replacing/extending them is easy, except that doing any non-trivial

string manipulation in shell is sort of a nightmare.

Hence sh-embedded python code for that last part:

_zle-transpose-args() {
  res=$(python3 - 3<<<"$CURSOR.$BUFFER" <<'EOF'
import re

pos, line = open(3).read().rstrip('\r\n').split('.', 1)
pos, suffix = int(pos) if pos else -1, ''

# Ignore words after cursor, incl. if it's on first letter
n = max(0, pos-1)
if pos > 0 and line[n:n+1]:
  if line[n:n+1].strip(): n += len(line[n:].split(None, 1)[0])
  line, suffix = line[:n], line[n:]

line = re.split(r'(\s+)', line.rstrip('\r\n'))
arg_ns = list( n for n, arg in enumerate(line)
  if arg.strip() and not re.search(r'^[-<>|&]{1,4}$', arg) )
line[arg_ns[-2]], line[arg_ns[-1]] = line[arg_ns[-1]], line[arg_ns[-2]]
line = ''.join(line) + suffix

if pos < 0: pos = len(line)
print(f'{pos:02d}{line}\n', end='')
EOF
  )
  [[ $? -eq 0 ]] || return
  BUFFER=${res:2}
  CURSOR=${res:0:2}
}

zle -N transpose-words _zle-transpose-args # bound to ESC-t by default

Given that such keys are pressed sparingly, there's really no downside in using

saner language for any non-oneliner stuff, passing code to stdin and any extra

args/values via file descriptors like 3<<<"some value" above.

Opens up a lot of freedom wrt making shell prompt more friendly and avoiding

mouse and copy-pasting to/from there for common tasks like that.
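For checking the swapping logic outside of zle, same thing can be written as a plain function and poked at from a python prompt. This is a standalone restatement under a made-up name (`transpose_last_args`), with the cursor position as an optional argument, and it returns the line unchanged when there are fewer than two arguments instead of failing.

```python
import re

def transpose_last_args(line, pos=None):
    """Swap last two arguments at or before cursor position pos.
    Tokens consisting only of -<>|& chars (redirects, pipes) are skipped."""
    suffix = ''
    if pos is not None and pos > 0:
        n = pos - 1
        if line[n:n+1]:
            # Cursor inside a word - extend the cut to the end of that word
            if line[n:n+1].strip():
                n += len(line[n:].split(None, 1)[0])
            line, suffix = line[:n], line[n:]
    tokens = re.split(r'(\s+)', line)  # whitespace kept as separate tokens
    arg_ns = [
        n for n, tok in enumerate(tokens)
        if tok.strip() and not re.fullmatch(r'[-<>|&]{1,4}', tok)]
    if len(arg_ns) < 2:
        return line + suffix
    a, b = arg_ns[-2], arg_ns[-1]
    tokens[a], tokens[b] = tokens[b], tokens[a]
    return ''.join(tokens) + suffix

print(transpose_last_args('cat /a/file1 > /b/file2'))
```

With cursor somewhere in the middle of the line, only the words up to (and including) the one under it are considered, and the rest is appended back untouched.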

Jan 26, 2019

While Matrix is still gaining traction and is somewhat in flux,

Slack slowly dies in a fire (hopefully!),

Discord seems to be the most popular IRC of the day,

especially in any kind of gaming and non-technical communities.

So it's only fitting to use same familiar IRC clients for the new thing,

even though it doesn't officially support it.

Started using it initially via bitlbee and bitlbee-discord,

but these don't seem to work well for that - you can't just /list or /join

channels, multiple discord guilds aren't handled too well,

some useful features like chat history aren't easily available,

(surprisingly common) channel renames are constant pain,

hard to debug, etc - and worst of all, it just kept losing my messages,

making some conversations very confusing.

So given relatively clear protocols for both, wrote my own proxy-ircd bridge

eventually - https://github.com/mk-fg/reliable-discord-client-irc-daemon

Which seems to address all things that annoyed me in bitlbee-discord nicely,

as obviously that was the point of implementing whole thing too.

Being a single dependency-light (only aiohttp for discord websockets) python3

script, should be much easier to tweak and test to one's liking.

Quite proud of all debug and logging stuff there in particular,

where you can easily get full debug logs from code and full protocol logs

of irc, websocket and http traffic at the moment of any issue

and understand, find and fix it easily.

Hopefully Matrix will eventually get to be more common,

as it - being more open and dev-friendly - seems to have plenty of nice GUI clients,

which are probably better fit for all the new features that it has over good old IRC.

Jan 10, 2019

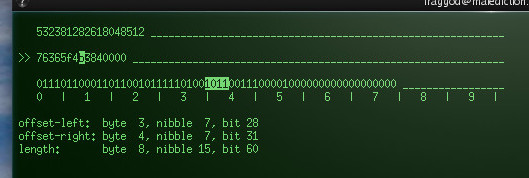

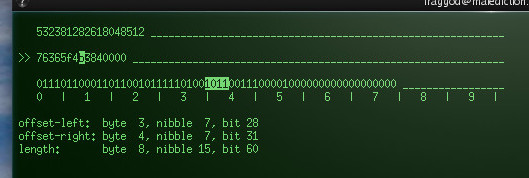

Really simple curses-based tool which I've been kinda-missing for years:

There's probably something similar built into any decent reverse-engineering

suite or hex editor (though ghex doesn't have one!), but don't use either much,

don't want to start either to quickly check mental math, and bet GUI conventions

will work against how this should work ideally.

Converts between dec/hex/binary and helps to visualize all three at the same

time, as well as how flipping bits or digits affects them all.
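Core of such conversions is tiny, which is part of why a dedicated GUI feels excessive. A rough python sketch of the idea follows (not code from the tool itself, and `views`, `parse` and `flip_bit` are made-up names):

```python
def views(n):
    """Return decimal, hex and binary representations of the same integer."""
    return f'{n:d}', f'{n:#x}', f'{n:#b}'

def parse(text):
    """Parse a number, with base auto-detected from 0x / 0b / 0o prefix."""
    return int(text, 0)

def flip_bit(n, bit):
    """Toggle a single bit, counting from 0 at the least-significant end."""
    return n ^ (1 << bit)

print(views(flip_bit(parse('0b1010'), 0)))
```

Everything else in a tool like that is display and input handling for the three synchronized fields.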

Nov 10, 2018

These days D-Bus is used in a lot of places, on both desktop machines where it

started, and servers too, with e.g. systemd APIs all over the place.

Never liked bindings offered for it in python though, as it's an extra half-C

lib to worry about, and one that doesn't work too well either (had a lot of

problems with it in earlier pulseaudio-mixer-cli script iterations).

But with systemd and its sd-bus everywhere, there's no longer a need for such

extra lib, as its API is very easy to use via ctypes, e.g.:

import os, ctypes as ct

class sd_bus(ct.Structure): pass
class sd_bus_error(ct.Structure):
  _fields_ = [ ('name', ct.c_char_p),
    ('message', ct.c_char_p), ('need_free', ct.c_int) ]
class sd_bus_msg(ct.Structure): pass
lib = ct.CDLL('libsystemd.so')

def run(call, *args, sig=None, check=True):
  func = getattr(lib, call)
  if sig: func.argtypes = sig
  res = func(*args)
  if check and res < 0: raise OSError(-res, os.strerror(-res))
  return res

bus = ct.POINTER(sd_bus)()
run( 'sd_bus_open_system', ct.byref(bus),
  sig=[ct.POINTER(ct.POINTER(sd_bus))] )

error = sd_bus_error()
reply = ct.POINTER(sd_bus_msg)()
try:
  run( 'sd_bus_call_method',
    bus,
    b'org.freedesktop.systemd1',
    b'/org/freedesktop/systemd1',
    b'org.freedesktop.systemd1.Manager',
    b'Dump',
    ct.byref(error),
    ct.byref(reply),
    b'',
    sig=[
      ct.POINTER(sd_bus),
      ct.c_char_p, ct.c_char_p, ct.c_char_p, ct.c_char_p,
      ct.POINTER(sd_bus_error),
      ct.POINTER(ct.POINTER(sd_bus_msg)),
      ct.c_char_p ] )
  dump = ct.c_char_p()
  run( 'sd_bus_message_read', reply, b's', ct.byref(dump),
    sig=[ct.POINTER(sd_bus_msg), ct.c_char_p, ct.POINTER(ct.c_char_p)] )
  print(dump.value.decode())
finally: run('sd_bus_flush_close_unref', bus, check=False)

Which can be a bit more verbose than dbus-python API, but only needs libsystemd,

which is already there on any modern systemd-enabled machine.

It's surprisingly easy to put/parse any amount of arrays, maps or any kind of

variant data there, as sd-bus has convention of how each one is serialized to a

flat list of values.

For instance, "as" (array of strings) would expect an int count with

corresponding number of strings following it, or just NULL for empty array, with

no complicated structs or interfaces.

Same thing for maps and variants, where former just have keys/values in a row

after element count, and latter is a type string (e.g. "s") followed by value.
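To illustrate that flattening convention, here is a small sketch of helpers that turn python values into the flat argument lists described above, for string-only containers. These are made-up helper names (nothing like that is in libsystemd), they only cover non-empty "as", "a{ss}" and a string-holding "v", and the resulting list is what one would pass as trailing varargs after the type-signature string.

```python
def flat_str_array(items):
    """'as' - element count followed by that many strings."""
    return [len(items)] + [s.encode() for s in items]

def flat_str_dict(mapping):
    """'a{ss}' - element count followed by key, value, key, value, ..."""
    flat = [len(mapping)]
    for k, v in mapping.items():
        flat += [k.encode(), v.encode()]
    return flat

def flat_str_variant(value):
    """'v' holding a string - type string 's' followed by the value."""
    return [b's', value.encode()]

print(flat_str_array(['foo', 'bar']))
```

With ctypes, each int/bytes value in such a list would still need a matching entry in the argtypes/sig list, same as in the Dump example above.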

Example of using a more complicated desktop notification interface, which has

all of the above stuff can be found here:

https://github.com/mk-fg/fgtk/blob/4eaa44a/desktop/notifications/power#L22-L104

Whole function is a libsystemd-only drop-in replacement for a bunch of crappy

modules which provide that on top of libdbus.

sd-bus api is relatively new, but really happy that it exists in systemd now,

given how systemd itself uses dbus all over the place.

Few script examples using it:

For small system scripts in particular, not needing to install any deps except

python/systemd (which are always there), is definitely quite a good thing.

Sep 06, 2018

It's been bugging me for a while that "find" does not have matches for extended

attributes and posix capabilities on filesystems.

Finally got around to looking for a solution, and there apparently is a recent

patch (Jul 2018), which addresses the issue nicely:

https://www.mail-archive.com/bug-findutils@gnu.org/msg05483.html

It finally allows to easily track modern-suid binaries with capabilities that

allow root access (almost all of them do in one way or another), as well as

occasional ACLs and such, e.g.:

% find /usr/ -type f -xattr .

/usr/bin/rcp

/usr/bin/rsh

/usr/bin/ping

/usr/bin/mtr-packet

/usr/bin/dumpcap

/usr/bin/gnome-keyring-daemon

/usr/bin/rlogin

/usr/lib/gstreamer-1.0/gst-ptp-helper

% getcap /usr/bin/ping

/usr/bin/ping = cap_net_raw+ep

(find -type f -cap . -o -xattr . -o -perm /u=s,g=s to match either of

caps/xattrs/suid-bits, though -xattr . includes all -cap matches already)

These -cap/-xattr flags allow matching stuff by regexps too, so they work for

pretty much any kind of matching.
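Without a patched find, a rough approximation of "-type f -xattr regexp" can be scripted via os.listxattr (Linux-only in python). The sketch below is not related to the patch itself: `find_xattrs` is a made-up name, and the regexp is matched against attribute names only. File capabilities are stored in the "security.capability" xattr, so a pattern like that one should pick those up.

```python
import os, re

def find_xattrs(top, pattern='.', listxattr=None):
    """Return sorted paths of regular files under top that have
    at least one extended attribute with name matching pattern."""
    listxattr = listxattr or getattr(os, 'listxattr', None)
    if listxattr is None:
        raise OSError('os.listxattr is not available on this platform')
    rx, found = re.compile(pattern), []
    for path, dirs, files in os.walk(top):
        for name in files:
            p = os.path.join(path, name)
            if os.path.islink(p):
                continue  # like -type f, skip symlinks
            try:
                names = listxattr(p)
            except OSError:
                continue  # unreadable file or fs without xattr support
            if any(rx.search(x) for x in names):
                found.append(p)
    return sorted(found)
```

E.g. find_xattrs('/usr', r'^security\.capability$') for binaries with capabilities, though it will be much slower than find on large trees. The `listxattr` parameter is only there to allow swapping in a stub for testing.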

Same as with --json in iproute2 and other tools, a very welcome update, which

hopefully will make it into one of the upstream versions eventually.

(but probably not very soon, since last findutils-4.6.0 release is from 2015

about 3 years ago, and this patch isn't even merged to current git yet)

Easy to use now on Arch though, as there's findutils-git in AUR, which only

needs a small patch (as of 2101eda) to add all this great stuff -

e.g. findutils-git-2101eda-xattrs.patch (includes xattrs.patch itself too).

It also applies with minor changes to non-git findutils version, but as these

are from back in 2015 atm, it's a bit PITA to build them already due to various

auto-hell and gnulib changes.

Aug 14, 2018

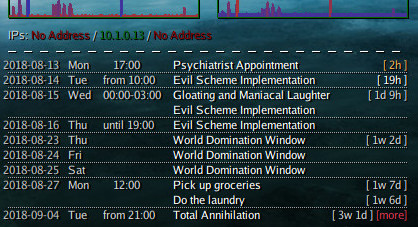

Basically converting some free-form .rst (as in ReST or reStructuredText) like this:

Minor chores

------------

Stuff no one ever remembers doing.

- Pick up groceries

:ts: 2018-08-27 12:00

Running low on salt, don't forget to grab some.

- Do the laundry

:ts: every 2w interval

That pile in the corner across the room.

Total Annihilation

------------------

:ts-start: 2018-09-04 21:00

:ts-end: 2018-09-20 21:00

For behold, the LORD will come in fire And His chariots like the whirlwind,

To render His anger with fury, And His rebuke with flames of fire. ... blah blah

Into this:

Though usually with a bit less theatrical evil and more useful events you don't

want to use precious head-space to always keep track of.

Emacs org-mode is the most praised and common solution for this (well, in

non-web/gui/mobile world, that is), but never bothered to learn its special syntax,

and don't really want such basic thing to be emacs/elisp-specific

(like, run emacs to parse/generate stuff!).

rst, on the other hand, is an old friend (ever since I found markdown to be too

limiting and not blocky enough), supports embedding structured data in there

(really like how github highlights it btw - check out cal.rst example here),

and super-easy to work with in anything, not just emacs, and can be parsed by

a whole bunch of stuff.
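As a rough illustration of how little is needed to pull such fields out of rst, here is a regexp-based python sketch. It is not the parser used in the project (a proper rst parser would be more robust), `ts_fields` is a made-up name, and it only knows about dash-underlined headings, "- item" bullets and :ts:/:ts-start:/:ts-end: fields.

```python
import re

def ts_fields(rst_text):
    """Return list of (title, field, value) tuples for ts fields,
    with title being the closest preceding heading or list item."""
    found, title, prev = [], None, ''
    for raw in rst_text.splitlines():
        line = raw.strip()
        if re.fullmatch(r'-{3,}', line) and prev:
            title = prev  # previous line was a heading underlined with dashes
        else:
            item = re.match(r'-\s+(.+)', line)
            field = re.match(r':(ts(?:-start|-end)?):\s*(.+)', line)
            if item:
                title = item.group(1)
            elif field:
                found.append((title, field.group(1), field.group(2)))
        prev = line
    return found
```

Parsing the timestamp values themselves (absolute dates vs "every 2w interval" and such) would be the next step, and is where most of the actual work is.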

Project on github: mk-fg/rst-icalendar-event-tracker

Should be zero-setup, light on dependencies and very easy to use.

Has simple iCalendar export for anything else, but with conky in particular,

its generated snippet can be included via ${catp /path/to/output} directive,

and be configured wrt columns/offsets/colors/etc both on script-level

via -o/--conky-params option and per-event via :conky: <opts> rst tags.

Calendar like that is not only useful if you wear suits, but also to check on all

these cool once-per-X podcasts, mark future releases of some interesting games

or movies, track all the monthly bills and chores that are easy to forget about.

And it feels great to not be afraid to forget or miss all this stuff anymore.

In fact, having an excuse to structure and write it down helps a ton already.

But beyond that, feel like using transient and passing reminders can be just as

good for tracking updates on some RSS feeds or URLs, so that you can notice

update and check it out if you want to, maybe even mark as a todo-entry somewhere,

but it won't hang over the head by default (and forever) in an inbox,

feed reader or a large un-*something* number in bold someplace else.

So plan to add url-grepping and rss-checking as well, and have updates on

arbitrary pages or feeds create calendar events there, which should at least be

useful for aforementioned media periodics.

In fact, rst-configurable feed-checker (not "reader"!) might be a much better

idea even for text-based content than some crappy web-based django monstrosity

like the one I used before.

Aug 09, 2018

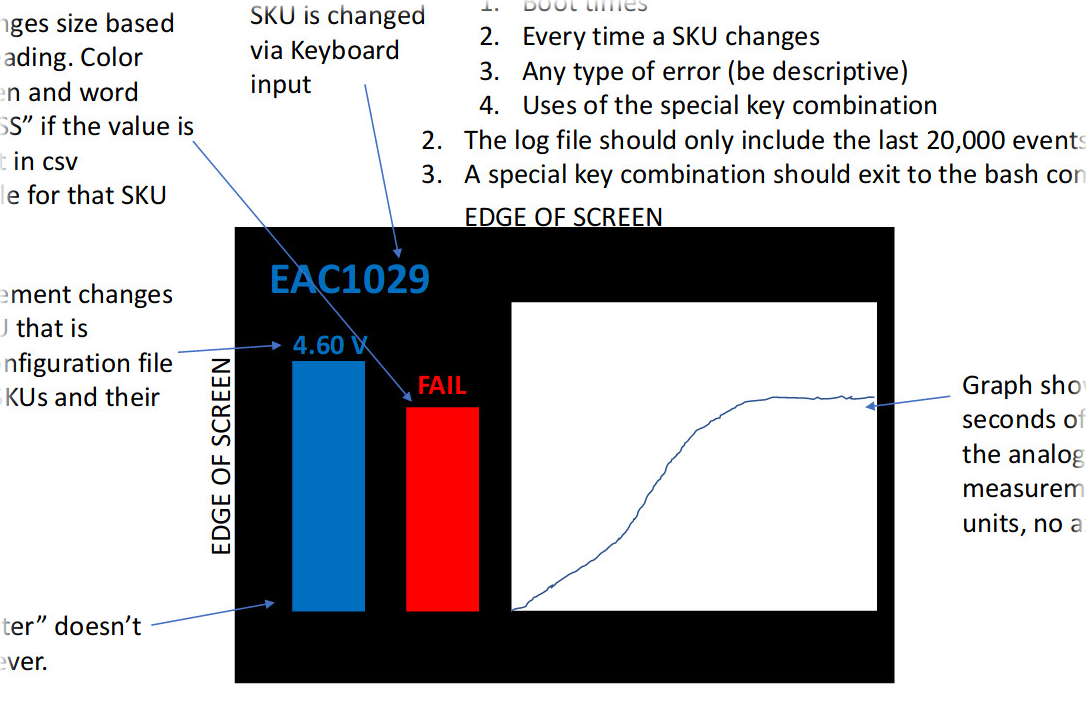

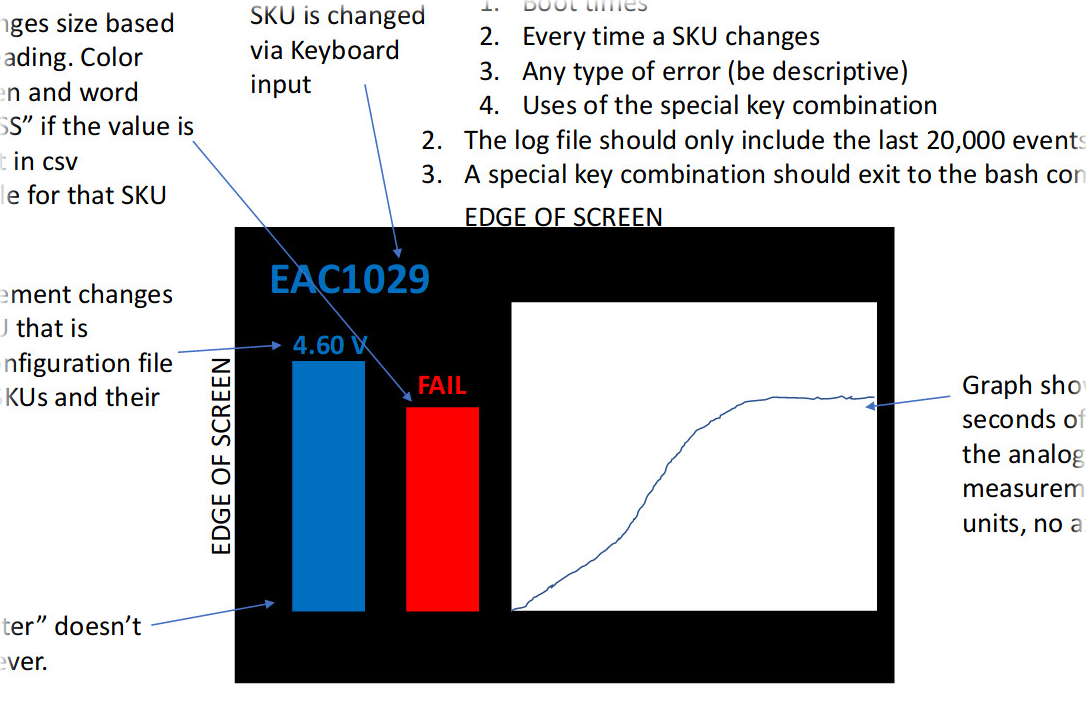

Implementing rather trivial text + chart UI with RPi recently,

was surprised that it's somehow still not very straightforward in 2018.

Basic idea is to implement something like this:

Which is basically three text labels and three rectangles, with something like

1/s updates, so nothing that'd require 30fps 3D rendering loop or performance of

C or Go, just any most basic 2D API and python should work fine for it.

Plenty of such libs/toolkits on top of X/Wayland and similar stuff, but that's a

ton of extra layers of junk and jank instead of few trivial OpenVG calls,

with only quirk of being able to render scaled text labels.

There didn't seem to be anything python that looks suitable for the task, notably:

- ajstarks/openvg - great simple OpenVG wrapper lib, but C/Go only, has

somewhat unorthodox unprefixed API, and seems to be abandoned these days.

- povg - rather raw OpenVG ctypes wrapper, looks ok, but rendering fonts there

would be a hassle.

- libovg - includes py wrapper, but seems to be long-abandoned and has broken

text rendering.

- pi3d - for 3D graphics, so quite a different beast, rather excessive and

really hard-to-use for static 2D UIs.

- Qt5 and cairo/pango - both support OpenVG, but have excessive dependencies,

with cathedral-like ecosystems built around them (qt/gtk respectively).

- mgthomas99/easy-vg - updated fork of ajstarks/openvg, with proper C-style

namespacing, some fixes and repackaged as a shared lib.

So with no good python alternative, last option of just wrapping dozen .so

easy-vg calls via ctypes seemed to be a good-enough solution,

with ~100-line wrapper for all calls there (evg.py in mk-fg/easy-vg).

With that, rendering code for all above UI ends up being as trivial as:

evg.begin()

evg.background(*c.bg.rgb)

evg.scale(x(1), y(1))

# Bars

evg.fill(*c.baseline.rgba)

evg.rect(*pos.baseline, *sz.baseline)

evg.fill(*c[meter_state].rgba)

evg.rect(*pos.meter, sz.meter.x, meter_height)

## Chart

evg.fill(*c.chart_bg.rgba)

evg.rect(*pos.chart, *sz.chart)

if len(chart_points) > 1:
  ...
  arr_sig = evg.vg_float * len(cp)
  evg.polyline(*(arr_sig(*map(
    op.attrgetter(k), chart_points )) for k in 'xy'), len(cp))

## Text

evg.scale(1/x(1), 1/y(1))

text_size = dxy_scaled(sz.bar_text)

evg.fill(*(c.sku.rgba if not text.sku_found else c.sku_found.rgba))

evg.text( x(pos.sku.x), y(pos.sku.y),

text.sku.encode(), None, dxy_scaled(sz.sku) )

...

(note: "*stuff" are not C pointers, but python's syntax for "explode value list")

That code can start straight-up after local-fs.target with only dependency being

easy-vg's libshapes.so to wrap OpenVG calls to RPi's /dev/vc*, and being python,

use all the nice stuff like gpiozero, succinct and no-brainer to work with.

Few additional notes on such over-the-console UIs:

RPi's VC4/DispmanX has great "layers" feature, where multiple apps can display

different stuff at the same time.

This allows to easily implement e.g. splash screen (via some simple/quick

10-liner binary) always running underneath UI, hiding any noise and

providing more graceful start/stop/crash transitions.

Can even be used to play some dynamic video splash or logos/animations

(via OpenMAX API and omxplayer) while main app/UI initializing/running

underneath it.

(wrote a bit more about this in an earlier Raspberry Pi early boot splash /

logo screen post here)

If keyboard/mouse/whatever input have to be handled, python-evdev + pyudev

are great and simple way to do it (also mentioned in an earlier post),

and generally easier to use than a11y layers that some GUI toolkits provide.

systemctl disable getty@tty1 to not have it spammed with whatever input is

intended for the UI, as otherwise it'll still be running under the graphics.

Should UI app ever need to drop user back to console (e.g. via something like

ExecStart=/sbin/agetty --autologin root tty1), it might be necessary to

scrub all the input from there first, which can be done by using

StandardInput=tty in the app and something like the following snippet:

import sys, signal

if sys.stdin.isatty():
  import termios, atexit
  signal.signal(signal.SIGHUP, signal.SIG_IGN)
  atexit.register(termios.tcflush, sys.stdin.fileno(), termios.TCIOFLUSH)

It'd be outright dangerous to run shell with some random input otherwise.

While it's neat single quick-to-start pid on top of bare init, it's probably

not suitable for more complex text/data layouts, as positioning and drawing

all the "nice" UI boxes for that can be a lot of work and what widget toolkits

are there for.

Kinda expected that RPi would have some python "bare UI" toolkit by now, but oh

well, it's not that difficult to make one by putting stuff linked above together.

In future, mgthomas99/easy-vg seems to be moving away from simple API it

currently has, based more around paths like raw OpenVG or e.g. cairo in

"develop" branch already, but there should be my mk-fg/easy-vg fork retaining

old API as it is demonstrated here.

Aug 05, 2018

Last reviewed my backup setup about 10 years ago (p2, p3),

and surprisingly not much changed since then - still same ssh and rsync

for secure sync to a remote host over the network.

Stable-enough btrfs makes --link-dest somewhat anachronistic, and rsync filters

came a long way since then, but everything else is the same, so why not use this

opportunity to make things simpler and smoother...

In particular, it was always annoying to me that backups either had to be pulled

from some open and accessible port or pushed to same thing on the backup server,

which isn't hard to fix with "ssh -R" tunnels - that allows backup server to

have locked-down and reasonably secure ssh port open at most, yet provides no

remote push access (i.e. no overwriting whatever remote wants to, like simple

rsyncd setup would do), and does everything through just a single outgoing ssh

connection.

That is, run "rsync --daemon" on localhost, make reverse-tunnel to it when

connecting to backup host and let it pull from that.

On top of being no-brainer to implement and use - as simple as ssh from behind

however many NATs - it avoids following (partly mentioned) problematic things:

- Pushing stuff to backup-host, which can be exploited to delete stuff.

- Using insecure network channels and/or rsync auth - ssh only.

- Having any kind of insecure auth or port open on backup-host (e.g. rsyncd) - ssh only.

- Requiring backed-up machine to be accessible on the net for backup-pulls - can

be behind any amount of NAT layers, and only needs one outgoing ssh connection.

- Specifying/handling backup parameters (beyond --filter lists), rotation and

cleanup on the backed-up machine - backup-host will handle all that in a

known-good and uniform manner.

- Running rsyncd or such with unrestricted fs access "for backups" - only

runs it on localhost port with one-time auth for ssh connection lifetime,

restricted to specified read-only path, with local filter rules on top.

- Needing anything beyond basic ssh/rsync/python on either side.

Actual implementation I've ended up with is ssh-r-sync + ssh-r-sync-recv scripts

in fgtk repo, both being rather simple py3 wrappers for ssh/rsync stuff.

Both can be used by regular uid's, and can use rsync binary with capabilities or

sudo wrapper to get 1-to-1 backup with all permissions instead of --fake-super

(though note that giving root-rsync access to uid is pretty much same as "NOPASSWD: ALL" sudo).

One relatively recent realization (coming from acme-cert-tool) compared to

scripts I wrote earlier, is that using bunch of script hooks all over the place

is way easier than hardcoding a dozen ad-hoc options.

I.e. have option group like this (-h/--help output from argparse):

Hook options:

-x hook:path, --hook hook:path

Hook-script to run at the specified point.

Specified path must be executable (chmod +x ...),

will be run synchronously, and must exit with 0

for tool to continue operation, non-0 to abort.

Hooks are run with same uid/gid

and env as the main script, can use PATH-lookup.

See --hook-list output to get full list of

all supported hook-points and arguments passed to them.

Example spec: -x rsync.pre:~/hook.pre-sync.sh

--hook-timeout seconds

Timeout for waiting for hook-script to finish running,

before aborting the operation (treated as hook error).

Zero or negative value will disable timeout. Default: no-limit

--hook-list

Print the list of all supported

hooks with descriptions/parameters and exit.

And --hook-list providing full attached info like:

Available hook points:

script.start:

Before starting handshake with authenticated remote.

args: backup root dir.

...

rsync.pre:

Right before backup-rsync is started, if it will be run.

args: backup root dir, backup dir, remote name, privileged sync (0 or 1).

stdout: any additional \0-separated args to pass to rsync.

These must be terminated by \0, if passed,

and can start with \0 to avoid passing any default options.

rsync.done:

Right after backup-rsync is finished, e.g. to check/process its output.

args: backup root dir, backup dir, remote name, rsync exit code.

stdin: interleaved stdout/stderr from rsync.

stdout: optional replacement for rsync return code, int if non-empty.

Hooks are run synchronously,

waiting for subprocess to exit before continuing.

All hooks must exit with status 0 to continue operation.

Some hooks get passed arguments, as mentioned in hook descriptions.

Setting --hook-timeout (defaults to no limit)

can be used to abort when hook-scripts hang.

Very trivial to implement and then allows to hook much simpler single-purpose

bash scripts handling specific stuff like passing extra options on per-host basis,

handling backup rotation/cleanup and --link-dest,

creating "backup-done-successfully" mark and manifest files, or whatever else,

without needing to add all these corner-cases into the main script.
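Hook handling like that can be sketched in python roughly as follows. This is a simplified illustration and not the code from ssh-r-sync scripts: `parse_hook_spec` and `run_hook` are made-up names, and what to do with hook stdout (e.g. splitting it on \0 for rsync.pre) is left to the caller.

```python
import os, subprocess

def parse_hook_spec(spec):
    """Split 'hook:path' spec into (hook name, path with ~ expanded)."""
    name, sep, path = spec.partition(':')
    if not (name and sep and path):
        raise ValueError(f'expected hook:path, got {spec!r}')
    return name, os.path.expanduser(path)

def run_hook(path, *args, timeout=None, stdin=None):
    """Run hook synchronously with same uid/gid/env, return its raw stdout.
    Non-zero exit or timeout raises RuntimeError to abort the operation."""
    if timeout is not None and timeout <= 0:
        timeout = None  # zero or negative value disables the timeout
    try:
        res = subprocess.run(
            [path, *args], input=stdin,
            stdout=subprocess.PIPE, timeout=timeout, check=True)
    except (subprocess.TimeoutExpired, subprocess.CalledProcessError) as err:
        raise RuntimeError(f'hook failed: {path} ({err})') from err
    return res.stdout
```

Since subprocess.run does a PATH-lookup for bare names and passes env through by default, hooks get same environment as the main script without any extra work.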

One boilerplate thing that looks useful to hardcode though is a "nice ionice ..."

wrapper, which is pretty much inevitable for background backup scripts

(though cgroup limits can also be a good idea), and fairly easy to do in python,

with a minor caveat of a hardcoded ioprio_set syscall number,

but these pretty much never change on linux.
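A minimal sketch of such "nice ionice" wrapper via ctypes is below. It is an illustration and not the code from the scripts: function names are made up, and syscall numbers in the table are ones I believe to be correct for these architectures, so check them against kernel headers for anything important.

```python
import ctypes, os, platform

# ioprio_set syscall numbers per architecture (assumed values, see above)
SYS_IOPRIO_SET = {'x86_64': 251, 'i686': 289, 'armv7l': 314, 'aarch64': 30}
IOPRIO_WHO_PROCESS, IOPRIO_CLASS_SHIFT = 1, 13
IOPRIO_CLASS_BE, IOPRIO_CLASS_IDLE = 2, 3

def ioprio_value(io_class, level=0):
    """Pack io class and priority level into a single ioprio value."""
    return (io_class << IOPRIO_CLASS_SHIFT) | level

def lower_priority(nice=10, io_class=IOPRIO_CLASS_IDLE, level=0):
    """Lower cpu and io priority of the current process (Linux-only)."""
    os.nice(nice)
    nr = SYS_IOPRIO_SET.get(platform.machine())
    if nr is None:
        raise OSError(f'no ioprio_set number for {platform.machine()}')
    libc = ctypes.CDLL(None, use_errno=True)
    # who=0 with IOPRIO_WHO_PROCESS means "calling process"
    if libc.syscall(nr, IOPRIO_WHO_PROCESS, 0, ioprio_value(io_class, level)) != 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
```

Calling lower_priority() early in the script affects everything it runs afterwards, as both values are inherited by child processes like rsync.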

As a side-note, can recommend btrbk as a very nice tool for managing backups

stored on btrfs, even if for just rotating/removing snapshots in an easy and

sane "keep A daily ones, B weekly, C monthly, ..." manner.

[code link: ssh-r-sync + ssh-r-sync-recv scripts]

Apr 16, 2018

EMMS is the best music player out there (at least if you use emacs),

as it allows full power and convenience of proper $EDITOR for music playlists and such.

All mpv backends for it that I'm aware of were restarting player binary for

every track though, which is simple, good compatibility-wise,

but also suboptimal in many ways.

For one thing, stuff like audio visualization is pita if it's in a constantly

created/destroyed transient window, it adds significant gaps between played tracks

(gapless+crossfade? forget it!), and - more due to why player starts/exit

(know when playback ends) - feedback/control over it are very limited,

since clearly no good APIs are used there, if wrapper relies on process exit

as "playback ended" event.

Rewritten emms-player-mpv.el (also in "mpv-json-ipc" branch of emms git

atm) fixes all that.

What's curious is that I didn't foresee almost any of these interesting use-cases,

which using the tool in the sane way allows for, and only wrote new wrapper to

get nice "playback position" feedback and out of petty pedantry over how lazy

simple implementation seems to be.

Having separate persistent player window allows OSD config or lua to display any

kind of metadata or infographics (with full power of lua + mpv + ffmpeg)

about current tracks or playlist stuff there (esp. for online streams),

enables subs/lyrics display, and getting stream of metadata update events from

mpv allows to update any "now playing" meta stuff in emacs/emms too.
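For reference, mpv JSON IPC itself is just newline-terminated JSON objects over a unix socket, so message handling can be sketched in a few lines of python (the emms wrapper does the same in elisp). `ipc_request` and `ipc_parse` are made-up helper names, and socket connection and buffering are left out.

```python
import json

def ipc_request(*command, request_id=None):
    """Encode mpv IPC command like ('observe_property', 1, 'metadata')."""
    msg = {'command': list(command)}
    if request_id is not None:
        msg['request_id'] = request_id
    return json.dumps(msg).encode() + b'\n'

def ipc_parse(line):
    """Decode one line from mpv into ('event', name, data)
    or ('reply', request_id, data) tuple."""
    msg = json.loads(line)
    if 'event' in msg:
        return 'event', msg['event'], msg.get('data')
    return 'reply', msg.get('request_id'), msg.get('data')

print(ipc_request('observe_property', 1, 'metadata'))
```

After sending an observe_property request like that, mpv emits a "property-change" event whenever metadata changes, which is what makes "now playing" updates possible without polling or restarting the player.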

What seemed like a petty and almost pointless project to have fun with lisp,

turned out to be actually useful, which seems to often be the case once you take

a deep-dive into things, and not just blindly assume stuff about them

(fire hot, water wet, etc).

Hopefully might get merged upstream after EMMS 5.0 and get a few more features

and interesting uses like that along the way.

(though I'd suggest not waiting and just adding anything that comes to mind in

~/.emacs via emms-mpv-event-connect-hook, emms-mpv-event-functions and

emms-mpv-ipc-req-send - should be really easy now)