Apr 12, 2018

Didn't know mpv could do that until dropping into raw mpv for music playback

yesterday, while adding its json api support into emms (emacs music player).

One option in mpv that I found essential over time - especially as playback from

network sources via youtube-dl (youtube, twitch and such) became more common -

is --force-window=immediate (via config), so that you can just run "mpv URL" in

whatever console and don't have to wait until video buffers enough for mpv window to pop-up.

This saves a few-to-dozen seconds of annoyance, as otherwise you can't do

anything until the ytdl init and buffering phase is done,

as that's when window will pop-up randomly and interrupt whatever you're doing,

plus maybe get affected by stuff being typed at the moment

(and close, skip, seek or get all messed-up otherwise).

It's easy to disable this unnecessary window for audio-only files via lua,

but other option that came to mind when looking at that black square is to

send it to aux display with some nice visualization running.

Which is not really an mpv feature, but one of the many things that ffmpeg can

render with its filters, enabled via --lavfi-complex audio/video filtering option.

E.g. mpv --lavfi-complex="[aid1]asplit[ao][a]; [a]showcqt[vo]" file.mp3 will

process a copy of --aid=1 audio stream (one copy goes straight to "ao" - audio output)

via ffmpeg showcqt filter and send resulting visualization to "vo" (video output).

As ffmpeg is designed to allow many complex multi-layered processing pipelines,

extending on that simple example can produce really fancy stuff, like any blend

of images, text and procedurally-generated video streams.

Some nice examples of those can be found at ffmpeg wiki FancyFilteringExamples page.

It's much easier to build, control and tweak that stuff from lua though,

e.g. to only enable such vis if there is a blank forced window without a video stream,

and to split those long pipelines into more sensible chunks of parameters, for example:

    local filter_bg = lavfi_filter_string{
      'firequalizer', {
        gain = "'20/log(10)*log(1.4884e8"
          .."* f/(f*f + 424.36)"
          .."* f/(f*f + 1.4884e8)"
          .."* f/sqrt(f*f + 25122.25) )'",
        accuracy = 1000,
        zero_phase = 'on' },
      'showcqt', {
        fps = 30,
        size = '960x768',
        count = 2,
        bar_g = 2,
        sono_g = 4,
        bar_v = 9,
        sono_v = 17,
        font = "'Luxi Sans,Liberation Sans,Sans|bold'",
        fontcolor = "'st(0, (midi(f)-53.5)/12);"
          .."st(1, 0.5 - 0.5 * cos(PI*ld(0))); r(1-ld(1)) + b(ld(1))'",
        tc = '0.33',
        tlength = "'st(0,0.17);"
          .."384*tc/(384/ld(0)+tc*f/(1-ld(0)))"
          .." + 384*tc/(tc*f/ld(0)+384/(1-ld(0)))'" } }

    local filter_fg = lavfi_filter_string{ 'avectorscope',
      { mode='lissajous_xy', size='960x200',
        rate=30, scale='cbrt', draw='dot', zoom=1.5 } }

    local overlay = lavfi_filter_string{'overlay', {format='yuv420'}}

    local lavfi =
      '[aid1] asplit=3 [ao][a1][a2];'
      ..'[a1]'..filter_bg..'[v1];'
      ..'[a2]'..filter_fg..'[v2];'
      ..'[v1][v2]'..overlay..'[vo]'

    mp.set_property('options/lavfi-complex', lavfi)

Much easier than writing something like this down into one line.

("lavfi_filter_string" there concatenates all passed options with comma/colon

separators, as per ffmpeg syntax)
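
To illustrate what such a helper does, here is a rough Python equivalent. The real one is Lua and lives in the script linked below, so the function body, argument format and lack of escaping here are assumptions made for the example, not a copy of that code:

```python
def lavfi_filter_string(spec):
    '''Build ffmpeg filter-chain string from [name, opts, name, opts, ...].
    Filters are joined with commas, options with colons (ffmpeg syntax).
    Hypothetical re-implementation, values are used as-is without escaping.'''
    if len(spec) % 2: raise ValueError('Expected name/options pairs')
    filters = list()
    for name, opts in zip(spec[::2], spec[1::2]):
        opts = ':'.join('{}={}'.format(k, v) for k, v in opts.items())
        filters.append('{}={}'.format(name, opts) if opts else name)
    return ','.join(filters)

fg = lavfi_filter_string([ 'avectorscope',
    dict(mode='lissajous_xy', size='960x200', rate=30) ])
graph = '[aid1] asplit [ao][a]; [a]' + fg + '[vo]'
print(graph)
```

Resulting string is what would go into --lavfi-complex, with stream labels like [aid1] and [vo] still added by hand around each chain.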

Complete lua script that I ended-up writing for this: fg.lavfi-audio-vis.lua

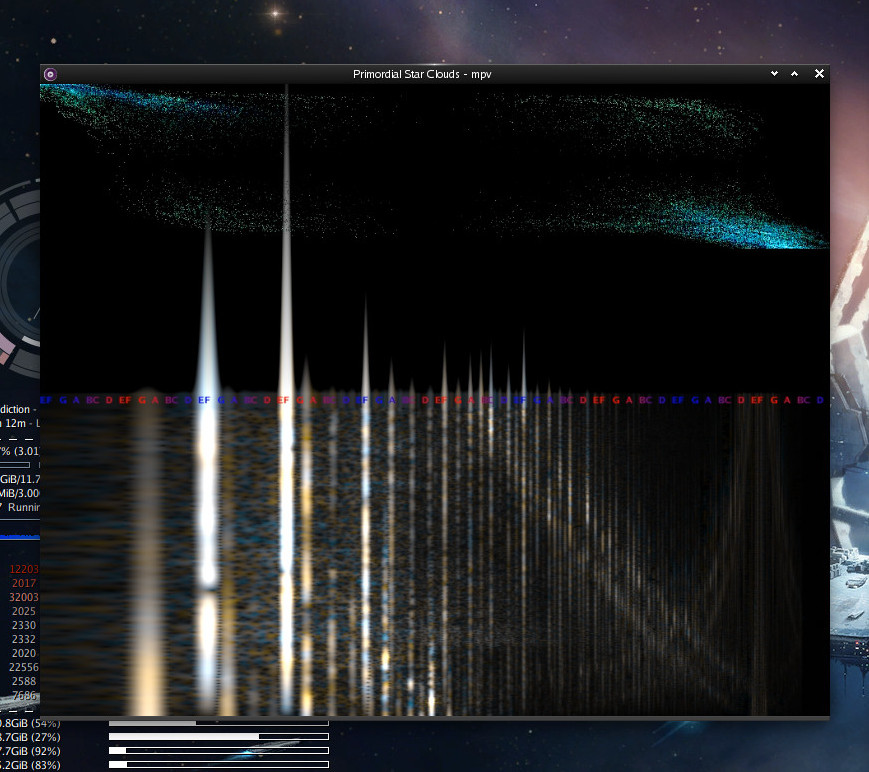

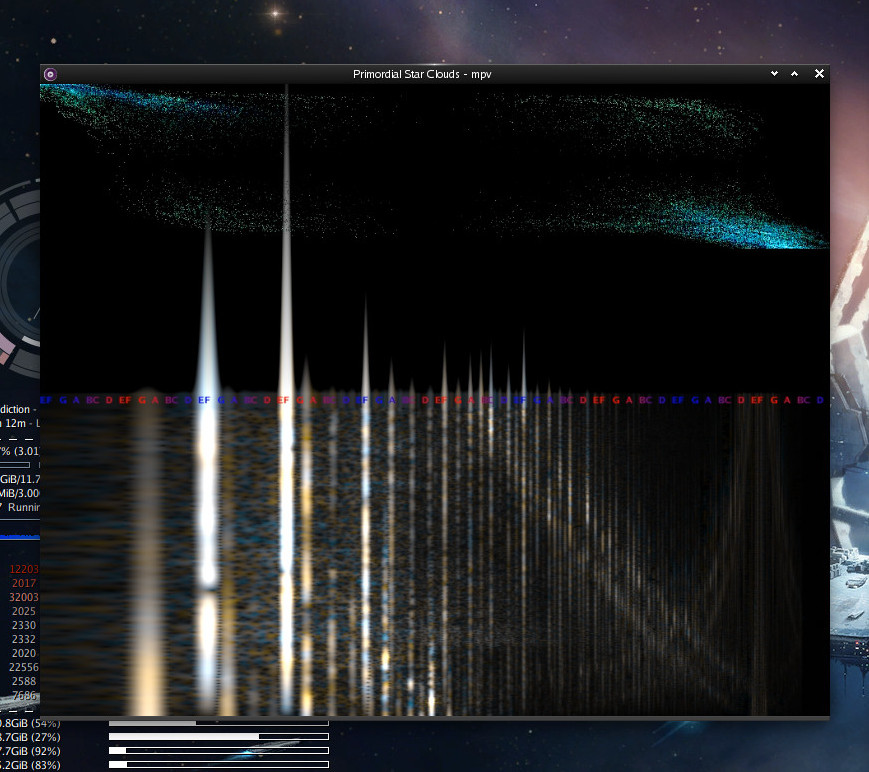

With some grand space-ambient electronic score, showcqt waterfall can move in

super-trippy ways, very much representative of the glacial underlying audio rhythms:

(track in question is "Primordial Star Clouds" [45] from EVE Online soundtrack)

Script won't kick-in with --vo=null, --force-window not enabled, or if "vo-configured"

won't be set by mpv for whatever other reason (e.g. some video output error),

otherwise will be there with more pretty colors to brighten your day :)

Apr 10, 2018

It's been a mystery to me for a while how X terminal emulators (from xterm to

Terminology) manage to copy long bits of strings spanning multiple lines without

actually splitting them with \n chars, given that there's always something like

"screen" or "tmux" or even "mosh" running in there.

All these use ncurses which shouldn't output "long lines of text" but rather

knows width/height of a terminal and flips specific characters there when last

output differs from its internal "how it should be" state/buffer.

Regardless of how this works, terminals definitely get confused sometimes,

making copy-paste of long paths and commands from them into a minefield,

where you never know if it'll insert full path/command or just run random parts

of it instead by emitting newlines here and there.

Easy fix: bind a key combo in a WM to always "copy stuff as a single line".

Bonus points - also strip spaces from start/end, demanding no select-precision.

Even better - have it expose result as both primary and clipboard, to paste anywhere.
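
The string-mangling part of that is tiny, and can be sketched in python like this. Function name and the strip flag are made up for illustration, and fetching/setting X selections (the hard part) is left out entirely:

```python
def to_single_line(text, strip=True):
    '''Drop all line breaks from selection text, optionally trimming
    leading/trailing whitespace, so that sloppy selections paste cleanly.'''
    text = text.replace('\r', '').replace('\n', '')
    return text.strip() if strip else text

# Path that got wrapped over three terminal lines, selected with extra spaces
print(repr(to_single_line('  /some/long/pa\nth/wrapped/by/termi\nnal \n')))
```

With strip=False this would correspond to a "verbatim" single-line copy, keeping leading/trailing spaces as they were selected.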

For a while used a trivial bash script for that, which did "xclip -out" from

primary selection, some string-mangling in bash and two "xclip -in" to put

result back into primary and clipboard.

It's a surprisingly difficult and suboptimal task for bash though, as - to my

knowledge - you can't even replace \n chars in it without running something

like "tr" or "sed".

And running xclip itself a few times is bad enough, but with a few extra

binaries and under CPU load, such "clipboard keys" become unreliable due to lag

from that script.

Hence finally got fed up with it and rewrote the whole thing in C as a small

and fast 300-liner exclip tool, largely based on xclip code.

Build like this: gcc -O2 -lX11 -lXmu exclip.c -o exclip && strip exclip

Found something like it bound to a key (e.g. Win+V for verbatim copy, and

variants like Win+Shift+V for stripping spaces/newlines) to be super-useful

when using terminals and text-based apps, apps that mix primary/clipboard

selections, etc - all without needing to touch the mouse.

Tool is still non-trivial due to how selections and interaction with X work -

code has to be event-based, negotiate content type that it wants to get,

can have large buffers sent in incremental events, and then have to hold these

(in a forked subprocess) and negotiate sending to other apps - i.e. not just

stuff N bytes from buffer somewhere server-side and exit

("cut buffers" can work like that in X, but limited and never used).

Looking at all these was a really fun dive into how such deceptively-simple

(but ancient and not in fact simple at all) things like "clipboard" work.

E.g. consider how one'd hold/send/expose stuff from huge GIMP image selection

and paste it into an entirely different app (xclip -out -t TARGETS can give

a hint), especially with X11 and its network transparency.

Though then again, maybe humble string manipulation in C is just as fascinating,

given all the pointer juggling and tricks one'd usually have to do for that.

Nov 27, 2017

It's one thing that's non-trivial to get right with simple scripts.

I.e. how to check address on an interface? How to wait for it to be assigned?

How to check gateway address? Etc.

Few common places to look for these things:

/sys/class/net/

Has easily-accessible list of interfaces and a MAC for each in

e.g. /sys/class/net/enp1s0/address (useful as a machine id sometimes).

Pros: easy/reliable to access from any scripts.

Cons: very little useful info there.
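
A minimal sketch of reading these from python. The function name and the sysfs_net parameter are hypothetical, the latter is only there so that it can be pointed at a test directory:

```python
import os

def iface_macs(sysfs_net='/sys/class/net'):
    '''Return {iface: mac} from sysfs, skipping entries without readable address.'''
    macs = dict()
    for iface in sorted(os.listdir(sysfs_net)):
        try:
            with open(os.path.join(sysfs_net, iface, 'address')) as src:
                macs[iface] = src.read().strip()
        except (IOError, OSError): continue # plain files, vanished ifaces, etc
    return macs

if os.path.isdir('/sys/class/net'): print(iface_macs())
```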

/usr/lib/systemd/systemd-networkd-wait-online

As systemd-networkd is a common go-to network management tool these days,

this one complements it very nicely.

Allows to wait until some specific interface(s) (or all of them) get fully

setup, has built-in timeout option too.

E.g. just run systemd-networkd-wait-online -i enp1s0 from any script or even

ExecStartPre= of a unit file and you have it waiting for net to be available

reliably, no need to check for specific IP or other details.

Pros: super-easy to use from anywhere, even ExecStartPre= of unit files.

Cons: for one very specific (but very common) all-or-nothing use-case.

Doesn't always work for interfaces that need complicated setup by an extra

daemon, e.g. wifi, batman-adv or some tunnels.

Also, undocumented caveat: use ExecStartPre=-/.../systemd-networkd-wait-online ...

("=-" is the important bit) for anything that should start regardless of network

issues, as thing can exit with non-0 sometimes when there's no network for a

while (which does look like a bug, and might be fixed in future versions).

Update 2019-01-12: systemd-networkd 236+ also has RequiredForOnline=,

which allows to configure which ifaces global network.target should wait for

right in the .network files, if tracking only one state is enough.
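
Outside of unit files, same "wait, but proceed regardless" logic can be sketched as a python wrapper. Binary path is the one from above, the function names and default timeout are assumptions, and only the -i/--interface and --timeout options are used:

```python
import subprocess

WAIT_ONLINE = '/usr/lib/systemd/systemd-networkd-wait-online'

def wait_online_cmd(ifaces=(), timeout=30):
    '''Build command line, waiting for specific ifaces (or all of them if empty).'''
    cmd = [WAIT_ONLINE, '--timeout={}'.format(int(timeout))]
    for iface in ifaces: cmd.append('--interface={}'.format(iface))
    return cmd

def wait_online(ifaces=(), timeout=30):
    '''Return True if network came up, False on timeout or any error.
    Never raises on non-zero exit, similar to "=-" prefix in ExecStartPre=.'''
    try: return subprocess.call(wait_online_cmd(ifaces, timeout)) == 0
    except OSError: return False # binary missing or not executable

# Usage: wait_online(['enp1s0'], timeout=60), then start regardless of result
```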

iproute2 json output

If iproute2 is recent enough (4.13.0-4.14.0 and above), then GOOD NEWS!

ip-address and ip-link there have -json output.

(as well as "tc", stat-commands, and probably many other things in later releases)

Parsing ip -json addr or ip -json link output is trivial anywhere except

for sh scripts, so it's a very good option if required parts of "ip" are

jsonified already.

Pros: easy to parse, will be available everywhere in the near future.

Cons: still very recent, so quite patchy and not ubiquitous yet, not for sh scripts.
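
For example, a short sketch of grabbing addresses from that json in python. Key names ("ifname", "addr_info", "local", "prefixlen") are as seen in iproute2 output, but the sample below is heavily trimmed, and function names are made up for the example:

```python
import json, subprocess

def parse_ip_addrs(ip_json):
    '''Map iface name -> list of "addr/prefixlen" from "ip -json addr" output.'''
    ifaces = dict()
    for iface in json.loads(ip_json):
        ifaces[iface['ifname']] = list(
            '{}/{}'.format(a['local'], a['prefixlen'])
            for a in iface.get('addr_info', list()) if 'local' in a )
    return ifaces

def get_ip_addrs():
    'Run "ip" and parse its output, needs iproute2 with -json support.'
    return parse_ip_addrs(subprocess.check_output(['ip', '-json', 'addr']).decode())

sample = '''[{"ifindex": 1, "ifname": "lo", "addr_info": [
    {"family": "inet", "local": "127.0.0.1", "prefixlen": 8},
    {"family": "inet6", "local": "::1", "prefixlen": 128} ]}]'''
print(parse_ip_addrs(sample))
```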

APIs of running network manager daemons

E.g. NetworkManager has nice-ish DBus API (see two polkit rules/pkla snippets

here for how to enable it for regular users), same for wpa_supplicant/hostapd

(see wifi-client-match or wpa-systemd-wrapper scripts), dhcpcd has hooks.

systemd-networkd will probably get DBus API too at some point in the near

future, beyond simple up/down one that systemd-networkd-wait-online already

uses.

Pros: best place to get such info from, can allow some configuration.

Cons: not always there, can be somewhat limited or hard to access.

libc and getifaddrs() - low-level way

Same python has ctypes, so why bother with all the heavy/fragile deps and crap,

when it can use libc API directly?

Drop-in snippet to grab all the IPv4/IPv6/MAC addresses (py2/py3):

    import os, socket, ctypes as ct

    class sockaddr_in(ct.Structure):
        _fields_ = [('sin_family', ct.c_short), ('sin_port', ct.c_ushort), ('sin_addr', ct.c_byte*4)]

    class sockaddr_in6(ct.Structure):
        _fields_ = [ ('sin6_family', ct.c_short), ('sin6_port', ct.c_ushort),
            ('sin6_flowinfo', ct.c_uint32), ('sin6_addr', ct.c_byte * 16) ]

    class sockaddr_ll(ct.Structure):
        _fields_ = [ ('sll_family', ct.c_ushort), ('sll_protocol', ct.c_ushort),
            ('sll_ifindex', ct.c_int), ('sll_hatype', ct.c_ushort), ('sll_pkttype', ct.c_uint8),
            ('sll_halen', ct.c_uint8), ('sll_addr', ct.c_uint8 * 8) ]

    class sockaddr(ct.Structure):
        _fields_ = [('sa_family', ct.c_ushort)]

    class ifaddrs(ct.Structure): pass
    ifaddrs._fields_ = [ # recursive
        ('ifa_next', ct.POINTER(ifaddrs)), ('ifa_name', ct.c_char_p),
        ('ifa_flags', ct.c_uint), ('ifa_addr', ct.POINTER(sockaddr)) ]

    def get_iface_addrs(ipv4=False, ipv6=False, mac=False, ifindex=False):
        if not (ipv4 or ipv6 or mac or ifindex): ipv4 = ipv6 = True
        libc = ct.CDLL('libc.so.6', use_errno=True)
        libc.getifaddrs.restype = ct.c_int
        ifaddr_p = head = ct.pointer(ifaddrs())
        ifaces, err = dict(), libc.getifaddrs(ct.pointer(ifaddr_p))
        if err != 0:
            err = ct.get_errno()
            raise OSError(err, os.strerror(err), 'getifaddrs()')
        while ifaddr_p:
            addrs = ifaces.setdefault(ifaddr_p.contents.ifa_name.decode(), list())
            addr = ifaddr_p.contents.ifa_addr
            if addr:
                af = addr.contents.sa_family
                if ipv4 and af == socket.AF_INET:
                    ac = ct.cast(addr, ct.POINTER(sockaddr_in)).contents
                    addrs.append(socket.inet_ntop(af, ac.sin_addr))
                elif ipv6 and af == socket.AF_INET6:
                    ac = ct.cast(addr, ct.POINTER(sockaddr_in6)).contents
                    addrs.append(socket.inet_ntop(af, ac.sin6_addr))
                elif (mac or ifindex) and af == socket.AF_PACKET:
                    ac = ct.cast(addr, ct.POINTER(sockaddr_ll)).contents
                    if mac:
                        addrs.append('mac-' + ':'.join(
                            map('{:02x}'.format, ac.sll_addr[:ac.sll_halen]) ))
                    if ifindex: addrs.append(ac.sll_ifindex)
            ifaddr_p = ifaddr_p.contents.ifa_next
        libc.freeifaddrs(head)
        return ifaces

    print(get_iface_addrs())

Result is a dict of iface-addrs (presented as yaml here):

enp1s0:

- 192.168.65.19

- fc65::19

- fe80::c646:19ff:fe64:632f

enp2s7:

- 10.0.1.1

- fe80::1ebd:b9ff:fe86:f439

lo:

- 127.0.0.1

- ::1

ve: []

wg:

- 10.74.0.1

Or to get IPv6+MAC+ifindex only - get_iface_addrs(ipv6=True, mac=True,

ifindex=True):

enp1s0:

- mac-c4:46:19:64:63:2f

- 2

- fc65::19

- fe80::c646:19ff:fe64:632f

enp2s7:

- mac-1c:bd:b9:86:f4:39

- 3

- fe80::1ebd:b9ff:fe86:f439

lo:

- mac-00:00:00:00:00:00

- 1

- ::1

ve:

- mac-36:65:67:f7:99:dc

- 5

wg: []

Tend to use this as a drop-in boilerplate/snippet in python scripts that need IP

address info, instead of adding extra deps - libc API should be way more

stable/reliable than these anyway.

Same can be done in any other full-featured scripts, of course, not just python,

but bash scripts are sorely out of luck.

Pros: first-hand address info, stable/reliable/efficient, no extra deps.

Cons: not for 10-liner sh scripts, not much info, bunch of boilerplate code.

libmnl - same way as iproute2 does it

libc.getifaddrs() doesn't provide much info beyond very basic ip/mac addrs and

iface indexes, and the rest should be fetched from kernel via netlink sockets.

libmnl wraps those, and is used by iproute2, so comes out of the box on any

modern linux, so its API can be used in the same way as libc above from

full-featured scripts like python:

    import os, socket, resource, struct, time, ctypes as ct

    class nlmsghdr(ct.Structure):
        _fields_ = [
            ('len', ct.c_uint32),
            ('type', ct.c_uint16), ('flags', ct.c_uint16),
            ('seq', ct.c_uint32), ('pid', ct.c_uint32) ]

    class nlattr(ct.Structure):
        _fields_ = [('len', ct.c_uint16), ('type', ct.c_uint16)]

    class rtmsg(ct.Structure):
        _fields_ = ( list( (k, ct.c_uint8) for k in
                'family dst_len src_len tos table protocol scope type'.split() )
            + [('flags', ct.c_int)] )

    class mnl_socket(ct.Structure):
        _fields_ = [('fd', ct.c_int), ('sockaddr_nl', ct.c_int)]

    def get_route_gw(addr='8.8.8.8'):
        libmnl = ct.CDLL('libmnl.so.0.2.0', use_errno=True)
        def _check(chk=lambda v: bool(v)):
            def _check(res, func=None, args=None):
                if not chk(res):
                    errno_ = ct.get_errno()
                    raise OSError(errno_, os.strerror(errno_))
                return res
            return _check
        libmnl.mnl_nlmsg_put_header.restype = ct.POINTER(nlmsghdr)
        libmnl.mnl_nlmsg_put_extra_header.restype = ct.POINTER(rtmsg)
        libmnl.mnl_attr_put_u32.argtypes = [ct.POINTER(nlmsghdr), ct.c_uint16, ct.c_uint32]
        libmnl.mnl_socket_open.restype = mnl_socket
        libmnl.mnl_socket_open.errcheck = _check()
        libmnl.mnl_socket_bind.argtypes = [mnl_socket, ct.c_uint, ct.c_int32]
        libmnl.mnl_socket_bind.errcheck = _check(lambda v: v >= 0)
        libmnl.mnl_socket_get_portid.restype = ct.c_uint
        libmnl.mnl_socket_get_portid.argtypes = [mnl_socket]
        libmnl.mnl_socket_sendto.restype = ct.c_ssize_t
        libmnl.mnl_socket_sendto.argtypes = [mnl_socket, ct.POINTER(nlmsghdr), ct.c_size_t]
        libmnl.mnl_socket_sendto.errcheck = _check(lambda v: v >= 0)
        libmnl.mnl_socket_recvfrom.restype = ct.c_ssize_t
        libmnl.mnl_nlmsg_get_payload.restype = ct.POINTER(rtmsg)
        libmnl.mnl_attr_validate.errcheck = _check(lambda v: v >= 0)
        libmnl.mnl_attr_get_payload.restype = ct.POINTER(ct.c_uint32)

        if '/' in addr: addr, cidr = addr.rsplit('/', 1)
        else: cidr = 32

        buf = ct.create_string_buffer(min(resource.getpagesize(), 8192))
        nlh = libmnl.mnl_nlmsg_put_header(buf)
        nlh.contents.type = 26 # RTM_GETROUTE
        nlh.contents.flags = 1 # NLM_F_REQUEST
        # nlh.contents.flags = 1 | (0x100|0x200) # NLM_F_REQUEST | NLM_F_DUMP
        nlh.contents.seq = seq = int(time.time())
        rtm = libmnl.mnl_nlmsg_put_extra_header(nlh, ct.sizeof(rtmsg))
        rtm.contents.family = socket.AF_INET

        addr, = struct.unpack('=I', socket.inet_aton(addr))
        libmnl.mnl_attr_put_u32(nlh, 1, addr) # 1=RTA_DST
        rtm.contents.dst_len = int(cidr)

        nl = libmnl.mnl_socket_open(0) # NETLINK_ROUTE
        libmnl.mnl_socket_bind(nl, 0, 0) # nl, 0, 0=MNL_SOCKET_AUTOPID
        port_id = libmnl.mnl_socket_get_portid(nl)
        libmnl.mnl_socket_sendto(nl, nlh, nlh.contents.len)

        addr_gw = None

        @ct.CFUNCTYPE(ct.c_int, ct.POINTER(nlattr), ct.c_void_p)
        def data_ipv4_attr_cb(attr, data):
            nonlocal addr_gw
            if attr.contents.type == 5: # RTA_GATEWAY
                libmnl.mnl_attr_validate(attr, 3) # MNL_TYPE_U32
                addr = libmnl.mnl_attr_get_payload(attr)
                addr_gw = socket.inet_ntoa(struct.pack('=I', addr[0]))
            return 1 # MNL_CB_OK

        @ct.CFUNCTYPE(ct.c_int, ct.POINTER(nlmsghdr), ct.c_void_p)
        def data_cb(nlh, data):
            rtm = libmnl.mnl_nlmsg_get_payload(nlh).contents
            if rtm.family == socket.AF_INET and rtm.type == 1: # RTN_UNICAST
                libmnl.mnl_attr_parse(nlh, ct.sizeof(rtm), data_ipv4_attr_cb, None)
            return 1 # MNL_CB_OK

        while True:
            ret = libmnl.mnl_socket_recvfrom(nl, buf, ct.sizeof(buf))
            if ret <= 0: break
            ret = libmnl.mnl_cb_run(buf, ret, seq, port_id, data_cb, None)
            if ret <= 0: break # 0=MNL_CB_STOP
            break # MNL_CB_OK for NLM_F_REQUEST, don't use with NLM_F_DUMP!!!
        if ret == -1: raise OSError(ct.get_errno(), os.strerror(ct.get_errno()))
        libmnl.mnl_socket_close(nl)

        return addr_gw

    print(get_route_gw())

This specific boilerplate will fetch the gateway IP address to 8.8.8.8 (i.e. to

the internet), used it in systemd-watchdog script recently.

It might look a bit too complex for such apparently simple task at this point,

but allows to do absolutely anything network-related - everything "ip"

(iproute2) does, including configuration (addresses, routes),

creating/setting-up new interfaces ("ip link add ..."), all the querying

(ip-route, ip-neighbor, ss/netstat, etc), waiting and async monitoring

(ip-monitor, conntrack), etc etc...

Pros: can do absolutely anything, directly, stable/reliable/efficient, no extra deps.

Cons: definitely not for 10-liner sh scripts, boilerplate code.

Conclusion

iproute2 with -json output flag should be good enough for most cases where

systemd-networkd-wait-online is not sufficient, esp. if more commands there

(like ip-route and ip-monitor) will support it in the future (thanks to Julien

Fortin and all other people working on this!).

For more advanced needs, it's usually best to query/control whatever network

management daemon or go to libc/libmnl directly.

Oct 11, 2017

Bumped into this issue when running latest mainline kernel (4.13) on ODROID-C2 -

default fb console for HDMI output has to be configured differently there

(and also needs a dtb patch to hook it up).

Old vendor kernels for that have/use a bunch of cmdline options for HDMI -

hdmimode, hdmitx (cecconfig), vout (hdmi/dvi/auto), overscan_*, etc - all

custom and non-mainline.

With mainline DRM (as in Direct Rendering Manager) and framebuffer modules,

video= option seems to be the way to set/force specific output and resolution

instead.

When display is connected on boot, it can work without that if stars align

correctly, but that's not always the case as it turns out - only 1 out of 3

worked that way.

But even if display works on boot, plugging HDMI after boot never works anyway,

and that's the most (only) useful thing for it (debug issues, see logs or kernel

panic backtrace there, etc)!

DRM module (meson_dw_hdmi in case of C2) has its HDMI output info in

/sys/class/drm/card0-HDMI-A-1/, which is where one can check on connected

display, dump its EDID blob (info, supported modes), etc.

cmdline option to force this output to be used with specific (1080p60) mode:

video=HDMI-A-1:1920x1080@60e

More info on this spec is in Documentation/fb/modedb.txt, but the gist is

"<output>:<WxH>@<rate><flags>", where "e" flag at the end means "force the display to

be enabled", to avoid all that hotplug jank.

Should set mode for console (see e.g. fbset --info), but at least with

meson_dw_hdmi this is insufficient, which it's happy to tell why when loading

with extra drm.debug=0xf cmdline option - doesn't have any supported modes,

returns MODE_BAD for all non-CEA-861 modes that are default in fb-modedb.

Such modelines are usually supplied from EDID blobs by the display, but if there

isn't one connected, blob should be loaded from somewhere else (and iirc there

are ways to define these via cmdline).

Luckily, kernel has built-in standard EDID blobs, so there's no need to put

anything to /lib/firmware, initramfs or whatever:

drm_kms_helper.edid_firmware=edid/1920x1080.bin video=HDMI-A-1:1920x1080@60e

And that finally works.

Not very straightforward, and doesn't seem to be documented in one place

anywhere with examples (ArchWiki page on KMS probably comes closest).

Jun 09, 2017

Tend to mention random trivial tools I write here, but somehow forgot about this

one - acme-cert-tool.

Implemented it a few months back when setting-up TLS on, and wasn't satisfied by

any existing things for ACME / Let's Encrypt cert management.

Wanted to find some simple python3 script that's a bit less hacky than

acme-tiny, not a bloated framework with dozens of useless deps like certbot

and has ECC certs covered, but came up empty.

acme-cert-tool has all that in a single script with just one dep on a standard

py crypto toolbox (cryptography.io), and does everything through a single

command, e.g. something like:

% ./acme-cert-tool.py --debug -gk le-staging.acc.key cert-issue \

-d /srv/www/.well-known/acme-challenge le-staging.cert.pem mydomain.com

...to get signed cert for mydomain.com, doing all the generation, registration

and authorization stuff as necessary, and caching that stuff in

"le-staging.acc.key" too, not doing any extra work there either.

Add && systemctl reload nginx to that, put into crontab or .timer and done.

There are bunch of other commands mostly to play with accounts and such, plus

options for all kinds of cert and account settings, e.g. ... -e

myemail@mydomain.com -c rsa-2048 -c ec-384 to also have cert with rsa key

generated for random outdated clients and add email for notifications (if not

added already).

README on

acme-cert-tool github page and -h/--help output should have more details:

Jun 02, 2017

There are way more tools that happily forward TCP ports than ones for UDP.

Case in point - it's usually easy to forward ssh port through a bunch of hosts

and NATs, with direct and reverse ssh tunnels, ProxyCommand stuff, tools like

pwnat, etc, but for mosh UDP connection it's not that trivial.

Which sucks, because its performance and input prediction stuff is exactly

what's lacking in super-laggy multi-hop ssh connections forwarded back-and-forth

between continents through such tunnels.

There are quite a few long-standing discussions on how to solve it properly in

mosh, which didn't get any traction so far, unfortunately:

One obvious way to make it work, is to make some tunnel (like OpenVPN or

wireguard) from destination host (server) to a client, and use mosh over that.

But that's some extra tools and configuration to keep around on both sides, and

there is much easier way that works perfectly for most cases - knowing both

server and client IPs, pre-pick ports for mosh-server and mosh-client, then

punch hole in the NAT for these before starting both.

How it works:

- Pick some UDP ports that server and client will be using, e.g. 34700 for

server and 34701 for client.

- Send UDP packet from server:34700 to client:34701.

- Start mosh-server, listening on server:34700.

- Connect to that with mosh-client, using client:34701 as a UDP source port.

NAT on the router(s) in-between the two will see this exchange as a server

establishing "udp connection" to a client, and will allow packets in both

directions to flow through between these two ports.

Once mosh-client establishes the connection and keepalive packets start

bouncing there all the time, it will be up indefinitely.
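
The hole-punching step itself (second item in the list above) is just one UDP packet sent from a specific source port, which can be sketched in python like this. This is not the actual wrapper script mentioned below, and the function name, payload and SO_REUSEADDR use are assumptions for illustration:

```python
import socket

def punch_hole(local_port, peer_ip, peer_port, payload=b'\0', local_ip='0.0.0.0'):
    '''Send one UDP packet from local_port to peer_ip:peer_port,
    so that NAT creates a mapping for replies, and close the socket.
    Returns source port that was actually used (useful if local_port=0).'''
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Allow same port to be re-bound by the server process right after this
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind((local_ip, local_port))
        sock.sendto(payload, (peer_ip, peer_port))
        return sock.getsockname()[1]

# Usage on the server, before starting mosh-server on port 34700:
#   punch_hole(34700, '<client-ip>', 34701)
```

Packet contents don't matter here, as it only has to pass through the NAT, and will likely be dropped on the client side anyway if nothing listens there yet.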

mosh is generally well-suited for running manually from an existing console,

so all that's needed to connect in a simple case is:

server% mosh-server new

MOSH CONNECT 60001 NN07GbGqQya1bqM+ZNY+eA

client% MOSH_KEY=NN07GbGqQya1bqM+ZNY+eA mosh-client <server-ip> 60001

With hole-punching, two additional wrappers are required with the current mosh

version (1.3.0):

- One for mosh-server to send UDP packet to the client IP, using same port on

which server will then be started: mosh-nat

- And a wrapper for mosh-client to force its socket to bind to specified local

UDP port, which was used as a dst by mosh-server wrapper above: mosh-nat-bind.c

Making connection using these two is as easy as with stock mosh above:

server% ./mosh-nat 74.59.38.152

mosh-client command:

MNB_PORT=34730 LD_PRELOAD=./mnb.so

MOSH_KEY=rYt2QFJapgKN5GUqKJH2NQ mosh-client <server-addr> 34730

client% MNB_PORT=34730 LD_PRELOAD=./mnb.so \

MOSH_KEY=rYt2QFJapgKN5GUqKJH2NQ mosh-client 84.217.173.225 34730

(with server at 84.217.173.225, client at 74.59.38.152 and using port 34730 on

both ends in this example)

Extra notes:

- "mnb.so" used with LD_PRELOAD is that mosh-nat-bind.c wrapper, which can be

compiled using: gcc -nostartfiles -fpic -shared -ldl -D_GNU_SOURCE

mosh-nat-bind.c -o mnb.so

- Both mnb.so and mosh-nat only work with IPv4, IPv6 shouldn't use NAT anyway.

- 34730 is the default port for -c/--client-port and -s/--server-port opts in

mosh-nat script.

- Started mosh-server waits for 60s (default) for mosh-client to connect.

- Continuous operation relies on mosh keepalive packets without interruption, as

mentioned, and should break on (long enough) net hiccups, unlike direct mosh

connections established to server that has no NAT in front of it (or with a

dedicated port forwarding).

- No roaming of any kind is possible here, again, unlike with original mosh - if

src IP/port changes, connection will break.

- New MOSH_KEY is generated by mosh-server on every run, and is only good for

one connection, as server should rotate it after connection gets established,

so is pretty safe/easy to use.

- If client is behind NAT as well, its visible IP should be used, not internal one.

- Should only work when NAT on either side doesn't rewrite source ports.

Last point can be a bummer with some "Carrier-grade" NATs, which do rewrite src

ports out of necessity, but can be still worked around if it's only on the

server side by checking src port of the hole-punching packet in tcpdump and

using that instead of whatever it was supposed to be originally.

Requires just python to run wrapper script on the server and no additional

configuration of any kind.

Both linked wrappers are from here:

May 15, 2017

Mostly use unorthodox variable-width font for coding, but do need monospace

sometimes, e.g. for jagged YAML files or .rst.

Had weird issue with my emacs for a while, where switching to monospace font

will slow window/frame rendering significantly, to a noticeable degree, having

stuff blink and lag, making e.g. holding key to move cursor impossible, etc.

Usual profiling showed that it's an actual rendering via C code, so kinda hoped

that it'd go away in one of minor releases, but nope - turned out to be the

dumbest thing in ~/.emacs:

(set-face-font 'fixed-pitch "DejaVu Sans Mono-7.5")

That one line is what slows stuff down to a crawl in monospace ("fixed-pitch")

configuration, just due to non-integer font size, apparently.

Probably not emacs' fault either, just xft or some other lower-level rendering

lib, and a surprising little quirk that can affect high-level app experience a lot.

Changing font size there to 8 or 9 gets rid of the issue. Oh well...

May 14, 2017

"ssh -R" is a kinda obvious way to setup reverse access tunnel from something

remote that one'd need to access, e.g. raspberry pi booted from supplied img

file somewhere behind the router on the other side of the world.

Being part of OpenSSH, it's available on any base linux system, and trivial to

automate on startup via loop in a shell script, crontab or a systemd unit, e.g.:

    [Unit]
    Wants=network.service
    After=network.service

    [Service]
    Type=simple
    User=ssh-reverse-access-tunnel
    Restart=always
    RestartSec=10
    ExecStart=/usr/bin/ssh -oControlPath=none -oControlMaster=no \
      -oServerAliveInterval=6 -oServerAliveCountMax=10 -oConnectTimeout=180 \
      -oPasswordAuthentication=no -oNumberOfPasswordPrompts=0 \
      -oExitOnForwardFailure=yes -NnT -R "1234:localhost:22" tun-user@tun-host

    [Install]
    WantedBy=multi-user.target

On the other side, ideally in a dedicated container or VM, there'll be sshd

"tun-user" with an access like this (as a single line):

command="echo >&2 'No shell access!'; exit 1",

no-X11-forwarding,no-agent-forwarding,no-pty ssh-ed25519 ...

Or have sshd_config section with same restrictions and only keys in

authorized_keys, e.g.:

    Match User tun-*
      # GatewayPorts yes
      PasswordAuthentication no
      X11Forwarding no
      AllowAgentForwarding no
      PermitTTY no
      PermitTunnel no
      AllowStreamLocalForwarding no
      AllowTcpForwarding remote
      ForceCommand echo 'no shell access!'; exit 1

And that's it, right?

No additional stuff needed, "ssh -R" will connect reliably on boot, keep

restarting and reconnecting in case of any errors, even with keepalives to

detect dead connections and restart asap.

Nope!

There's a bunch of common pitfalls listed below.

Problem 1:

When device with a tunnel suddenly dies for whatever reason - power or network

issues, kernel panic, stray "kill -9" or what have you - connection on sshd

machine will hang around for a while, as keepalive options are only used by

the client.

Along with (dead) connection, listening port will stay open as well, and "ssh

-R" from e.g. power-cycled device will not be able to bind it, and that client

won't treat it as a fatal error either!

Result: reverse-tunnels don't survive any kind of non-clean reconnects.

Fix:

- TCPKeepAlive in sshd_config - to detect dead connections there faster,

though probably still not fast enough for e.g. emergency reboot.

- Detect and kill sshd pids without listening socket, forcing "ssh -R" to

reconnect until it can actually bind one.

- If TCPKeepAlive is not good or reliable enough, kill all sshd pids

associated with listening sockets that don't produce the usual

"SSH-2.0-OpenSSH_7.4" greeting line.

Problem 2:

Running sshd on any reasonably modern linux, you get systemd session for each

connection, and killing sshd pids as suggested above will leave logind

sessions from these, potentially creating hundreds or thousands of them over

time.

Solution:

- "UsePAM no" to disable pam_systemd.so along with the rest of the PAM.

- Dedicated PAM setup for ssh tunnel logins on this dedicated system, not

using pam_systemd.

- Occasional cleanup via loginctl list-sessions/terminate-session for ones

that are in "closing"/"abandoned" state.

Killing sshd pids might be hard to avoid on fast non-clean reconnect, since

reconnected "ssh -R" will hang around without a listening port forever,

as mentioned.

Problem 3:

If these tunnels are not configured on per-system basis, but shipped in some

img file to use with multiple devices, they'll all try to bind same listening

port for reverse-tunnels, so only one of these will work.

Fixes:

More complex script to generate listening port for "ssh -R" based on

machine id, i.e. serial, MAC, local IP address, etc.

Get free port to bind to out-of-band from the server somehow.

Can be through same ssh connection, by checking ss/netstat output or

/proc/net/tcp there, if commands are allowed there (probably a bad idea for

random remote devices).
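
First of these options can be as simple as hashing whatever machine id is available into a port range. A minimal sketch, where function name, the sha256 choice and the 20000-29999 range are all arbitrary assumptions:

```python
import hashlib

def tunnel_port(ident, base=20000, span=10000):
    '''Derive stable listening port in [base, base+span) from device id string,
    e.g. MAC address read from /sys/class/net/enp1s0/address.'''
    digest = hashlib.sha256(ident.encode()).digest()
    return base + int.from_bytes(digest[:4], 'big') % span

print(tunnel_port('c4:46:19:64:63:2f'))
```

Same id always maps to the same port, but two devices can still end up with the same one by chance, so this only makes collisions unlikely, not impossible.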

Problem 4:

Device identification in the same "multiple devices" scenario.

I.e. when someone sets up 5 RPi boards on the other end, how to tell which

tunnel leads to each specific board?

Can usually be solved by:

- Knowing/checking quirks specific to each board, like dhcp hostname,

IP address, connected hardware, stored files, power-on/off timing, etc.

- Blinking LEDs via /sys/class/leds, ethtool --identify or GPIO pins.

- Output on connected display - just "wall" some unique number

(e.g. reverse-tunnel port) or put it to whatever graphical desktop.

Problem 5:

Round-trip through some third-party VPS can add significant console lag,

making it rather hard to use.

More general problem than with just "ssh -R", but when doing e.g. "EU -> US ->

RU" trip and back, console becomes quite unusable without something like

mosh, which can't be used over that forwarded tcp port anyway!

Kinda defeats the purpose of the whole thing, though laggy console (with an

option to upgrade it, once connected) is still better than nothing.

Not an exhaustive or universally applicable list, of course, but hopefully shows

that it's actually more hassle than "just run ssh -R on boot" to have something

robust here.

So choice of ubiquitous / out-of-the-box "ssh -R" over installing some dedicated

tunneling thing like OpenVPN (or, wireguard - much better choice on linux) is

not as clear-cut in favor of the former as it would seem, taking all such quirks

(handled well by dedicated tunneling apps) into consideration.

As I've bumped into all of these by now, addressed them by:

ssh-tunnels-cleanup script to (optionally) do three things, in order:

- Find/kill sshd pids without associated listening socket

("ssh -R" that re-connected quickly and couldn't bind one).

- Probe all sshd listening sockets with ncat (nc that comes with nmap) and

make sure there's an "SSH-2.0-..." banner there, otherwise kill.

- Cleanup all dead loginctl sessions, if any.

Only affects/checks sshd pids for specific user prefix (e.g. "tun-"), to avoid

touching anything but dedicated tunnels.
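
Banner check from the second step there doesn't really need ncat and can be sketched with a plain socket. This is not how the actual script does it, just an illustration of the check itself, with has_ssh_banner being a hypothetical name:

```python
import socket

SSH_PREFIX = b"SSH-2.0-"


def has_ssh_banner(host, port, timeout=5.0):
    """Connect to forwarded port and check that other side greets with SSH banner."""
    buf = b""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            # Read until there is enough data to compare the prefix,
            # or until connection is closed or times out.
            while len(buf) < len(SSH_PREFIX):
                chunk = sock.recv(64)
                if not chunk:
                    break
                buf += chunk
    except OSError:
        # Refused, unreachable or timed-out - all count as a dead tunnel.
        return False
    return buf.startswith(SSH_PREFIX)
```

Listening sockets where this returns False are the ones to kill sshd pids for, as described above.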

ssh-reverse-mux-server / ssh-reverse-mux-client scripts.

For listening port negotiation with ssh server,

using bunch of (authenticated) UDP packets.

Essentially a wrapper for "ssh -R" on the client, to also pass all the

required options, replacing ExecStart= line in above systemd example

with e.g.:

ExecStart=/usr/local/bin/ssh-reverse-mux-client \

--mux-port=2200 --ident-rpi -s pkt-mac-key.aGPwhpya tun-user@tun-host

ssh-reverse-mux-server on the other side will keep .db file of --ident strings

(--ident-rpi uses hash of RPi board serial from /proc/cpuinfo) and allocate

persistent port number (from specified range) to each one, which client will

use with actual ssh command.

Simple symmetric key (not very secret) is used to put MAC into packets and

ignore any noise traffic on either side that way.
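
General idea of that MAC check, as a minimal sketch. Packet layout here (payload followed by truncated HMAC-SHA256) is an assumption for illustration and is not the format that ssh-reverse-mux scripts actually use:

```python
import hashlib
import hmac

MAC_LEN = 16  # bytes of truncated HMAC appended to each packet (arbitrary choice)


def seal(key, payload):
    """Append MAC of the payload, so that receiver can tell it from noise."""
    mac = hmac.new(key, payload, hashlib.sha256).digest()[:MAC_LEN]
    return payload + mac


def unseal(key, packet):
    """Return payload if MAC checks out, None for anything else."""
    if len(packet) < MAC_LEN:
        return None
    payload, mac = packet[:-MAC_LEN], packet[-MAC_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()[:MAC_LEN]
    # compare_digest avoids leaking match position through timing.
    return payload if hmac.compare_digest(mac, expected) else None
```

Either side can then drop every UDP packet for which unseal() returns None without parsing it any further.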

https://github.com/mk-fg/fgtk#ssh-reverse-mux

Hook in ssh-reverse-mux-client above to blink bits of allocated port on some

available LED.

Sample script to do the morse-code-like bit-blinking:

And additional hook option for command above:

... -c 'sudo -n led-blink-arg -f -l led0 -n 2/4-2200'

(with last arg-num / bits - decrement spec there to blink only last 4 bits

of the second passed argument, which is listening port, e.g. "1011" for "2213")

Given how much OpenSSH does already, having all this functionality there

(or even some hooks for that) would probably be too much to ask.

...at least until it gets rewritten as some systemd-accessd component :P

Apr 27, 2017

Running Wireless AP on linux is pretty much always done through handy hostapd

tool, which sets the necessary driver parameters and handles authentication

and key management aspects of an infrastructure mode access point operation.

Its configuration file has plenty of options, which get initialized to a

rather conservative defaults, resulting in suboptimal bandwidth with anything

from this decade, e.g. 802.11n or 802.11ac cards/dongles.

Furthermore, it seems to assume decent amount of familiarity with IEEE standards

on WiFi protocols, which are mostly paywalled (though can easily be pirated ofc,

just use google).

Specifically, channel selection for VHT (802.11ac) there is a bit of a

nightmare, as hostapd code not only has (undocumented afaict) whitelist for

these, but also needs more than one parameter to set them.

I'm not an expert on wireless links and wifi specifically, just had to setup one

recently (and even then, without going into STBC, Beamforming and such), so

don't take this info as some kind of authoritative "how it must be done" guide -

just my 2c and nothing more.

Anyway, first of all, to get VHT ("Very High Throughput") aka 802.11ac mode at

all, following hostapd config can be used as a baseline:

# https://w1.fi/cgit/hostap/plain/hostapd/hostapd.conf

ssid=my-test-ap

wpa_passphrase=set-ap-password

country_code=US

# ieee80211d=1

# ieee80211h=1

interface=wlan0

driver=nl80211

wpa=2

wpa_key_mgmt=WPA-PSK

rsn_pairwise=CCMP

logger_syslog=0

logger_syslog_level=4

logger_stdout=-1

logger_stdout_level=0

hw_mode=a

ieee80211n=1

require_ht=1

ieee80211ac=1

require_vht=1

vht_oper_chwidth=1

channel=36

vht_oper_centr_freq_seg0_idx=42

There, important bits are obviously stuff at the top - ssid and wpa_passphrase.

But also country_code, as it will apply all kinds of restrictions on 5G channels

that one can use.

ieee80211d/ieee80211h are related to these country_code restrictions, and are

probably necessary for some places and when/if DFS (dynamic frequency selection)

is used, but more on that later.

If that config doesn't work (started with e.g. hostapd myap.conf), and not

just due to some channel conflict or regulatory domain (i.e. country_code) error,

probably worth running hostapd command with -d option and seeing where it fails

exactly, though most likely after nl80211: Set freq ... (ht_enabled=1,

vht_enabled=1, bandwidth=..., cf1=..., cf2=...) log line (and list of options

following it), with some "Failed to set X: Invalid argument" error from kernel

driver.

When that's the case, if it's not just bogus channel (see below), probably worth

stopping right here to see why driver rejects this basic stuff - could be that

it doesn't actually support running AP and/or VHT mode (esp. for proprietary ones)

or something, which should obviously be addressed first.

VHT (Very High Throughput mode, aka 802.11ac, page 214 in 802.11ac-2013.pdf) is

extension of HT (High Throughput aka 802.11n) mode and can use 20 MHz, 40 MHz,

80 MHz, 160 MHz and 80+80 MHz channel widths, which basically set following caps

on bandwidth:

- 20 MHz - 54 Mbits/s

- 40 MHz - 150-300 Mbits/s

- 80 MHz - 300+ Mbits/s

- 160 MHz or 80+80 MHz (two non-contiguous 80MHz chans) - moar!!!

Most notably, 802.11ac only requires support for up to 80MHz-wide chans, with

160 and 80+80 being optional, so pretty much guaranteed to be not supported by

95% of cheap-ish dongles, even if they advertise "full 802.11ac support!",

"USB 3.0!!!" or whatever - forget it.

"vht_oper_chwidth" parameter sets channel width to use, so "vht_oper_chwidth=1"

(80 MHz) is probably safe choice for ac here.

Unless ACS - Automatic Channel Selection - is being used (which is maybe a good

idea, but not described here at all), both "channel" and

"vht_oper_centr_freq_seg0_idx" parameters must be set (and also

"vht_oper_centr_freq_seg1_idx" for 80+80 vht_oper_chwidth=3 mode).

"vht_oper_centr_freq_seg0_idx" is "dot11CurrentChannelCenterFrequencyIndex0"

from 802.11ac-2013.pdf (22.3.7.3 on page 248 and 22.3.14 on page 296),

while "channel" option is "dot11CurrentPrimaryChannel".

Relation between these for 80MHz channels is the following one:

vht_oper_centr_freq_seg0_idx = channel + 6

Where "channel" can only be picked from the following list (see

hw_features_common.c in hostapd sources):

36 44 52 60 100 108 116 124 132 140 149 157 184 192

And vht_oper_centr_freq_seg0_idx can only be one of:

42 58 106 122 138 155
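
To avoid doing that math by hand, here's a small helper sketch for picking center index from the list above. It's based on my reading of 5GHz channel numbering, where each 80MHz block spans four 20MHz channels at center -6, -2, +2 and +6, so "channel + 6" is the case where primary channel is the lowest one in the block. Whether hostapd and the driver accept other primary channels within a block likely also depends on HT40 settings, which I haven't verified. Function name is made up.

```python
# 80MHz center frequency indexes, same as in the list above.
VHT80_CENTERS = (42, 58, 106, 122, 138, 155)


def vht80_center_for(channel):
    """Return vht_oper_centr_freq_seg0_idx for 80MHz block containing the channel."""
    for center in VHT80_CENTERS:
        # Four 20MHz channels that make up the 80MHz block around this center.
        if channel in (center - 6, center - 2, center + 2, center + 6):
            return center
    raise ValueError(f"channel {channel} is not in any known 80MHz block")
```

With this, vht80_center_for(36) gives 42 and vht80_center_for(149) gives 155, matching the two "safe" pairs mentioned in this post.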

Furthermore, picking anything but 36/42 and 149/155 is probably restricted by

DFS and/or driver, and if you have any other 5G APs around, can also be

restricted by conflicts with these, as detected/reported by hostapd on start.

Which is kinda crazy - you've got your fancy 802.11ac hardware and maybe can't

even use it because hostapd refuses to use any channels if there's other 5G AP

or two around.

BSS conflicts (with other APs) are detected on start only and are easy to

patch-out with hostapd-2.6-no-bss-conflicts.patch - just 4 lines to

hw_features.c and hw_features_common.c there, should be trivial to adapt for any

newer hostapd version.

But that still leaves all the DFS/no-IR and whatever regdb-special channels locked,

which is safe for legal reasons, but also easy to patch-out in crda (loader tool

for regdb) and wireless-regdb (info on regulatory domains, e.g. US and such)

packages, e.g.:

crda patch is needed to disable signature check on loaded db.txt file,

and alternatively different public key can be used there, but it's less hassle this way.

Note that using DFS/no-IR-marked frequencies with these patches is probably

breaking the law, though no idea if and where these are actually enforced.

Also, if crda/regdb is not installed or country_code not picked, "00" regulatory

domain is used by the kernel, which is the most restrictive subset (to be ok to

use anywhere), and is probably never a good idea.

All these tweaks combined should already provide ~300 Mbits/s (half-duplex) on

a single 80 MHz channel (any from the lists above).

Beyond that, I think "vht_capab" set should be tweaked to enable STBC

(space-time block coding, i.e. using multiple RX/TX antennas) and LDPC

capabilities, which are all disabled by default, and beamforming stuff.

These are all documented in hostapd.conf, but dongles and/or rtl8812au driver

I've been using didn't have support for any of that, so didn't go there myself.

There's also bunch of wmm_* and tx_queue_* parameters, which seem to be for QoS

(prioritizing some packets over others when at 100% capacity).

Tinkering with these doesn't affect iperf3 results, obviously, and maybe should

be done in linux QoS subsystem ("tc" tool) instead anyway.

Plenty of snippets for tweaking them are available on mailing lists and such,

but should probably be adjusted for specific traffic/setup.

One last important bandwidth optimization for both AP and any clients (stations)

is disabling all the power saving stuff with iw dev wlan0 set power_save off.

Failing to do that can completely wreck performance. Disabling it can usually

also be done via kernel module parameter in /etc/modprobe.d/ instead of running "iw".

No patches or extra configuration for wpa_supplicant (tool for infra-mode

"station" client) are necessary - it will connect just fine to anything and pick

whatever is advertised, if hw supports all that stuff.

Mar 21, 2017

Traditionally glusterd (glusterfs storage node) runs as root without any kind of

namespacing, and that's suboptimal for two main reasons:

- Grossly-elevated privileges (it's root) for just using net and storing files.

- Inconvenient to manage in the root fs/namespace.

Apart from being historical thing, glusterd uses privileges for three things

that I know of:

- Set appropriate uid/gid on stored files.

- setxattr() with "trusted" namespace for all kinds of glusterfs xattrs.

- Maybe running nfsd? Not sure about this one, didn't use its nfs access.

For my purposes, only first two are useful, and both can be easily satisfied in

non-uid-mapped container, e.g. systemd-nspawn without -U.

With user_namespaces(7), first requirement is also satisfied, as chown works

for pseudo-root user inside namespace, but second one will never work without

some kind of namespace-private fs or xattr-mapping namespace.

"user" xattr namespace works fine there though, so rather obvious fix is to make

glusterd use those instead, and it has no obvious downsides, at least if backing

fs is used only by glusterd.

xattr names are unfortunately used quite liberally in the gluster codebase, and

don't have any macro for prefix, but finding all "trusted" outside of tests/docs

with grep is rather easy, and there seem to be no caveats there either.

Would be cool to see something like that upstream eventually.

It won't work unless all nodes are using patched glusterfs version though,

as non-patched nodes will be sending SETXATTR/XATTROP for trusted.* xattrs.

Two extra scripts that can be useful with this patch and existing setups:

First one is to copy trusted.* xattrs to user.*, and second one to set upper

16 bits of uid/gid to systemd-nspawn container id value.

Both allow passing fs from old root glusterd to a user-xattr-patched glusterd

inside uid-mapped container (i.e. bind-mount it there), without losing anything.

Both operations are also reversible - can just nuke user.* stuff or upper part

of uid/gid values to revert everything back.
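
Core of both operations is rather simple and can be sketched as below. These are not the actual scripts, just an illustration under some assumptions: that patched glusterd uses same xattr names with "user." in place of "trusted." prefix, and that container id is the uid base divided by 65536. All function names are made up.

```python
import os


def user_xattr_name(name):
    """Map 'trusted.X' xattr name to 'user.X', None for any other namespace."""
    prefix = "trusted."
    if not name.startswith(prefix):
        return None
    return "user." + name[len(prefix):]


def shift_id(value, container_id):
    """Put container id into upper 16 bits of uid/gid, keeping lower 16 bits."""
    return (container_id << 16) | (value & 0xFFFF)


def unshift_id(value):
    """Reverse of shift_id - drop upper 16 bits of uid/gid."""
    return value & 0xFFFF


def copy_trusted_to_user(path):
    """Copy all trusted.* xattrs on path to user.*, needs root to read them."""
    if os.path.islink(path):
        return  # user.* xattrs can't be set on symlinks
    for name in os.listxattr(path):
        target = user_xattr_name(name)
        if target is not None:
            os.setxattr(path, target, os.getxattr(path, name))
```

Both would need to be applied to every file and directory under the brick path, e.g. via os.walk(), with os.lchown() using shift_id() results for the uid/gid part.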

One more random bit of ad-hoc trivia - use getfattr -Rd -m '.*' /srv/glusterfs-stuff

(getfattr without -m '.*' hack hides trusted.* xattrs)

Note that I didn't test this trick extensively (yet?), and only use simple

distribute-replicate configuration here anyway, so probably a bad idea to run

something like this blindly in an important and complicated production setup.

Also wow, it's been 7 years since I've written here about glusterfs last,

time (is made of) flies :)