Apr 26, 2021

bsdtar from libarchive is the usual go-to tool for packing stuff up in linux

scripts, but it always had an annoying quirk for me - no data checksums built

into tar formats.

Ideally you'd unpack any .tar and if it's truncated/corrupted in any way, bsdtar

will spot that and tell you, but that's often not what happens, for example:

% dd if=/dev/urandom of=file.orig bs=1M count=1

% cp -a file{.orig,} && bsdtar -czf file.tar.gz -- file

% bsdtar -xf file.tar.gz && b2sum -l96 file{.orig,}

2d6b00cfb0b8fc48d81a0545 file.orig

2d6b00cfb0b8fc48d81a0545 file

% python -c 'f=open("file.tar.gz", "r+b"); f.seek(512 * 2**10); f.write(b"\1\2\3")'

% bsdtar -xf file.tar.gz && b2sum -l96 file{.orig,}

2d6b00cfb0b8fc48d81a0545 file.orig

c9423358edc982ba8316639b file

In compressed multi-file archives, such a change can corrupt the tail of the archive

badly enough to affect one of the tarball headers, and those do have checksums,

so it might get detected, but that's very unreliable, and doesn't happen with

uncompressed archives (e.g. media file backups, which don't need compression).

Typical solution is to put e.g. .sha256 files next to archives and hope that

people check those, but of course no one ever does in reality - bsdtar itself

has to always do it implicitly for that kind of checking to stick,

extra opt-in steps won't work.

Putting checksum in the filename is a bit better, but still not useful for the

same reason - almost no one will ever check it, unless it's automatic.

Luckily bsdtar has at least some safe options there, which I think should always

be used by default, unless there's a good reason not to in some particular case:

bsdtar --xz (and its --lzma predecessor):

% bsdtar -cf file.tar.xz --xz -- file

% python -c 'f=open("file.tar.xz", "r+b"); f.seek(...); f.write(...)'

% bsdtar -xf file.tar.xz && b2sum -l96 file{.orig,}

file: Lzma library error: Corrupted input data

% tar -xf file.tar.xz && b2sum -l96 file{.orig,}

xz: (stdin): Compressed data is corrupt

Heavier on resources than .gz and might be a bit less compatible, but given that even

GNU tar supports it out of the box and much better compression (with faster decompression)

in addition to mandatory checksumming, should always be a default for compressed archives.

Lowering compression level might help a bit with performance as well.

bsdtar --format zip:

% bsdtar -cf file.zip --format zip -- file

% python -c 'f=open("file.zip", "r+b"); f.seek(...); f.write(...)'

% bsdtar -xf file.zip && b2sum -l96 file{.orig,}

file: ZIP bad CRC: 0x2c1170b7 should be 0xc3aeb29f

Can be an OK option if there's no need for unixy file metadata, streaming decompression,

and/or max compatibility is a plus, as good old zip should be readable everywhere.

Simple deflate compression is inferior to .xz, so not the best for linux-only

stuff or if compression is not needed, BUT there is --options zip:compression=store,

which basically just adds CRC32 checksums.

bsdtar --use-compress-program zstd but NOT its built-in --zstd flag:

% bsdtar -cf file.tar.zst --use-compress-program zstd -- file

% python -c 'f=open("file.tar.zst", "r+b"); f.seek(...); f.write(...)'

% bsdtar -xf file.tar.zst && b2sum -l96 file{.orig,}

file: Lzma library error: Corrupted input data

% tar -xf file.tar.zst && b2sum -l96 file{.orig,}

file: Zstd decompression failed: Restored data doesn't match checksum

Very fast and efficient, and quickly gaining popularity, but bsdtar --zstd flag

will use libzstd defaults (using explicit zstd --no-check with binary too)

and won't add checksums (!!!), even though it validates data against them on

decompression.

Still good alternative to above, as long as you pretend that --zstd option

does not exist and always go with explicit zstd command instead.

GNU tar does not seem to have this problem, as --zstd there always uses

binary and its defaults (and -C/--check in particular).

bsdtar --lz4 --options lz4:block-checksum:

% bsdtar -cf file.tar.lz4 --lz4 --options lz4:block-checksum -- file

% python -c 'f=open("file.tar.lz4", "r+b"); f.seek(...); f.write(...)'

% bsdtar -xf file.tar.lz4 && b2sum -l96 file{.orig,}

bsdtar: Error opening archive: malformed lz4 data

% tar -I lz4 -xf file.tar.lz4 && b2sum -l96 file{.orig,}

Error 66 : Decompression error : ERROR_blockChecksum_invalid

lz4 barely adds any compression resource overhead, so is essentially free,

same for xxHash32 checksums there, so can be a safe replacement for uncompressed tar.

bsdtar manpage says that lz4 should have stream checksum default-enabled,

but it doesn't seem to help at all with corruption - only block-checksums

like used here do.

GNU tar doesn't understand lz4 by default, so requires explicit -I lz4.

bsdtar --bzip2 - actually checks integrity, but is very inefficient algo

cpu-wise, so best to always avoid it in favor of --xz or zstd these days.

bsdtar --lzop - similar to lz4, somewhat less common,

but always respects data consistency via adler32 checksums.

bsdtar --lrzip - opposite of --lzop above wrt compression, but even

less-common/niche wrt install base and use-cases. Adds/checks md5 hashes by default.

It's still sad that tar can't have some post-data checksum headers, but always

using one of these as a go-to option seems to mitigate that shortcoming,

and these options seem to cover most common use-cases pretty well.
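To see the same kind of built-in check outside of bsdtar, here is a minimal Python sketch using only stdlib lzma and zipfile modules. It is not bsdtar or libarchive code, just an illustration of xz (CRC64 by default in python's lzma module) and stored-zip (CRC32) containers refusing to return corrupted data. Helper names like flip_bytes and zip_store are made up for this example.

```python
import io
import lzma
import random
import zipfile

def sample_data(n=64 * 2**10, seed=0):
    # Deterministic pseudo-random bytes, standing in for /dev/urandom data
    return random.Random(seed).getrandbits(8 * n).to_bytes(n, "big")

def flip_bytes(blob, pos, n=3):
    # Invert n bytes at pos, so that contents there are guaranteed to change
    patch = bytes(b ^ 0xFF for b in blob[pos:pos + n])
    return blob[:pos] + patch + blob[pos + n:]

def xz_unpack(blob):
    # Returns unpacked data, or the error raised when xz stream is damaged
    try:
        return lzma.decompress(blob)
    except lzma.LZMAError as err:
        return err

def zip_store(name, data):
    # Uncompressed zip, similar in spirit to zip:compression=store
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_STORED) as zf:
        zf.writestr(name, data)
    return buf.getvalue()

def zip_unpack(blob, name):
    # Returns file contents, or the error raised on CRC32 mismatch
    try:
        with zipfile.ZipFile(io.BytesIO(blob)) as zf:
            return zf.read(name)
    except zipfile.BadZipFile as err:
        return err

if __name__ == "__main__":
    data = sample_data()
    xz, zp = lzma.compress(data), zip_store("file", data)
    print(xz_unpack(flip_bytes(xz, len(xz) // 2)))
    print(zip_unpack(flip_bytes(zp, len(zp) // 2), "file"))
```

Both calls in the demo should print an error object instead of silently returning altered bytes, which is the behavior one would want from any archive format.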

What DOES NOT provide consistency checks with bsdtar: -z/--gz, --zstd (not even

when it's built without libzstd!), --lz4 without lz4:block-checksum option,

base no-compression mode.

With -z/--gz being replaced by .zst everywhere, hopefully either libzstd changes

its no-checksums default or bsdtar/libarchive might override it, though I wouldn't

hold much hope for either of these, just gotta be careful with that particular mode.

Aug 28, 2020

Recently Google stopped sending email notifications for YouTube subscription

feeds, as apparently conversion of these to page views was 0.1% or something.

And even though I've used these to track updates exclusively,

guess it's fair, as I also had xdg-open script jury-rigged to just open any

youtube links in mpv instead of bothering with much inferior ad-ridden

in-browser player.

One alternative workaround is to grab OPML of subscription Atom feeds

and only use those from now on, converting these to familiar to-watch

notification emails, which I kinda like for this purpose because they are

persistent and have convenient state tracking (via read/unread and tags) and

info text without the need to click through, collected in a dedicated mailbox dir.

Fetching/parsing feeds and sending emails are long-solved problems (feedparser

in python does great job on former, MTAs on the latter), while check intervals

and state tracking usually need to be custom, and can have a few tricks that I

haven't seen used too widely.

Trick One - use moving average for an estimate of when events (feed updates) happen.

Some feeds can have daily activity, some have updates once per month, and

checking both every couple hours would be incredibly wasteful to both client and server,

yet it seems to be a common practice in this type of scenario.

Obvious fix is to get some "average update interval" and space-out checks in

time based on that, but using simple mean value ("sum / n") has significant

drawbacks for this:

- You have to keep a history of old timestamps/intervals to calculate it.

- It treats recent intervals same as old ones, even though they are more relevant.

Exponentially weighted moving average (EWMA) value fixes both of these elegantly:

interval_ewma = w * last_interval + (1 - w) * interval_ewma

Where "w" is a weight for latest interval vs all previous ones, e.g. 0.3 to have

new value be ~30% determined by last interval, ~30% of the remainder by pre-last,

and so on.

Allows to keep only one "interval_ewma" float in state (for each individual feed)

instead of a list of values needed for mean and works better for prediction

due to higher weights for more recent values.

For checking feeds in particular, it can also be updated on "no new items" attempts,

to have backoff interval increase (up to some max value), instead of using last

interval ad infinitum, long past the point when it was relevant.
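A minimal sketch of that update rule, plus one possible way to do the "no new items" backoff. The backoff policy here (treating time since last update as a new observation once it exceeds the estimate) and the interval_max cap are assumptions for illustration, not necessarily what the actual script does.

```python
def ewma_update(interval_ewma, last_interval, w=0.3):
    """Blend latest interval into the running estimate with weight w."""
    if interval_ewma is None:  # first observation, nothing to blend with
        return float(last_interval)
    return w * last_interval + (1 - w) * interval_ewma

def ewma_backoff(interval_ewma, since_last_update, w=0.3, interval_max=30 * 86400):
    """Estimate to use after a check that found no new items.

    Assumed policy: if the feed has been quiet for longer than current
    estimate, count that quiet time as an observed interval, capped at max.
    """
    if since_last_update > interval_ewma:
        interval_ewma = ewma_update(interval_ewma, since_last_update, w)
    return min(interval_ewma, interval_max)

if __name__ == "__main__":
    est = None
    for interval in 86400, 90000, 3 * 86400:  # seconds between feed updates
        est = ewma_update(est, interval)
        print(f"observed={interval} estimate={est:.0f}")
```

Only the single float estimate needs to be stored per feed between runs.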

Trick Two - keep a state log.

Very useful thing for debugging automated stuff like this, where instead of keeping

only last "feed last_timestamp interval_ewma ..." you append every new one to a log file.

When such log file grows to be too long (e.g. couple megs), rename it to .old

and seed new one with last states for each distinct thing (feed) from there.

Add some timestamp and basic-info prefix before json-line there and it'd allow

to trivially check when script was run, which feeds did it check, what change(s)

it did detect there (affecting that state value), as well as e.g. easily remove

last line for some feed to test-run processing last update there again.

When something goes wrong, this kind of log is invaluable, as not only you can

re-trace what happened, but also repeat last state transition with

e.g. some --debug option and see what exactly happened there.

Keeping only one last state instead doesn't allow for any of that, and you'd

have to either keep separate log of operations for that anyway, and manually

re-construct older state from there to retrace last script steps properly,

tweak the inputs to re-construct state that way, or maybe just drop old state

and hope that re-running script with latest inputs without it hits same bug(s).

I.e. there's basically no substitute for that, as text log is pretty much same thing,

describing state changes in non-machine-readable and often-incomplete text,

which can be added to such state-log instead as an extra metadata anyway.

With youtube-rss-tracker script with multiple feeds, it's a single log file,

storing mostly-json lines like these (wrapped for readability):

2020-08-28 05:59 :: UC2C_jShtL725hvbm1arSV9w 'CGP Grey' ::

{"chan": "UC2C_jShtL725hvbm1arSV9w", "delay_ewma": 400150.7167069313,

"ts_last_check": 1598576342.064458, "last_entry_id": "FUV-dyMpi8K_"}

2020-08-28 05:59 :: UCUcyEsEjhPEDf69RRVhRh4A 'The Great War' ::

{"chan": "UCUcyEsEjhPEDf69RRVhRh4A", "delay_ewma": 398816.38761319243,

"ts_last_check": 1598576342.064458, "last_entry_id": "UGomntKCjFJL"}

Text prefix there is useful when reading the log, while script itself only cares

about json bit after that.

If anything doesn't work well - notifications missing, formatted badly, errors, etc -

you just remove last state and tweak/re-run the script (maybe in --dry-run mode too),

and get pretty much exactly same thing as happened last, aside from any input

(feed) changes, which should be very predictable in this particular case.
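Append/load/rotate logic for such a state log can be sketched in a few functions. This is a simplified illustration and not code from the script: function names, the "key" field and the exact prefix format are assumptions, and the text prefix is assumed to never contain the " :: " separator.

```python
import json
import os
import time

def state_log_append(path, key, state, info=""):
    # Human-readable prefix first, machine-readable JSON after the last " :: "
    ts = time.strftime("%Y-%m-%d %H:%M")
    prefix = " ".join(filter(None, [key, info.replace(" :: ", " ")]))
    with open(path, "a") as log:
        log.write(f"{ts} :: {prefix} :: {json.dumps(dict(state, key=key))}\n")

def state_log_load(path):
    # Later lines override earlier ones, leaving last state for each key
    states = {}
    try:
        with open(path) as log:
            for line in log:
                if not line.strip():
                    continue
                state = json.loads(line.split(" :: ", 2)[2])
                states[state["key"]] = state
    except FileNotFoundError:
        pass
    return states

def state_log_rotate(path, size_max=2 * 2**20):
    # Rename oversized log to .old and seed new one with last known states
    if not os.path.exists(path) or os.path.getsize(path) < size_max:
        return False
    states = state_log_load(path)
    os.replace(path, path + ".old")
    for key, state in states.items():
        state_log_append(path, key, state, "(rotated)")
    return True
```

Removing the last line for some key from such a file is then enough to make the next run repeat the last state transition for it.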

Not sure what this concept is called in CS, there gotta be some fancy name for it.

Link to YouTube feed email-notification script used as an example here:

Jun 26, 2020

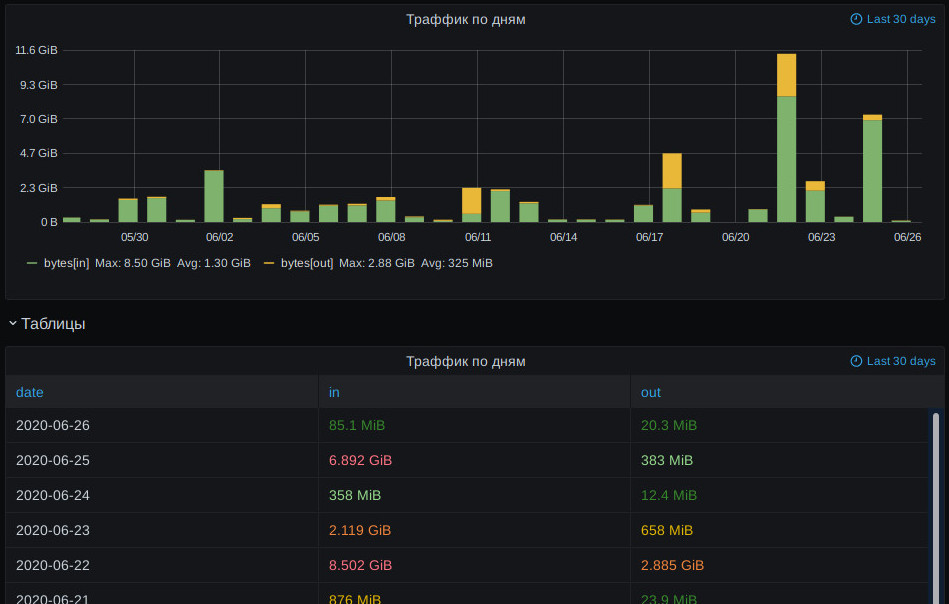

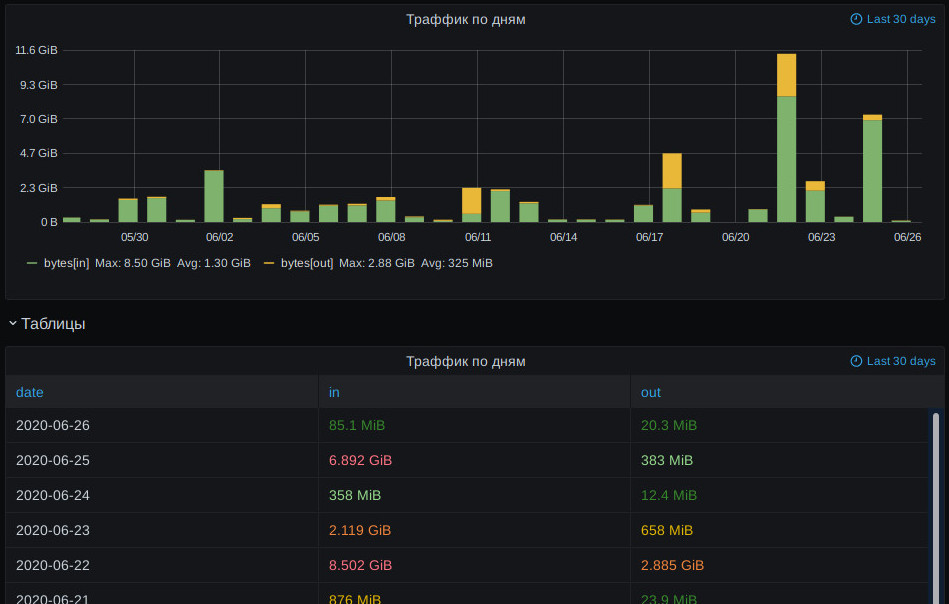

A usual and useful way to represent traffic counters for accounting purposes like

"how much inbound/outbound traffic passed thru within specific day/month/year?"

is a bar chart or a table, I think, i.e. something like this:

With one bar or table entry there for each day, month or year (accounting periods).

Counter values in general are not special in prometheus, so grafana builds the

usual monotonic-line graphs for these by default, which are not very useful.

Results of prometheus query like increase(iface_traffic_bytes_total[1d])

are also confusing, as it returns arbitrary sliding windows,

with points that don't correspond to any fixed accounting periods.

But grafana is not picky about its data sources, so it's easy to query

prometheus and re-aggregate (and maybe cache) data as necessary,

e.g. via its "Simple JSON" datasource plugin.

To get all historical data, it's useful to go back to when metric first appeared,

and official prometheus clients add "_created" metric for counters, which can be

queried for min value to get (slightly pre-) earliest value timestamp, for example

min_over_time(iface_traffic_bytes_created[10y]).

From there, value for each bar will be diffs between min/max for each accounting

interval, that can be queried naively via min_over_time + max_over_time (like

created-time above), but if exporter is written smartly or persistent 64-bit counters

are used (e.g. SNMP IF-MIB::ifXEntry), these lookups can be simplified a lot by

just querying min/max values at the start and end of the period,

instead of having prometheus do full sweep,

which can be especially bad on something like a year of datapoints.

Such optimization can potentially return no values, if data at that interval

start/end was missing, but that's easy to work around by expanding lookup range

until something is returned.

Deltas between resulting max/min values are easy to dump as JSON for bar chart

or table in response to grafana HTTP requests.
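Re-aggregation into fixed periods can be sketched without any prometheus specifics, given a time-sorted list of (timestamp, counter-value) samples. This is a simplified illustration with made-up function names, using UTC days as accounting periods and assuming a counter that never resets. It is not code from the script mentioned below.

```python
import bisect

def day_spans(ts_min, ts_max, day=86400):
    """List of [start, end) UTC day intervals covering ts_min..ts_max."""
    ts, spans = ts_min - ts_min % day, []
    while ts <= ts_max:
        spans.append((ts, ts + day))
        ts += day
    return spans

def bar_values(samples, spans):
    """Per-span (start, delta) between first and last sample within it.

    For a monotonic counter, these are also min and max values there.
    Spans without any samples get None instead of a delta.
    """
    ts_list = [ts for ts, value in samples]
    bars = []
    for a, b in spans:
        i, j = bisect.bisect_left(ts_list, a), bisect.bisect_left(ts_list, b)
        delta = samples[j - 1][1] - samples[i][1] if i < j else None
        bars.append((a, delta))
    return bars

if __name__ == "__main__":
    samples = [(0, 10), (3600, 50), (86400, 60), (90000, 100)]
    for ts, delta in bar_values(samples, day_spans(0, 90000)):
        print(ts, delta)
```

Note that traffic counted between the last sample of one period and the first sample of the next is not attributed to either bar with this approach, which matters little with frequent scrapes.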

Had to implement this as a prometheus-grafana-simplejson-aggregator py3

script (no deps), which runs either on timer to query/aggregate latest data from

prometheus (to sqlite), or as a uWSGI app which can be queried anytime from grafana.

Also needed to collect/export data into prometheus over SNMP for that,

so related script is prometheus-snmp-iface-counters-exporter for SNMPv3

IF-MIB/hrSystemUptime queries (using pysnmp), exporting counters via

prometheus client module.

(note - you'd probably want to check and increase retention period in prometheus

for data like that, as it defaults to dropping datapoints older than a month)

I think aggregation for such accounting use-case can be easily implemented in

either prometheus or grafana, with a special fixed-intervals query type in the

former or more clever queries like described above in the latter, but found that

both seem to explicitly reject such use-case as "not supported". Oh well.

Code links (see README in the repo and -h/--help for usage info):

Jun 21, 2020

File tagging is one useful concept that stuck with me since I started using tmsu

and made a codetag tool a while ago to auto-add tags to local files.

For example, with code, you'd usually have code files and whatever assets

arranged in some kind of project trees, with one dir per project and all files

related to it in git repo under that.

But then when you work on something unrelated and remember "oh, I did implement

or seen this already somewhere", there's no easy and quick "grep all python

files" option with such hierarchy, as finding all of them on the whole fs tends

to take a while, or too long for a quick check anyway to not be distracting.

And on top of that filesystem generally only provides filenames as metadata,

while e.g. script shebangs or file magic are not considered, so "filetag" python

script won't even be detected when naively grepping all *.py files.

Easy and sufficient fix that I've found for that is to have cronjob/timer to go

over files in all useful non-generic fs locations and build a db for later querying,

which is what codetag and tmsu did for years.

But I never came to like golang in any way (would highly recommend checking

out OCaml instead), and tmsu never worked well for my purposes - was slow to

interface with, took a lot of time to build db, even longer to check and clean

it up, while querying interface was clunky and lackluster (long commands, no

NUL-separated output, gone files in output, etc).

So couple months ago found time to just rewrite all that in one python script -

filetag - which does all codetag + tmsu magic in something like 100 lines of

actual code, faster, and doesn't have shortcomings of the old tools.

Was initially expecting to use sqlite there, but then realized that I only

index/lookup stuff by tags, so key-value db should suffice, and it won't

actually need to be updated either, only rebuilt from scratch on each indexing,

so used simple gdbm at first.

Didn't want to store many duplicates of byte-strings there however, so split

keys into three namespaces and stored unique paths and tags as numeric indexes,

which can be looked-up in the same db, which ended up looking like this:

"\0" "tag_bits" = tag1 "\0" tag2 ...

"\1" path-index-1 = path-1

"\1" path-index-2 = path-2

...

"\2" tag-index-1 = path-index-1 path-index-2 ...

"\2" tag-index-2 = path-index-1 path-index-2 ...

...

So db lookup loads "tag_bits" value, finds all specified tag-indexes there

(encoded using minimal number of bytes), then looks up each one, getting a set

of path indexes for each tag (uint64 numbers).

If any logic has to be applied on such lookup, i.e. "want these tags or these,

but not those", it can be compiled into DNF "OR of bitmasks" list,

which is then checked against each tag-bits of path-index superset,

doing the filtering.

Resulting paths are looked up by their index and printed out.
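A rough sketch of that DNF filtering. The representation here is an assumption: each clause is a pair of (must-have, must-not-have) bitmasks, and tag bit positions come from a plain dict instead of the db.

```python
def tag_bits(tags, tag_index):
    """Bitmask with one bit set for each tag, using its numeric index."""
    bits = 0
    for tag in tags:
        bits |= 1 << tag_index[tag]
    return bits

def dnf_match(bits, clauses):
    """True if any (include, exclude) clause matches these tag-bits."""
    return any(
        (bits & include) == include and not bits & exclude
        for include, exclude in clauses)

def dnf_filter(path_bits, clauses):
    """Paths from {path: tag-bits} mapping that match the query."""
    return sorted(p for p, bits in path_bits.items() if dnf_match(bits, clauses))

if __name__ == "__main__":
    tag_index = dict(python=0, sh=1, test=2)
    paths = {
        "proj/a.py": tag_bits(["python"], tag_index),
        "proj/a_test.py": tag_bits(["python", "test"], tag_index),
        "bin/run": tag_bits(["sh"], tag_index)}
    # "(python or sh) and not test" as two AND-clauses, OR-ed together
    exclude = tag_bits(["test"], tag_index)
    query = [
        (tag_bits(["python"], tag_index), exclude),
        (tag_bits(["sh"], tag_index), exclude)]
    print(dnf_filter(paths, query))
```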

Looks pretty minimal and efficient, nothing is really duplicated, right?

In RDBMS like sqlite, I'd probably store this as a simple tag + path table,

with index on the "tag" field and have it compress that as necessary.

Well, running filetag on my source/projects dirs in ~ gets 100M gdbm file with

schema described above and 2.8M sqlite db with such simple schema.

Massive difference seems to be due to sqlite compressing such repetitive and

sometimes-ascii data and just being generally very clever and efficient.

Compressing gdbm file with zstd gets 1.5M too, i.e. down to 1.5% - impressive!

And it's not mostly-empty file, aside from all those zero-bytes in uint64 indexes.

Anyhow, point and my take-away here was, once again - "just use sqlite where

possible, and don't bother with other local storages".

It's fast, efficient, always available, very reliable, easy to use, and covers a

ton of use-cases, working great for all of them, even when they look too simple

for it, like the one above.
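For reference, the simple "tag + path table with index on tag" schema mentioned above might look something like this with python's sqlite3 module. Table, index and function names are made up for this sketch, and the db is rebuilt from scratch on each indexing run, same as described for the gdbm version.

```python
import sqlite3

def tags_db_build(db, path_tags):
    """Rebuild the tags table from {path: [tag, ...]} mapping."""
    db.executescript("""
        DROP TABLE IF EXISTS tags;
        CREATE TABLE tags (tag TEXT NOT NULL, path TEXT NOT NULL);
        CREATE INDEX tags_tag ON tags (tag);""")
    db.executemany(
        "INSERT INTO tags (tag, path) VALUES (?, ?)",
        ((tag, path) for path, tags in path_tags.items() for tag in tags))
    db.commit()

def tags_db_lookup(db, tag):
    """Sorted list of paths that have specified tag."""
    query = "SELECT path FROM tags WHERE tag = ? ORDER BY path"
    return [path for path, in db.execute(query, (tag,))]

if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    tags_db_build(db, {"bin/filetag": ["python"], "proj/x.py": ["python", "test"]})
    print(tags_db_lookup(db, "python"))
```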

One less-obvious aspect from the list above, which I've bumped into many times

myself, and probably even mentioned on this blog already, is "very reliable" -

dbm modules and many other "simple" databases have all sorts of poorly-documented

failure modes, corrupting db and losing data where sqlite always "just works".

Wanted to document this interesting fail here mostly to reinforce the notion

in my own head once more.

sqlite is really awesome, basically :)

Jun 02, 2020

After replacing DNS resolver daemons a bunch of weeks ago in couple places,

found the hard way that nothing is quite as reliable as (t)rusty dnscache

from djbdns, which is sadly too venerable and lacks some essential features

at this point.

Complex things like systemd-resolved and unbound either crash, hang

or just start dropping all requests silently for no clear reason

(happens outside of conditions described in that email as well, to this day).

But whatever, such basic service as name resolver needs some kind of watchdog

anyway, and seems to be easy to test too - delegate some subdomain to a script

(NS entry + glue record) which would give predictable responses to arbitrary

queries and make/check those.

Implemented both sides of that testing process in dns-test-daemon script,

which can be run with some hash-key for BLAKE2S HMAC:

% ./dns-test-daemon -k hash-key -b 127.0.0.1:5533 &

% dig -p5533 @127.0.0.1 aaaa test.com

...

test.com. 300 IN AAAA eb5:7823:f2d2:2ed2:ba27:dd79:a33e:f762

...

And then query it like above, getting back first bytes of keyed name hash after

inet_ntop conversion as a response address.

Good thing about it is that name can be something random like

"o3rrgbs4vxrs.test.mydomain.com", to force DNS resolver to actually do its job

and not just keep returning same "google.com" from the cache or something.

And do it correctly too, as otherwise resulting hash won't match expected value.

So same script has client mode to use same key and do the checking,

as well as randomizing queried names:

% dns-test-daemon -k hash-key --debug @.test.mydomain.com

(optionally in a loop too, with interval/retries/timeout opts, and checking

general network availability via fping to avoid any false alarms due to that)
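Core of both daemon and client sides can be sketched like this. It is an assumption-heavy illustration: it uses keyed BLAKE2s mode from hashlib (the script might be using hmac module instead), normalizes names by lowercasing and stripping the trailing dot, and all function names are made up.

```python
import hashlib
import secrets
import socket
import string

def name_addr(name, key):
    """IPv6 address made from first 16 bytes of keyed hash of the name."""
    name = name.rstrip(".").lower().encode()
    digest = hashlib.blake2s(name, key=key.encode()).digest()
    return socket.inet_ntop(socket.AF_INET6, digest[:16])

def random_name(suffix, n=12):
    """Random never-cached name to query, e.g. under a delegated subdomain."""
    chars = string.ascii_lowercase + string.digits
    return "".join(secrets.choice(chars) for _ in range(n)) + suffix

def check_response(name, addr, key):
    """Compare in binary form, to not depend on IPv6 text formatting."""
    expected = socket.inet_pton(socket.AF_INET6, name_addr(name, key))
    return socket.inet_pton(socket.AF_INET6, addr) == expected

if __name__ == "__main__":
    name = random_name(".test.mydomain.com")
    print(name, name_addr(name, "hash-key"))
```

Daemon side would return name_addr() value for any AAAA query, while the client resolves random_name() result via tested resolver and runs check_response() on whatever comes back.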

Ended up running this tester/hack to restart unbound occasionally when it

craps itself, which restored reliable DNS operation for me,

essential thing for any outbound network access, pretty big deal.

May 09, 2020

One of my weird hobbies has always been collecting "personal favorite" images

from places like DeviantArt or ArtStation for desktop backgrounds.

And the thing about arbitrary art is that it never fits any kind of monitor

resolution - some images are tall, others are wide, all have to be scaled, etc -

and that processing has to be done somewhere.

Most WMs/DEs seem to be cropping largest aspect-correct rectangle from the

center of the image and scaling that, which doesn't work well for tall images on

wide displays and generally can be improved upon.

Used my aura project for that across ~10 years, which did a lot of custom

processing using GIMP plugin, as it was the only common image-processing thing

supporting seam carving (or liquid rescale / lqr) algo at the time

(around 2011) for neat content-aware image resizing.

It always worked fairly slowly, mostly due to GIMP startup times and various

inefficiencies in the process there, and by now it is also heavily deprecated

due to using Python2 (which is no longer supported in any way past April 2020),

as well as GIMP's Python-Fu API, which will probably also be gone in GIMP 3.0+

(with its migration to gobject-introspection bindings).

Wanted to document how it was working somewhere for myself, which was useful for

fgbg rewrite (see below), and maybe it might be useful to cherry-pick ideas

from to someone else who'd randomly stumble upon this list :)

Tool was doing roughly this:

aura.sh script running as a daemon, with some wakeup interval to update backgrounds.

Most details of the process were configurable in ~/.aurarc.

xprintidle was used to check if desktop is idle - no need to change backgrounds if so.

Number of displays to run lqr_wpset.py for was checked via xrandr cli tool.

Image was picked mostly-randomly, but with bias towards "favorites" and

ignoring blacklisted ones.

Both faves and blacklist were supported and updated via cli options

(-f/--fave and -b/--blacklist), allowing to easily set "like" or "never use

again" status for current image, with lists of these stored in ~/.aura.

Haven't found much use for these honestly - all images work great with proper processing,

and there seems to be little use in limiting their variety that way.

GIMP was run in batch mode and parameters passed via env, using lqr_wpset.py

plugin to either set background on specified display or print some

"WPS-ERR:" string to pick some other image (on errors or some sanity-checks failing there).

Image picks and all GIMP output were stored in ~/.aura/picker.log

(overridable via aurarc, same as most other params), with a special file for

storing just currently-used image source path(s).

Command-line options to wake up daemon via signal or print currently-used

image source were also supported and quite useful.

Actual heavy-lifting was done in lqr_wpset.py GIMP plugin, which handled

image processing, some caching to speed things up when re-using same source

image, as well as actual background-setting.

Uses old dbus and pygtk modules to set background in various DEs at the last step.

Solid-color edges are stripped from the image - e.g. black stripes on the

top/bottom - to get actual image size and contents to use.

This is done by making a "mask" layer from image, which gets blurred and

then contrast-adjusted to average-out any minor color fluctuations in these

edges, and then cropped by gimp to remove them.

Resulting size/position of cropped remains of that "mask" is then used to

crop relevant part out of the original image.

50% random chance to flip image horizontally for more visual variety.

Given that parts of my desktop background are occluded by conky and

terminals, this is actually very useful, as it will show diff parts of same

image(s) from under these.

Only works weirdly with text on images, which is unreadable when mirrored,

but that's very rare and definitely not a big deal, as it's often there for

signage and such, not for actual reading.

If image is way too large or small - e.g. 6x diff by area or 3x diff by

width/height, abort processing, as it'll be either too expensive cpu-wise or

won't get nice result anyway (for tiny images).

If image aspect is too tall compared to display's - scale it smartly to one

side of the screen.

This is somewhat specific to my use-case, as my most used virtual desktop is #1

with transparent conky system-monitor on the left and terminal window on the right.

So background shows through on the left there, and tall images can easily

fill that space, but "gravity" value can be configured in the script to

position such image anywhere horizontally (0-100, default - 25 for "center

at 25% left-to-right").

Processing in this case is a bit complicated:

Render specified bg color (if any) on display-sized canvas, e.g. just black.

Scale/position image in there using specified "gravity" value as center

point, or against left/right side, if it'd go beyond these.

Pick specified number of "edge" pixels (e.g. 25px) on left/right sides of

the image, which aren't bumping into canvas edge, and on a layer

in-between solid-color background (first step) and scaled/positioned image, do:

- Scale this edge to fill rest of the canvas in empty direction.

- Blur result a lot, so it'd look vague and indistinct, like background noise.

- Use some non-100% opacity for it, something like 70%, to blend-in with bg color.

This would produce a kind of "blurred halo" stretching from tall image

sides, and filling otherwise-empty parts of canvas very nicely.

Gradient-blend above "edge" pixels with produced stretched/blurred background.

Arrived at this process after some experimentation, I think something like

that with scaling and blur is common way to make fitting bg underlay for

sharp centered images in e.g. documentaries and such.

If image is at least 30% larger by area, scale it preserving aspect with the

regular "cubic" algo.

This turns out to be very important pre-processing step for LQR scaling

later - on huge source images, such scaling can take many minutes, e.g. when

scaling 4k image to 1080p.

And also - while this tool didn't do that (fixed later in fgbg script) -

it's also important to scale ALL images as close to final resolution as

possible, so that seam carving algo will add as little distortion as possible.

Generally you want LQR to do as little work as possible, if other

non-distorting options are available, like this aspect-scaling option.

Run seam carving / lqr algo to match image aspect to display size exactly.

Look it up on e.g. wikipedia or youtube if you've never seen it - a very

cool and useful algorithm.

Cache produced result, to restart from this step when using same source

image and h-flip-chance next time.

Text added on top in the next step can vary with current date/time,

so intermediate result is cached here.

This helps a lot with performance, obviously.

Add text plaque in the image corner with its filename, timestamps and/or

some tag metadata.

This is mostly useful when proper image titles are stored in EXIF tags, as well

as creation time/place for photos.

Metadata from exiv2 (used via pyexiv2) has a ton of various keys for same

things, so script does its best to include ~20 potential keys for each

useful field like "author", "creation date" or "title".

Font used to be rendered in a contrasting color, picked via L*a*b*

colorspace against "averaged" background color (via blur or such).

This produced too wild and still bad results on busy backgrounds, so

eventually switched to a simpler and better "light text with dark outline" option.

Outline is technically rendered as a "glow" - a soft gradient shadow (solid

dark color to full transparency) expanding in all directions from font outline.

Try all known background-setting options, skipping expected errors,

as most won't work with one specific DE running.

Can ideally be configured via env (from ~/.aurarc) to skip unnecessary

work here, but they all are generally easy/quick to try anyway.

GNOME/Unity - gsettings set org.gnome.desktop.background picture-uri

file://... command.

Older GNOME/XFCE - use "gconf" python module to set

"/desktop/gnome/background/picture_filename" path.

XFCE - set via DBus call to /org/xfce/Xfconf [org.xfce.Xfconf].

Has multiple different props to set there.

Enlightenment (E17+) - DBus calls to /org/enlightenment/wm/RemoteObject

[org.enlightenment.wm.service].

Can have many images there, for each virtual desktop and such.

Paint X root window via pygtk!

This works for many ancient window managers, and is still showing through

in some DEs too, occasionally.

Collected and added these bg-setting steps via experiments with different

WMs/DEs over the years, and it's definitely nowhere near exhaustive list.

These days there might be some more uniform way to do it, especially with

wayland compositors.
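As an illustration of the "gravity" positioning for tall images described above, here is a small sketch of the geometry part only, without any actual image processing. Function names and rounding are made up for the example, and the image is assumed to be taller (by aspect) than the display.

```python
def place_tall_image(img_w, img_h, disp_w, disp_h, gravity=25):
    """Return (x, width) for image scaled to full display height.

    gravity is where image center should be, in percent left-to-right,
    with image pushed back inside the canvas if it'd go beyond its sides.
    """
    width = round(img_w * disp_h / img_h)
    x = round(disp_w * gravity / 100 - width / 2)
    x = max(0, min(disp_w - width, x))
    return x, width

def empty_margins(x, width, disp_w):
    """Widths of left/right canvas parts to fill with blurred edge pixels."""
    return x, disp_w - (x + width)

if __name__ == "__main__":
    x, width = place_tall_image(1000, 2000, 1920, 1080)
    print(x, width, empty_margins(x, width, 1920))
```

A margin of zero width on either side means that the image is bumping into that canvas edge, so no stretched/blurred fill is needed there.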

At some point, mostly due to everything in this old tool being deprecated out of

existence, did a full rewrite with all steps above in some form, as well as

major improvements, in the form of modern fgbg script (in mk-fg/de-setup repo).

It uses ImageMagick and python3 Wand module, which also support LQR and all

these relatively-complex image manipulations these days, but work few orders of

magnitude faster than old "headless GIMP" for such automated processing purpose.

New script is much less complicated, as well as self-contained daemon,

with only optional extra wand-py and xprintidle (see above) dependencies

(when e.g. image processing is enabled via -p/--process option).

Also does a few things more and better, drawing all lessons from that old aura

project, which can finally be scrapped, I guess.

Actually, one missing bit there atm (2020-05-09) is various background-setting

methods from different DEs, as I've only used it with Enlightenment so far,

where it can set multiple background images in configurable ways via DBus

(using xrandr and sd-bus lib from systemd via ctypes).

Should be relatively trivial to support more DEs there by adding specific

commands for these, working more-or-less same as in the old script (and maybe

just copying methods from there), but these just need to be tested, as my

limited knowledge of interfaces in all these DEs is probably not up to date.

Jan 03, 2020

This is a fix for a common "bots hammering on all doors on the internet" issue,

applied in this case to nginx http daemon, where random bots keep creating

bunch of pointless server load by indexing or requesting stuff that they never

should bother with.

Example can be large dump of static files like distro packages mirror or any

kind of dynamic content prohibited by robots.txt, which nice bots tend to

respect, but dumb and malicious bots keep scraping over and over again without

any limits or restraint.

One way of blocking such pointless busywork-activity is to process access_log

and block IPs via ipsets, nftables sets or such, but this approach blocks ALL

content on http/https port instead of just hosts and URLs where such bots have

no need to be.

So ideally you'd have something like ipsets in nginx itself, blocking only "not

for bots" locations, and it actually does have that, but paywalled behind Nginx

Plus subscription (premium version) in keyval module, where dynamic blacklist

can be updated in-memory via JSON API.

Thinking about how to reimplement this as a custom module for myself, in some

dead-simple and efficient way, thought of this nginx.conf hack:

try_files /some/block-dir/$remote_addr @backend;

This will have nginx try to serve templated /some/block-dir/$remote_addr

file path, or go to @backend location if it doesn't exist.

But if it does exist, yet can't be accessed due to filesystem permissions, nginx

will faithfully return "403 Forbidden", which is pretty much the desired result for me.

Except this is hard to get working with autoindex module (i.e. have nginx

listing static filesystem directories), looks up paths relative to root/alias

dirs, has ton of other limitations, and is a bit clunky and non-obvious.

So, in the same spirit, implemented "stat_check" command via nginx-stat-check module:

load_module /usr/lib/nginx/modules/ngx_http_stat_check.so;

...

location /my-files {

  alias /srv/www/my-files;

  autoindex on;

  stat_check /tmp/blacklist/$remote_addr;

}

This check runs handler on NGX_HTTP_ACCESS_PHASE that either returns NGX_OK or

NGX_HTTP_FORBIDDEN, latter resulting in 403 error (which can be further handled

in config, e.g. via custom error page).

Check itself is what it says on the tin - very simple and lightweight stat()

call, checking if specified path exists, and - as it is for blacklisting -

returning http 403 status code if it does when accessing that location block.
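As a rough illustration of the logic described above, here's a minimal Python sketch (not the module's actual C code): expand variables in the configured path, do one stat(), map the result to a status. The stat_check() function name, the variables dict and the choice to treat any stat() failure as "not blocked" are all assumptions made for this example.

```python
import os

def stat_check(path_template, variables):
    """Return 403 if the expanded path exists, 200 otherwise (illustrative only)."""
    path = path_template
    # Substitute longer names first, so "$remote_addr" can't clobber "$remote_addr_x"
    for name in sorted(variables, key=len, reverse=True):
        path = path.replace("$" + name, variables[name])
    try:
        os.stat(path)  # one stat() call, only success/failure matters
    except OSError:
        return 200  # assumption: any failure (ENOENT, EACCES, ...) means "not blocked"
    return 403
```

E.g. stat_check("/tmp/blacklist/$remote_addr", {"remote_addr": "10.0.0.1"}) would return 403 only if the /tmp/blacklist/10.0.0.1 path exists.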

This also allows using any of the vast number of nginx variables,

including those matched by regexps (e.g. from location URL), mapped via "map",

provided by modules like bundled realip, geoip, ssl and such, any third-party

ones or assembled via "set" directive, i.e. good for use with pretty much any

parameter known to nginx.

stat() looks up entry in a memory-cached B-tree or hash table dentry list

(depends on filesystem), with only a single syscall and minimal overhead

possible for such operation, except for maybe pure in-nginx-memory lookups, so

might even be better solution for persistent blacklists than keyval module.

Custom dynamic nginx module .so is very easy to build, see "Build / install"

section of README in the repo for exact commands.

Also wrote corresponding nginx-access-log-stat-block script that maintains such

filesystem-database blacklist from access.log-like file (only cares about

remote_addr being first field there), produced for some honeypot URL, e.g. via:

log_format stat-block '$remote_addr';

location = /distro/package/mirror/open-and-get-banned.txt {

  alias /srv/pkg-mirror/open-and-get-banned.txt;

  access_log /run/nginx/bots.log stat-block;

}

Add corresponding stat_check for dir that script maintains in "location" blocks

where it's needed, and that's it.

tmpfs (e.g. at /tmp or /run) can be used to keep such block-list completely in

memory, or otherwise I'd recommend using good old ReiserFS (3.6 one that's in

mainline linux) with tail packing, which is enabled by default there, as it's

incredibly efficient with large number of small files and lookups for them.

Files created by nginx-access-log-stat-block contain blocking timestamp and

duration (which are used to unblock addresses after --block-timeout), and are

only 24B in size, so ReiserFS should pack these right into inodes (file metadata

structure) instead of allocating extents and such (as e.g. ext4 would do),

being pretty much as efficient for such data as any disk-backed format can

possibly be.
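To make the idea of such a filesystem-database more concrete, here's a small Python sketch of how a block-list like that can be maintained. It is not the code of nginx-access-log-stat-block, and the file layout used here (two packed doubles, timestamp and duration) is a made-up assumption, as are all function names.

```python
import os
import struct
import time

# Hypothetical on-disk layout: block timestamp + block duration, two doubles
ENTRY = struct.Struct("<dd")

def block(block_dir, addr, duration, now=None):
    """Create or refresh the block-file for one address."""
    now = time.time() if now is None else now
    with open(os.path.join(block_dir, addr), "wb") as dst:
        dst.write(ENTRY.pack(now, duration))

def block_from_log(block_dir, lines, duration, now=None):
    """Block the first whitespace-separated field of every log line."""
    for line in lines:
        fields = line.split()
        # Skip empty lines and anything that can't be a plain file name
        if not fields or "/" in fields[0] or fields[0] in (".", ".."):
            continue
        block(block_dir, fields[0], duration, now)

def expire(block_dir, now=None):
    """Remove entries whose timestamp + duration has passed, return their names."""
    now = time.time() if now is None else now
    removed = []
    for name in sorted(os.listdir(block_dir)):
        path = os.path.join(block_dir, name)
        with open(path, "rb") as src:
            data = src.read(ENTRY.size)
        if len(data) != ENTRY.size:
            continue  # not written by block(), leave it alone
        ts, duration = ENTRY.unpack(data)
        if ts + duration <= now:
            os.unlink(path)
            removed.append(name)
    return removed
```

Running block_from_log() on new lines of the honeypot log and expire() on a timer would be enough to keep a directory like this in sync, with nginx only ever doing stat() on it.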

Note that if you're reading this post in some future, aforementioned premium

"keyval" module might be already merged into plebeian open-source nginx release,

allowing on-the-fly highly-dynamic configuration from external tools out of the box,

and is probably good enough option for this purpose, if that's the case.

Jan 03, 2020

Doesn't seem to be a common thing to pay attention to outside of graphic/UI/UX

design world, but if you stare at the code for significant chunk of the day,

it's a good thing to customize/optimize at least a bit.

I've always used var-width fonts for code in emacs, and like them vs monospace

ones for general readability and being much more compact, but noticed one major

shortcoming over the years: some punctuation marks are very hard to tell apart.

While this is not an issue in free-form text, where you don't care much whether

some tiny dot is a comma or period, it's essential to differentiate these in code.

And in fonts which I tend to use (like Luxi Sans or Liberation Sans), "." and

"," in particular tend to differ by something like 1-2 on-screen pixels, which

is bad, as I've noticed straining to distinguish the two sometimes, or putting

one instead of another via typo and not noticing, because it's hard to notice.

It's the kind of thing that's like a thorn that always torments, but is easy to

fix once you identify it as a problem and actually look into it.

Emacs in particular allows replacing one char with another visually:

(unless standard-display-table

  (setq standard-display-table (make-display-table)))

(aset standard-display-table ?, (vconcat "˾"))

(aset standard-display-table ?. (vconcat "❟"))

Most fonts have ton of never-used-in-code unicode chars to choose distinctive

replacements from, which are easy to browse via gucharmap or such.

One problem can be emacs using faces with different font somewhere after such

replacement, which might not have these chars in them, so will garble these,

but that's rare and also easy to fix (via e.g. custom-set-faces).

Another notable (original) use of this trick - "visual tabs":

(aset standard-display-table ?\t (vconcat "˙ "))

I.e. marking each "tab" character by a small dot, which helps a lot with telling

apart indentation levels, esp. in langs like python where it matters just as

much as commas vs periods.

Recently also wanted to extend this to colons and semicolons, which are just as

hard to tell apart as these dots (: vs ;), but replacing them with something

weird everywhere seems to be more jarring, and there's a proper fix for all of

these issues - edit the glyphs in the font directly.

fontforge is a common app for that, and for same-component ".:,;" chars there's

an easy way to scale them, copy-paste parts between glyphs, positioning them

precisely at the same levels.

Scaling outlines by e.g. 200% makes it easy to tell them apart by itself,

but I've also found it useful to make a dot slightly horizontally stretched,

while leaving comma vertical - eye immediately latches onto this distinction,

unlike with just "dot" vs "dot plus a tiny beard".

It's definitely less of an issue in monospace fonts, and there seems to be a

large selection of "coding fonts" optimized for legibility, but still worth

remembering that glyphs in these are not immutable at all - you're not stuck

with whatever aesthetics-vs-legibility trade-offs their creators made for all

chars, and can easily customize them according to your own issues and needs,

same as with editor, shell/terminal, browser or desktop environment.

Dec 30, 2019

I've been hopping between browsers for as long as I remember using them,

and in this last iteration, had a chance to set up waterfox from scratch.

"Waterfox" is a fork of Mozilla Firefox Browser with no ads, tracking and other

user-monetization nonsense, and with mandatory extension signing disabled.

So thought to collect my (incomplete) list of hacks which had to be applied

on top and around it, to make the thing work like I want it to, though it's

impossible to remember them all, especially most obvious must-have stuff that

you don't even think about.

This list should get outdated fast, and probably won't be updated,

so be sure to check whether stuff on it is still relevant first.

waterfox itself, built from stable source, with any kind of local extra

patches applied and tracked in that build script (Arch PKGBUILD).

Note that it comes in two variants - Current and Classic, where "Classic" is

almost certainly not the one you want, unless you know exactly what you want it

for (old pre-webext addons, some integration features, memory constraints, etc).

Key feature for me, also mentioned above, is that it allows installing any

modified extensions - want to be able to edit anything I install, plus add my

own without asking mozilla for permission.

Removal of various Mozilla's adware and malware from it is also a plus.

Oh, and it has Dark Theme out of the box too!

Build takes a while and uses ~8G of RAM for linking libxul.so.

Not really a problem though - plenty of spare cpu in the background and overnight.

Restrictive AppArmor profile for waterfox-current

Modern browsers are very complex bloatware with ton of bugs and impossible to

secure, despite devs' monumental efforts to contain and patch these pillars of

crap from leaking, so something like this is absolutely essential.

I use custom AppArmor profile as I've been writing them for years,

but something pre-made like firejail would probably work too.

CGroups for controlling resource usage.

Again, browsers are notorious for this. Nuff said.

See earlier cgroup-v2-resource-limits-for-apps post here and cgrc tool

for more info, as well as kernel docs on cgroup-v2 (or cgroup-v1 docs if

you still use these).

They are very simple to use, even via something like mkdir ff && echo 3G >

ff/memory.max && pgrep waterfox > ff/cgroup.procs, without any extra tools.
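Same thing can be sketched in Python, in case it needs to be done from a script. This is only an illustration under a few assumptions: limit_memory() and its parameters are names made up here, cgroup_root is expected to be a cgroup-v2 directory that you can write to, and the memory controller has to be enabled for its children, otherwise memory.max won't exist there.

```python
import os

def limit_memory(cgroup_root, name, memory_max, pids):
    """Create a child cgroup, set memory.max in it and move processes there."""
    cg = os.path.join(cgroup_root, name)
    os.makedirs(cg, exist_ok=True)
    with open(os.path.join(cg, "memory.max"), "w") as dst:
        dst.write(f"{memory_max}\n")
    for pid in pids:
        # Kernel docs state that only one process is migrated per write(2),
        # so cgroup.procs is opened and written separately for each pid
        with open(os.path.join(cg, "cgroup.procs"), "w") as dst:
            dst.write(f"{pid}\n")
    return cg
```

Something like limit_memory("/sys/fs/cgroup/some/delegated/dir", "ff", "3G", pids) would be the intended use, with the path there being a placeholder for wherever you have write access in the cgroup tree.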

ghacks user.js - basic cleanup of Mozilla junk and generally useless features.

There are some other similar user.js templates/collections,

see compare-user.js and Tor Browser user.js hacks somewhere.

Be sure to start from "what is user.js" page, and go through the whole thing,

overriding settings there that don't make sense for you.

I would suggest installing it not as a modified user.js, but as a vendor.js

file without any modification, which would make it easier to diff and maintain

later, as it won't copy all its junk to your prefs.js forever, and just use

values as about:config defaults instead.

vendor.js files are drop-in .js files in dir like

/opt/waterfox-current/browser/defaults/preferences, which are read

last-to-first alphabetically, so I'd suggest putting ghacks user.js as

"%ghacks.js" or such there, and it'll override anything.

Important note: replace user_pref( with pref( there, which should be

easy to replace back for diffs/patches later.
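That replacement is trivial to script both ways, for example with a couple of Python helpers like these (function names are made up for this sketch, and only calls at the start of a line are touched, so commented-out prefs stay as they are):

```python
import re

def to_vendor_js(text):
    """Turn user_pref(...) lines into pref(...) for use as a vendor.js file."""
    return re.sub(r"^([ \t]*)user_pref\(", r"\1pref(", text, flags=re.M)

def to_user_js(text):
    """Reverse of to_vendor_js(), e.g. for diffing against upstream user.js."""
    return re.sub(r"^([ \t]*)pref\(", r"\1user_pref(", text, flags=re.M)
```

Since the second function undoes the first one exactly for such files, diffs against a newer upstream user.js can be made on the converted-back copy.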

I'm used to browser always working in "Private Mode", storing anything I want

to remember in bookmarks or text files for later reference, and never

remembering anything between browser restarts, so most severe UI changes there

make sense for me, but might annoy someone more used to having e.g. urlbar

suggestions, persistent logins or password storage.

Notable user.js tweaks:

user_pref("privacy.resistFingerprinting.letterboxing", false);

Obnoxious privacy setting in ghacks to avoid fingerprinting by window size.

It looks really ugly and tbh I don't care that much about privacy.

user_pref("permissions.default.shortcuts", 2);

Prevents sites from overriding basic browser controls.

Lower audio volume - prevents sites from deafening you every time:

user_pref("media.default_volume", "0.1");

user_pref("media.volume_scale", "0.01");

Various tabs-related behavior - order of adding, switching, closing, etc:

user_pref("browser.tabs.closeWindowWithLastTab", false);

user_pref("browser.tabs.loadBookmarksInTabs", true);

user_pref("browser.tabs.insertAfterCurrent", true);

user_pref("browser.ctrlTab.recentlyUsedOrder", false);

Disable all "where do you want to download?" dialogs, disable opening .mp3

and such in browser, disable "open with" (won't work from container anyway):

user_pref("browser.download.useDownloadDir", true);

user_pref("browser.download.forbid_open_with", true);

user_pref("media.play-stand-alone", false);

See also handlers.json file for tweaking filetype-specific behavior.

Disable media autoplay: user_pref("media.autoplay.default", 5);

Disable all web-notification garbage:

user_pref("dom.webnotifications.enabled", false);

user_pref("dom.webnotifications.serviceworker.enabled", false);

Disable browser-UI/remote debugging in user.js, so that you'd have to enable

it every time on per-session basis, when it's (rarely) needed:

user_pref("devtools.chrome.enabled", false);

user_pref("devtools.debugger.remote-enabled", false);

Default charset to utf-8 (it's 2019 ffs!):

user_pref("intl.charset.fallback.override", "utf-8");

Disable as many webapis and protocols that I never use as possible:

user_pref("permissions.default.camera", 2);

user_pref("permissions.default.microphone", 2);

user_pref("geo.enabled", false);

user_pref("permissions.default.geo", 2);

user_pref("network.ftp.enabled", false);

user_pref("full-screen-api.enabled", false);

user_pref("dom.battery.enabled", false);

user_pref("dom.vr.enabled", false);

Note that some of such APIs are disabled by ghacks, but not all of them,

as presumably some people want them, sometimes, maybe, not sure why.

Reader Mode (about:reader=<url>, see also keybinding hack below):

user_pref("reader.color_scheme", "dark");

user_pref("reader.content_width", 5);

Disable lots of "What's New", "Greetings!" pages, "Are you sure?" warnings,

"pocket" (malware) and "identity" (Mozilla tracking account) buttons:

user_pref("browser.startup.homepage_override.mstone", "ignore");

user_pref("startup.homepage_welcome_url", "");

user_pref("startup.homepage_welcome_url.additional", "");

user_pref("startup.homepage_override_url", "");

user_pref("browser.messaging-system.whatsNewPanel.enabled", false);

user_pref("extensions.pocket.enabled", false);

user_pref("identity.fxaccounts.enabled", false);

user_pref("browser.tabs.warnOnClose", false);

user_pref("browser.tabs.warnOnCloseOtherTabs", false);

user_pref("browser.tabs.warnOnOpen", false);

user_pref("full-screen-api.warning.delay", 0);

user_pref("full-screen-api.warning.timeout", 0);

Misc other stuff:

user_pref("browser.urlbar.decodeURLsOnCopy", true);

user_pref("browser.download.autohideButton", false);

user_pref("accessibility.typeaheadfind", false); - disable "Find As You Type"

user_pref("findbar.highlightAll", true);

user_pref("clipboard.autocopy", false); - Linux Xorg auto-copy

user_pref("layout.spellcheckDefault", 0);

user_pref("browser.backspace_action", 2); - 2=do-nothing

user_pref("general.autoScroll", false); - middle-click scrolling

user_pref("ui.key.menuAccessKey", 0); - alt-key for menu bar on top

Most other stuff I have there are overrides for ghacks vendor.js file,

so again, be sure to scroll through that one and override as necessary.

omni.ja keybinding hacks - browser quit key and reader key.

Linux-default Ctrl+Q key is too close to Ctrl+W (close tab), and is

frustrating to mis-press and kill all your tabs sometimes.

Easy to rebind to e.g. Ctrl+Alt+Shift+Q by unpacking

/opt/waterfox-current/omni.ja zip file and changing stuff there.

File you want in there is chrome/browser/content/browser/browser.xul,

set modifiers="accel,shift,alt" for key_quitApplication there,

and remove disabled="true" from key_toggleReaderMode (also use

modifiers="alt" for it, as Ctrl+Alt+R is already used for browser restart).

zip -qr0XD ../omni.ja * command can be used to pack stuff back into "omni.ja".

After replacing omni.ja, do rm -Rf ~/.cache/waterfox/*/startupCache/ too.
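If "zip" binary is not around, roughly the same repacking can be sketched with Python's zipfile module - storing files without compression and without separate directory entries, similar to -0 and -D options above. I haven't checked that browser accepts an archive made this way, so treat it as an assumption; pack_omni_ja() name is made up, and dst_path should be outside of src_dir.

```python
import os
import zipfile

def pack_omni_ja(src_dir, dst_path):
    """Pack all files under src_dir into an uncompressed zip at dst_path."""
    with zipfile.ZipFile(dst_path, "w", compression=zipfile.ZIP_STORED) as dst:
        for root, dirs, files in os.walk(src_dir):
            dirs.sort()  # walk subdirectories in a stable order
            for name in sorted(files):
                path = os.path.join(root, name)
                # Store paths relative to src_dir, files only, no dir entries
                dst.write(path, arcname=os.path.relpath(path, src_dir))
```

Usage would be something like pack_omni_ja("omni-unpacked", "omni.ja"), with the first path being whatever directory the original file was unpacked into.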

Note that bunch of other non-hardcoded stuff can also be changed there easily,

see e.g. shallowsky.com modifying-omni.ja post.

Increase browser UI font size and default page fonts.

First of all, user.js needs

user_pref("toolkit.legacyUserProfileCustomizations.stylesheets", true);

line to easily change UI stuff from profile dir (instead of omni.ja or such).

Then <profile>/chrome/userChrome.css can be used to set UI font size:

* { font-size: 15px !important; }

Page font sizes can be configured via Preferences or user.js:

user_pref("font.name.monospace.x-western", "Liberation Mono");

user_pref("font.name.sans-serif.x-western", "Liberation Sans");

user_pref("font.name.serif.x-western", "Liberation Sans");

user_pref("font.size.monospace.x-western", 14);

user_pref("font.size.variable.x-western", 14);

I also keep pref("browser.display.use_document_fonts", 0); from ghacks

enabled, so it's important to set some sane defaults here.

Hide all "search with" nonsense from URL bar and junk from context menus.

Also done via userChrome.css - see "UI font size" above for more details:

#urlbar-results .search-one-offs { display: none !important; }

If context menus (right-click) have options you never use,

they can also be removed:

#context-bookmarklink, #context-searchselect,

#context-openlinkprivate { display: none !important; }

See UserChrome.css_Element_Names/IDs page on mozillazine.org for IDs of

these, or enable "browser chrome" + "remote" debugging (two last ones) in

F12 - F1 menu and use Ctrl+Shift+Alt+I to inspect browser GUI (note that all

menu elements are already there, even if not displayed - look them up via css

selectors).

Remove crazy/hideous white backgrounds blinding you every time you open

browser windows or tabs there.

AFAIK this is not possible to do cleanly with extension only - needs

userChrome.css / userContent.css hacks as well.

All of these tweaks I've documented in mk-fg/waterfox#new-tab, with end

result being removing all white backgrounds in new browser/window/tab pages

and loading 5-liner html with static image background there.

Had to make my own extension, as all others doing this are overcomplicated,

and load background js into every tab, use angular.js and bunch of other junk.

Extensions!

I always install and update these manually after basic code check

and understanding how they work, as it's fun and helps to keep the bloat

as well as any unexpected surprises at bay.

Absolutely essential multipurpose ones:

uBlock Origin

Be sure to also check how to add "My Filters" there, as these are just as

useful as adblocking for me.

Modern web pages are bloated with useless headers, sidebars, stars, modal

popups, social crap, buttons, etc - just as much as with ads, so it's very

useful to remove all this shit, except for actual content.

For example - stackoverflow:

stackoverflow.com## .top-bar

stackoverflow.com## #left-sidebar

stackoverflow.com## #sidebar

stackoverflow.com## #js-gdpr-consent-banner

stackoverflow.com## .js-dismissable-hero

Just use Ctrl+Shift+C and the inspector tree to find junk elements and add their

classes/ids there on per-site basis like that, they very rarely change.

uMatrix - best NoScript-type addon.

Blocks all junk-js, tracking and useless integrations with minimal setup,

and is very easy to configure for sites on-the-fly.

General usability ones:

Add custom search engine - I use these via urlbar keywords all the time

(e.g. "d some query" for ddg), not just for search, and have few dozen of

them, all created via this handy extension.

Alternative can be using https://ready.to/search/en/ - which also generates

OpenSearch XML from whatever you enter there.

Firefox search is actually a bit limited wrt how it builds resulting URLs

due to forced encoding (e.g. can't transform "ghr mk-fg/blog" to github repo

URL), which can be fixed via an external tool - see mk-fg/waterfox#redirectorml

for more details.
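To show what that forced encoding does to such a query, here's a tiny Python illustration. It is not how the browser or the linked tool are implemented, build_url() is a made-up helper, and {searchTerms} is the OpenSearch template placeholder.

```python
from urllib.parse import quote

def build_url(template, terms, encode_slash=True):
    """Substitute search terms into an OpenSearch-style URL template."""
    safe = "" if encode_slash else "/"
    return template.replace("{searchTerms}", quote(terms, safe=safe))
```

With encode_slash=True, "mk-fg/blog" ends up as "mk-fg%2Fblog" in the URL, which is not a usable repo path, while encode_slash=False keeps the slash intact.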

Mouse Gesture Events - simplest/fastest one for gestures that I could find.

Some other ones are quite appalling wrt bloat they bring in, unlike this one.

HTTPS by default - better version of "HTTPS Everywhere" - much simpler

and more well-suited for modern web, where defaulting to http:// is just

wrong, as everyone and their dog are either logging these or putting

ads/malware into them on-the-fly.

Proxy Toggle with some modifications (see mk-fg/waterfox#proxy-toggle-local).

Allows toggling proxy on/off in one keypress or click, with good visual

indication, and is very simple internally - only does what it says on the tin.

force-english-language - my fix for otherwise-useful ghacks'

anti-fingerprinting settings confusing sites into thinking that I want them

to guess language from my IP address.

This is never a good thing, so this simple 10-js-lines addon adds back

necessary headers and JS values to make sites always use english.

flush-site-data - clears all stuff that sites store in browser without

needing to restart it. Useful to log out of all sites and opt out of all tracking.

Handling for bittorrent magnet URLs.

Given AppArmor container (see above), using xdg-open for these is quite

"meh" - opens up a really fat security exception.

But there is another - simpler (for me at least) - way, to use some trivial

wrapper binary - see all details in mk-fg/waterfox#url-handler-c.

RSS and Atom feeds.

Browsers stopped supporting these, but they're still useful for some

periodic content.

Used to work around this limitation via extensions (rendering feeds in

browser) and webapps like feedjack, but it's not 2010 anymore, and remaining

feed contents are mostly good for notifications or for download links

(e.g. podcast feeds), both of which don't need browser at all, so ended up

making and using external tools for that - rss-get and riet.

Was kinda surprised to be able to work around most usability issues I had with

FF so far, without any actual C++ code patches, and mostly without patches at

all (keybindings kinda count, but can be done without rebuild).

People love to hate on browsers (me too), but looking at any of the issues above

(like "why can't I do X easier?"), there's almost always an open bug (which you

can subscribe to), often with some design, blockers and a roadmap even, so can

at least understand how these hang around for years in such a massive project.

Also, comparing it to ungoogled-chromium that I've used for about a year before

migrating here, FF still offers much more customization and power-user-friendliness,

even if not always out of the box, and not as much as it used to.

Oct 05, 2019

As a bit of a follow-up on earlier post about cgroup-v2 resource control -

what if you want to impose cgroup limits on some subprocess within a

flatpak-managed scope?

Had this issue with Steam games in particular, where limits really do come in

handy, but on a regular amd64 distro, only sane way to run Steam would be via

flatpak or something like that (32-bit container/chroot).

flatpak is clever enough to cooperate with systemd and flatpak --user run

com.valvesoftware.Steam creates e.g. flatpak-com.valvesoftware.Steam-165893.scope

(number there representing initial pid), into which it puts itself and by

extension all things related to that particular app.

Scope itself can be immediately limited via e.g. systemctl set-property

flatpak-com.valvesoftware.Steam-165893.scope MemoryHigh=4G, and that can be

good enough for most purposes.

Specific issue I had though was with the game connecting and hanging forever on

some remote API, and wanted to limit network access for it without affecting

Steam, so was figuring a way to move it from that flatpak.scope to its own one.

Since flatpak runs apps in separate filesystem namespaces (among other

restrictions - see "flatpak info --show-permissions ..."), its apps - unless

grossly misconfigured by developers - don't have access to pretty much anything

on the original rootfs, as well as linux API mounts such as /sys or dbus,

so using something as involved as "systemd-run" in there to wrap things into a

new scope is rather tricky.

Here's how it can be done in this particular case:

Create a scope for a process subtree before starting it, e.g. in a wrapper

before "flatpak run ...":

#!/bin/bash

systemd-run -q --user --scope --unit game -- sleep infinity &

trap "kill $!" EXIT

flatpak run com.valvesoftware.Steam "$@"

("sleep infinity" process there is just to keep the scope around)

Allow specific flatpak app to access that specific scope (before starting it):

% systemd-run -q --user --scope --unit game -- sleep infinity &

% cg=$(systemctl -q --user show -p ControlGroup --value -- game.scope)

% kill %1

% flatpak --user override --filesystem "$cg" com.valvesoftware.Steam

Overrides can be listed later via --show option or tweaked directly via ini

files in ~/.local/share/flatpak/overrides/

Add wrapper to affected subprocess (running within flatpak container) that'd

move it into such special scope:

#!/bin/bash

echo $$ > /sys/fs/cgroup/user.slice/.../game.scope/cgroup.procs

exec /path/to/original/app "$@"

For most apps, replacing original binary with that is probably good enough.

With Steam in particular, this is even easier to add via "Set Launch Command..."

in properties of a specific game, and use something like /path/to/wrapper

%command% there, which will also pass $1 as the original app path to it.

(one other cool use for such wrappers with Steam, can be killing it after app

exits when it was started with "-silent -applaunch ..." directly, as there's

no option to stop it from hanging around afterwards)
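Such wrapper doesn't have to be a shell script of course. Here's a Python sketch of the same thing, assuming python is available inside the container. CGROUP_PROCS path is a placeholder that has to be replaced with the real cgroup.procs path of game.scope, and GAME_CGROUP_PROCS env var is something made up for this example to override it.

```python
import os
import sys

# Placeholder - real path to cgroup.procs of game.scope is system-specific
CGROUP_PROCS = "/path/to/game.scope/cgroup.procs"

def move_to_cgroup(procs_path, pid=None):
    """Write pid (this process by default) into the specified cgroup.procs file."""
    pid = os.getpid() if pid is None else pid
    with open(procs_path, "w") as dst:
        dst.write(f"{pid}\n")
    return pid

def main(argv):
    """Move this process into the cgroup, then replace it with the wrapped command."""
    move_to_cgroup(os.environ.get("GAME_CGROUP_PROCS", CGROUP_PROCS))
    # exec keeps the same pid, so the command stays in the cgroup
    os.execvp(argv[1], argv[1:])

# Only act when some command to wrap was passed on the command line
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv)
```

Using os.execvp() there instead of spawning a child avoids having an extra wrapper process hanging around while the app runs.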

And that should do it.

To impose any limits on game.scope, some pre-configured slice unit (or hierarchy

of such) can be used, and systemd-run to have something like "--slice games.slice"

(see other post for more on that).

Also, as I wanted to limit network access except for localhost (which is used by

steam api libs to talk to main process), needed some additional firewall configuration.

iptables can filter by cgroup2 path, so a rule with "-m cgroup --path ..." can

work for that, but since I've switched to nftables here a while ago, couldn't do that,

as kernel patch to implement cgroup2 filtering there apparently fell through

the cracks back in 2016 and was never revisited :(

Solution I've ended up with instead was to use cgroup-attached eBPF filter:

(nice to have so many options, but that's a whole different story at this point)