There's one great app -

bournal ("when

nobody cares what you have to say!"). Essentialy it's a bash script, providing

a simple interface to edit and encrypt journal entries.

Idea behind it is quite opposite of blogging - keep your thoughts as far away

from everyone as possible. I've used the app for quite a while, ever since

I've noticed it among freshmeat release announcements. It's useful to keep

some thoughts or secrets (like keys or passwords) somewhere, aside from the

head, even if you'd never read these again.

Anyway, encryption there is done by the means of ccrypt utility, which is sorta CLI for openssl. I don't get the rationale behind using it instead

of openssl directly (like "openssl enc ..."), and there are actually even better

options, like gnupg, which won't need a special logic

to keep separate stream-cipher password, like it's done in bournal.

So today, as I needed bournal on exherbo laptop, I've faced the need to get

ccrypt binary just for that purpose again. Worse yet, I have to recall and

enter a password I've used there, and I don't actually need it to just encrypt

an entry... as if assymetric encryption, gpg-agent, smartcards and all the

other cool santa helpers don't exist yet.

I've decided to hack up my "ccrypt" which will use all-too-familiar gpg and

won't ask me for any passwords my agent or scd already know, and in an hour or

so, I've succeeded.

And

here goes - ccrypt, relying

only on "gpg -e -r $EMAIL" and "gpg -d". EMAIL should be in the env, btw.

It actually works as ccencrypt, ccdecrypt, ccat as well, and can do recursive

ops just like vanilla ccrypt, which is enough for bournal.

If you're downloading stuff off the 'net via bt like me, then TPB is probably quite a familiar place to you.

Ever since the '09 trial there were a problems with TPB trackers

(tracker.thepiratebay.org) - the name gets inserted into every .torrent yet it

points to 127.0.0.1.

Lately, TPB offshot, tracker.openbittorrent.com suffers from the same problem

and actually there's a lot of other trackers in .torrent files that point

either at 0.0.0.0 or 127.0.0.1 these days.

As I use

rtorrent, I have an issue with

this - rtorrent seem pretty dumb when it comes to tracker filtering so it

queries all of them on a round-robin basis, without checking where it's name

points to or if it's down for the whole app uptime, and queries take quite a

lot of time to timeout, so that means at least two-minute delay in starting

download (as there's TPB trackers first), plus it breaks a lot of other things

like manual tracker-cycling ("t" key), since it seem to prefer only top-tier

trackers and these are 100% down.

Now the problem with http to localhost can be solved with the firewall, of

course, although it's an ugly solution, and 0.0.0.0 seem to fail pretty fast

by itself, but stateless udp is still a problem.

Another way to tackle the issue is probably just to use a client that is

capable of filtering the trackers by ip address, but that probably means some

heavy-duty stuff like azureus or vanilla bittorrent which I've found pretty

buggy and also surprisingly heavy in the past.

So the easiest generic solution (which will, of course, work for rtorrent) I've

found is just to process the .torrent files before feeding them to the leecher

app. Since I'm downloading these via firefox exclusively, and there I use

FlashGot (not the standard "open with" interface since

I also use it to download large files on remote machine w/o firefox, and afaik

it's just not the way "open with" works) to drop them into an torrent bin via

script, it's actually a matter of updating the link-receiving script.

Bencode is not a mystery, plus it's

pure-py implementation is actually the reference one, since it's a part of

original python bittorrent client, so all I basically had to do is to rip

bencode.py from it and paste the relevant part into the script.

The right way might've been to add dependency on the whole bittorrent client,

but it's an overkill for such a simple task plus the api itself seem to be

purely internal and probably a subject to change with client releases anyway.

So, to the script itself...

# URL checker

def trak_check(trak):

if not trak: return False

try: ip = gethostbyname(urlparse(trak).netloc.split(':', 1)[0])

except gaierror: return True # prehaps it will resolve later on

else: return ip not in ('127.0.0.1', '0.0.0.0')

# Actual processing

torrent = bdecode(torrent)

for tier in list(torrent['announce-list']):

for trak in list(tier):

if not trak_check(trak):

tier.remove(trak)

# print >>sys.stderr, 'Dropped:', trak

if not tier: torrent['announce-list'].remove(tier)

# print >>sys.stderr, 'Result:', torrent['announce-list']

if not trak_check(torrent['announce']):

torrent['announce'] = torrent['announce-list'][0][0]

torrent = bencode(torrent)

That, plus the simple "fetch-dump" part, if needed.

No magic of any kind, just a plain "announce-list" and "announce" urls check,

dropping each only if it resolves to that bogus placeholder IPs.

I've wanted to make it as light as possible so no logging or optparse/argparse

stuff I tend cram everywhere I can, and the extra and heavy imports like

urllib/urllib2 are conditional as well. The only dependency is python (>=2.6)

itself.

Basic use-case is one of these:

% brecode.py < /path/to/file.torrent > /path/to/proccessed.torrent

% brecode.py http://tpb.org/torrents/myfile.torrent > /path/to/proccessed.torrent

% brecode.py http://tpb.org/torrents/myfile.torrent\

-r http://tpb.org/ -c "...some cookies..." -d /path/to/torrents-bin/

All the extra headers like cookies and referer are optional, so is the

destination path (dir, basename is generated from URL). My use-case in FlashGot

is this: "[URL] -r [REFERER] -c [COOKIE] -d /mnt/p2p/bt/torrents"

And there's the script itself.

Quick, dirty and inconclusive testing showed almost 100 KB/s -> 600 KB/s

increase on several different (two successive tests on the same file even with

clean session are obviously wrong) popular and unrelated .torrent files.

That's pretty inspiring. Guess now I can waste even more time on the TV-era

crap than before, oh joy ;)

From time to time I accidentally bump into new releases from the artists/bands

I listen to. Usually it happens on the web, since I don't like random radio

selections much, and quite a wide variety of stuff I like seem to ensure that

my

last.fm radio is a mess.

So, after accidentally seeing a few new albums for my collection, I've decided

to remedy the situation somehow.

Naturally, subscribing to something like an unfiltered flow of new music

releases isn't an option, but no music site other than last.fm out there knows

the acts in my collection to track updates for those, and last.fm doesn't seem

to have the functionality I need - just to list new studio releases from the

artists I listened to beyond some reasonable threshold, or I just haven't been

able to find it.

I thought of two options.

First is writing some script to submit my watch-list to some music site, so

it'd notify me somehow about updates to these.

Second is to query the updates myself, either through some public-available

APIs like last.fm, cddb, musicbrainz or even something like public atom feeds

from a music portals. It seemed like a pretty obvious idea, btw, yet I've

found no already-written software to do the job.

First one seemed easier, but not as entertaining as the second, plus I have

virtually no knowledge to pick a site which will be best-suited for that (and

I'd hate to pick a first thing from the google), and I'd probably have to

post-process what this site feeds me anyway. I've decided to stick with the

second way.

The main idea was to poll list of releases for every act in my collection, so

the new additions would be instantly visible, as they weren't there before.

Such history can be kept in some db, and an easy way to track such flow would

be just to dump db contents, ordered by addition timestamp, to an atom feed.

Object-db to a web content is a typical task for a web framework, so I chose to

use django as a basic template for the task.

Obtaining list of local acts for my collection is easy, since I prefer not to

rely on tags much (although I try to have them filled with the right data as

well), I keep a strict "artist/year_album/num_-_track" directory tree, so

it takes one readdir with minor post-processing for the names - replace

underscores with spaces, "..., The" to "The ...", stuff like that.

Getting a list of an already-have releases then is just one more listing for

each of the artists' dir.

To get all existing releases, there's cddb, musicbrainz and last.fm and co

readily available.

I chose to use

musicbrainz db (at least as the

first source), since it seemed the most fitting to my purposes, shouldn't be

as polluted as last.fm (which is formed arbitrarily from the tags ppl have in

the files, afaik) and have clear studio-whateverelse distinction.

There's handy

official py-api readily available, which I

query by name for the act, then query it (if found) for available releases

("release" term is actually from there).

The next task is to compare two lists to drop the stuff I already have (local

albums list) from the fetched list.

It'd also be quite helpful to get the release years, so all the releases which

came before the ones in the collection can be safely dropped - they certainly

aren't new, and there should actually be lots of them, much more than truly

new ones. Mbz-db have "release events" for that, but I've quickly found that

there's very little data in that section of db, alas. I wasn't happy about

dropping such an obviously-effective filter so I've hooked much fatter last.fm

db to query for found releases, fetching release year (plus some descriptive

metadata), if there's any, and it actually worked quite nicely.

Another thing to consider here is a minor name differences - punctuation,

typos and such. Luckily, python has a nice

difflib right in the stdlib, which can

compare the strings to get the fuzzy (to a defined threshold) matches, easy.

After that comes db storage, and there's not much to implement but a simple

ORM-model definition with a few unique keys and the django will take care of the

rest.

The last part is the data representation.

No surprises here either, django has

syndication feed framework module,

which can build db-to-feed mapping in a three lines of code, which is almost

too easy and non-challenging, but oh well...

Another great view into db data is the

django admin module,

allowing pretty filtering, searching and ordering, which is nice to have

beside the feed.

One more thing I've thought of is the caching - no need to strain free databases

with redundant queries, so the only non-cached data from these are the lists of

the releases which should be updated from time to time, the rest can be kept in

a single "seen" set of id's, so it'd be immediately obvious if the release was

processed and queried before and is of no more interest now.

To summarize: the tools are django,

python-musicbrainz2 and

pylast; last.fm and

musicbrainz - the data sources (although I might

extend this list); direct result - this feed.

Gave me several dozens of a great new releases for several dozen acts (out of

about 150 in the collection) in the first pass, so I'm stuffed with a new yet

favorite music for the next few months and probably any forseeable future (due

to cron-based updates-grab).

Problem solved.

Code is here, local acts'

list is provided by a simple generator that should be easy to replace for any

other source, while the rest is pretty simple and generic.

Feed (feeds.py) is hooked via django URLConf (urls.py) while the cron-updater

script is bin/forager.py. Generic settings like cache and api keys are in the

forager-app settings.py. Main processing code reside in models.py (info update

from last.fm) and mbdb.py (release-list fetching). admin.py holds a bit of

pretty-print settings for django admin module, like which fields should be

displayed, made filterable, searchable or sortable. The rest are basic django

templates.

While I'm on a vacation, I've decided to try out new distro I've been meaning

to for quite awhile -

exherbo.

Mostly it's the same source-based

gentoo-linux

derivative, yet it's not cloned from gentoo, like

funtoo or

sabayon, but built

from scratch by the guys who've seen gentoo and it's core concepts (like

portage or baselayout) as quite a stagnant thing.

While I don't share much of the disgust they have for gentoo legacy, the ideas

incorporated in that distro sound quite interesting, but I digress...

I don't believe in fairly common practice of "trying out" something new on a

VM - it just don't work for me, probably because I see it as a stupid and

posintless thing on some subconscious level, so I've decided to put it onto one

of my two laptops, which kinda needed a good cleanup anyway.

While at it, I thought it'd be a good idea to finally dump that stupid practice

of entering fs-password on boot, yet I did like the idea of encrypted fs,

especially in case of laptop, so I've needed to devise reasonably secure yet

paswordless boot method.

I use in-kernel LUKS-enabled dm-crypt (with the help of cryptsetup tool), and I need some initrd (or init-fs)

for LVM-root anyway.

There are lots of guides on how to do that with a key from a flash drive but I

don't see it particulary secure, since the key can always be copied from a

drive just about anywhere, plus I don't trust the flash drives much as they

seem to fail me quite often.

As an alternative to that, I have a smartcard-token, which can have a key that

can't be copied in any way.

Problem is, of course, that I need to see some key to decrypt filesystem, so

my idea was to use that key to sign some temporary data which then used to as

an encryption secret.

Furthermore, I thought it'd be nice to have a "dynamic key" that'd change on

every bootup, so even if anything would be able to snatch it from fs and use

token to sign it, that data would be useless after a single reboot.

Initrd software is obviously a

busybox, lvm and a

smartcard-related stuff.

Smartcard I have is Alladin eToken PRO 64k, it works fine with

OpenSC but not via

pcsc-lite, which seem to be preferred hardware

abstraction, but with

openct, which

seems a bit obsolete way. I haven't tried pcsc-lite in quite a while though,

so maybe now it supports eToken as well, but since openct works fairly stable

for me, I thought I'd stick with it anyway.

Boot sequence comes down to these:

- Mount pseudofs like proc/sys, get encrypted partition dev and real-rootfs

signature (for findfs tool, like label or uuid) from cmdline.

- Init openct, find smartcard in /sys by hardcoded product id and attach it to

openct.

- Mount persistent key-material storage (same /boot in my case).

- Read "old" key, replace it with a hashed version, aka "new key".

- Sign old key using smartcard, open fs with the resulting key.

- Drop this key from LUKS storage, add a signed "new" key to it.

- Kill openct processes, effectively severing link with smartcard.

- Detect and activate LVM volume groups.

- Find (findfs) and mount rootfs among currently-available partitions.

- Umount proc/sys, pivot_root, chroot.

- Here comes the target OS' init.

Took me some time to assemble and test this stuff, although it was fun playing

with linux+busybox mini-OS. Makes me somewhat wonder about what takes several

GiBs of space in a full-fledged OS when BB contains pretty much everything in

less than one MiB ;)

And it's probably a good idea to put some early check of /boot partition

(hashes, mounts, whatever) into booted OS init-scripts to see if it was not

altered in any significant way. Not really a guarantee that something nasty

weren't done to it (and then cleaned up, for example) plus there's no proof that

actual OS was booted up from it and the kernel isn't tainted in some malicious

way, but should be enough against some lame tampering or pranks, should these

ever happen.

Anyway, here's the repo with all the initrd

stuff, should anyone need it.

I'm a happy

git user for several years now, and the

best thing about it is that I've learned how VCS-es, and git in particular,

work under the hood.

It expanded (and in most aspects probably formed) my view on the time-series

data storage - very useful knowledge for wide range of purposes from log or

configuration storage to snapshotting, backups and filesystem

synchronisation. Another similar revelation in this area was probably

rrdtool, but still on much smaller scale.

Few years back, I've kept virtually no history of my actions, only keeping my

work in

CVS/

SVN, and even that was just for ease of

collaboration.

Today, I can easily trace, sync and transfer virtually everything that changes

and is important in my system - the code I'm working on, all the configuration

files, even auto-generated ones, tasks' and thoughts' lists, state-description

files like lists of installed packages (local sw state) and gentoo-portage

tree (global sw state), even all the logs and binary blobs like rootfs in

rsync-hardlinked backups for a few past months.

Git is a great help in these tasks, but what I feel lacking there is a

first - common timeline (spanning both into the past and the future) for

all these data series, and second - documentation.

Solution to the first one I've yet to find.

Second one is partially solved by commit-msgs, inline comments and even this

blog for the past issues and simple todo-lists (some I keep in plaintext, some

in tudu app) for the future.

Biggest problem I see here is the lack of consistency between all these:

todo-tasks end up as dropped lines in the git-log w/o any link to the past

issues or reverse link to the original idea or vision, and that's just the

changes.

Documentation for anything more than local implementation details and it's

history is virtually non-existant and most times it takes a lot of effort and

time to retrace the original line of thought, reasoning and purpose behind the

stuff I've done (and why I've done it like that) in the past, often with the

considerable gaps and eventual re-invention of the wheels and pitfalls I've

already done, due to faulty biological memory.

So, today I've decided to scour over the available project and task management

software to find something that ties the vcs repositories and their logs with

the future tickets and some sort of expanded notes, where needed.

Starting point was actually the

trac, which

I've used quite extensively in the past and present, and is quite fond of it's

outside simplicity yet fully-featured capabilities as both wiki-engine and

issue tracker. Better yet, it's py and can work with vcs.

The downside is that it's still a separate service and web-based one at that,

meaning that it's online-only, and that the content is anchored to the server

I deploy it to (not to mention underlying vcs). Hell, it's centralized and

laggy, and ever since git's branching and merging ideas of decentralized work

took root in my brain, I have issue with that.

It just looks like a completely wrong approach for my task, yet I thought that I

can probably tolerate that if there are no better options and then I've stumbled

upon Fossil VCS.

The name actually rang a bell, but from a

9p universe, where it's a name

for a vcs-like filesystem which was (along with venti, built on top of it) one

of two primary reasons I've even looked into

plan9 (the other being its 9p/styx

protocol).

Similary-named VCS haven't disappointed me as well, at least conceptually. The

main win is in the integrated ticket system and wiki, providing just the thing

I need in a distributed versioned vcs environment.

Fossil's overall design principles and concepts (plus this) are

well-documented on it's site (which is a just

a fossil repo itself), and the catch-points for me were:

- Tickets and wiki, of course. Can be edited locally, synced,

distributed, have local settings and appearance, based on tcl-ish

domain-specific

language.

- Distributed nature, yet rational position of authors on

centralization and synchronization topic.

- All-in-one-static-binary approach! Installing hundreds of git

binaries to every freebsd-to-debian-based system, was a pain, plus

I've ended up with 1.4-1.7 version span and some features (like "add

-p") depend on a whole lot of stuff there, like perl and damn lot of

it's modules. Unix-way is cool, but that's really more portable and

distributed-way-friendly.

- Repository in a single package, and not just a binary blob, but a

freely-browsable sqlite db. It certainly is a

hell lot more convenient than path with over nine thousand blobs with

sha1-names, even if the actual artifact-storage here is built

basically the same way. And the performance should be actually better

than the fs - with just index-selects BTree-based sqlite is as fast

as filesystem, but keeping different indexes on fs is by

sym-/hardlinking, and that's a pain that is never done right on fs.

- As simple as possible internal blobs' format.

- Actual symbolics and terminology. Git is a faceless tool, Fossil have

some sort of a style, and that's nice ;)

Yet there are some things I don't like about it:

- HTTP-only sync. In what kind of twisted world that can be better than

ssh+pam or direct access? Can be fixed with a wrapper, I guess, but

really, wtf...

- SQLite container around generic artifact storage. Artifacts are pure

data with a single sha1sum-key for it, and that is simple, solid and

easy to work with anytime, but wrapped into sqlite db it suddenly

depends on this db format, libs, command-line tool or language

bindings, etc. All the other tables can be rebuilt just from these

blobs, so they should be as accessible as possible, but I guess

that'd violate whole single-file design concept and would require a

lot of separate management code, a pity.

But that's nothing more than a few hours' tour of the docs and basic hello-world

tests, guess it all will look different after I'll use it for a while, which I'm

intend to do right now. In the worst case it's just a distributed issue

tracker + wiki with cli interface and great versioning support in one-file

package (including webserver) which is more than I can say about trac, anyway.

Last month I've been busy setting up a monitoring system at work.

Mostly it's the graphs with dynamic data like cpu/mem/io/net loads and

application-specific stats (which I'll probably get around to describing

sometime later), for which there is a nice RRD solutions (I've used

cacti +

snmpd +

my python

extensions +

pyrrd +

rrdtool directly), but there was also one specific

task of setting up websites' http-availability monitoring, spread on several

shared-hosting servers.

There's about 4k of such websites and the data needed is close to boolean -

whether site returns http code below 500 or it's considered "down", but it'd

have been nice to know the code it returns.

Plus, of course, this responses have to be logged, so availability for any

specific period can be calculated (in my case just as 1 - time_down /

time_total). And these shouldn't include random stuff like 503 "downtime"

because the poller got a bad luck on one poll or 500 because apache got a HUP

while processing a request (in theory, these shouldn't happen of course,

but...).

And on top of that, response delay have to be measured as well. And that is

data which should be averaged and selected on some non-trivial basis. Sites'

list changes on a daily basis, polled data should be closed to real-time, so

it's 5-10 minutes poll interval at most.

Clearly, it's time-series data yet rrd is unsuitable for the task - neither

it's well-suited for complex data analysis, nor it can handle dynamic

datasets. Creating a hundred rrds and maintaining the code for their

management on fs looks like a world of pain.

Plain-log approach looks highly unoptimal, plus it's a lot of processing and

logfile-management code.

Both approaches also needed some sort of (although trivial) network interface

to data as well.

SQL-based DB engines handle storage and some-criteria-based selection and have

an efficient network interface outta the box, so it wasn't much of a hard

choice. And the only decent DBs I know out there are PostgreSQL and Oracle,

sqlite or MySQL are rather limited solutions and I've never used

interbase/firebird.

4k*5min is a lot of values though, tens-hundreds of millions of them

actually, and RDBMS become quite sluggish on such amounts of data, so some

aggregation or processing was in order and that's what this entry's about.

First, I've needed to keep one list of domains to check.

These came from the individual hosts where they were, well, hosted, so poller

can periodically get this list and check all the domains there.

CREATE TABLE state_hoard.list_http_availability (

id serial NOT NULL,

target character varying(128) NOT NULL,

"domain" character varying(128) NOT NULL,

check_type state_hoard.check_type NOT NULL,

"timestamp" numeric,

source character varying(16),

CONSTRAINT state_ha__id PRIMARY KEY (id),

CONSTRAINT state_ha__domain_ip_check_type

UNIQUE (target, domain, check_type) );

It should probably be extended with other checks later on so there's check_type

field with enum like this:

CREATE TYPE state_hoard.check_type AS ENUM ('http', 'https');

Target (IP) and domain (hostname) are separate fields here, since dns data is

not to be trusted but the http request should have host-field to be processed

correctly.

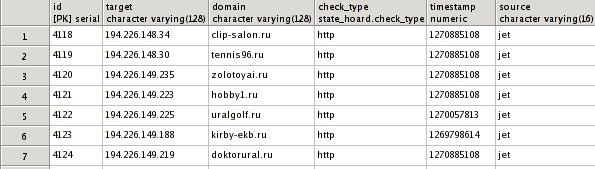

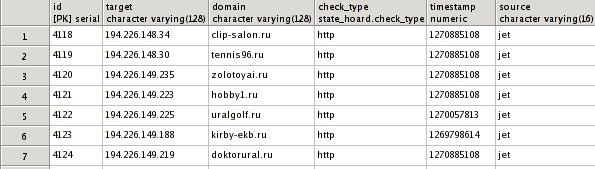

Resulting table:

List is updated via third-party scripts which shouldn't care for internal db

structure even a little bit, so they only need to do insert/delete ops when

the list changes, so the db can take care of the rest, thanks to triggers.

Replace via delete/insert approach isn't an option here, since other tables

are linked vs this one, so update is the way.

CREATE OR REPLACE FUNCTION state_hoard.list_ha_replace()

RETURNS trigger AS

$BODY$

DECLARE

updated integer;

BEGIN

-- Implicit timestamping

NEW.timestamp := COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) );

UPDATE state_hoard.list_http_availability

SET timestamp = NEW.timestamp, source = NEW.source

WHERE domain = NEW.domain

AND target = NEW.target

AND check_type = NEW.check_type;

-- Check if the row still need to be inserted

GET DIAGNOSTICS updated = ROW_COUNT;

IF updated = 0

THEN RETURN NEW;

ELSE RETURN NULL;

END IF;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER list_ha__replace

BEFORE INSERT

ON state_hoard.list_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.list_ha_replace();

From there I had two ideas on how to use this data and store immediate

results, from the poller perspective:

- To replicate the whole table into some sort of "check-list", filling

fields there as the data arrives.

- To create persistent linked tables with polled data, which just

replaced (on unique-domain basis) with each new poll.

While former looks appealing since it allows to keep state in DB, not

the poller, latter provides persistent availability/delay tables and

that's one of the things I need.

CREATE TABLE state_hoard.state_http_availability (

check_id integer NOT NULL,

host character varying(32) NOT NULL,

code integer,

"timestamp" numeric,

CONSTRAINT state_ha__check_host PRIMARY KEY (check_id, host),

CONSTRAINT state_http_availability_check_id_fkey FOREIGN KEY (check_id)

REFERENCES state_hoard.list_http_availability (id) MATCH SIMPLE

ON UPDATE RESTRICT ON DELETE CASCADE );

CREATE TABLE state_hoard.state_http_delay (

check_id integer NOT NULL,

host character varying(32) NOT NULL,

delay numeric,

"timestamp" numeric,

CONSTRAINT state_http_delay_check_id_fkey FOREIGN KEY (check_id)

REFERENCES state_hoard.list_http_availability (id) MATCH SIMPLE

ON UPDATE NO ACTION ON DELETE CASCADE );

These can be thought of as an extensions of the main (list_http_availability)

table, containing "current state" columns for each polled domain, and when

domain is no longer polled, it gets dropped from these tables as well.

Poller just gets the list and inserts the values into these, w/o even having

permissions to alter the list itself.

Since these tables are for latest data, duplicate inserts should be

handled and timestamps can be generated implicitly.

For current-state table it's just a replace on each insert. PostgreSQL doesn't

have convenient "replace" statement like MySQL but the triggers can easily

compensate for that:

CREATE OR REPLACE FUNCTION state_hoard.state_ha_replace()

RETURNS trigger AS

$BODY$

BEGIN

-- Drop old record, if any

DELETE FROM state_hoard.state_http_availability WHERE check_id = NEW.check_id AND host = NEW.host;

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := COALESCE(NEW.timestamp, EXTRACT('epoch' FROM CURRENT_TIMESTAMP));

RETURN NEW;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_ha__replace

BEFORE INSERT

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_replace();

Individual http delays can have quite high entropy, since the http-response

processing in poller can't be truly asynchronous with such a number of hosts

and in fact it's a single-thread eventloop (twisted) anyway, so values here

are kept for some time, so they can be averaged later with a simple group-by.

Timestamp-based cleanup is built into the poller itself, so the trigger here

only fills implicit timestamps.

CREATE OR REPLACE FUNCTION state_hoard.state_hd_insert()

RETURNS trigger AS

$BODY$

BEGIN

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) );

RETURN NEW;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_hd__insert

BEFORE INSERT

ON state_hoard.state_http_delay

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_hd_insert();

After that comes the logging part, and the logged part is http response codes.

These shouldn't change frequently, so it's only logical to write changes-only

log.

To grind out random errors I write a longer-than-poll-time (10 minutes,

actually) averages to the intermediate table, while keeping track of such

errors anyway, but in separate log table.

CREATE TABLE state_hoard.log_http_availability (

"domain" character varying(128) NOT NULL,

code integer,

"timestamp" numeric NOT NULL,

CONSTRAINT log_ha__domain_timestamp PRIMARY KEY (domain, "timestamp") );

Interval for these averages can be acquired via simple rounding, and it's

convenient to have single function for that, plus the step in retriveable

form. "Immutable" type here means that the results will be cached for each set

of parameters.

CREATE OR REPLACE FUNCTION state_hoard.log_ha_step()

RETURNS integer AS

'SELECT 600;'

LANGUAGE 'sql' IMMUTABLE

COST 100;

CREATE OR REPLACE FUNCTION state_hoard.log_ha_discrete_time(numeric)

RETURNS numeric AS

'SELECT (div($1, state_hoard.log_ha_step()::numeric) + 1) * state_hoard.log_ha_step();'

LANGUAGE 'sql' IMMUTABLE

COST 100;

"Averaging" for the logs is actually just dropping errors if there's at least

one success in the interval.

It's only logical to do this right on insert into the log-table:

CREATE OR REPLACE FUNCTION state_hoard.log_ha_coerce()

RETURNS trigger AS

$BODY$

DECLARE

updated integer;

BEGIN

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := state_hoard.log_ha_discrete_time(

COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) )::numeric );

IF NEW.code = 200

THEN

-- Successful probe overrides (probably random) errors

UPDATE state_hoard.log_http_availability

SET code = NEW.code

WHERE domain = NEW.domain AND timestamp = NEW.timestamp;

GET DIAGNOSTICS updated = ROW_COUNT;

ELSE

-- Errors don't override anything

SELECT COUNT(*)

FROM state_hoard.log_http_availability

WHERE domain = NEW.domain AND timestamp = NEW.timestamp

INTO updated;

END IF;

-- True for first value in a new interval

IF updated = 0

THEN RETURN NEW;

ELSE RETURN NULL;

END IF;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER log_ha__coerce

BEFORE INSERT

ON state_hoard.log_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.log_ha_coerce();

The only thing left at this point is to actually tie this intermediate log-table

with the state-table, and after-insert/update hooks are good place for that.

CREATE OR REPLACE FUNCTION state_hoard.state_ha_log()

RETURNS trigger AS

$BODY$

DECLARE

domain_var character varying (128);

code_var integer;

-- Timestamp of the log entry, explicit to get the older one, checking for random errors

ts numeric := state_hoard.log_ha_discrete_time(EXTRACT('epoch' FROM CURRENT_TIMESTAMP));

BEGIN

SELECT domain FROM state_hoard.list_http_availability

WHERE id = NEW.check_id INTO domain_var;

SELECT code FROM state_hoard.log_http_availability

WHERE domain = domain_var AND timestamp = ts

INTO code_var;

-- This actually replaces older entry, see log_ha_coerce hook

INSERT INTO state_hoard.log_http_availability (domain, code, timestamp)

VALUES (domain_var, NEW.code, ts);

-- Random errors' trapping

IF code_var != NEW.code AND (NEW.code > 400 OR code_var > 400) THEN

code_var = CASE WHEN NEW.code > 400 THEN NEW.code ELSE code_var END;

INSERT INTO state_hoard.log_http_random_errors (domain, code)

VALUES (domain_var, code_var);

END IF;

RETURN NULL;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_ha__log_insert

AFTER INSERT

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_log();

CREATE TRIGGER state_ha__log_update

AFTER UPDATE

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_log();

From here, the log will get populated already, but in a few days it will get

millions of entries and counting, so it have to be aggregated and the most

efficient method for this sort of data seem to be in keeping just change-points

for return codes since they're quite rare.

"Random errors" are trapped here as well and stored to the separate table. They

aren't frequent, so no other action is taken there.

The log-diff table is just that - code changes. "code_prev" field is here for

convenience, since I needed to get if there were any changes for a given period,

so the rows there would give complete picture.

CREATE TABLE state_hoard.log_http_availability_diff (

"domain" character varying(128) NOT NULL,

code integer,

code_prev integer,

"timestamp" numeric NOT NULL,

CONSTRAINT log_had__domain_timestamp PRIMARY KEY (domain, "timestamp") );

Updates to this table happen on cron-basis and generated right inside the db,

thanks to plpgsql for that.

LOCK TABLE log_http_availability_diff IN EXCLUSIVE MODE;

LOCK TABLE log_http_availability IN EXCLUSIVE MODE;

INSERT INTO log_http_availability_diff

SELECT * FROM log_ha_diff_for_period(NULL, NULL)

AS data(domain character varying, code int, code_prev int, timestamp numeric);

TRUNCATE TABLE log_http_availability;

And the diff-generation code:

CREATE OR REPLACE FUNCTION state_hoard.log_ha_diff_for_period(ts_min numeric, ts_max numeric)

RETURNS SETOF record AS

$BODY$

DECLARE

rec state_hoard.log_http_availability%rowtype;

rec_next state_hoard.log_http_availability%rowtype;

rec_diff state_hoard.log_http_availability_diff%rowtype;

BEGIN

FOR rec_next IN

EXECUTE 'SELECT domain, code, timestamp

FROM state_hoard.log_http_availability'

|| CASE WHEN NOT (ts_min IS NULL AND ts_max IS NULL) THEN

' WHERE timestamp BETWEEN '||ts_min||' AND '||ts_max ELSE '' END ||

' ORDER BY domain, timestamp'

LOOP

IF NOT rec_diff.domain IS NULL AND rec_diff.domain != rec_next.domain THEN

-- Last record for this domain - skip unknown vals and code change check

rec_diff.domain = NULL;

END IF;

IF NOT rec_diff.domain IS NULL

THEN

-- Time-skip (unknown values) addition

rec_diff.timestamp = state_hoard.log_ha_discrete_time(rec.timestamp + 1);

IF rec_diff.timestamp < rec_next.timestamp THEN

-- Map unknown interval

rec_diff.code = NULL;

rec_diff.code_prev = rec.code;

RETURN NEXT rec_diff;

END IF;

-- rec.code here should be affected by unknown-vals as well

IF rec_diff.code != rec_next.code THEN

rec_diff.code_prev = rec_diff.code;

rec_diff.code = rec_next.code;

rec_diff.timestamp = rec_next.timestamp;

RETURN NEXT rec_diff;

END IF;

ELSE

-- First record for new domain or whole loop (not returned)

-- RETURN NEXT rec_next;

rec_diff.domain = rec_next.domain;

END IF;

rec.code = rec_next.code;

rec.timestamp = rec_next.timestamp;

END LOOP;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100

ROWS 1000;

So that's the logging into the database.

Not as nice and simple as rrd but much more flexible in the end.

And since PostgreSQL already

allows to hook up PL/Python, there's no

problem adding a few triggers to the log-diff table to send out notifications

in case there's a problem.

Whether it's wise to put all the logic into the database like that is a good

question though, I'd probably opt for some sort of interface on the database

-> outside-world path, so db queries won't have full-fledged scripting

language at their disposal and db event handlers would be stored on the file

system, where they belong, w/o tying db to the host that way.

Lately I've migrated back to

pidgin from

gajim through

jabber.el. The thing which made it necessary

was XFire support (via

gfire plugin), which I've

needed to communicate w/ my

spring clanmates.

I'd have preferred jabber-xfire transport instead, but most projects there

look abandoned and I don't really need extensive jabber-features support, so

pidgin is fine with me.

The only thing that's not working there is auto-away support, so it can change

my status due to inactivity.

Actually it changes the status to "away" but for no reason at all, regardless

of idle time, and refuses to set it back to active even when I click it's

window and options.

Well, good thing is that pidgin's mature enough to have dbus interface, so as

the most problems in life, this one can be solved with python ;)

First thing to check is

pidgin dbus interface and figure out how the states

work there: you have to create a "state" with the appropriate message or find

it among stored ones then set it as active, storing id of the previous one.

Next thing is to determine a way to get idle time.

Luckily, X keeps track of activity and I've already used

xprintidle with jabber.el,

so it's not a problem.

Not quite a native py solution, but it has workaround for

one bug and is much more

liteweight than code using py-xlib.

From there it's just an endless sleep/check loop with occasional dbus calls.

One gotcha there is that pidgin can die or be closed, so the loop has to deal

with this as well.

All there is...

Get idle time:

def get_idle():

proc = Popen(['xprintidle'], stdout=PIPE)

idle = int(proc.stdout.read().strip()) // 1000

proc.wait()

return idle

Simple dbus client code:

pidgin = dbus.SessionBus().get_object(

'im.pidgin.purple.PurpleService', '/im/pidgin/purple/PurpleObject' )

iface = dbus.Interface(pidgin, 'im.pidgin.purple.PurpleInterface')

Get initial (available) status:

st = iface.PurpleSavedstatusGetCurrent()

st_type = iface.PurpleSavedstatusGetType(st)

st_msg = iface.PurpleSavedstatusGetMessage(st)

Create away/na statuses:

st_away = iface.PurpleSavedstatusNew('', status.away)

iface.PurpleSavedstatusSetMessage(

st_away, 'AFK (>{0}m)'.format(optz.away // 60) )

st_na = iface.PurpleSavedstatusNew('', status.xaway)

iface.PurpleSavedstatusSetMessage(

st_na, 'AFK for quite a while (>{0}m)'.format(optz.na // 60) )

And the main loop:

while True:

idle = get_idle()

if idle > optz.away:

if idle > optz.na:

iface.PurpleSavedstatusActivate(st_na)

log.debug('Switched status to N/A (idle: {0})'.format(idle//60))

else:

iface.PurpleSavedstatusActivate(st_away)

log.debug('Switched status to away (idle: {0})'.format(idle//60))

sleep(optz.poll)

else:

if iface.PurpleSavedstatusGetType(

iface.PurpleSavedstatusGetCurrent() ) in (status.away, status.xaway):

iface.PurpleSavedstatusActivate(st)

log.debug('Restored original status (idle: {0})'.format(idle//60))

sleep(optz.away)

Bonus of such approach is that any other checks can be easily added -

fullscreen-video-status, for example, or emacs-dont-disturb status. I bet there

are other plugins for this purposes, but I'd prefer few lines of clean py to

some buggy .so anytime ;)

Here's the full code.

Docks.

You always have the touch-sensitive, solid, reliable dock right under your

hands - the keyboard, so what's the point of docks?

- Mouse-user-friendly

- Look cool (cairo-dock, kiba-dock, macosx)

- Provide control over the launched instances of each app

Two first points I don't care much about, but the last one sounds really

nice - instead of switching to app workspace, you can just push the same

hotkey and it'll even be raised for you in case WS is messed up with stacked

windows.

Kinda excessive to install a full-fledged dock for just that, besides it'd eat

screen space and resources for no good reason, so I made my own "dock".

But it's not really a "dock", since it's actually invisible and basically is

just a wrapper for launched commands to check if last process spawned by

identical command exists and just bring it to foreground in this case.

For reliable monitoring of spawned processes there has to be a daemon and

wrappers should relay either command (and env) or spawned process info to it,

which inplies some sort of IPC.

Choosing dbus as that IPC handles the details like watcher-daemon starting and

serialization of data and makes the wrapper itself quite liteweight:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

dbus_iface = 'net.fraggod.SID'

dbus_path = '/net/fraggod/SID'

import os, sys, dbus

sid = dbus.SessionBus().get_object(dbus_iface, dbus_path)

if sys.argv[1][0] != '/':

for path in os.getenv('PATH').split(os.pathsep):

path = os.path.join(path, sys.argv[1])

if os.path.exists(path):

sys.argv[1] = path

break

sid.instance_request(sys.argv[1:], dict(os.environ))

And that's it, most of these just resolves binary location via PATH so it can be

used as unique-index in daemon process right off the pipe.

Daemonized part of the scheme just takes the instance off it's stack, fires up a

new one or returs back some error message:

@dbus.service.method( dbus_iface,

in_signature='asa{ss}', out_signature='s' )

def instance_request(self, argz, env):

try:

data = self.pop_instance(argz, env)

return data if data else ''

except Exception, err: return 'ERROR: {0}'.format(err)

def pop_instance(self, argz, env):

ps = argz[0]

log.info('InstanceRequest: {0}'.format(argz))

if ps[0] != '/': raise TypeError, 'App path must be absolute'

ps = os.path.realpath(ps)

log.debug('Pulling out "{0}"'.format(ps))

try:

app = self.apps[ps]

log.debug('App "{0}" exists, pulling to fg'.format(ps))

app.show()

except KeyError:

log.debug('No app "{0}", starting'.format(ps))

self.apps[ps] = AppInstance(argz, env, self.log)

return 'Started'

Dead apps are collected on SIGCHLD and some extra precautions should be taken

for the case when the signal arrives during the collector code execution, like

when several apps die simultaneously:

def reap_apps(self, sig, frm):

log.debug('Got app exit signal')

try:

locked = self.lock.acquire(False)

self.lock_req = True # indicates that apps have to be re-checked

if not locked:

log.debug('Reap is in progress, re-check scheduled')

return

while self.lock_req:

self.lock_req = False

log.debug('Reaping dead apps')

for k,app in self.apps.iteritems():

if app.dead:

del self.apps[k]

log.debug('App "{0}" was released'.format(k))

finally:

if locked: self.lock.release()

global loop_interrupt

loop_interrupt = True

log.debug('Reaper done')

That way, collector should run until signals stop arriving and shouldn't miss

any app under any circumstances.

AppInstance objects incapsulate all operations concerning each app from starting

it to focus and waitpid:

class AppInstance(object):

_id = None # for debugging purposes only

_ps = _win = None

def __init__(self, argz, env, logfile=False):

log.debug('Creating instance with argz: {0}'.format(argz))

self._id = argz[0]

self._ps = exe.proc( *argz,

preexec_fn=os.setsid, env=env,

stdout=logfile, stderr=exe.STDOUT, stdin=False )

def show(self):

if self.windows:

for win in self.windows: win.focus()

else: log.debug('No window for app "{0}"'.format(self._id))

@property

def windows(self):

if self._win is None:

self._win = wm.Window.by_pid(self._ps.pid)

if self._win: self._win = list(self._win) # all windows for pid

else: self._win = False

return self._win

@property

def dead(self):

return self._ps.wait(0) is not None

WM ops here are from fgc package.

From here all that's left to code is to create dbus-handler instance and get

the loop running.

I called the daemon itself as "sid" and the wrapper as "sic".

To make dbus aware of the service, short note should be put to

"/usr/share/dbus-1/services/net.fraggod.SID.service" with path to daemon binary:

[D-BUS Service]

Name=net.fraggod.SID

Exec=/usr/libexec/sid

...plus the hotkeys rebound from "myapp" to just "sic myapp" and the key-dock is

ready.

Works especially well with WMs that can keep app windows' props between

launches, so just pressing the relevant keys should launch every app where it

belongs with correct window parameters and you won't have to do any WM-related

work at all.

Code: sic.py sid.py

What can be more user-friendly than that? Gotta think about it...

Since I've put some two-day effort into creation of net-snmp snmpd extension and had some free time to

report bug in source of this inspiration, thought I might as well save

someone trouble of re-inventing the wheel and publish it somewhere, since snmpd

extension definitely looks like a black area from python perspective.

I've used sf.net as a project admin before, publishing some

crappy php code for hyscar project

with pretty much the same reasons in mind, and I didn't like the experience

much - cvs for code storage and weird interface are among the reasons I can

remember, but I'll gladly take all this criticism back - current interface has

by far exceed all my expectations (well, prehaps they were too low in the first

place?).

Putting up a full-fledged project page took me (a complete n00b at that) about

half an hour, everything being simple and obvious as it is, native-to-me git

vcs, and even trac

among the (numerous) features. Damn pleasant xp, making you wanna upload

something else just for the sake of it ;)

Just an alpha right now, but I'll polish and deploy it in production in a day

or two, so no worries.