Availability stats (and history log) with relational database (postgresql)

CREATE TABLE state_hoard.list_http_availability (

id serial NOT NULL,

target character varying(128) NOT NULL,

"domain" character varying(128) NOT NULL,

check_type state_hoard.check_type NOT NULL,

"timestamp" numeric,

source character varying(16),

CONSTRAINT state_ha__id PRIMARY KEY (id),

CONSTRAINT state_ha__domain_ip_check_type

UNIQUE (target, domain, check_type) );

It should probably be extended with other checks later on so there's check_type field with enum like this:

CREATE TYPE state_hoard.check_type AS ENUM ('http', 'https');

Target (IP) and domain (hostname) are separate fields here, since dns data is not to be trusted but the http request should have host-field to be processed correctly.

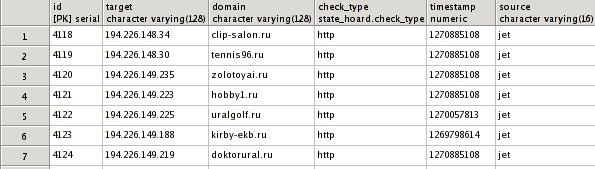

Resulting table:

CREATE OR REPLACE FUNCTION state_hoard.list_ha_replace()

RETURNS trigger AS

$BODY$

DECLARE

updated integer;

BEGIN

-- Implicit timestamping

NEW.timestamp := COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) );

UPDATE state_hoard.list_http_availability

SET timestamp = NEW.timestamp, source = NEW.source

WHERE domain = NEW.domain

AND target = NEW.target

AND check_type = NEW.check_type;

-- Check if the row still need to be inserted

GET DIAGNOSTICS updated = ROW_COUNT;

IF updated = 0

THEN RETURN NEW;

ELSE RETURN NULL;

END IF;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER list_ha__replace

BEFORE INSERT

ON state_hoard.list_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.list_ha_replace();

From there I had two ideas on how to use this data and store immediate results, from the poller perspective:

- To replicate the whole table into some sort of "check-list", filling fields there as the data arrives.

- To create persistent linked tables with polled data, which just replaced (on unique-domain basis) with each new poll.

While former looks appealing since it allows to keep state in DB, not the poller, latter provides persistent availability/delay tables and that's one of the things I need.

CREATE TABLE state_hoard.state_http_availability (

check_id integer NOT NULL,

host character varying(32) NOT NULL,

code integer,

"timestamp" numeric,

CONSTRAINT state_ha__check_host PRIMARY KEY (check_id, host),

CONSTRAINT state_http_availability_check_id_fkey FOREIGN KEY (check_id)

REFERENCES state_hoard.list_http_availability (id) MATCH SIMPLE

ON UPDATE RESTRICT ON DELETE CASCADE );

CREATE TABLE state_hoard.state_http_delay (

check_id integer NOT NULL,

host character varying(32) NOT NULL,

delay numeric,

"timestamp" numeric,

CONSTRAINT state_http_delay_check_id_fkey FOREIGN KEY (check_id)

REFERENCES state_hoard.list_http_availability (id) MATCH SIMPLE

ON UPDATE NO ACTION ON DELETE CASCADE );

CREATE OR REPLACE FUNCTION state_hoard.state_ha_replace()

RETURNS trigger AS

$BODY$

BEGIN

-- Drop old record, if any

DELETE FROM state_hoard.state_http_availability WHERE check_id = NEW.check_id AND host = NEW.host;

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := COALESCE(NEW.timestamp, EXTRACT('epoch' FROM CURRENT_TIMESTAMP));

RETURN NEW;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_ha__replace

BEFORE INSERT

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_replace();

CREATE OR REPLACE FUNCTION state_hoard.state_hd_insert()

RETURNS trigger AS

$BODY$

BEGIN

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) );

RETURN NEW;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_hd__insert

BEFORE INSERT

ON state_hoard.state_http_delay

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_hd_insert();

After that comes the logging part, and the logged part is http response codes.

CREATE TABLE state_hoard.log_http_availability (

"domain" character varying(128) NOT NULL,

code integer,

"timestamp" numeric NOT NULL,

CONSTRAINT log_ha__domain_timestamp PRIMARY KEY (domain, "timestamp") );

Interval for these averages can be acquired via simple rounding, and it's convenient to have single function for that, plus the step in retriveable form. "Immutable" type here means that the results will be cached for each set of parameters.

CREATE OR REPLACE FUNCTION state_hoard.log_ha_step()

RETURNS integer AS

'SELECT 600;'

LANGUAGE 'sql' IMMUTABLE

COST 100;

CREATE OR REPLACE FUNCTION state_hoard.log_ha_discrete_time(numeric)

RETURNS numeric AS

'SELECT (div($1, state_hoard.log_ha_step()::numeric) + 1) * state_hoard.log_ha_step();'

LANGUAGE 'sql' IMMUTABLE

COST 100;

CREATE OR REPLACE FUNCTION state_hoard.log_ha_coerce()

RETURNS trigger AS

$BODY$

DECLARE

updated integer;

BEGIN

-- Implicit timestamp setting, if it's omitted

NEW.timestamp := state_hoard.log_ha_discrete_time(

COALESCE( NEW.timestamp,

EXTRACT('epoch' FROM CURRENT_TIMESTAMP) )::numeric );

IF NEW.code = 200

THEN

-- Successful probe overrides (probably random) errors

UPDATE state_hoard.log_http_availability

SET code = NEW.code

WHERE domain = NEW.domain AND timestamp = NEW.timestamp;

GET DIAGNOSTICS updated = ROW_COUNT;

ELSE

-- Errors don't override anything

SELECT COUNT(*)

FROM state_hoard.log_http_availability

WHERE domain = NEW.domain AND timestamp = NEW.timestamp

INTO updated;

END IF;

-- True for first value in a new interval

IF updated = 0

THEN RETURN NEW;

ELSE RETURN NULL;

END IF;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER log_ha__coerce

BEFORE INSERT

ON state_hoard.log_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.log_ha_coerce();

The only thing left at this point is to actually tie this intermediate log-table with the state-table, and after-insert/update hooks are good place for that.

CREATE OR REPLACE FUNCTION state_hoard.state_ha_log()

RETURNS trigger AS

$BODY$

DECLARE

domain_var character varying (128);

code_var integer;

-- Timestamp of the log entry, explicit to get the older one, checking for random errors

ts numeric := state_hoard.log_ha_discrete_time(EXTRACT('epoch' FROM CURRENT_TIMESTAMP));

BEGIN

SELECT domain FROM state_hoard.list_http_availability

WHERE id = NEW.check_id INTO domain_var;

SELECT code FROM state_hoard.log_http_availability

WHERE domain = domain_var AND timestamp = ts

INTO code_var;

-- This actually replaces older entry, see log_ha_coerce hook

INSERT INTO state_hoard.log_http_availability (domain, code, timestamp)

VALUES (domain_var, NEW.code, ts);

-- Random errors' trapping

IF code_var != NEW.code AND (NEW.code > 400 OR code_var > 400) THEN

code_var = CASE WHEN NEW.code > 400 THEN NEW.code ELSE code_var END;

INSERT INTO state_hoard.log_http_random_errors (domain, code)

VALUES (domain_var, code_var);

END IF;

RETURN NULL;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100;

CREATE TRIGGER state_ha__log_insert

AFTER INSERT

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_log();

CREATE TRIGGER state_ha__log_update

AFTER UPDATE

ON state_hoard.state_http_availability

FOR EACH ROW

EXECUTE PROCEDURE state_hoard.state_ha_log();

From here, the log will get populated already, but in a few days it will get millions of entries and counting, so it have to be aggregated and the most efficient method for this sort of data seem to be in keeping just change-points for return codes since they're quite rare.

"Random errors" are trapped here as well and stored to the separate table. They aren't frequent, so no other action is taken there.

The log-diff table is just that - code changes. "code_prev" field is here for convenience, since I needed to get if there were any changes for a given period, so the rows there would give complete picture.

CREATE TABLE state_hoard.log_http_availability_diff (

"domain" character varying(128) NOT NULL,

code integer,

code_prev integer,

"timestamp" numeric NOT NULL,

CONSTRAINT log_had__domain_timestamp PRIMARY KEY (domain, "timestamp") );

Updates to this table happen on cron-basis and generated right inside the db, thanks to plpgsql for that.

LOCK TABLE log_http_availability_diff IN EXCLUSIVE MODE;

LOCK TABLE log_http_availability IN EXCLUSIVE MODE;

INSERT INTO log_http_availability_diff

SELECT * FROM log_ha_diff_for_period(NULL, NULL)

AS data(domain character varying, code int, code_prev int, timestamp numeric);

TRUNCATE TABLE log_http_availability;

And the diff-generation code:

CREATE OR REPLACE FUNCTION state_hoard.log_ha_diff_for_period(ts_min numeric, ts_max numeric)

RETURNS SETOF record AS

$BODY$

DECLARE

rec state_hoard.log_http_availability%rowtype;

rec_next state_hoard.log_http_availability%rowtype;

rec_diff state_hoard.log_http_availability_diff%rowtype;

BEGIN

FOR rec_next IN

EXECUTE 'SELECT domain, code, timestamp

FROM state_hoard.log_http_availability'

|| CASE WHEN NOT (ts_min IS NULL AND ts_max IS NULL) THEN

' WHERE timestamp BETWEEN '||ts_min||' AND '||ts_max ELSE '' END ||

' ORDER BY domain, timestamp'

LOOP

IF NOT rec_diff.domain IS NULL AND rec_diff.domain != rec_next.domain THEN

-- Last record for this domain - skip unknown vals and code change check

rec_diff.domain = NULL;

END IF;

IF NOT rec_diff.domain IS NULL

THEN

-- Time-skip (unknown values) addition

rec_diff.timestamp = state_hoard.log_ha_discrete_time(rec.timestamp + 1);

IF rec_diff.timestamp < rec_next.timestamp THEN

-- Map unknown interval

rec_diff.code = NULL;

rec_diff.code_prev = rec.code;

RETURN NEXT rec_diff;

END IF;

-- rec.code here should be affected by unknown-vals as well

IF rec_diff.code != rec_next.code THEN

rec_diff.code_prev = rec_diff.code;

rec_diff.code = rec_next.code;

rec_diff.timestamp = rec_next.timestamp;

RETURN NEXT rec_diff;

END IF;

ELSE

-- First record for new domain or whole loop (not returned)

-- RETURN NEXT rec_next;

rec_diff.domain = rec_next.domain;

END IF;

rec.code = rec_next.code;

rec.timestamp = rec_next.timestamp;

END LOOP;

END;

$BODY$

LANGUAGE 'plpgsql' VOLATILE

COST 100

ROWS 1000;