Feb 04, 2013

Was hacking something irrelevant together again and, as often happens with such things, realized that I had implemented something like that before.

It can be something simple: a locking function in python, an awk pipe to get some monitoring data, a chunk of argparse-based code to process multiple subcommands, a TLS wrapper for requests, a dbapi wrapper, a multi-module parser/generator for human-readable dates, a logging buffer, etc...

Point is - some short snippet of code is needed as a base for implementing something new or maybe even to re-use as-is, yet it's not noteworthy enough on its own to split into a module or generally do anything specific about it.

Happens a lot to me, as over the years a lot of such ad-hoc yet reusable code gets written, and I can usually remember enough implementation details (e.g. which modules were used there, how the methods/classes were called and such), but going "grep" over the source dir takes a shitload of time.

Some things make it faster - ack or pss tools can scan only relevant things (like e.g. "grep ... **/*.py" will do in zsh), but these also run for minutes, as even a simple "find" does - there are several django source trees in the appengine sdk, php projects with 4k+ files inside, maybe even a whole linux kernel source tree or two...

Traversing all these each time on a regular fs to find something that can be rewritten in a few minutes will never be an option for me, but luckily there are cool post-fs projects like tmsu, which allow one to transcend the single-hierarchy-index limitation of a traditional unix fs in a much more elegant and useful way than a gazillion of symlinks and dentries.

tmsu allows attaching any tags to any files, then querying these files back by a set of tags, which it does really fast using an sqlite db and clever indexes there.
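
To illustrate why a tag query like that is cheap, here's a minimal Python sketch of a file/tag index in sqlite. This is *not* tmsu's actual schema; the tables and function names are made up for illustration. The point is that the primary key on (tag_id, file_id) doubles as an index, so finding files by tag never touches the filesystem.

```python
import sqlite3

def make_index(conn):
    # Hypothetical schema, not tmsu's: files, tags and a link table.
    # The (tag_id, file_id) primary key doubles as the tag -> files index.
    conn.executescript("""
        CREATE TABLE file (id INTEGER PRIMARY KEY, path TEXT UNIQUE);
        CREATE TABLE tag (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
        CREATE TABLE file_tag (
            file_id INTEGER REFERENCES file(id),
            tag_id INTEGER REFERENCES tag(id),
            PRIMARY KEY (tag_id, file_id));
    """)

def _get_id(conn, table, column, value):
    # Insert the row if it's missing, then return its id.
    conn.execute(f"INSERT OR IGNORE INTO {table}({column}) VALUES (?)", (value,))
    return conn.execute(
        f"SELECT id FROM {table} WHERE {column} = ?", (value,)).fetchone()[0]

def tag_file(conn, path, *tags):
    file_id = _get_id(conn, "file", "path", path)
    for name in tags:
        tag_id = _get_id(conn, "tag", "name", name)
        conn.execute(
            "INSERT OR IGNORE INTO file_tag(file_id, tag_id) VALUES (?, ?)",
            (file_id, tag_id))

def files_with_tags(conn, *tags):
    # Files carrying *all* of the given tags.
    marks = ",".join("?" * len(tags))
    rows = conn.execute(f"""
        SELECT f.path FROM file f
        JOIN file_tag ft ON ft.file_id = f.id
        JOIN tag t ON t.id = ft.tag_id
        WHERE t.name IN ({marks})
        GROUP BY f.id HAVING COUNT(DISTINCT t.id) = ?
        ORDER BY f.path""", (*tags, len(tags)))
    return [path for (path,) in rows]

conn = sqlite3.connect(":memory:")
make_index(conn)
tag_file(conn, "src/a.py", "lang:py", "scm:git")
tag_file(conn, "src/b.go", "lang:go", "scm:git")
print(files_with_tags(conn, "lang:py"))  # ['src/a.py']
print(files_with_tags(conn, "scm:git"))  # ['src/a.py', 'src/b.go']
```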

So, just tagging all the "*.py" files with "lang:py" allows for:

```
% time tmsu files lang:py | grep myclass
tmsu files lang:py  0.08s user 0.01s system 98% cpu 0.094 total
grep --color=auto myclass  0.01s user 0.00s system 10% cpu 0.093 total
```

That's 0.1s instead of several minutes for all the python code in the

development area on this machine.

tmsu can actually do even cooler tricks than that with fuse-tagfs mounts, but that's all kinda wasted until all the files are tagged properly.

Which, of course, is a simple enough problem to solve.

So here's my first useful Go project - codetag.

I've added taggers for things that are immediately useful for me to tag files by - implementation language, code hosting (github, bitbucket, local project, as I sometimes remember that a snippet was in some public tool), scm type (git, hg, bzr, svn) - but adding a new one is just a matter of writing a "Tagger" function, which, given the path and config, returns a list of string tags, plus they're only used if explicitly enabled in config.
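
codetag itself is written in Go; purely to illustrate the "Tagger" idea (path and config in, list of string tags out, only enabled taggers run), here's a Python sketch. The function names, the extension map and the {"enabled": [...]} config shape are all made up for this example and are not codetag's actual API.

```python
import os
import tempfile

# Illustrative mappings, far from complete.
LANG_BY_EXT = {".py": "py", ".go": "go", ".php": "php", ".sh": "sh"}
SCM_DIRS = {".git": "git", ".hg": "hg", ".bzr": "bzr", ".svn": "svn"}

def tag_lang(path, config):
    # Implementation language guessed from the file extension.
    ext = os.path.splitext(path)[1].lower()
    return ["lang:" + LANG_BY_EXT[ext]] if ext in LANG_BY_EXT else []

def tag_scm(path, config):
    # Walk up from the file's directory until a VCS metadata dir turns up.
    d = os.path.dirname(os.path.abspath(path))
    while True:
        for name, scm in SCM_DIRS.items():
            if os.path.isdir(os.path.join(d, name)):
                return ["scm:" + scm]
        parent = os.path.dirname(d)
        if parent == d:  # reached the filesystem root
            return []
        d = parent

TAGGERS = {"lang": tag_lang, "scm": tag_scm}

def tags_for(path, config):
    # Only taggers explicitly enabled in config get to run.
    tags = []
    for name in config.get("enabled", []):
        tags.extend(TAGGERS[name](path, config))
    return tags

# Demo on a throwaway "repository" layout.
root = tempfile.mkdtemp()
os.makedirs(os.path.join(root, "proj", ".git"))
demo = os.path.join(root, "proj", "lib", "util.py")
print(tags_for(demo, {"enabled": ["lang", "scm"]}))  # ['lang:py', 'scm:git']
```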

Other features include proper python-like logging and rsync-like filtering (but using more powerful re2 regexps instead of simple glob patterns).
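
The filtering works along the lines of rsync's filter rules: rules are checked in order and the first match decides. A Python sketch of that logic, using the stdlib `re` module as a stand-in for re2 and a made-up "+ regex" / "- regex" rule syntax, might look like this:

```python
import re

def compile_rules(lines):
    """Parse rules like "+ <regex>" (include) or "- <regex>" (exclude)."""
    rules = []
    for line in lines:
        if line[:2] not in ("+ ", "- "):
            raise ValueError(f"bad filter rule: {line!r}")
        rules.append((line[0] == "+", re.compile(line[2:])))
    return rules

def is_included(path, rules, default=True):
    # First matching rule wins; unmatched paths fall back to the default.
    for include, rx in rules:
        if rx.search(path):
            return include
    return default

# Skip VCS metadata, take python files, drop everything else.
rules = compile_rules([r"- /\.(git|hg)/", r"+ \.py$", r"- ."])
for p in ["/src/x/.git/hooks/a.py", "/src/x/a.py", "/src/x/README"]:
    print(p, is_included(p, rules))  # False, True, False
```

Ordering matters here: moving the "+ \.py$" rule first would let python files inside ".git" through.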

Being a proper compiled language, Go allows building the thing into a single static binary, which is quite neat, as I realized that I now have a tool to tag all the things everywhere - media files like music and movies on servers' remote filesystems, hundreds of configuration files by the app they belong to (think "tmsu files daemon:apache" to find/grep all the horrible ".htaccess" things and their "*.conf" includes), distfiles by the os package name, etc... can be useful.

So, to paraphrase well-known meme, Tag All The Things! ;)

github link

Jan 28, 2013

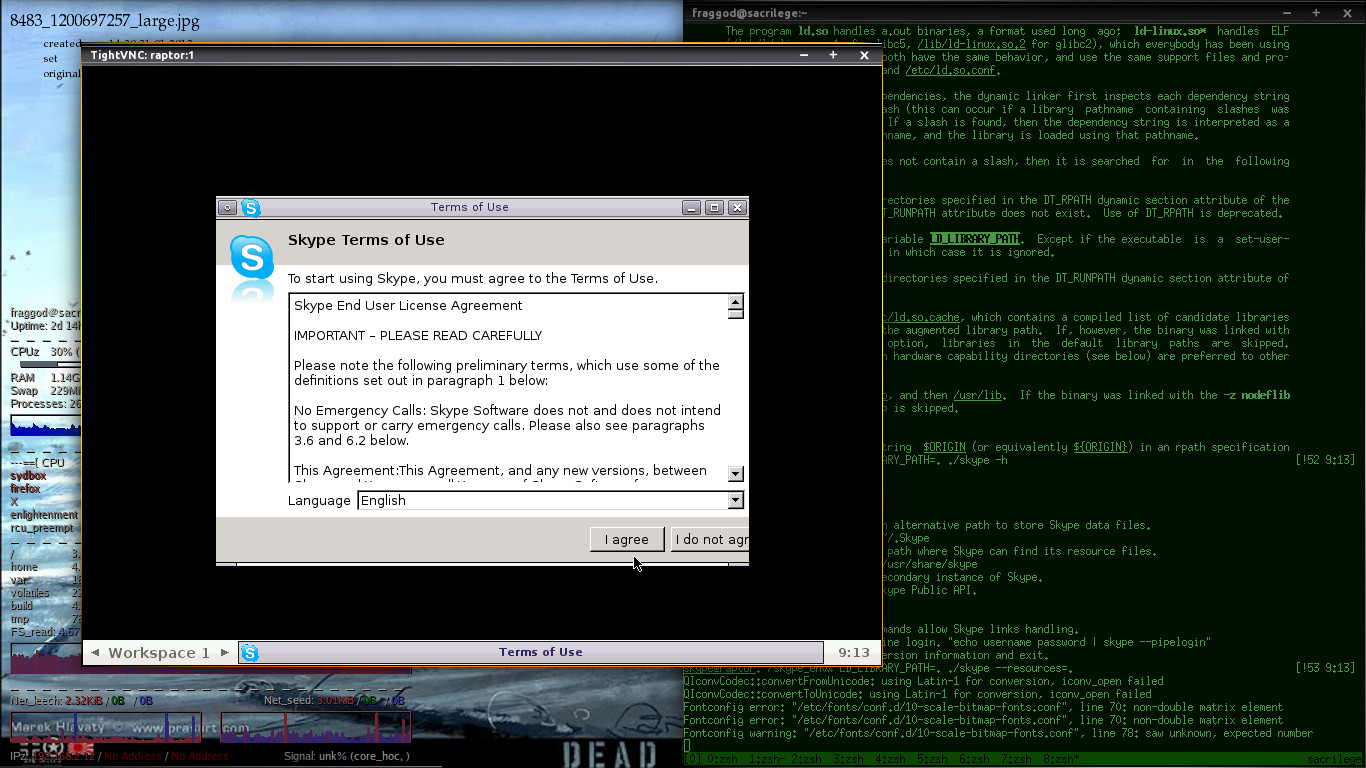

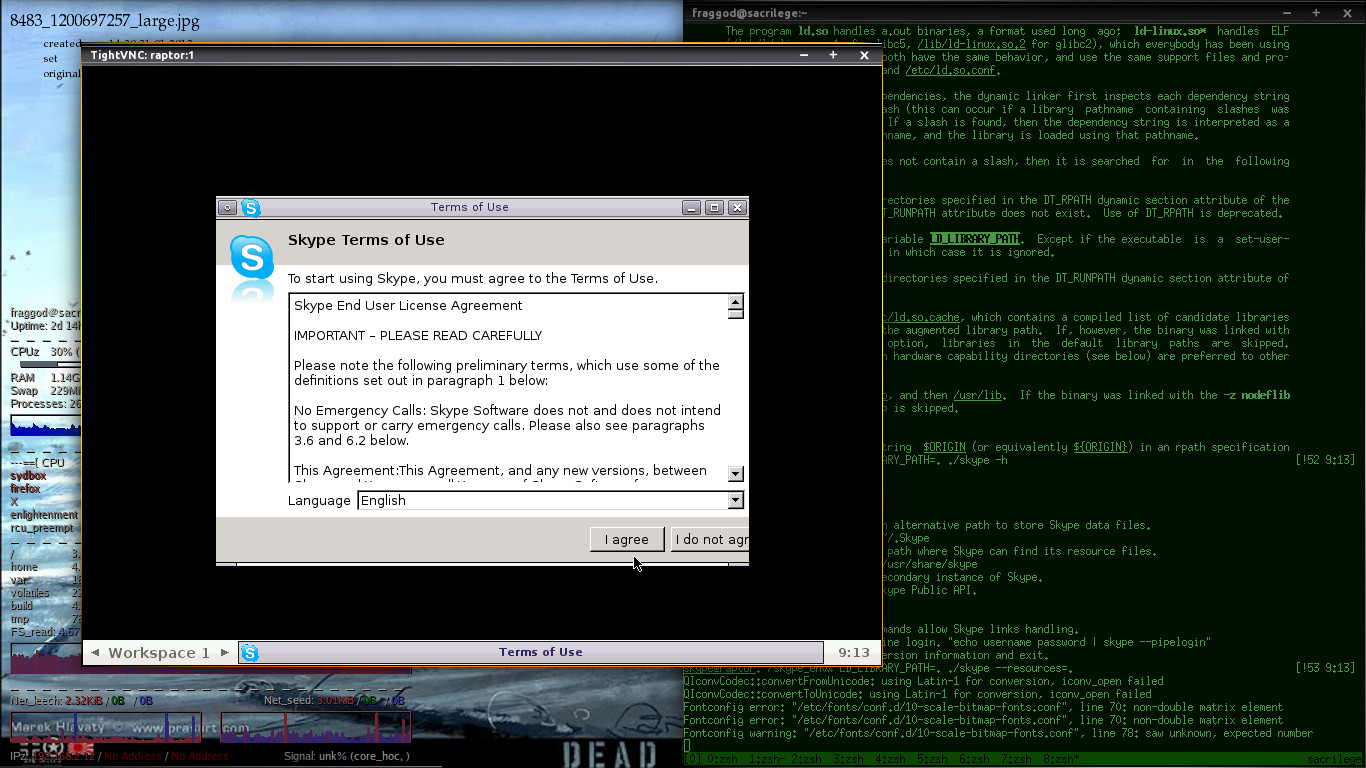

As per the previous entry, with the mock-desktop setup of Xvfb, fluxbox, x11vnc and skype in place, the only things left are to use skype interfaces (e.g. dbus) to hook it up with the existing IRC setup and maybe to insulate the skype process from the rest of the system.

The last bit is even easier than usual, since all the 32-bit libs skype needs are collected in one path, so there's no need to allow it to scan arbitrary system paths.

Decided to go with the usual simplistic apparmor way here - apparmor.profile - as I don't see much reason to be more paranoid.

Also, libasound, used in skype, gets quite noisy log-wise about not having the actual hardware on the system, but I felt bad about suppressing the whole stderr stream from skype (to not miss the crash/hang info there), so had to look up a way to /dev/null the alsa-lib output.

The general way seems to be having the "null" module as the "default" sink:

```
pcm.!default {
    type null
}
ctl.!default {
    type null
}
```

(libasound can be pointed to a local config by ALSA_CONFIG_PATH env var)
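
Putting the two together, a small Python sketch that writes the null-sink config above to a file and builds an environment for the skype process with ALSA_CONFIG_PATH pointing at it. The file name and the helper are invented for illustration; only the config contents and the env var come from the setup described here.

```python
import os
import tempfile

# The null-sink config from above.
ALSA_NULL_CONF = """\
pcm.!default {
    type null
}
ctl.!default {
    type null
}
"""

def alsa_null_env(conf_dir, base_env=None):
    """Write the config into conf_dir and return a copy of base_env
    (os.environ by default) with ALSA_CONFIG_PATH pointing at it."""
    path = os.path.join(conf_dir, "asound-null.conf")  # hypothetical name
    with open(path, "w") as f:
        f.write(ALSA_NULL_CONF)
    env = dict(os.environ if base_env is None else base_env)
    env["ALSA_CONFIG_PATH"] = path
    return env

env = alsa_null_env(tempfile.mkdtemp(), base_env={})
print(env)
# Then e.g.: subprocess.Popen(["skype"], env=env)
```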

That "null" module is actually a dynamically-loaded .so, but alsa prints just a single line about it being missing instead of an endless stream of complaints about missing hw, so the thing works, by accident.

Luckily, bitlbee has support for skype, thanks to vmiklos, with a sane way to run bitlbee and skype on different hosts (as is actually the case for me) through the "skyped" daemon talking to skype and bitlbee connecting to its tcp (tls-wrapped) socket.

Using the skyped shipped with bitlbee (which is a bit newer than the one on bitlbee-skype github) somewhat worked, with no ability to reconnect to it (hangs after handling the first connection), a ~1/4 chance of the connection from bitlbee failing, its persistence in starting skype (even though it's completely unnecessary in my case - systemd can do it way better) and such.

It's a fairly simple python script though, based on the somewhat unconventional Skype4Py module, so I was able to fix the most annoying of these issues (code can be found in the skype-space repo).

I'll try to get these merged into bitlbee, as I'm apparently not the only one having these issues (e.g. #966), but so many things seem to be broken in that code (esp. wrt socket handling) that I think some major rewrite is in order, and that might be much harder to push upstream.

One interesting quirk of skyped is that it uses TLS to protect connections (allowing full control of the skype account) between the bitlbee module and the daemon, but it doesn't bother with any authentication, making that traffic as secure as plaintext to anyone in-between.
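
For contrast, TLS only stops someone in-between if the connecting side actually checks the certificate it's given. A minimal sketch of a verifying client with Python's ssl module, assuming the daemon's (possibly self-signed) certificate gets copied to the client out-of-band; the cert file name, host and port in the usage line are placeholders, not anything skyped ships with:

```python
import socket
import ssl

def verifying_context(ca_file=None):
    """TLS client context that refuses unauthenticated servers.

    ca_file: the daemon's certificate (or its CA), distributed out-of-band;
    None means the system CA store is used instead.
    """
    ctx = ssl.create_default_context(cafile=ca_file)
    # Both are already the defaults of create_default_context(),
    # spelled out here since they're the whole point.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx

def connect(host, port, ca_file=None):
    # The handshake raises ssl.SSLCertVerificationError on a wrong/unknown cert.
    sock = socket.create_connection((host, port))
    try:
        return verifying_context(ca_file).wrap_socket(sock, server_hostname=host)
    except Exception:
        sock.close()
        raise

# Usage (placeholder values):
#   conn = connect("skyped.example.net", 12345, ca_file="skyped.pem")
```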

Quite a bit worse is that the traffic is documented as "encrypted", which might get one to think "ok, so running that thing on a vps I don't need ssh-tunnel wrapping", which is kinda sad.

Add to that the complexity it brings, segfaults in the plugin (crashing bitlbee), unhandled errors like:

```
Traceback (most recent call last):
  File "./skyped", line 209, in listener
    ssl_version=ssl.PROTOCOL_TLSv1)
  File "/usr/lib64/python2.7/ssl.py", line 381, in wrap_socket
    ciphers=ciphers)
  File "/usr/lib64/python2.7/ssl.py", line 143, in __init__
    self.do_handshake()
  File "/usr/lib64/python2.7/ssl.py", line 305, in do_handshake
    self._sslobj.do_handshake()
error: [Errno 104] Connection reset by peer
```

...and it seems to be the classic "doing it wrong" pattern.

Not that much of an issue in my case, but I guess there should at least be a

big red warning for that.

Functionality-wise, pretty much all I needed is there - one-to-one chats, bookmarked channels (as irc channels!), file transfers (just set "accept all" for these) with notifications about them, user info, contact list (add/remove with allow/deny queries).

But the most important thing by far is that it works at all, saving me plenty of work coding some skype-control interface over irc, though I'm very tempted to rewrite the "skyped" component, which is still a lot easier with the bitlbee plugin on the other end.

Units and configs for the whole final setup can be found on github.

Jan 27, 2013

Thought it should be (hardly) worth a notice that Skype (well, Microsoft now)

offers a thing called SkypeKit.

To get it, one has to jump through a dozen hoops, including a long registration form, a $5 "tax for your interest in our platform" and waiting an indefinite amount of time for an invite to the privileged circle of skype hackers.

Here's part of the blurb one has to agree to:

By registering with Skype Developer, you will have access to confidential

information and documentation relating to the SkypeKit program that has not

been publicly released ("Confidential Information") and you agree not to

disclose, publish or disseminate the Confidential Information to any third

party (including by posting on any developer forum); and to take reasonable

measures to prevent the unauthorised use, disclosure, publication or

dissemination of the Confidential Information.

Just WOW!

What colossal douchebags the people who came up with that must be.

I can't even begin to imagine the sheer scale of idiocy going on in an organization to come up with such things.

But I think I'd rather respect the right of whoever came up with that "hey, let's screw developers" policy, if only to avoid the (admittedly remote) chance of creating something useful for a platform like that.

Jan 27, 2013

Skype is a necessary evil for me, but just for text messages, and it's quite

annoying that its closed nature makes it hard to integrate it into existing

IM/chat infrastructure (for which I use ERC + ZNC + bitlbee + ejabberd).

So, finally got around to pushing the thing off my laptop machine.

Despite being quite a black-box product, skype has a surprisingly useful API, allowing one to do pretty much everything the desktop client can, accessible via several means, one of them being dbus.

Wish that API was accessible on one of their servers, but no such luck, I

guess. Third-party proxies are actually available, but I don't think +1 point of

trust/failure is necessary here.

Since they stopped providing amd64 binaries (and still no word of sources, of course) and all the local non-laptop machines around are amd64, an additional quirk is the choice between enabling multibuild and pulling everything up to and including Qt and WebKit onto the poor headless server, or just putting what skype needs there, built on a 32-bit machine.

Not too enthusiastic about building lots of desktop crap on an atom-based mini-ITX server, I decided to go with the latter option, and the dependency libs turned out to be fairly lean:

```
% ldd /opt/skype/skype | awk '$3 {print $3}' |
    xargs ls -lH | awk '{sum+=$5} END {print sum}'
49533468
```
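
The same measurement as a Python sketch, in case the awk one-liner is hard to read: take the resolved path after "=>" from each `ldd` output line and sum the file sizes, following symlinks like `ls -lH` does. Unlike the pipe above, it also skips "not found" entries rather than passing them to ls.

```python
import os
import subprocess

def linked_libs(ldd_output):
    """Resolved library paths from `ldd` lines like
    'libfoo.so.1 => /usr/lib/libfoo.so.1 (0xf7000000)'."""
    libs = []
    for line in ldd_output.splitlines():
        fields = line.split()
        # awk's '$3 {print $3}', minus "=> not found" entries.
        if len(fields) >= 3 and fields[1] == "=>" and fields[2].startswith("/"):
            libs.append(fields[2])
    return libs

def total_size(paths):
    # os.path.getsize() follows symlinks, same as `ls -lH`.
    return sum(os.path.getsize(p) for p in paths)

def deps_size(binary):
    out = subprocess.run(["ldd", binary], capture_output=True,
                         text=True, check=True).stdout
    return total_size(linked_libs(out))

# Usage: print(deps_size("/opt/skype/skype"))
```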

Naturally, 50M is not an issue for reasonably modern amounts of RAM.

But, of course, skype runs on an X server, so Xvfb (a cousin of X, drawing to memory instead of some GPU hardware) is needed:

```
# cave resolve -zx1 xorg-server x11vnc fluxbox
```

The concrete example above is for source-based exherbo; I think binary distros like debian might package the Xvfb binary separately from X (in some "xvfb" package).

fluxbox is there to have easy time interacting with skype-created windows.

Note - no heavy DE stuff is needed here, and as I was installing it on a machine hosting the cairo-based graphite web frontend, barely any packages were actually needed, aside from a bunch of X protocol headers and the things specified.

So, to run Xvfb with VNC, I found a bunch of simple shell scripts, which were guaranteed not to provide a lot of things a normal desktop session does, to miss stray pids, create multiple instances of all the things involved, lose output, have no xdg session, etc.

In the general (and incomplete) case, something like this should be done:

```
export DISPLAY=:0
Xvfb $DISPLAY -screen 0 800x600x16 &
x11vnc -display $DISPLAY -nopw -listen localhost &
fluxbox &
skype &
wait
```

So, to not reinvent the same square wheel, decided to go with trusty systemd --user, as it's used as the system init anyway.

skype-desktop.service:

```
[Service]
User=skype
PAMName=login
Type=notify
Environment=DISPLAY=:1
Environment=DBUS_SESSION_BUS_ADDRESS=unix:path=%h/tmp/session_bus_socket
ExecStart=/usr/lib/systemd/systemd --user

[Install]
WantedBy=multi-user.target
```

Aside from a few quirks like hardcoding the dbus socket, that already takes care of a lot of XDG_*-related env stuff, proper start/stop cleanup (no process escapes from that cgroup), monitoring (state transitions for services are echoed on irc to me), logging (all output ends up in the queryable journal and syslog) and such, so I highly recommend not going the "simple" bash way here.

Complementary session units generally look like this (Xvfb.service):

```
[Service]
SyslogIdentifier=%p
ExecStart=/usr/bin/Xvfb $DISPLAY -screen 0 800x600x16
```

And with "systemctl start skype-desktop", a nice (but depressingly empty) fluxbox desktop is now accessible over ssh+vnc (I don't trust vnc enough to run it on non-localhost, plus it should rarely be needed anyway):

```
% ssh -L 5900:localhost:5900 user@host &
% vncclient localhost
```

Getting skype to run on the target host was a bit more difficult than I expected though - the local x86 machine has -march=native in CFLAGS and a core-i3 cpu, so just copying binaries/libs resulted in a predictable:

```
[271817.608818] traps: ld-linux.so.2[7169] trap invalid opcode ip:f77dad60 sp:ffb91860 error:0 in ld-linux.so.2[f77c6000+20000]
```

Fortunately, there are always generic-arch binary distros, so I had to spin up qemu with an ubuntu livecd iso, install skype there and run the same collect-all-the-deps script.

Basically, what's needed for skype to run is its own data/media files ("/opt/skype", "/usr/share/skype"), binary ("/usr/lib/skype", "/opt/skype/skype") and all the .so's it's linked against.

There's no need to put them all in "/usr/lib" or such, aside from "ld-linux.so.2", the path to which ("/lib/ld-linux.so.2") is hard-compiled into the skype binary (and is honored by the linker).

It should be possible to change it there, but iirc skype checks its binary checksum as well, so it might be a bit more complicated than just "sed".

"LD_LIBRARY_PATH=. ./skype --resources=." is the recipe for dealing with the rest.
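
Written out as a Python helper (e.g. for a wrapper script, or to sanity-check the layout before a service runs it), assuming binary, libs and resources were all copied into one directory; the helper names and the directory below are illustrative only:

```python
import os

# Hard-coded into the skype binary as its program interpreter, so it has to
# exist at exactly this path; LD_LIBRARY_PATH doesn't apply to it.
LOADER = "/lib/ld-linux.so.2"

def skype_command(skype_dir, base_env=None):
    """(argv, env) equivalent of running
    `LD_LIBRARY_PATH=. ./skype --resources=.` from inside skype_dir."""
    skype_dir = os.path.abspath(skype_dir)
    env = dict(os.environ if base_env is None else base_env)
    env["LD_LIBRARY_PATH"] = skype_dir
    argv = [os.path.join(skype_dir, "skype"), "--resources=" + skype_dir]
    return argv, env

def loader_present(path=LOADER):
    return os.path.exists(path)

argv, env = skype_command("/opt/skype-bundle", base_env={})
print(argv)  # ['/opt/skype-bundle/skype', '--resources=/opt/skype-bundle']
# Then e.g.: subprocess.Popen(argv, env=env, cwd="/opt/skype-bundle")
```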

Yay!

So, on to the API-to-IRC scripts then... probably in the next entry, as I get to these myself.

Also following might be a revised apparmor profile for such a setup and maybe a script to isolate the whole thing even further into namespaces (which is an interesting thing to try, but not sure how useful it might be with an LSM already in place).

All the interesting stuff for the whole endeavor can be found in the ad-hoc repo

I've created for it: https://github.com/mk-fg/skype-space

Jan 25, 2013

Ditched the bloog engine here in favor of static pelican yesterday, and while I was able to remember about keeping legacy links working, I'm pretty sure I forgot about guids in the feed, so apologies to anyone who might care.

Guess it's pointless to fix these now.

All the entries can be found on github now in rst-format, though older ones

might be a bit harder to read in the source, as they were mostly auto-converted

by pandoc and I only checked if they're still rendered correctly to html.

As appengine also made me migrate from master-slave db replication to the shiny

high-replication blobstore, I wonder if hosting static html here now counts as

abuse...

Jan 21, 2013

As I've been decompiling the dynamic E config in the past anyway, wanted to back it up to a git repo along with the rest of them.

Quickly stumbled upon a problem though - while E doesn't really modify it without me making some conscious changes, it reorders (or at least eet produces such) sections and values there, making a straight dump to git a bit more difficult.

Plus, I have a pet project to update the background, and it also introduces transient changes, so some pre-git processing was in order.

e.cfg looks like this:

```
group "E_Config" struct {
    group "xkb.used_options" list {
        group "E_Config_XKB_Option" struct {
            value "name" string: "grp:caps_toggle";
        }
    }
    group "xkb.used_layouts" list {
        group "E_Config_XKB_Layout" struct {
            value "name" string: "us";
...
```

A simple way to make it "canonical" is just to order groups/values there alphabetically, blanking out some transient ones.

That needs a parser, and while regexps aren't really suited to that kind of

thing, pyparsing should work:

```python
import pyparsing as pp

number = pp.Regex(r'[+-]?\d+(\.\d+)?')
string = pp.QuotedString('"') | pp.QuotedString("'")
value_type = pp.Regex(r'\w+:')
group_type = pp.Regex(r'struct|list')
value = number | string

block_value = pp.Keyword('value')\
    + string + value_type + value + pp.Literal(';')

block = pp.Forward()
block_group = pp.Keyword('group') + string\
    + group_type + pp.Literal('{') + pp.OneOrMore(block) + pp.Literal('}')
block << (block_group | block_value)

config = pp.StringStart() + block + pp.StringEnd()
```

Fun fact: this parser doesn't work.

It bails with some error in the middle of the large (~8k lines) real-world config, while working for all the smaller pet samples.

I guess some buffer size must be tweaked (kinda unusual for a python module though), or maybe I made a mistake there, or something like that.

So, yapps2-based parser:

```
parser eet_cfg:
    ignore: r'[ \t\r\n]+'
    token END: r'$'
    token N: r'[+\-]?[\d.]+'
    token S: r'"([^"\\]*(\\.[^"\\]*)*)"'
    token VT: r'\w+:'
    token GT: r'struct|list'

    rule config: block END {{ return block }}

    rule block: block_group {{ return block_group }}
        | block_value {{ return block_value }}

    rule block_group:
        'group' S GT r'\{' {{ contents = list() }}
        ( block {{ contents.append(block) }} )*
        r'\}' {{ return Group(S, GT, contents) }}

    rule value: S {{ return S }} | N {{ return N }}

    rule block_value: 'value' S VT value ';' {{ return Value(S, VT, value) }}
```

Less verbose (even with more processing logic here) and works.

Embedded in python code (doing the actual sorting), it all looks like this (might be useful for working with E configs, btw).
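
The actual sorting code isn't reproduced here, so below is a sketch of what the canonicalization step could look like, operating on the Group/Value nodes the grammar above returns. The namedtuple field names, names already stripped of their quotes, values kept as raw literal text, and the TRANSIENT set are all assumptions for this example:

```python
from collections import namedtuple

# Assumed shapes for the grammar's Group(S, GT, contents) / Value(S, VT, value);
# names are stored unquoted, values as the raw literal text (e.g. '"us"', '1').
Group = namedtuple("Group", "name type contents")
Value = namedtuple("Value", "name type value")

# Hypothetical names of values that change on their own (e.g. a background
# updater); they get blanked so they don't produce noise in git.
TRANSIENT = {"desktop_default_background"}

def canonical(node):
    if isinstance(node, Value):
        if node.name in TRANSIENT:
            return node._replace(value='""' if node.type == "string:" else "0")
        return node
    contents = [canonical(c) for c in node.contents]
    # Groups before values, each alphabetically. sort() is stable, so items
    # of a list group (which all share one name) keep their original order.
    contents.sort(key=lambda c: (isinstance(c, Value), c.name))
    return node._replace(contents=contents)

def dump(node, indent=0):
    # Serialize back into the `eet -d` text format shown above.
    pad = "    " * indent
    if isinstance(node, Value):
        return f'{pad}value "{node.name}" {node.type} {node.value};'
    lines = [f'{pad}group "{node.name}" {node.type} {{']
    lines += [dump(c, indent + 1) for c in node.contents]
    lines.append(pad + "}")
    return "\n".join(lines)

tree = Group("E_Config", "struct", [
    Value("zoom", "int:", "1"),
    Group("xkb.used_layouts", "list", [
        Group("E_Config_XKB_Layout", "struct", [Value("name", "string:", '"us"')]),
        Group("E_Config_XKB_Layout", "struct", [Value("name", "string:", '"ru"')]),
    ]),
    Value("desktop_default_background", "string:", '"/tmp/bg.edj"'),
    Value("border_shade", "int:", "0"),
])
print(dump(canonical(tree)))
```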

yapps2 actually generates quite readable code from it, and it was just

simpler (and apparently more bugproof) to write grammar rules in it.

ymmv, but it's a bit of a shame that pyparsing seems to be about the only developed parser-generator of such kind for python these days.

Had to package the yapps2 runtime to install it properly, applying some community patches (from the debian package) in the process and replacing some scary cli code from 2003. Here's a fork.

Jan 16, 2013

It's a documented feature that the 0.17.0 release (even if a late pre-release version was used before) throws the existing configuration out of the window.

I'm not sure what warranted such a drastic usability bomb, but it's not

actually as bad as it seems - like 95% of configuration (and 100% of

*important* parts of it) can be just re-used (even if you've already started

new version!) with just a little bit of extra effort (thanks to ppurka in #e

for pointing me in the right direction here).

Sooo wasn't looking forward to restoring all the keyboard bindings, for one thing (that's why I actually did the update just a week ago or so).

E is a bit special (at least among wm's - fairly sure some de's do similar things as well) in that it keeps its settings on disk compiled and compressed (with eet) - but it's much easier to work with than it might sound at first.

So, to get the bits of config migrated, one just has to pull the old (pre-zero) config out, then start the zero-release e to generate a new config, decompile both of these, pull compatible bits from the old into the new one, then compile it and put it back into "~/.e/e/config".

Before the zero update, the config can be found in "~/.e/e/config/standard/e.cfg".

If release version was started already and dropped the config, then old one

should be "~/.e/e/config/standard/e.1.cfg" (or any different number instead of

"1" there, just mentally substitute it in examples below).

Note that "standard" there is a profile name; if yours might be called differently, check "~/.e/e/config/profile.cfg" (the profile name should be readable there, or use "eet -x ~/.e/e/config/profile.cfg config").

"eet -d ~/.e/e/config/standard/e.cfg config" should produce a perfectly readable version of the config on stdout.

Below is how I went about the whole process.

Make a repository to track changes (will help if the process takes more merge-test iterations than one):

```
% mkdir e_config_migration
% cd e_config_migration
% git init
```

Before the zero update:

```
% cp ~/.e/e/config/standard/e.cfg e_pre_zero
% eet -d e_pre_zero config > e_pre_zero.cfg
```

Start E-release (wipes the config, produces a "default" new one there).

```
% cp ~/.e/e/config/standard/e.cfg e_zero
% eet -d e_zero config > e_zero.cfg
% git add e_*
% git commit -m "Initial pre/post configurations"
% emacs e_pre_zero.cfg e_zero.cfg
```

Then copy all the settings that were used in any way to e_zero.cfg.

I copied pretty much all the sections with relevant stuff, checking that the keys in them are the same - and they were, but I'd used 0.17.0alpha8 before going for the release; if not, I'd just try "filling in the blanks", or, failing that, just use the old settings as a "what has to be set up through the settings panel" reference.

To be more specific - "xkb" options/layouts (have 2 of them set up), shelves/gadgets (didn't have these, and was too lazy to click-remove the existing ones), "remembers" (huge section, copied all of it, worked!), all "bindings" (a pain to set up).

After all these sections, there's a flat list of "value" things, which turned

out to contain quite a lot of hard-to-find-in-menus parameters, so here's what I

did:

- Copy that list (~200 lines) from the old config to some file - say, "values.old", then from the new one to e.g. "values.new".
- sort -u values.old > values.old.sorted; sort -u values.new > values.new.sorted
- diff -uw values.{old,new}.sorted

That should show everything that might need to be changed in the new config, with descriptive names, and reveal all the genuinely new parameters.

Just don't touch "config_version" value, so E won't drop the resulting

config.
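
The same sort-and-diff step as a Python sketch, e.g. for when it has to be repeated over several merge iterations. Collapsing whitespace runs only approximates `diff -w` (which ignores all whitespace), and the file labels are just cosmetic:

```python
import difflib

def normalized(lines):
    # Roughly `sort -u` plus `diff -w`: collapse whitespace, drop blanks/dupes.
    return sorted({" ".join(line.split()) for line in lines if line.strip()})

def diff_values(old_lines, new_lines):
    return list(difflib.unified_diff(
        normalized(old_lines), normalized(new_lines),
        "values.old.sorted", "values.new.sorted", lineterm=""))

old = ['value "zoom" int: 1;', '  value "b" int: 2;']
new = ['value "zoom" int: 2;', 'value "b"  int: 2;', 'value "new_thing" int: 0;']
print("\n".join(diff_values(old, new)))
```

Changed and new values show up as -/+ lines; a "config_version" difference will show up there too and, as noted above, should be left as it is in the new config.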

After all the changes:

```
% eet -e e_zero config e_zero.cfg 1
% git commit -a -m Merged-1
% cp e_zero ~/.e/e/config/standard/e.cfg
% startx
```

The new config worked for me with all the changes I'd made - wasn't sure if I could copy *that* much from the start, but it turned out that almost no reconfiguration was necessary.

Caveat is, of course, that you should know what you're doing here, and be ready

to handle issues / rollback, if any, that's why putting all these changes in git

might be quite helpful.

Sep 16, 2012

Right now I'm working on the python-skydrive module and further integration of MS SkyDrive into tahoe-lafs as a cloud backend, to keep the stuff you really care about safe.

And even if you don't trust SkyDrive to keep stuff safe, you still have to register your app with these guys, especially if it's an open module, because "You are solely and entirely responsible for all uses of Live Connect occurring under your Client ID." and it's unlikely that a generic python interface author will vouch for all its uses like that.

What does "register app" mean? Agreeing to yet another "Terms of Service", of course!

Does anyone ever read these?

What the hell "You may only use the Live SDK and Live Connect APIs to create

software." sentence means there?

Did you know that "You are solely and entirely responsible for all uses of

Live Connect occurring under your Client ID." (and that's an app-id, given out

to the app developers, not users)?

How much more of such "interesting" stuff is there?

I hardly care enough to read, but there's an app for

exactly that, and it's relatively well-known by now.

What might not be as well-known is that there's now a campaign on IndieGoGo to keep the thing alive and make it better.

Please consider supporting the movement in any way, even just by spreading the word; right now, it's really one of the areas where filtering out all the legalese crap and noise is badly needed.

http://www.indiegogo.com/terms-of-service-didnt-read

Aug 16, 2012

A friend gave me this thing to play with (and eventually adapt to his purposes).

What's interesting here is that TI seems to give these things out for free.

Seriously, a box with a debug/programmer board and two microcontroller chips (which are basically your programmable computer with RAM, non-volatile flash memory, lots of interfaces, temp sensor, watchdog, etc, that can be powered from 2 AA cells), shipped to any part of the world with FedEx for a beer's worth - $5.

Guess it's time to put a computer into every doorknob indeed.

Aug 09, 2012

Having a bit of free time recently, worked a bit on the feedjack web rss reader / aggregator project.

To keep track of what's already read and what's not, historically I've used a js + client-side localStorage approach, which has quite a few advantages:

- Works with multiple clients, i.e. everyone has their own state.
- Server doesn't have to store any data for a possibly-infinite number of clients, not even session or login data.
- Same pages can still be served to all clients, some will just hide unwanted content.
- Previous point leads to pages being very cache-friendly.
- No need to "recognize" the client in any way, which is commonly achieved with authentication.
- No interaction of the "write" kind with the server means much less potential for abuse (DDoS, spam, other kinds of exploits).

The flip side of that rosy picture is that localStorage only works in one browser (or possibly several synced instances), which is quite a drag, because one advantage of a web-based reader is that it can be accessed from anywhere, not just a single platform, where you might as well install a specialized app.

To fix that unfortunate limitation, about a year ago I added an ad-hoc storage mechanism to just dump localStorage contents as json to some persistent storage on the server, authenticated by a special "magic" header from the browser.

It was never a public feature, basically requiring some browser tweaking and being the server admin.

Recently, however, the remoteStorage project from the unhosted group caught my attention.

The idea itself and the movement's goals are quite ambitious and otherwise awesome - to return to the "decentralized web" idea, using simple, already available mechanisms like webfinger for service discovery (reminiscent of the Thimbl concept by telekommunisten.net), WebDAV for storage and OAuth2 for authorization (meaning no special per-service passwords or similar crap).
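
As an illustration of the discovery part, here's a Python sketch of a WebFinger lookup as later standardized in RFC 7033 (a JSON "JRD" document under "/.well-known/webfinger"). The remoteStorage drafts of the time may well have used the older host-meta flavour, so treat this as an approximation; the link relation is passed in by the caller rather than assumed.

```python
import json
import urllib.parse
import urllib.request

def webfinger_url(account):
    """RFC 7033 lookup URL for an account like "user@example.com"."""
    host = account.rsplit("@", 1)[1]
    query = urllib.parse.urlencode({"resource": "acct:" + account})
    return f"https://{host}/.well-known/webfinger?{query}"

def find_link(jrd, rel):
    # First link with the wanted relation type from a JRD (JSON) document.
    for link in jrd.get("links", []):
        if link.get("rel") == rel:
            return link
    return None

def discover(account, rel):
    # Network call; rel is whatever relation the storage protocol specifies.
    with urllib.request.urlopen(webfinger_url(account)) as resp:
        return find_link(json.load(resp), rel)

print(webfinger_url("user@example.com"))
# https://example.com/.well-known/webfinger?resource=acct%3Auser%40example.com
```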

But the most interesting thing I've found about it is that it should actually be easier to use than writing an ad-hoc client syncer and server storage implementation - just put the off-the-shelf remoteStorage.js on the page (it even includes a "syncer" part to sync localStorage to a remote server), deploy or find any remoteStorage provider and you're all set.

In practice, it works as advertised, but it will see quite significant changes soon (with the release of the 0.7.0 js version) and had only an ad-hoc proof-of-concept server implementation in python (though there's also ownCloud in php and node.js/ruby versions), so I wrote the django-unhosted implementation, which is basically glue between simple WebDAV, oauth2app and the Django Storage API (which has backends for everything).
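
To show what that kind of glue amounts to, here's a minimal Python sketch of WebDAV-ish GET/PUT/DELETE on top of a storage object. This is not django-unhosted's code: the in-memory class only mimics the exists/open/save/delete subset of Django's Storage API (with real Django, the body would be wrapped in a ContentFile), and allowed_prefixes is a made-up stand-in for real OAuth2 scope checking.

```python
import io

class MemoryStorage:
    """Stand-in for the bits of Django's Storage API used below
    (exists/open/save/delete); any real storage backend could go here."""
    def __init__(self):
        self.files = {}
    def exists(self, name):
        return name in self.files
    def open(self, name, mode="rb"):
        return io.BytesIO(self.files[name])
    def save(self, name, content):
        self.files[name] = content.read()
        return name
    def delete(self, name):
        self.files.pop(name, None)

def handle(storage, method, path, body=b"", allowed_prefixes=()):
    """Minimal WebDAV-ish GET/PUT/DELETE over a storage, returning
    (status, body). allowed_prefixes stands in for whatever paths an
    already-validated OAuth2 token grants access to."""
    if not any(path.startswith(p) for p in allowed_prefixes):
        return 403, b""
    exists = storage.exists(path)
    if method == "GET":
        if not exists:
            return 404, b""
        with storage.open(path) as f:
            return 200, f.read()
    if method == "PUT":
        if exists:
            # Django storages pick a new available name instead of overwriting.
            storage.delete(path)
        storage.save(path, io.BytesIO(body))
        return (204 if exists else 201), b""
    if method == "DELETE":
        if not exists:
            return 404, b""
        storage.delete(path)
        return 204, b""
    return 405, b""
```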

Using that thing in feedjack now (here, for example) instead of that hacky json cache I had, with django-unhosted deployed on my server, which also allows using it with all the apps out there that support it.

Looks like a really neat way to provide some persistent storage for any webapp

out there, guess that's one problem solved for any future webapps I might

deploy that will need one.

With JS now able to even load and use binary blobs (like images) that way, it becomes possible to write even an unhosted facebook, with only events like status updates still aggregated and broadcast through some central point.

I bet there's gotta be something similar, but with facebook, twitter or maybe github backends, but as proven in many cases, it's not quite sane to rely on these centralized platforms for any kind of service, which is especially a pain if the implementation there is one-platform-specific, unlike one remoteStorage protocol for any of them.

Would be really great if they'd support some protocol like that at some point

though.

But aside from the short-term "problem solved" thing, it's really nice to see such movements out there, even though the whole stack of market incentives (which heavily favors control over data, centralization and monopolies) is against them.