Jan 12, 2026

When tinkering with something outside PC/laptop screen, I often need a reminder

pad for a wiring schematic or recipe, or even some hotkeys, maps and other quickref-data

when playing games on main display, and I'm using e-ink pad for that,

combining any kind of text/icons/diagrams/images/etc into one reference card

via common GIMP image editor.

Particular pad that I have is a relatively cheap 7.5" WaveShare NFC-powered e-Paper one

from AliExpress, which is thin and light, as it doesn't have a battery, and is updated by

NFC from a phone (quick demo like this 30s youtube video might help to get the idea).

As an aside, full process of updating such pad goes something like this:

- Cook up whatever thing in GIMP, Ctrl-E there to save 1-bit PNG.

- Grab phone, tap BT and NFC tiles to enable those on it.

- Run bt-obex -p <phone-addr> image.png from shell history (bluez-tools).

- Tap "Accept" on the phone, run nfc-epaper-writer app and "Load Image" there.

- Put phone on the tablet, wait some 5-10s to upload/refresh epaper display image.

- Disable BT/NFC, put phone away, grab the pad and be off to do something with it.

Phone works as a cache for images, which can later be put onto pad in one step,

but other than that, eink pad with updates via USB cable would've had fewer steps.

So NFC power+updates there sounds neat, but not actually that useful.

Upside of eink for this is that whatever info/reminder always stays there to check

anytime (incl. hours, days or months later), without needing free/clean hands to drop

everything and tinker with small phone screen, which has to be clumsily tapped/scrolled,

goes to sleep, discharges and dies, wash hands afterwards, etc.

But downside of this particular pad at least, is that it's purely 1-bit monochrome,

i.e. not even grayscale, has only black-or-white pixels.

Which tbf has its own charm with dithering for images (see didder wrapper at the end),

icons/schematics there just don't need color, and large/bold text is perfectly fine

with it too.

For a long bunch of text or md data tables however (csvmd can align those nicely),

fonts tend to be small and can look a bit grating when line thickness in them flips

between 1-2px within same glyphs/letters somewhat arbitrarily after scaling.

Monochrome bitmap fonts don't have that issue, and work great in this

particular use-case, as they were made for similar low pixel density monochrome

displays, where every dot was placed manually for best human-eye legibility

at that exact size.

Only problem is that they're kinda out of fashion nowadays, as modern

displays don't need them, and tend to be only supported in terminal emulator apps

(likely because unix people are used to them), embedded programming with 1-bit LED

panels or similar e-paper displays, and retro-styled pixel-art game engines.

I still use XTerm as a day-to-day terminal emulator myself (fast -

compatible - familiar), which still uses bitmap fonts by default,

misc-fixed 9x18 font in particular. It still looks great for me on up

to 1080p displays, with incredibly crisp and distinctive letters,

but HiDPI displays probably have that with any vector fonts too.

GIMP and all apps based on GTK toolkit have dropped support for PCF/BDF

bitmap fonts some years ago in particular (around 2019-ish), which seem to be

most common formats for these, so I was using worse-looking scaled-down

TTFs/OTFs for smaller monochrome text, until I finally bothered to look up

how to fix the issue.

One obvious fix can be to grab some old GIMP AppImage - e.g.

aferrero2707/gimp-appimage releases date back to 2018, so should work -

but modern GIMP has nice features too, and jumping back-and-forth between

the two or only sticking to an ancient version seems kinda silly.

Another (better) fix can be to edit whatever text in emacs, render it out and

paste into GIMP - monobit-banner tool from monobit project can do that.

For all its oddities, interactive text editing in GIMP - using multiple boxes,

reflowing, condensing, etc - is still way nicer than that paste-and-see method,

and looking up options, I've stumbled upon a great (and surprisingly recent)

2025 Libre Graphics Meeting (LGM) "Let's All Go Back To Bitmap Fonts!" talk

by Nathan Willis, which presents a working solution for this particular problem

as a part of it (and covers other issues related to bitmap fonts too) - convert

font to OpenType Bitmap format (OTB, .otb), which is still widely supported.

So it looks like support for bitmap fonts isn't completely gone yet,

just need to use that specific format instead of more common old PCF files.

Same monobit toolkit works great for converting to OTB as well:

    monobit-convert /usr/share/fonts/misc/9x18.pcf.gz \
      set-property family Fixed set-property subfamily 9x18 \
      to fixed-9x18.otb --overwrite

Resulting .otb file can be dropped into ~/.fonts/ and GIMP will pick it up there

(or from any dir under Edit - Preferences - Folders - Fonts), displaying with

"<family> <subfamily>" name in the font selection dialogs, hence overriding

those to "Fixed 9x18" above, to know specific matching height to pick for it.
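For converting a whole directory of fonts, same command can be generated in a loop. A minimal sketch of that, where otb_convert_cmd / convert_all names, lowercase "family-subfamily.otb" output naming and the *.pcf.gz glob are my assumptions here, and only monobit-convert arguments shown above are used:

```python
import pathlib, subprocess

def otb_convert_cmd(src, dst_dir='.', family='Fixed'):
    'Build monobit-convert command line for one PCF/BDF font file'
    # Subfamily is taken from the file name, e.g. "9x18" from "9x18.pcf.gz"
    subfamily = pathlib.Path(src).name.split('.', 1)[0]
    dst = pathlib.Path(dst_dir) / f'{family.lower()}-{subfamily}.otb'
    return [ 'monobit-convert', str(src),
        'set-property', 'family', family, 'set-property', 'subfamily', subfamily,
        'to', str(dst), '--overwrite' ]

def convert_all(src_dir, dst_dir, family='Fixed'):
    'Run conversion for every *.pcf.gz under src_dir (hypothetical helper)'
    for src in sorted(pathlib.Path(src_dir).glob('*.pcf.gz')):
        subprocess.run(otb_convert_cmd(src, dst_dir, family), check=True)
```

Something like convert_all('/usr/share/fonts/misc', '~/.fonts') would likely need per-font family names in practice, as not everything in that dir is misc-fixed.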

GIMP or its underlying font rendering libs also seem smart about scaling these

OTB fonts only in some discrete steps to avoid losing their distinctive sharp edges,

but there's probably little practical reason to do that - they're already tiny

(e.g. misc-fixed has 4x6 variant), and vector fonts work fine for larger sizes.

It's a niche use-case for sure, but still nice that all those hand-crafted

pixel-perfect fonts from past decades of computer history seem to still be usable

with modern tools without too much hassle.

Side-note on dithering - there's a nice non-interactive didder tool for that,

but usually it's even nicer to interactively tweak strength/brightness

parameters for each specific image, depending on its overall contents and what

it will be used for.

(e.g. background image can probably have fewer black pixels, although contrast

text halo/outline usually takes care of any foreground-font legibility issues,

but inherent contrast with subdued outlines looks better)

My basic ad-hoc solution to turning that non-interactive tool into an interactive

one, is to wrap it into a bash script, using zenity to display a couple sliders

for those parameters:

    #!/bin/bash

    src=$1 dst=$2
    [[ -n "$src" && -n "$dst" ]] || { echo >&2 "Usage: $0 image.src.png image.png"; exit 1; }

    didder=./didder_1.3.0_linux_64-bit didder_args=(
      # Added to --strength N% --brightness M% from zenity
      -x 800 -y 480 -p '0 255' -i "$src" -o "$dst" bayer 32x32 )
    feh=feh feh_args=( # used to display and auto-reload image on the second screen
      -ZNxsrd. -g=1920x1080+1920 -B checks --info 'echo -e " [ %t %wx%h %Ppx %SB %z%% ]\n"' )

    declare -A last; render= open=t
    fdlinecombine \
      <(zenity --title='dither strength' --scale --text='' --print-partial \
        --step=1 --min-value 0 --max-value 100 | stdbuf -oL sed 's/^/str /') \
      <(zenity --title='dither brightness' --scale --text='' --print-partial \
        --step=1 --min-value 0 --max-value 100 | stdbuf -oL sed 's/^/br /') |
    while read -rt 0.5 t val ||:; do
      [[ -z "$val" ]] || { last[$t]=$val; render=t; continue; }
      [[ -z "$render" ]] && continue || render=
      [[ -n "${last[str]}" && -n "${last[br]}" ]] || continue
      opts=( --strength "${last[str]}"% --brightness "${last[br]}"% )
      echo "[ $(printf '%(%F %T)T' -1) ] Render: ${opts[@]}"
      "$didder" "${opts[@]}" "${didder_args[@]}" && sleep 0.5
      [[ -z "$open" ]] || { "$feh" "${feh_args[@]}" "$(realpath "$dst")" & open=; }
    done

It puts feh image-viewer on the second screen and auto-reloads images after every tweak,

with bash running didder in an event-loop after some debouncing.

Small non-posix fdlinecombine tool is used there to merge parameter updates from

any number of sliders, but can probably be replaced by tail, subshell or something

more generic, I just tend to use it in a pinch for such dynamic-concatenation needs.
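Debouncing logic in that loop might be easier to see outside of bash. Here's a rough python simulation of it over a list of timestamped slider events, not a replacement for the script - debounced_renders name and the event-tuple format are made up for this illustration:

```python
def debounced_renders(events, quiet=0.5, required=('str', 'br')):
    '''Simulate debounce from the script above.
    events is a time-sorted list of (time, slider, value) tuples.
    Returns list of (render_time, params) for each render that would run.'''
    last, renders = dict(), list()
    for i, (ts, key, val) in enumerate(events):
        last[key] = val  # same as last[$t]=$val in bash
        ts_next = events[i + 1][0] if i + 1 < len(events) else float('inf')
        # Render only when no new value shows up within the quiet period,
        #  and only after every slider has reported a value at least once
        if ts_next - ts >= quiet and all(k in last for k in required):
            renders.append((ts + quiet, dict(last)))
    return renders

if __name__ == '__main__':
    events = [(0.0, 'str', 10), (0.25, 'str', 20), (0.5, 'br', 50), (2.0, 'br', 60)]
    for ts, params in debounced_renders(events): print(ts, params)
```

So dragging a slider through a bunch of values only runs didder once, after it stays put for half a second.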

Nov 02, 2025

Using standard rm(1) tool in something like a file-backup script,

with any "untrusted" list of paths OR an untrusted dir is wildly unsafe,

but it's kinda frustrating to me that it doesn't have to be.

On modern linux, "rm" can fairly easily have some --restrict-to-dir

option, which guarantees that all removed files will be under that dir,

making it much safer to use in a script which needs to e.g. run comm(1)

on some file-lists and remove a bunch of unneeded ones from some storage-dir.

Without such tool, using old "rm" has many semantic and TOCTOU issues:

- Paths can be plain-bad like /etc/passwd.

- Sneakier version of that can be /mnt/storage/../../etc/passwd.

- Or what if /mnt/storage/somedir is a symlink to /etc,

even if path on the list is nominally /mnt/storage/somedir/passwd.

- And even if /mnt/storage/somedir checks out to be a real dir to stat()

or such, if you run straight-up "rm" or unlink() on that path, it might be

quickly replaced by a symlink right after that check.

- Relative paths add another layer of mess into this.

- In addition to symlinks there are also mountpoints, which do same thing

too, although in fewer potential scenarios.

To fix all of these issues, linux has openat2() syscall since 5.6,

which supports using following simple pattern to avoid everything listed above:

- Given some path, run p = realpath(path) on it.

  It either resolves to a canonical absolute form, with no-symlink
  components separated by single slashes in there, or immediately returns
  an errno code if the path is missing or inaccessible.

- Check all restrictions on that canonical path p, e.g. whether it's under
  realpath of the dir you want it to be ("realpaths" are nicely string-comparable).

- Run fd = openat2(p, RESOLVE_NO_SYMLINKS) to open that path (with optional
  RESOLVE_NO_XDEV also in there to prevent racy mountpoints), and only use that
  fd for the file/dir/etc from now on.

  Error here will indicate that something changed since realpath() was used,
  and you either have to run it again (where realpath() will likely fail too),
  or treat that as a special "file vanished" error.

Afaik this should shut down any symlink-related race-conditions, as openat2()

ensures that realpath you check is the one you'll end up opening, with nothing

redirecting it in-between these calls.

For removing files in "rm" tool, you don't really "open" files themselves,

instead open their dirs - e.g. produced by realpath(dirname(file)) -

with same exact check-sequence, and unlinkat() the name there.

So using openat2() + unlinkat() combo instead of direct unlink(file) allows

to introduce "make sure you only remove stuff under <this-dir>" safety restriction,

which can be nice even to just protect against typos and accidental spaces in

human-input paths (see many examples like rm -rf /usr /lib/nvidia-current/xorg/xorg

in bumblebee years ago), but especially useful in a script or tool working

with some specific storage dir, which is very common.
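Same realpath + openat2 + unlinkat sequence can be sketched in python. Its os module has no openat2() wrapper afaik, so this goes through a raw syscall via ctypes, and it's a rough illustration, not how rmx.c is implemented. Syscall number 437 (should be the same on most architectures), helper names and using O_PATH for the dir fd are my assumptions here:

```python
import ctypes, os

SYS_OPENAT2 = 437  # assumed syscall number, check it for your architecture
AT_FDCWD = -100
RESOLVE_NO_XDEV, RESOLVE_NO_SYMLINKS = 0x01, 0x04  # from linux/openat2.h

class OpenHow(ctypes.Structure):  # mirrors kernel "struct open_how"
    _fields_ = [ ('flags', ctypes.c_uint64),
        ('mode', ctypes.c_uint64), ('resolve', ctypes.c_uint64) ]

_libc = ctypes.CDLL(None, use_errno=True)
_libc.syscall.restype = ctypes.c_long

def openat2_dir(path, resolve=RESOLVE_NO_SYMLINKS):
    'Open dir via openat2(), failing if any path component is a symlink'
    how = OpenHow(os.O_PATH | os.O_DIRECTORY | os.O_CLOEXEC, 0, resolve)
    fd = _libc.syscall( ctypes.c_long(SYS_OPENAT2), ctypes.c_int(AT_FDCWD),
        ctypes.c_char_p(os.fsencode(path)), ctypes.byref(how),
        ctypes.c_size_t(ctypes.sizeof(how)) )
    if fd < 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err), path)
    return fd

def check_under(path, root):
    'Return (parent-dir realpath, file name), or raise ValueError if outside of root'
    root = os.path.realpath(root)
    parent = os.path.realpath(os.path.dirname(path))
    name = os.path.basename(path)
    if name in ('', '.', '..'): raise ValueError(f'Bad file name: {path}')
    if parent != root and not parent.startswith(root.rstrip('/') + '/'):
        raise ValueError(f'Path is outside of {root}: {path}')
    return parent, name

def remove_under(path, root, resolve=RESOLVE_NO_SYMLINKS):
    'Remove file only if its parent dir is under root, without symlink races'
    parent, name = check_under(path, root)
    fd = openat2_dir(parent, resolve)  # OSError = something changed after realpath
    try: os.unlink(name, dir_fd=fd)  # unlinkat() on the checked directory
    finally: os.close(fd)
```

With this, remove_under('/mnt/storage/somedir/passwd', '/mnt/storage') raises ValueError if somedir is a symlink pointing elsewhere, and OSError if it becomes one between the check and openat2() call.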

Given proliferation of "rewrite in rust" tools and learning projects, tried

looking up some version of "rm" already doing something like that,

but failed to find one - seems hard enough to even find anyone using openat2(),

despite it being in the kernel for 5+ years by now.

Most "safe rm" tools are for moving files into some kind of "trash" dir,

with somewhat different user-interactive use-case (regret after removal) and priorities.

As usual, ended up writing it for myself - rmx.c in fgtk repo.

It's intended to be used with -d <dir> option, does realpath on that dir and

checks all files' parent dir realpaths against that prefix before removing anything,

uses RESOLVE_NO_SYMLINKS by default, but also has -x option for cross-mount checks.

For example: rmx -f -d /mnt/storage -- "${file_list[@]}"

Doesn't have recursive mode, as I don't really need it atm, and that one

probably has its own bunch of caveats.

Other general ways to fix similar issues are chroot(), using mount namespaces,

LSM profiles (SELinux/AppArmor/LandLock/etc), idmapping + special uid/gid for that,

and other sandboxing-adjacent techniques.

That seems excessively complicated for a humble "rm <files>" command,

and they're often lacking in different ways (e.g. chroot is leaky and requires root),

but can be useful for wrapping anything more complex that deals with paths a lot

(e.g. a fuse-filesystem overlay like acfs, where fixing every access to be

sanitized like this is a bit more work).

Sep 15, 2025

When retrying some failed check or operation, common ways to algorithmically

wait for next attempt seem to be:

- Make N retries with a static interval in-between, e.g. "retry every 10s".

  Works well when it's not a problem to retry too often, or when you need to know
  that stuff starts working again ASAP (like within 10s), and when expected downtime
  is predictable (something like minutes with 10s retries, not <1s or days/months).

- Use exponential backoff to avoid having to predict availability/downtime.

  Idea is to multiply interval each time, so it can start with 1s and rise
  quickly as exponential curve does, reacting well to quick outages, while
  at the same time not retrying needlessly every 10s after days.

- Exponential backoff can quickly get out of hand, so cap its max interval.

  Good tweak to set how-late-at-most working state will be detected again.
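For reference, last option is what most retry loops end up looking like. A minimal sketch of it, with made-up capped_backoff name and default values:

```python
def capped_backoff(first=1.0, factor=2.0, cap=60.0, tries=10):
    'Return list of exponentially growing delays, each limited to cap'
    return [min(cap, first * factor ** n) for n in range(tries)]

if __name__ == '__main__':
    print(capped_backoff(first=1, factor=2, cap=10, tries=6))  # [1, 2, 4, 8, 10, 10]
```

Note how total time that this takes is not obvious from any of the parameters, and has to be summed up separately.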

But vast majority of use-cases for retries I have seem to also need "give up

after some time" limit, so for example a tool fetching a file, will fail with

an error in a few minutes, which I can detect and see if it can be fixed in some

other way than just retrying endlessly (as in give router a kick or something).

That timeout usually seems like the most important part to set for me - i.e.

"I expect this to finish within 20m, so keep checking and process results when

available".

And above algorithms don't work too well under this constraint:

- Fixed-interval attempts can be spaced evenly within timeout, but that's
  usually suboptimal for same reasons as already mentioned - couple quick
  initial attempts/retries are often more useful, and there's no point trying
  too often after a while.

- People - me included - are notoriously bad at understanding exponentials,
  so it's nigh-impossible to tell with any exponential delay how many attempts
  it'd imply within known timeout.

  That is, depending on exact formula, could be that intervals will go up past
  half of the timeout span fast and become effectively useless, not trying enough,
  or otherwise make too many needless attempts throughout when timeout is known
  to be quite long.

Long-time solution I had for this rate-limiting use-case is to not pick

exponential function effectively "at random" or "by intuition" (which again seems

difficult to get right), but instead use a simple chunk of code to calculate it.

Idea is to be able to say "I want 20 more-and-more delayed retries within 15m"

or "make 100 attempts in 1h" and not worry about further details, where backoff

function constants will be auto-picked to space them out nicely within that interval.

Specific function I've used in dozens of scripts where such "time-capped retries"

are needed goes something like this:

    def retries_within_timeout( tries, timeout,
            backoff_func=lambda e,n: ((e**n-1)/e), slack=0.01 ):
        'Return list of delays to make exactly n retries within timeout'
        a, b = 1, timeout
        while True:
            m = (a + b) / 2
            delays = list(backoff_func(m, n) for n in range(tries))
            if abs(error := sum(delays) - timeout) < slack: return delays
            elif error > 0: b = m
            else: a = m

Sometimes with adjusted backoff_func or slack value, depending on specific use.

For e.g. "10 retries within 60s timeout" it'd return a list with delays like this:

0.0, 0.3, 0.8, 1.5, 2.5, 4.0, 6.2, 9.4, 14.2, 21.1

I.e. retry immediately, then wait 0.3s, then 0.8s, and up to 14s or 21s towards

the end, when it's more obviously not a thing that's fixable by a quick retry.

As all these will sum up to 60s (with just ±0.01s slack), they can be easily

plugged into a for-loop like this:

    import time

    for delay in retries_within_timeout(10, 60):
        time.sleep(delay)
        if everything_works(): break
    else: raise TimeoutError('Stuff failed to work within 60s')

Without then needing any extra timeout checks or a wrapper to stop the loop after,

hence also quite convenient and expressive language abstraction imo.

There's probably an analytic solution for this, to have exact formulas for

calculating coefficients for backoff_func, that'd produce a set of N delays to

fit precisely within timeout, but I've never been interested enough to find

one when it's trivial to find "close enough" values algorithmically via bisection,

like that function does.

Also usually there's no need to calculate such delays often, as they're either

static or pre-configured, or used on uncommon error-handling code-paths, so having

a heavier solution-bruteforcing algorithm isn't an issue.
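Not a full analytic solution, but for the default backoff_func above, sum of all delays is at least a plain geometric series, i.e. sum of (e^n - 1) / e for n in 0..N-1 is ((e^N - 1) / (e - 1) - N) / e, so bisection doesn't have to build a list on every step. A sketch of that, where delay_sum / backoff_base names and the iteration limit are my additions:

```python
def delay_sum(e, tries):
    'Closed-form sum of (e**n - 1) / e for n in range(tries)'
    if e == 1: return 0.0  # every delay is zero with e=1
    return ((e ** tries - 1) / (e - 1) - tries) / e

def backoff_base(tries, timeout, slack=0.01, steps=200):
    'Bisect for base e where delays sum up to timeout, within slack'
    a, b = 1.0, float(timeout)
    for _ in range(steps):
        m = (a + b) / 2
        error = delay_sum(m, tries) - timeout
        if abs(error) < slack: return m
        if error > 0: b = m
        else: a = m
    raise ValueError('No suitable base for these tries/timeout values')

if __name__ == '__main__':
    e = backoff_base(10, 60)
    print([round((e ** n - 1) / e, 1) for n in range(10)])
```

Iteration limit there is for cases where no base within [1, timeout] range can fit, like with only 2 tries, where delays can never add up to a timeout of 1s or more.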

Not sure why, but don't think I've bumped into this kind of "attempts within timeout"

configuration/abstractions anywhere, so might be a quirk of how I think of rate-limits

in my head, or maybe it's just rarely considered to be worth adding 10 lines of code over.

Still, it seems to make much more intuitive sense to me than other common limits above.

Recently I've also wanted to introduce a min-delay constraint there, which ideally

will discard those initial short-delay values in the example list above, and pick ones

more to the right, i.e. won't just start with delay_min + 0.0, 0.3, 0.8, ... values.

This proved somewhat error-prone for such bruteforce algorithm, because often

there's simply no curve that fits neatly within narrow-enough min-max range of

values that all sum up to specified timeout, so had to make algo a bit more complicated:

    def retries_within_timeout(tries, timeout, delay_min=0, slack=None, e=10):
        'Return list of delays to make exactly n retries within timeout'
        if tries * delay_min * 1.1 > timeout: raise ValueError('tries * delay_min ~> timeout')
        if tries == 1: return [max(delay_min, timeout / 2)]
        # delay_calc is picked to produce roughly [0, m] range with n=[1, tries] inputs
        delay_calc = lambda m,n,_d=e**(1/tries-1): m * (e ** (n / tries - 1) - _d)
        a, b = 0, delay_min + timeout * 1.1
        if not slack: slack = max(0.5 * delay_min, 0.1 * (timeout / tries))
        try:
            for bisect_step in range(50):
                n, m, delays = 0, (a + b) / 2, list()
                while len(delays) < tries:
                    if (td := delay_calc(m, n := n + 1)) < delay_min: continue
                    delays.append(td)
                if a == b or abs(error := sum(delays) - timeout) < slack: return delays
                elif error > 0: b = m
                else: a = m
        except OverflowError: pass # [tries*delay_min, timeout] range can be too narrow
        if not delay_min: slack *= 2
        return list( (delay_min+td) for td in
            retries_within_timeout(tries, timeout - delay_min*(tries-1), slack=slack, e=e) )

(all this is a lot less confusing when graphed, either as values from delay_calc

or sum-of-delays-up-to-N, which can be easy to do with a bit of HTML/JS)

As per comment in the code, delay_calc is hardcoded to produce predictable output

range for known "attempt number" inputs, and a couple pathological-case checks

are needed to avoid asking for impossible values (like "fit 10 retries in 10s

with min 3s intervals" so 10*3 = 30s within 10s) or impossible quadratic curve shapes

(for which there're couple fallbacks at the end).

Default e=10 exponent base value makes delays more evenly-spaced,

which get more samey with lower e-values or rise more sharply with higher ones,

e.g. e=1000 will change result like this:

retries_within_timeout(10, 60, e=10) : 0.0, 0.6, 1.4, 2.4, 3.6, 5.2, 7.2, 9.6, 12.8, 16.7

retries_within_timeout(10, 60, e=1000): 0.0, 0.1, 0.2, 0.4, 0.9, 1.9, 3.8, 7.6, 15.2, 30.4

So it seems to be good enough for occasional tweaks instead of replacing whole delay_calc formula.

Aug 20, 2025

As a long-time conky user, always liked "system status at a glance" visibility

it provides, so you're pretty much always aware of what's normal resource usage

pattern for various apps and system as a whole, and easily notice anything odd there.

(though mostly kept conky config same since last post here about sensors data in 2014,

only updating it to work for new lua syntax and to use lm-sensors cli tool json

output for data from those instead of an extra binary)

One piece that's been missing for me however, is visibility into apps' network usage -

it's kinda important to know which apps have any kind of "unexpected" connectivity,

like telemetry tracking, "cloud" functionality, or maybe some more sinister

security/privacy issues and leaks even.

This use-case requires a bunch of filtering/grouping and configurability in general,

as well as getting more useful information about processes initiating connections

than what common netstat / ss tools or conky's /proc/net/tcp lines provide.

I.e. usual linux 16-byte /proc/<pid>/comm is not good enough, as it just says

"python3", "ssh", "curl" or some kind of "socket thread" most of the time in practice,

while I'd want to know which systemd service/scope/unit and uid/user it was started from.

So that "curl" ran by some game launcher, started from a dedicated gaming-uid

in a game-specific flatpak scope isn't displayed same as "curl" that I run from

terminal or that some system service runs, having all that user/cgroup/etc info

with it front-and-center.

And wrt configurability - over time there's a lot of normal-usage stuff that

doesn't need to draw much attention, like regular ssh sessions, messengers,

imap, ntp or web browser traffic, which can be noisy while also being least

interesting and important (beyond "it's all there as usual"), but is entirely

user-specific, same as most useful data to display in a conky window for your system.

Main idea is to have a good mental image of what's "normal" wrt network usage

for local apps, and when e.g. running something new, like a game or a flatpak,

to be able to instantly tell whether it's connecting somewhere and when

(and might need a firewall rule or net-blocking bpf loaded in its cgroup),

or is completely offline.

Haven't found any existing application that does something like this well,

and especially in this kind of "background desktop widget" way as conky does,

but most notable ones for such use-case are systemd-cgtop (comes with systemd),

atop's network monitoring tab (usable with an extra netatop-bpf component)

and OpenSnitch interactive-firewall app.

All of course are still for quite different uses, although atop can display a lot

of relevant process information nowadays, like cgroups and full process command.

But its network tab is still a TUI for process traffic counters, which I don't

actually care much about - focus should be on new connections made from new places

and filtering/tailoring.

Eventually got around to look into this missing piece and writing an app/widget

covering this maybe odd and unorthodox use-case, named in my usual "relevant words

that make an acronym" way - linux-ebpf-connection-overseer or "leco".

Same as netatop-bpf and OpenSnitch, it uses eBPF for network connection monitoring,

which is a surprisingly simple tool for that - all TCP/UDP connections pass through

same two send/recv tracepoints (that netatop-bpf also uses), with kernel "struct sock"

having almost all needed network-level info, and hooks can identify process/cgroup

running the operation, so it's all just there.

But eBPF is of course quite limited by design:

- Runs in kernel, while app rendering stuff on-screen has to be in userspace.

- Should be very fast and not do anything itself, to not affect networking in any way.

- Has to be loaded into kernel by root.

- Needs to export/stream data from kernel to userspace, passing its

root-only file descriptors of data maps to an unprivileged application.

- Terse data collected in-kernel, like process and cgroup id numbers has to be

expanded into human-readable names from /proc, /sys/fs/cgroup and such sources.

- Must be written in a subset of C or similar low-level language, unsuitable for other purposes.

So basically can't do anything but grab all relevant id/traffic numbers

and put into table/queue for userspace to process and display later.

Solution to most of these issues is to have a system-level service that

loads eBPF hooks and pulls data from there, resolves/annotates all numbers

and id's into/with useful names/paths, formats in a nice way,

manages any useful caches, etc.
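To give a rough idea of what such resolving/annotating step involves, here's a simplified sketch for getting systemd unit and uid info for a pid. It's not the actual code from the project, and it reads /proc/<pid>/cgroup instead of mapping cgroup id numbers from eBPF, with cgroup_unit and pid_info being hypothetical names:

```python
import os

def cgroup_unit(cgroup_text):
    'Return last component of cgroup-v2 path from /proc/<pid>/cgroup contents'
    for line in cgroup_text.splitlines():
        parts = line.split(':', 2)  # hierarchy-id : controllers : path
        if len(parts) != 3: continue
        hid, controllers, path = parts
        if hid == '0' and not controllers:  # unified cgroup-v2 entry is "0::/path"
            return path.rstrip('/').rsplit('/', 1)[-1] or '/'
    return None

def pid_info(pid):
    'Collect comm, uid and systemd unit/scope name for a running process'
    with open(f'/proc/{pid}/cgroup') as src: unit = cgroup_unit(src.read())
    with open(f'/proc/{pid}/comm') as src: comm = src.read().strip()
    return dict(pid=pid, comm=comm, uid=os.stat(f'/proc/{pid}').st_uid, unit=unit)
```

Processes can exit before such lookup happens, which is one of the reasons to collect ids in-kernel and to keep some caches around in the service.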

Problem is that this has to be a system service, like a typical daemon,

where some initial things are even done as root, and under some kind of system

uid beyond that, while any kind of desktop widget would run in a particular

user session/container, ideally with no access to most of that system-level stuff,

and with a typical GUI eventloop to worry about instead.

Which is how this app ended up with 3 separate main components:

- eBPF code linked into one "loader" binary.

- "pipe" script that reads from eBPF maps and outputs nicely-formatted event info.

- Desktop widget that reads event info lines and displays those in a configurable way.

README in the project repository should have a demo video, a better overview

of how it all works together, and how to build/configure and run those.

Couple things I found interesting about these components, in no particular order:

Nice thing about such breakdown is that first two components (eBPF + pipe)

can run anywhere and produce an efficient overview for some remote system,

VM or container - e.g. can have multiple widgets to look at things happening

in home network for example, not just local machine, though I haven't used that yet.

Another nice thing is that each component can use a language suitable for it,

i.e. for kernel hooks old C is perfectly fine, but it's hard to beat python

as a systems language for an eventloop doing data mangling/formatting,

and for a graphical widget doing custom lightweight font/effects rendering,

a modern low-level language like Nim with fast graphics abstraction lib like SDL

is the best option, to avoid using significant cpu/memory for a background/overlay

window drawing 60+ frames per second (when visible and updating at least).

Separate components also make it easier to tweak or debug those separately, like

for changing eBPF hooks or data formatting, it's easy to run loader and/or python

script and look at its stdout, or read maps via bpftool in case of odd output.

systemd makes it quite easy to do ExecStart=+... to run eBPF loader as root

and then only pass map file descriptors from that to an unprivileged data-pipe script

(with DynamicUser=yes and full sandboxing even), all defined and configured

within one .service ini-file.

Not having to use large GUI framework like GTK/QT for graphical widget was quite nice

and refreshing, as those are hellishly complicated, and seem poorly suited for

custom things like semi-transparent constantly-updating information overlays anyway

(while adding a ton of unnecessary complexity and maintenance burden).

Most surprising thing was probably that pretty much whole configuration language

for all filtering and grouping ended up fitting nicely into a list of regexps,

as displayed network info is just text lines, so regexp-replacing specific string-parts

in those to look nicer or to pick/match things to group by is what regexps do best.

widget.ini config in project repo has ones that I use and some description,

in addition to README sections there.
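General idea of such regexp-list config can be illustrated with a few lines of python. Rules and the info-line format below are made up for this example, and don't match actual widget.ini syntax:

```python
import re

# Hypothetical (pattern, replacement) rules, applied in order to each info line
rules = [
    (r'\b192\.0\.2\.10:6697\b', 'IRC'),   # name a known endpoint
    (r'user@(\d+)\.service', r'uid=\1'),  # shorten systemd user-session unit
]

def apply_rules(line, rules):
    'Run all regexp replacements over a single text line'
    for pattern, repl in rules:
        line = re.sub(pattern, repl, line)
    return line

if __name__ == '__main__':
    print(apply_rules('curl :: 192.0.2.10:6697 :: user@1000.service', rules))
```

Grouping works in a similar way - lines that end up looking the same after replacements can be merged together.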

Making it configurable how visual effects behave over time is quite easy by using

a list of e.g. "time,transparency ..." values, with some smooth curve auto-connecting

those dots, to only need to specify points where direction changes.

A simple HTML file to open in browser allows to edit such curves easily,

like for example making info for new connections quickly fade-in and then

fade-out in a few smooth steps, to easily spot which ones are recent or older.

I think in gamedev this way of specifying effect magnitude over time is often

referred to as "tweening" or "tweens" (as in what happens in-between specific

states/sprites).
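A minimal sketch of evaluating such "time,value ..." list, using common smoothstep easing between the dots. Exact curve type and parse_points / tween names are assumptions for this example, not necessarily what the widget does:

```python
def parse_points(spec):
    'Parse "time,value time,value ..." string into a list of float tuples'
    return [tuple(map(float, point.split(','))) for point in spec.split()]

def tween(points, ts):
    'Return effect value at time ts, easing smoothly between specified points'
    if ts <= points[0][0]: return points[0][1]
    for (t0, v0), (t1, v1) in zip(points, points[1:]):
        if ts <= t1:
            x = (ts - t0) / (t1 - t0)  # 0..1 position between two points
            s = x * x * (3 - 2 * x)    # smoothstep - flat near both ends
            return v0 + (v1 - v0) * s
    return points[-1][1]  # past the last point

if __name__ == '__main__':
    fade = parse_points('0,0 1,1 5,1 9,0')  # quick fade-in, hold, slow fade-out
    print([round(tween(fade, ts), 2) for ts in range(11)])
```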

Was thinking to add fancier effects for the tool, but then realized that the

more plain and non-distracting it looks the better, as it's supposed to be in the

background, not something eye-catching, and smooth easing-in/out is already good for that.

Nice text outline/shadow doesn't actually require blur or any extra pixel-processing,

can just stamp same glyphs in black with -1,-1 then 1,1 and 2,2 offsets plus some

transparency, and it's good enough, esp. for readability over colorful backgrounds.

Always-on-top semi-transparent non-interactable vertical overlay window fits quite

well on the second screen, where most stuff is read-only and unimportant anyway.

Works fine as a typical desktop-background window like conky as well.

C dependencies that are statically-linked-in during build seem to work fairly

well as git submodules, being very obvious and explicit, pinned to a specific

supported version, and are easy enough to manage via command line.

Network accounting is quite complicated as usual, hard to even describe in the

README precisely but succinctly, with all the quirks and caveats there.

Nice DNS names are surprisingly not that important for such overview, as it's

usually fairly obvious where each app connects, especially with the timing of it

(e.g. when clicking some "connect" button or running git-push in a terminal),

and most of the usual connections are easy to regexp-replace with better-than-DNS

names anyway (like say "IRC" instead of whatever longer name).

Should still be easy enough to fill those in by e.g. adding a python resolver

module to a local unbound cache, which would cache queries passing through it

by-IP, and then resolve some special queries with encoded IPs back to names,

which should be way simpler and more accurate than getting those from traffic inspection

(esp. with apps using DNS-over-TLS/HTTPS protocols).

Kernel "sock" structs have a nice unique monotonic skc_cookie id number, but it's

basically unusable in tracepoints because it's lazily generated at the worst time,

and bpf_get_socket_cookie helper isn't available there, damnit.

Somewhat surprisingly never bumped into info about eBPF code licensing -

is it linking against kernel's GPL code, interpreted code on top of it,

maybe counts as part of the kernel in some other way?

Don't particularly care, using GPL is fine and presumably avoids any issues there,

but it just seems like a hairy subject that should've been covered to death somewhere.

Links above all point to project repository on github but it can also be

found on codeberg or self-hosted, as who knows how long github will still

be around and not enshittified into the ground.

May 14, 2025

My pattern for using these shell wrappers / terminal multiplexers

for many years has been something like this:

Run a single terminal window, usually old XTerm, sometimes Terminology.

Always run tmux inside that window, with a C-x (ctrl-x) prefix-key.

Open a couple local tmux windows (used as "terminal tabs") there.

Usually a root console (via su), and one or two local user consoles.

Run "screen" (as in GNU Screen) in all these "tabs", with its default C-a

prefix-key, and opening more screen-windows whenever I need to run something new,

and current console/prompt is either busy, has some relevant history, or e.g.

being used persistently for something specific in some project dir.

This is for effectively infinite number of local shells within a single "tmux tab",

easy to switch between via C-a n/p, a (current/last) or number keys.

Whenever I need to ssh into something, which is quite often - remote hosts

or local VMs - always open a new local-tmux tab, ssh into the thing, and always

run "screen" on the other end as the first and only "raw" command.

Same as with local shells, this allows for any number of ad-hoc shells on that

remote host, grouped under one host-specific tmux tab, with an important feature

of being persistent across any network hiccups/disconnects or any local

desktop/laptop issues.

If remote host doesn't have "screen" - install and run it, and if that's not possible

(e.g. network switch device), then still at least run "screen" locally and have

(multiplexed) ssh sessions open to there in each screen-window within that tmux tab.

Shell running on most local/remote hosts (zsh in my case typically) has a hook

to detect whether it's running under screen and shows red "tty" warning in prompt

when it's not protected/multiplexed like that, so almost impossible to forget to

use those in "screen", even without a habit of doing so.

So it's a kind of two-dimensional screen-in-tmux setup, with per-host tmux tabs,

in which any number of "screen" windows are contained for that local user or remote host.

I tend to say "tmux tabs" above, simply to tell those apart from "screen windows",

even though same concept is also called "windows" in tmux, but here they are used

kinda like tabs in a GUI terminal.

Unlike GUI terminal tabs however, "tmux tabs" survive GUI terminal app crashing

or being accidentally closed just fine, or a whole window manager / compositor /

Xorg crashing or hanging due to whatever complicated graphical issues

(which tend to be far more common than base kernel panics and such).

(worst-case can usually either switch to a linux VT via e.g. ctrl-alt-F2,

or ssh into desktop from a laptop, re-attach to that tmux with all consoles,

finish or make a note of whatever I was doing there, etc)

Somewhat notably, I've never used "window splitting" (panes/layouts) features of screen/tmux,

kinda same as I tend to use only full-screen or half-screen windows on graphical desktop,

with fixed places at app-specific virtual desktop, and not bother with any other

"window management" stuff.

Most of the work in this setup is done by GNU Screen, which is actually hosting all

the shells on all hosts and interacts with those directly, with tmux being relegated

to a much simpler "keep tabs for local terminal window" role (and can be killed/replaced

with no disruption).

I've been thinking to migrate to using one tool instead of two for a while, but:

Using screen/tmux in different roles like this allows to avoid conflicts between the two.

I.e. reconnecting to a tmux session on a local machine always restores a full

"top-level terminal" window, as there's never anything else in there.

And it's easier to configure each tool for its own role this way in their

separate screenrc/tmux.conf files.

"screen" is an older and more common tool, available on any systems/distros (to ssh into).

"screen" is generally more lightweight than tmux.

I'm more used to "screen" as my own use of it predates tmux,

but tbf they aren't that different.

So was mostly OK using "screen" for now, despite a couple misgivings:

It seems to bundle a bunch more legacy functionality and modes which I don't

want or need - multiuser login/password and access-control stuff for that,

serial terminal protocols (e.g. screen /dev/ttyUSB0), utmp user-management.

Installs one of a few remaining suid binaries, with many potential issues this implies.

See e.g. su-sudo-from-root-tty-hijacking, arguments for using run0 or ssh

to localhost instead of sudo, or endless special-case hacks implemented in

sudo and linux over decades, for stuff like LD_PRELOAD to not default-leak

across suid change.

Old code there tends to have more issues that tmux doesn't have (e.g. this

recent terminal title overflow), but mostly easy to ignore or work around.

Long-running screen sessions use that suid root binary instead of systemd

mechanisms to persist across e.g. ssh disconnects.

More recently, with a major GNU Screen 5.0.0 update, a bunch of random stuff broke

for me there, which I've mostly worked around by sticking with last 4.9 release,

but that can't last, and after most recent batch of security issues in screen,

finally decided to fully jump to tmux, to at least deal with only one set of issues there.

By now, tmux seems to be common enough to easily install on any remote hosts,

but it works slightly differently than "screen", and has couple other problems

with my workflow above:

- "session" concept there has only one "active window", so having to sometimes

check same "screen" on the same remote from different terminals, it'd force

both "clients" to look at the same window, instead of having more independent

state, like with "screen".

- Starting tmux-in-tmux needs a separate configuration and resolving a couple

conflicts between the two.

- Some different hotkeys and such minor quirks.

Habits can be changed of course, but since tmux is quite flexible and easily

configurable, they actually don't have to change, and tmux can work pretty much

like "screen" does, with just shell aliases and a config file.

With "screen", I've always used following aliases in zshrc:

alias s='exec screen'

alias sr='screen -r'

alias sx='screen -x'

"s" here replaces shell with "shell in screen", "sr" reconnects to that "screen"

normally, sometimes temporarily (hence no "exec"), and "sx" is same as "sr" but

to share "screen" that's already connected-to (e.g. when something went wrong,

and old "client" is still technically hanging around, or just from a diff device).

Plus tend to always replace /etc/screenrc with one that disables welcome-screen,

sets larger scrollback and has couple other tweaks enabled, so it's actually

roughly same amount of tweaks as tmux needs to be like "screen" on a new system.

Differences between the two that I've found so far, to alias/configure around:

To run tmux within tmux for local "nested" sessions, like "screen in tmux"

case above, with two being entirely independent, following things are needed:

- Clear TMUX= env var, e.g. in that "s" alias.

- Use different configuration files, i.e. with different prefix, status line,

and any potential "screen-like" tweaks.

- Have different session socket name set either via -L screen

or -S option with full path.

These tweaks fit nicely with using just aliases + separate config file,

which are already a given.

To facilitate shared "windows" between "sessions", but independent "active window"

in each, tmux has "session groups" feature - running "new-session -t <groupname>"

will share all "windows" between the two, adding/removing them in both, but not

other state like "active windows".

Again, shell alias can handle that by passing additional parameter, no problem.

tmux needs to use different "session group" names to create multiple "sessions"

on the same host with different windows, for e.g. running multiple separate local

"screen" sessions, nested in different tmux "tabs" of a local terminal, and not sharing

"windows" between those (as per setup described at the beginning).

Not a big deal for a shell alias either - just use new group names with "s" alias.

Reconnecting like "screen -r" with "sr" alias ideally needs to auto-pick "detached"

session or group, but unlike "screen", tmux doesn't care about whether session is

already attached when using its "attach" command.

This can be checked, sessions printed/picked in "sr" alias, like it was with "screen -r".

Sharing session via "screen -x" or "sx" alias is a tmux default already.

But detaching from a "shared screen session" actually maps to a "kill-session"

action in tmux, because it's a "session group" that is shared between two "sessions"

there, and one of those "sessions" should just be closed, group will stay around.

Given that "shared screen sessions" aren't that common to use for me, and

leaving behind detached tmux "session" isn't a big deal, easiest fix seems to

be adding "C-a shift-d" key for "kill-session" command, next to "C-a d" for

regular "detach-client".

Any extra tmux key bindings spread across keyboard like landmines to fatfinger

at the worst moment possible, and then have no idea how to undo whatever it did!

Easy to fix in the config - run tmux list-keys to dump them all,

pick only ones you care about there for config file, and put e.g.

unbind-key -T prefix -a + unbind-key -T root -a before those bindings

to reliably wipe out the rest.

Status-line needs to be configured in that separate tmux-screen config to be

different from the one in the wrapper tmux, to avoid confusion.

None of these actually change the simple "install tmux + config + zshrc aliases"

setup that I've had with "screen", so it's a pretty straightforward migration.

zshrc aliases got a bit more complicated than 3 lines above however, but eh, no big deal:

# === tmux session-management aliases

# These are intended to mimic how "screen" and its -r/-x options work

# I.e. sessions are started with groups, and intended to be connected to those

s_tmux() {

local e; [[ "$1" != exec ]] || { e=$1; shift; }

TMUX= $e tmux -f /etc/tmux.screen.conf -L screen "$@"; }

s() {

[[ "$1" != sg=? ]] || s_tmux exec new-session -t "$1"

[[ "$#" -eq 0 ]] || { echo >&2 "tmux: error - s `

` alias/func only accepts one optional sg=N arg"; return 1; }

local ss=$(s_tmux 2>/dev/null ls -F '#{session_group}'); ss=${ss:(-4)}

[[ -z "$ss" || "$ss" != sg=* ]] && ss=sg=1 || {

[[ "${ss:(-1)}" -lt 9 ]] || { echo >&2 'tmux: not opening >9 groups'; return 1; }

ss=sg=$(( ${ss:(-1)} + 1 )); }

s_tmux exec new-session -t "$ss"; }

sr() {

[[ "$#" -ne 1 ]] || {

[[ "$1" != ? ]] || { s_tmux new-session -t "sg=$1"; return; }

[[ "$1" != sg=? ]] || { s_tmux new-session -t "$1"; return; }

[[ "$1" = sg=?-* || "$1" = \$* ]] || {

echo >&2 "tmux: error - invalid session-match [ $1 ]"; return 1; }

s_tmux -N attach -Et "$1"; return; }

[[ "$#" -eq 0 ]] || { echo >&2 "tmux: error - sr alias/func`

` only accepts one optional session id/name arg"; return 1; }

local n line ss=() sl=( "${(@f)$( s_tmux 2>/dev/null ls -F \

'#{session_id} #S#{?session_attached,, [detached]} :: #{session_windows}'`

`' window(s) :: group #{session_group} :: #{session_group_attached} attached' )}" )

for line in "${sl[@]}"; do

n=${line% attached}; n=${n##* }

[[ "$n" != 0 ]] || ss+=( "${line%% *}" )

done

[[ ${#ss[@]} -ne 1 ]] || { s_tmux -N attach -Et "$ss"; return; }

[[ ${#sl[@]} -gt 1 || ${sl[1]} != "" ]] || {

echo >&2 'tmux: no screen-like sessions detected'; return 1; }

echo >&2 "tmux: no unique unused session-group`

` (${#sl[@]} total), use N or sg=N group, or session \$M id / sg=N-M name"

for line in "${sl[@]}"; do echo >&2 " $line"; done; return 1; }

They work pretty much same as screen and screen -r used to do, even

easier for "sr" with simple group numbers, and "sx" for screen -x isn't needed

("sr" will attach to any explicitly picked group just fine).
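The "pick the one unused group" part of "sr" is probably easier to follow outside of zsh parameter-expansion syntax, so here's a rough Python sketch of the same idea. It assumes lines in the same format that the `ls -F` call above produces (ending in ":: N attached"), and the function names are made up for illustration, not something from tmux or the aliases.

```python
import re

# Matches the trailing "#{session_group_attached} attached" part of each line
ATTACHED_RE = re.compile(r' :: (\d+) attached$')


def pick_unused(lines):
    """Return session ids from lines where the whole group has 0 attached clients."""
    unused = []
    for line in lines:
        m = ATTACHED_RE.search(line)
        if m and m.group(1) == '0':
            # First space-separated field is #{session_id}, like "$1"
            unused.append(line.split(' ', 1)[0])
    return unused


def choose_session(lines):
    """Return the only unused session id, or None if there is no unique pick."""
    unused = pick_unused(lines)
    return unused[0] if len(unused) == 1 else None
```

With one attached and one detached group in the list, `choose_session` returns the id of the detached one, and with none or several candidates it returns None, which is where the zsh function prints the list and lets you pick explicitly.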

And as for a screen-like tmux config - /etc/tmux.screen.conf:

TMUX_SG=t # env var to inform shell prompt

set -g default-terminal 'screen-256color'

set -g terminal-overrides 'xterm*:smcup@:rmcup@'

set -g xterm-keys on

set -g history-limit 30000

set -g set-titles on

set -g set-titles-string '#T'

set -g automatic-rename off

set -g status-bg 'color22'

set -g status-fg 'color11'

set -g status-right '[###I %H:%M]'

set -g status-left '#{client_user}@#h #{session_group} '

set -g status-left-length 40

set -g window-status-current-style 'bg=color17 bold'

set -g window-status-format '#I#F'

set -g window-status-current-format '#{?client_prefix,#[fg=colour0]#[bg=colour180],}#I#F'

set -g mode-keys emacs

unbind-key -T prefix -a

unbind-key -T root -a

unbind-key -T copy-mode-vi -a # don't use those anyways

set -g prefix C-a

bind-key a send-prefix

bind-key C-a last-window

# ... and all useful prefix bind-key lines from "tmux list-keys" output here.

As I don't use layouts/panes and bunch of other features of screen/tmux multiplexers,

it's only like 20 keys at the end for me, but to be fair, tmux keys are pretty much

same as screen after you change prefix to C-a, so probably don't need to be

unbound/replaced at all for someone who uses more of those features.

So in the end, it's a good overdue upgrade to a more purpose-built/constrained

and at the same time more feature-rich and more modern tool within its scope,

without losing ease of setup or needing to change any habits - a great thing,

can recommend to anyone still using "screen" in roughly this role.

Jan 16, 2025

Dunno what random weirdo found me this time around, but have noticed 'net

connection on home-server getting clogged by 100 Mbps of incoming traffic yesterday,

which seemed to be just junk sent to every open protocol which accepts it from some

5-10K IPs around the globe, with bulk being pipelined requests over open nginx connections.

Seems very low-effort, and easily worked around by not responding to TCP SYN

packets, as volume of those is relatively negligible (not a syn/icmp flood

or any kind of amplification backscatter), and just nftables can deal with that,

if configured to block the right IPs.

Actually, first of all, as nginx was a prime target here, and allows single

connection to dump a lot of request traffic into it (what was happening),

two things can be easily done there:

Tighten keepalive and request limits in general, e.g.:

limit_req_zone $binary_remote_addr zone=perip:10m rate=20r/m;

limit_req zone=perip burst=30 nodelay;

keepalive_requests 3;

keepalive_time 1m;

keepalive_timeout 75 60;

client_max_body_size 10k;

client_header_buffer_size 1k;

large_client_header_buffers 2 1k;

Idea is to at least force bots to reconnect, which will work nicely with

nftables rate-limiting below too.

If bots are simple and dumb, sending same 3-4 types of requests, grep those:

tail -F access.log | stdbuf -oL awk '/.../ {print $1}' |

while read addr; do nft add element inet filter set.inet-bots4 "{$addr}"; done

Yeah, there's fail2ban and such for that as well, but why

overcomplicate things when a trivial tail to grep/awk will do.
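If that awk one-liner grows a bit (e.g. to skip duplicates or garbage in the first field), the same thing can be sketched in Python. This is only an illustration under some assumptions: client address is the first whitespace-separated field of each log line (nginx default "combined" format), and `bot_addrs` / `nft_add_cmd` are made-up helper names, with the latter only building the same `nft add element` command as above, not running it.

```python
import ipaddress
import re


def bot_addrs(lines, pattern):
    """Yield each valid IPv4 client address once, from log lines matching pattern."""
    rx, seen = re.compile(pattern), set()
    for line in lines:
        fields = line.split()
        if not fields or not rx.search(line):
            continue
        addr = fields[0]
        try:
            ipaddress.IPv4Address(addr)
        except ValueError:
            continue  # not an IPv4 address, skip instead of feeding junk to nft
        if addr not in seen:
            seen.add(addr)
            yield addr


def nft_add_cmd(addr, nft_set='set.inet-bots4'):
    """Build argv for adding addr to the blackhole set (table/set names as in the post)."""
    return ['nft', 'add', 'element', 'inet', 'filter', nft_set, '{ %s }' % addr]
```

Resulting argv lists can be passed to `subprocess.run()` in a loop over `sys.stdin`, fed by the same `tail -F access.log`.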

Tbf that takes care of bulk of the traffic in such simple scenario already,

but nftables can add more generalized "block bots connecting to anything way

more than is sane" limits, like these:

add set inet filter set.inet-bots4.rate \

{ type ipv4_addr; flags dynamic, timeout; timeout 10m; }

add set inet filter set.inet-bots4 \

{ type ipv4_addr; flags dynamic, timeout; counter; timeout 240m; }

add counter inet filter cnt.inet-bots.pass

add counter inet filter cnt.inet-bots.blackhole

add rule inet filter tc.pre \

iifname $iface.wan ip daddr $ip.wan tcp flags syn jump tc.pre.ddos

add rule inet filter tc.pre.ddos \

ip saddr @set.inet-bots4 counter name cnt.inet-bots.blackhole drop

add rule inet filter tc.pre.ddos \

update @set.inet-bots4.rate { ip saddr limit rate over 3/minute burst 20 packets } \

add @set.inet-bots4 { ip saddr } drop

add rule inet filter tc.pre.ddos counter name cnt.inet-bots.pass

(this is similar to an example under SET STATEMENT from "man nft")

Where $iface.wan and such vars should be define'd separately,

as well as tc.pre hooks (somewhere like prerouting -350, before anything else).

ip/ip6 addr selectors can also be used with separate IPv4/IPv6 sets.
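To get a feel for what "limit rate over 3/minute burst 20 packets" does per source address, here's a toy token-bucket model in Python. It is a simplified sketch and not the kernel's exact accounting: it assumes a bucket of "burst" tokens refilled at the given rate, ignores set element timeouts, and all class/function names are made up for this example.

```python
class OverLimit:
    """Simplified token bucket: up to `burst` tokens, refilled at `rate_per_min`."""

    def __init__(self, rate_per_min, burst):
        self.rate = rate_per_min / 60.0  # tokens per second
        self.burst = float(burst)
        self.tokens = float(burst)
        self.last = None

    def over(self, now):
        """Return True if a packet at time `now` (seconds) exceeds the limit."""
        if self.last is not None:
            elapsed = now - self.last
            self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return False
        return True


def blackholed(events, rate_per_min=3, burst=20):
    """Return addrs that went over the limit, for time-ordered (timestamp, addr) SYNs."""
    limits, blocked = {}, set()
    for ts, addr in events:
        if addr in blocked:
            continue  # would be dropped by the blackhole-set rule already
        limit = limits.setdefault(addr, OverLimit(rate_per_min, burst))
        if limit.over(ts):
            blocked.add(addr)  # like "add @set.inet-bots4 { ip saddr } drop"
    return blocked
```

In this model a client opening a handful of connections never gets near the limit, while anything sending more than 20 SYNs in a quick burst ends up in the blocked set.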

But the important things there IMO are:

To define persistent sets, like set.inet-bots4 blackhole one,

and not flush/remove those on any configuration fine-tuning afterwards,

only build it up until non-blocked botnet traffic is negligible.

Rate limits like ip saddr limit rate over 3/minute burst 20 packets

are stored in the dynamic set itself, so can be adjusted on the fly anytime,

without needing to replace it.

Sets are easy to export/import in isolation as well:

# nft list set inet filter set.inet-bots4 > bots4.nft

# nft -f bots4.nft

Last command adds set elements from bots4.nft, as there's no "flush" in there,

effectively merging old set with the new, does not replace it.

-j/--json input/output can be useful there to filter sets via scripts.

Always use separate chain like tc.pre.ddos for complicated rate-limiting

and set-matching rules, so that those can be atomically flushed-replaced via

e.g. a simple .sh script to change or tighten/relax the limits as-needed later:

nft -f- <<EOF

flush chain inet filter tc.pre.ddos

add rule inet filter tc.pre.ddos \

ip saddr @set.inet-bots4 counter name cnt.inet-bots.blackhole drop

# ... more rate-limiting rule replacements here

EOF

These atomic updates are one of the greatest things about nftables - no need to

nuke whole ruleset, just edit/replace and apply relevant chain(s) via script.

It's also not hard to add such chains after the fact, but a bit fiddly -

see e.g. "Managing tables, chains, and rules using nft commands" in RHEL docs

for how to list all rules with their handles (use nft -at list ... with

-t in there to avoid dumping large sets), insert/replace rules, etc.

But the point is - it's a lot easier when pre-filtered traffic is already

passing through dedicated chain to focus on, and edit it separately from the rest.

Counters are very useful to understand whether any of this helps, for example:

# nft list counters table inet filter

table inet filter {

counter cnt.inet-bots.pass {

packets 671 bytes 39772

}

counter cnt.inet-bots.blackhole {

packets 368198 bytes 21603012

}

}

So it's easy to see that rules are working, and blocking is applied correctly.

And even better - nft reset counters ... && sleep 100 && nft list counters ...

command will effectively give the rate of how many bots get passed or blocked (counts over 100s, so divide by 100 for per-second numbers).
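For turning that counter listing into actual numbers, a small Python sketch can parse the text output and divide by the sleep interval. It assumes the plain-text layout shown above ("counter NAME { packets N bytes M }"), and `parse_counters` / `packet_rates` are hypothetical helper names, not part of nft.

```python
import re

# Matches "counter <name> { packets <n> bytes <m>" across line breaks
COUNTER_RE = re.compile(r'counter\s+(\S+)\s*\{\s*packets\s+(\d+)\s+bytes\s+(\d+)')


def parse_counters(text):
    """Return {name: (packets, bytes)} from "nft list counters" text output."""
    return {
        m.group(1): (int(m.group(2)), int(m.group(3)))
        for m in COUNTER_RE.finditer(text)
    }


def packet_rates(counters, seconds):
    """Return {name: packets per second} for counters collected over `seconds`."""
    return {name: pkts / seconds for name, (pkts, _bytes) in counters.items()}
```

Feeding it output captured after the "reset, sleep 100, list" sequence with `seconds=100` gives passed vs blackholed packets per second directly.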

nginx also has similar metrics btw, without needing to remember any status-page

URLs or monitoring APIs - tail -F access.log | pv -ralb >/dev/null

(pv is a common unix "pipe viewer" tool, and can count line rates too).

Sets can have counters as well, like set.inet-bots4,

defined with counter; in the example above.

nft get element inet filter set.inet-bots4 '{ 103.115.243.145 }'

will get info on blocked packets/bytes for specific bot, when it was added, etc.

One missing "counter" on sets is the number of elements in those, which piping

it through wc -l won't get, as nft dumps multiple elements on the same line,

but jq or a trivial python script can get from -j/--json output:

nft -j list set inet filter set.inet-bots4 | python /dev/fd/3 3<<'EOF'

import sys, json

for block in json.loads(sys.stdin.read())['nftables']:

    if not (nft_set := block.get('set')): continue

    print(f'{len(nft_set.get("elem", list())):,d}'); break

EOF

(jq syntax is harder to remember when using it rarely than python)

nftables sets can have tuples of multiple things too, e.g. ip + port, or even

a verdict stored in there, but it hardly matters with such temporary bot blocks.

Feed any number of other easy-to-spot bot-patterns into same "blackhole" nftables sets.

E.g. that tail -F access.log | awk is enough to match obviously-phony

requests to same bogus host/URL, and same for malformed junk in error.log,

auth.log, mail.log, etc - stream all those IPs into nft add element ...

too, the more the merrier :)

It used to be more difficult to maintain such limits efficiently in userspace to

sync into iptables, but nftables has this basic stuff built-in and very accessible.

Though probably won't help against commercial DDoS that's expected to get results

instead of just a minor nuisance, against something more valuable than a static

homepage on a $6/mo internet connection - bots might be a bit more sophisticated there,

and numerous enough to clog the pipe by syn-flood or whatever icmp/udp junk,

without distributed network like CloudFlare filtering it at multiple points.

This time I've finally decided to bother putting it all in the script too

(as well as this blog post while at it), which can be found in the usual repo

for scraps - mk-fg/fgtk/scraps/nft-ddos (or on codeberg and in local cgit).

Dec 13, 2024

For projects tracked in some package repositories, apparently it's worth tagging

releases in git repos (as in git tag 24.12.1 HEAD && git push --tags),

for distro packagers/maintainers to check/link/use/compare new release from git,

which seems easy enough to automate if pkg versions are stored in a repo file already.

One modern way of doing that in larger projects can be CI/CD pipelines, but they

imply a lot more than just release tagging, so for some tiny python module like

pyaml, don't see a reason to bother with them atm, and I know how git hooks work.

For release to be pushed to a repository like PyPI in the first place,

project repo almost certainly has a version stored in a file somewhere,

e.g. pyproject.toml for PyPI:

[project]

name = "pyaml"

version = "24.12.1"

...

Updates to this version string can be automated on their own (I use simple

git-version-bump-filter script for that in some projects), or done manually

when pushing a new release to package repo, and git tags can easily follow that.

E.g. when pyproject.toml changes in git commit, and that change includes

version= line - that's a commit that should have that updated version tag on it.

Best place to add/update that tag in git after commit is post-commit hook:

#!/bin/bash

set -eo pipefail

die() {

echo >&2 $'\ngit-post-commit :: ----------------------------------------'

echo >&2 "git-post-commit :: ERROR: $@"

echo >&2 $'git-post-commit :: ----------------------------------------\n'; exit 1; }

ver=$( git show --no-color --diff-filter=M -aU0 pyproject.toml |

gawk '/^\+version\s*=/ {

split(substr($NF,2,length($NF)-2),v,".")

print v[1]+0 "." v[2]+0 "." v[3]+0}' )

[[ "$ver" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]] || {

ver=$( gawk '/^version\s*=/ {

split(substr($NF,2,length($NF)-2),v,".")

print v[1]+0 "." v[2]+0 "." v[3]+0}' pyproject.toml )

[[ "$ver" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]] || \

die 'Failed to get version from git-show and pyproject.toml file'

ver_tag=$(git tag --sort=v:refname | tail -1)

[[ -n "$ver" && "$ver" = "$ver_tag" ]] || die 'No new release to tag,'`

`" and last git-tag [ $ver_tag ] does not match pyproject.toml version [ $ver ]"

echo $'\ngit-post-commit :: no new tag, last one matches pyproject.toml\n'; exit 0; }

git tag -f "$ver" HEAD # can be reassigning tag after --amend

echo -e "\ngit-post-commit :: tagged new release [ $ver ]\n"

git-show there picks version update line from just-created commit,

which is then checked against existing tag and assigned or updated as necessary.

"Updated" part tends to be important too, as at least for me it's common to

remember something that needs to be fixed/updated only when writing commit msg

or even after git-push, so git commit --amend is common, and should update

that same tag to a new commit hash.

Messages printed in this hook are nicely prepended to git's usual commit info

output in the terminal, so that you remember when/where this stuff is happening,

and any potential errors are fairly obvious.

Having tags assigned is not enough to actually have those on github/gitlab/codeberg

and such, as git doesn't push those automatically.

There's --follow-tags option to push "annotated" tags only, but I don't see

any reason why trivial version tags should have a message attached to them,

so of course there's another way too - pre-push hook:

#!/bin/sh

set -e

# Push tags on any pushes to "master" branch, with stdout logging

# Re-assigns tags, but does not delete them, use "git push --delete remote tag" for that

push_remote=$1 push_url=$2

master_push= master_oid=$(git rev-parse master)

while read local_ref local_oid remote_ref remote_oid

do [ "$local_oid" != "$master_oid" ] || master_push=t; done

[ -n "$master_push" ] || exit 0

prefix=$(printf 'git-pre-push [ %s %s ] ::' "$push_remote" "$push_url")

printf '\n%s --- tags-push ---\n' "$prefix"

git push --no-verify --tags -f "$push_url" # specific URL in case remote has multiple of those

printf '%s --- tags-push success ---\n\n' "$prefix"

It has an extra check for whether it's a push for a master branch, where release

tags presumably are, and auto-runs git push --tags -f to the same URL.

Again -f here is to be able to follow any tag reassignments after --amend's,

although it doesn't delete tags that were removed locally, but don't think that

should happen often enough to bother (if ever).

pre-push position of the hook should abort the push if there're any issues

pushing tags, and pushing to specific URLs allows to use multiple repo URLs in

e.g. default "origin" remote (used with no-args git push), like in github +

codeberg + self-hosted URL-combo that I typically use for redundancy and to

avoid depending on silly policies of "free" third-party services (which is also

why maintaining service-specific CI/CD stuff on those seems like a wasted effort).
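The master-branch check in that hook relies on what git feeds to pre-push on stdin: one line per pushed ref, with local ref, local object id, remote ref and remote object id separated by spaces. A minimal Python sketch of the same check, with `pushes_master` being a hypothetical name and master commit id passed in (e.g. from `git rev-parse master`) instead of being looked up:

```python
def pushes_master(stdin_lines, master_oid):
    """Return True if any pushed ref points at the same commit as local master."""
    for line in stdin_lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # not a "<local ref> <local oid> <remote ref> <remote oid>" line
        _local_ref, local_oid, _remote_ref, _remote_oid = parts
        if local_oid == master_oid:
            return True
    return False
```

Same as in the shell version, it compares object ids and not ref names, so pushing any branch that currently sits on master's commit also counts.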

With both hooks in place (under .git/hooks/), there should be no manual work

involved in managing/maintaining git tags anymore, to forget that they exist again

for all practical purposes.

Made both hooks for pyaml project repo (apparently packaged in some distro),

where maybe more recent versions of those can be found:

Don't think git or sh/bash/gawk used in those ever change enough to bother updating them,

but maybe there'll be some new corner-case or useful git workflow to handle,

which I haven't bumped into yet.

Sep 30, 2024

Debugging the usual censorshit issues, finally got sick of looking at normal

tcpdump output, and decided to pipe it through a simple translator/colorizer script.

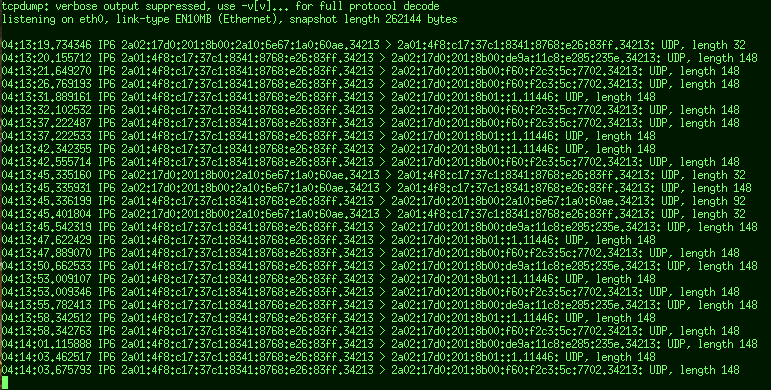

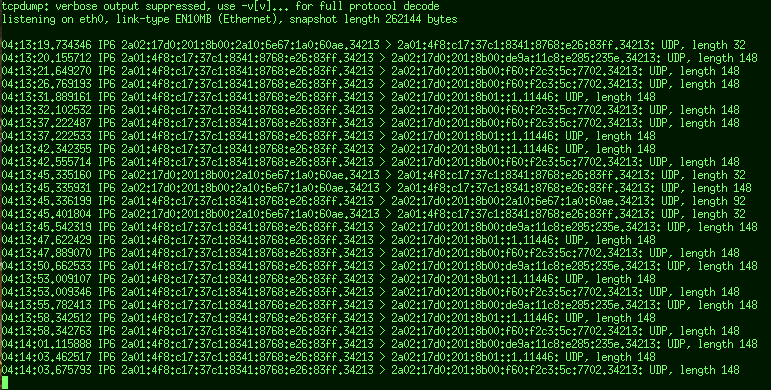

I think it's one of these cases where a picture is worth a thousand words:

This is very hard to read, especially when it's scrolling,

with long generated IPv6'es in there.

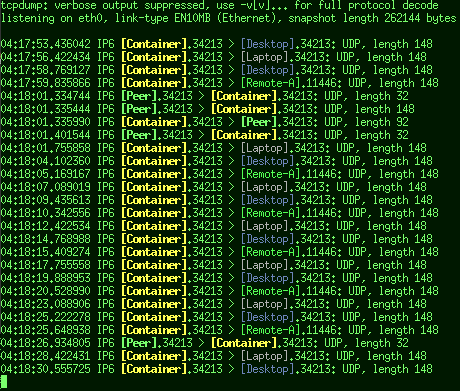

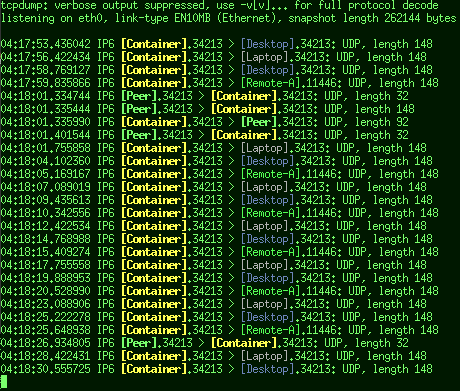

While this IMO is quite readable:

Immediately obvious who's talking to whom and when, where it's especially

trivial to focus on packets from specific hosts by their name shape/color.

Difference between the two is this trivial config file:

2a01:4f8:c17:37c1: local.net: !gray

2a01:4f8:c17:37c1:8341:8768:e26:83ff [Container] !bo-ye

2a02:17d0:201:8b0 remote.net !gr

2a02:17d0:201:8b01::1 [Remote-A] !br-gn

2a02:17d0:201:8b00:2a10:6e67:1a0:60ae [Peer] !bold-cyan

2a02:17d0:201:8b00:f60:f2c3:5c:7702 [Desktop] !blue

2a02:17d0:201:8b00:de9a:11c8:e285:235e [Laptop] !wh

...which sets host/network/prefix labels to replace unreadable address parts

with (hosts in brackets as a convention) and colors/highlighting for those

(using either full or two-letter DIN 47100-like names for brevity).

Plus the script to pipe that boring tcpdump output through - tcpdump-translate.

Another useful feature of such script turns out to be filtering -

tcpdump command-line quickly gets unwieldy with "host ... && ..." specs,

while in the config above it's trivial to comment/uncomment lines and filter

by whatever network prefixes, instead of cramming it all into shell prompt.
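Core of such translation is just longest-prefix-first string replacement, which can be sketched in a few lines of Python. This is not the actual tcpdump-translate code, only an illustration under assumptions: config lines are "prefix label !color", lines starting with "#" are treated as commented-out, colors are parsed but not applied, and both function names are made up.

```python
def parse_rules(config_text):
    """Return (prefix, label, color) tuples, longest prefix first."""
    rules = []
    for line in config_text.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0].startswith('#'):
            continue  # blank, incomplete or commented-out line
        color = fields[2].lstrip('!') if len(fields) > 2 else None
        rules.append((fields[0], fields[1], color))
    # Longest prefixes go first, so that full host addresses win over networks
    rules.sort(key=lambda rule: len(rule[0]), reverse=True)
    return rules


def translate(line, rules):
    """Replace every known address/prefix in a tcpdump output line with its label."""
    for prefix, label, _color in rules:
        line = line.replace(prefix, label)
    return line
```

With the config above, a line with the Desktop and Container addresses in it comes out with just "[Desktop]" and "[Container]" in their place, and any other address under 2a01:4f8:c17:37c1: gets its prefix shortened to "local.net:".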

tcpdump has some of this functionality via DNS reverse-lookups too,

but I really don't want it resolving any addrs that I don't care to track specifically,

which often makes output even more confusing, with long and misleading internal names

assigned by someone else for their own purposes popping up in wrong places, while still

remaining indistinct and lacking colors.

Aug 06, 2024

For TCP connections, it seems pretty trivial - old netstat (from net-tools project)

and modern ss (iproute2) tools do it fine, where you can easily grep both listening

or connected end by IP:port they're using.

But ss -xp for unix sockets (AF_UNIX, sometimes loosely called "named pipes") doesn't work like

that - only prints socket path for listening end of the connection, which makes

lookups by socket path not helpful, at least with the current iproute-6.10.

"at least with the current iproute" because manpage actually suggests this:

ss -x src /tmp/.X11-unix/*

Find all local processes connected to X server.

Where socket is wrong for modern X - easy to fix - and -p option seems to be

omitted (to show actual processes), but the result is also not at all "local

processes connected to X server" anyway:

# ss -xp src @/tmp/.X11-unix/X1

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

u_str ESTAB 0 0 @/tmp/.X11-unix/X1 26800 * 25948 users:(("Xorg",pid=1519,fd=51))

u_str ESTAB 0 0 @/tmp/.X11-unix/X1 331064 * 332076 users:(("Xorg",pid=1519,fd=40))

u_str ESTAB 0 0 @/tmp/.X11-unix/X1 155940 * 149392 users:(("Xorg",pid=1519,fd=46))

...

u_str ESTAB 0 0 @/tmp/.X11-unix/X1 16326 * 20803 users:(("Xorg",pid=1519,fd=44))

u_str ESTAB 0 0 @/tmp/.X11-unix/X1 11106 * 27720 users:(("Xorg",pid=1519,fd=50))

u_str LISTEN 0 4096 @/tmp/.X11-unix/X1 12782 * 0 users:(("Xorg",pid=1519,fd=7))

It's just a long table listing same "Xorg" process on every line,

which obviously isn't what example claims to fetch, or useful in any way.

So maybe it worked fine earlier, but some changes to the tool or whatever

data it grabs made this example obsolete and not work anymore.

But there are "ports" listed for unix sockets, which I think correspond to

"inodes" in /proc/net/unix, and are global across host (or at least same netns),

so two sides of connection - that socket-path + Xorg process info - and other

end with connected process info - can be joined together by those port/inode numbers.
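That join can be sketched in Python roughly like this. It is not the actual script, just an illustration that assumes "ss -xp" lines in the column layout shown above, with client ends of connections showing up as separate lines that have "*" instead of a path and the two inode numbers swapped (that part is my assumption about the output), and with made-up function names.

```python
import re
from collections import defaultdict

# Matches each ("name",pid=N,fd=M) entry in the users:(...) column
PROC_RE = re.compile(r'\("([^"]+)",pid=(\d+),fd=\d+\)')


def parse_ss(text):
    """Return {local_inode: socket info dict} from "ss -xp" text output."""
    socks = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 8 or not f[5].isdigit() or not f[7].isdigit():
            continue  # header or some unexpected line format
        procs = PROC_RE.findall(f[8]) if len(f) > 8 else []
        socks[int(f[5])] = dict(
            state=f[1], path=f[4], peer=int(f[7]),
            procs={f'{name}[{pid}]' for name, pid in procs})
    return socks


def socket_links(text):
    """Return {socket_path: {'listen': procs, 'clients': procs}} joined by inodes."""
    socks = parse_ss(text)
    links = defaultdict(lambda: dict(listen=set(), clients=set()))
    for sock in socks.values():
        if sock['path'] == '*':
            continue  # pathless end of some connection, found via peer inode
        link = links[sock['path']]
        if sock['state'] == 'LISTEN':
            link['listen'] |= sock['procs']
        elif sock['peer'] in socks:
            link['clients'] |= socks[sock['peer']]['procs']
    return dict(links)
```

Each path-bearing ESTAB line is the server side of one connection, so looking up its peer inode among all parsed lines gives processes on the client side.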

I haven't been able to find a tool to do that for me easily atm, so went ahead to

write my own script, mostly focused on listing per-socket pids on either end, e.g.:

# unix-socket-links

...

/run/dbus/system_bus_socket :: dbus-broker[1190] :: Xorg[1519] bluetoothd[1193]

claws-mail[2203] dbus-broker-lau[1183] efreetd[1542] emacs[2160] enlightenment[1520]

pulseaudio[1523] systemd-logind[1201] systemd-network[1363] systemd-timesyn[966]

systemd[1366] systemd[1405] systemd[1] waterfox[2173]

...

/run/user/1000/bus :: dbus-broker[1526] :: dbus-broker-lau[1518] emacs[2160] enlightenment[1520]

notification-th[1530] pulseaudio[1523] python3[1531] python3[5397] systemd[1405] waterfox[2173]

/run/user/1000/pulse/native :: pulseaudio[1523] :: claws-mail[2203] emacs[2160]

enlightenment[1520] mpv[9115] notification-th[1530] python3[2063] waterfox[2173]

@/tmp/.X11-unix/X1 :: Xorg[1519] :: claws-mail[2203] conky[1666] conky[1671] emacs[2160]

enlightenment[1520] notification-th[1530] python3[5397] redshift[1669] waterfox[2173]

xdpms[7800] xterm[1843] xterm[2049] yeahconsole[2047]

...

Output format is <socket-path> :: <listening-pid> :: <clients...>, where it's

trivial to see exactly what is connected to which socket (and what's listening there).

unix-socket-links @/tmp/.X11-unix/X1 can list only conns/pids for that

socket, and adding -c/--conns can be used to disaggregate that list of

processes back into specific connections (which can be shared between pids too),

to get more like a regular netstat/ss output, but with procs on both ends,

not weirdly broken one like ss -xp gives you.

Script is in the usual mk-fg/fgtk repo (also on codeberg and local git),

with code link and a small doc here:

https://github.com/mk-fg/fgtk?tab=readme-ov-file#hdr-unix-socket-links

Was half-suspecting that I might need to parse /proc/net/unix or load eBPF

for this, but nope, ss has all the info needed, just presents it in a silly way.

Also, unlike some other iproute2 tools where that was added (or lsfd below), it

doesn't have --json output flag, but should be stable enough to parse

anyway, I think, and easy enough to sanity-check by the header.

Oh, and also, one might be tempted to use lsof or lsfd for this, like I did,

but it's more complicated and can be janky to get the right output out of these,

and pretty sure lsof even has side-effects, where it connects to socket with +E

(good luck figuring out what's that supposed to do btw), causing all sorts of

unintended mayhem, but here are snippets that I've used for those in the past

(checking where stalled ssh-agent socket connections are from in this example):

lsof -wt +E "$SSH_AUTH_SOCK" | awk '{print "\\<" $1 "\\>"}' | g -3f- <(ps axlf)

lsfd -no PID -Q "UNIX.PATH == '$SSH_AUTH_SOCK'" | grep -f- <(ps axlf)

Don't think either of those works anymore, maybe for the same reason as with ss

not listing unix socket path for egress unix connections, and lsof in particular

straight-up hangs without even kill -9 getting it, if socket on the other

end doesn't process its (silly and pointless) connection, so maybe don't use

that one at least - lsfd seems to be easier to use in general.

Jul 01, 2024

Usually zsh does fine wrt tab-completion, but sometimes you just get nothing

when pressing tab, either due to a somewhat-broken completer, or it working as

intended but there seemingly being "nothing" to complete.

Recently the latter started happening after redirection characters,

e.g. on cat myfile > <TAB>, and that finally prompted me to re-examine

why I even put up with this crap.

Because in vast majority of cases, completion should use files, except for

commands as the first thing on the line, and maybe some other stuff way more rarely,

almost as an exception.

But completing nothing at all seems like an obvious bug to me,

as if I wanted nothing, I wouldn't have pressed the damn tab key in the first place.

One common way to work around the lack of file-completions when needed,

is to define special key for just those, like shift-tab:

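# Define complete-files widget first, so that bindkey below has something to bind

zle -C complete-files complete-word _generic

zstyle ':completion:complete-files:*' completer _files

bindkey "\e[Z" complete-files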

If using that becomes a habit every time one needs files, that'd be a good solution,

but I still use generic "tab" by default, and expect file-completion from it in most cases,

so why not have it fall back to file-completion if whatever special thing zsh has

otherwise fails - i.e. suggest files/paths instead of nothing.

Looking at _complete_debug output (can be bound/used instead of tab-completion),

it's easy to find where _main_complete dispatcher picks completer script,

and that there is apparently no way to define a fallback of any kind there, but it's

easy enough to patch one in, at least.

Here's the hack I ended up with for /etc/zsh/zshrc:

## Make completion always fall back to next completer if current returns 0 results

# This allows falling back to _files completion properly when fancy _complete fails

# Patch requires running zsh as root at least once, to apply it (or warn/err about it)

_patch_completion_fallbacks() {

local patch= p=/usr/share/zsh/functions/Completion/Base/_main_complete

[[ "$p".orig -nt "$p" ]] && return || {

grep -Fq '## fallback-complete patch v1 ##' "$p" && touch "$p".orig && return ||:; }

[[ -n "$(whence -p patch)" ]] || {

echo >&2 'zshrc :: NOTE: missing "patch" tool to update completions-script'; return; }

read -r -d '' patch <<'EOF'

--- _main_complete 2024-06-09 01:10:28.352215256 +0500

+++ _main_complete.new 2024-06-09 01:10:51.087404762 +0500

@@ -210,18 +210,20 @@

fi

_comp_mesg=

if [[ -n "$call" ]]; then

if "${(@)argv[3,-1]}"; then

ret=0

break 2

fi

elif "$tmp"; then

+ ## fallback-complete patch v1 ##

+ [[ $compstate[nmatches] -gt 0 ]] || continue

ret=0

break 2

fi

(( _matcher_num++ ))

done

[[ -n "$_comp_mesg" ]] && break

(( _completer_num++ ))

done

EOF

patch --dry-run -stN "$p" <<< "$patch" &>/dev/null \

|| { echo >&2 "zshrc :: WARNING: zsh fallback-completions patch fails to apply"; return; }

cp -a "$p" "$p".orig && patch -stN "$p" <<< "$patch" && touch "$p".orig \

|| { echo >&2 "zshrc :: ERROR: failed to apply zsh fallback-completions patch"; return; }

echo >&2 'zshrc :: NOTE: patched zsh _main_complete routine to allow fallback-completions'

}

[[ "$UID" -ne 0 ]] || _patch_completion_fallbacks

unset _patch_completion_fallbacks

This would work with multiple completers defined like this:

zstyle ':completion:*' completer _complete _ignored _files

Here _complete _ignored is the default completer-chain, which will try

whatever zsh has for the command first, and then if those return nothing,

instead of being satisfied with that, patched-in continue will keep going

and run the next completer, which is _files in this case.
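
To make the difference in control flow more obvious, here's a toy model of that dispatcher loop in plain shell. It is not actual zsh completion code: c_complete, c_files and run_completers are made-up names, and the global nmatches variable only stands in for $compstate[nmatches].

```shell
# Toy stand-ins for completers: each one "succeeds", but sets its own match count
c_complete() { nmatches=0; return 0; }  # handled the context, found nothing
c_files()    { nmatches=3; return 0; }  # found some file matches

# $1 = "stock" or "patched", rest = completer function names to try in order
run_completers() {
  mode=$1; shift
  for c in "$@"; do
    nmatches=0
    if "$c"; then
      # Patched-in check: success with zero matches moves on to the next one
      if [ "$mode" = patched ] && [ "$nmatches" -eq 0 ]; then continue; fi
      echo "$c:$nmatches"; return 0
    fi
  done
  echo "none:0"; return 1
}
```

Running run_completers stock c_complete c_files stops at the first completer that returns success, even with zero matches, while run_completers patched c_complete c_files moves on and ends up with c_files results.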

The patch has generous context so that it finds the right place and bails if upstream

code changes. Otherwise, the first time the shell is run as root, it fixes the issue

until the next zsh package update (after which the patch will run and fix it again).

Doubt it'd make sense upstream in this form, as presumably current behavior is

locked-in over years, but an option for something like this would've been nice.

I'm content with a hack for now though, it works too.