The biggest issue I have with fossil scm is that it's not git - there are just too many advanced tools I got used to with git over time, which will probably never be implemented in fossil just because of its "lean single binary" philosophy.

And things get even worse when you need to bridge git and fossil repos - the common denominator here is git, so it's either a constant "export-merge-import" cycle or some hacks, since fossil doesn't support incremental export to a git repo out of the box (though it does support full import/export), and git doesn't seem to have a plugin to track fossil remotes (yet?).

I thought of migrating away from fossil, but there's just no substitute (although there have been quite a lot of attempts to implement one) for distributed issue tracking and documentation right in the same repository, in a plain, easy-to-access format, with a sensible web frontend for those who don't want to install/learn an scm and clone the repo just to file a ticket.

None of the git-based tools I've been able to find seem to meet these (seemingly) simple criteria, so dual-stack it is.

The solution I came up with is real-time mirroring of all the changes in fossil repositories to git, which boils down to:

- watching fossil-path with inotify(7) for IN_MODIFY events (needs pyinotify for that)
- checking for new revisions in the fossil (source) repo against the tip of the git one
- comparing these by timestamps, which are kept in perfect sync (by fossil-export as well)
- exporting revisions from fossil as full artifacts (blobs) and importing these into git via git-fast-import
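The last step relies on git-fast-import's plain-text stream format. As a rough illustration (not the actual fossil_echo code), here's a minimal Python sketch that renders one check-in, given as full file blobs, into a fast-import "commit" command with inline data; the function name and arguments are hypothetical, file modes are simplified to 100644 and deletions aren't handled.

```python
def fast_import_commit(branch, name, email, timestamp, message, files, parent=None):
    """Render one commit in git-fast-import format (inline blobs, UTC timestamp).

    files: dict of path -> bytes, i.e. the full content of every changed file.
    parent: optional commit-ish for the "from" line (omit to continue the branch
        or for the very first commit).
    """
    chunks = []

    def data(payload):
        # "data <count>", then exactly <count> raw bytes, then an optional newline
        chunks.append(b"data %d\n" % len(payload))
        chunks.append(payload + b"\n")

    chunks.append(b"commit refs/heads/%s\n" % branch.encode())
    # Reusing the source timestamp is what keeps both sides comparable later
    chunks.append(b"committer %s <%s> %d +0000\n" % (name.encode(), email.encode(), timestamp))
    data(message.encode())
    if parent:
        chunks.append(b"from %s\n" % parent.encode())
    for path, content in sorted(files.items()):
        chunks.append(b"M 100644 inline %s\n" % path.encode())
        data(content)
    return b"".join(chunks)
```

The resulting bytes can be fed to something like `subprocess.run(["git", "fast-import", "--quiet"], input=stream, cwd=git_dir, check=True)`; emitting whole blobs inline avoids having to track marks between runs, at the cost of a bigger stream.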

It's also capable of doing oneshot updates (in which case it doesn't need anything but python-2.7, git and fossil), bootstrapping git mirrors as new fossil repos are created, and catching up with their sync on startup.
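Catching up basically means picking the fossil check-ins that are newer than the git tip. A sketch of that comparison, assuming the check-in list was already pulled from fossil's "event" table (where, as far as I know, check-ins have type 'ci' and mtime is stored as a Julian day number) and that the git tip time comes from something like "git log -1 --format=%ct <branch>"; both helper names are hypothetical.

```python
JULIAN_UNIX_EPOCH = 2440587.5  # Julian day number of 1970-01-01 00:00 UTC

def julian_to_unix(jd):
    """Convert a Julian day number (fossil's mtime format) to unix seconds."""
    return int(round((jd - JULIAN_UNIX_EPOCH) * 86400))

def revisions_to_export(checkins, git_tip_ts):
    """Return (unix_ts, rid) pairs strictly newer than the git tip, oldest first.

    checkins: iterable of (rid, julian_mtime) pairs for the tracked branch.
    git_tip_ts: unix timestamp of the git branch tip, or None for an empty repo.
    """
    pending = sorted((julian_to_unix(mtime), rid) for rid, mtime in checkins)
    if git_tip_ts is None:
        return pending  # fresh mirror: export everything
    return [(ts, rid) for ts, rid in pending if ts > git_tip_ts]
```

This only works because exported commits reuse the fossil timestamps exactly, so "newer than the tip" is a reliable test.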

While the script uses fairly low-level (but standard and documented here and there) scm internals, it was actually very easy to write (~200 lines, mostly simple processing/generation code), because both scms in question are built upon principles of simple and robust design, which I deeply admire.

One limitation is that it only tracks one branch, specified at startup ("trunk" by default), and doesn't care about tags at the moment, but I'll probably fix the latter the next time I do some tagging (and hence have a real-world test case).

It's also trivial to make the script do two-way synchronization, since fossil supports "fossil import --incremental" updates right from git-fast-export output, so it's just a simple pipe, which can be run on demand without any special tools.
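The reverse direction could look roughly like this Python sketch, which just connects "git fast-export" to "fossil import --git --incremental"; I'm assuming a fossil build whose import command accepts those flags (check "fossil help import" on yours), and the function names are made up for illustration.

```python
import subprocess

def pipe_commands(git_dir, fossil_repo, branch="trunk"):
    """argv lists for both ends of the git -> fossil pipe (flags are assumptions)."""
    export_cmd = ["git", "--git-dir=" + git_dir, "fast-export", branch]
    import_cmd = ["fossil", "import", "--git", "--incremental", fossil_repo]
    return export_cmd, import_cmd

def git_to_fossil(git_dir, fossil_repo, branch="trunk"):
    """Run "git fast-export <branch> | fossil import --git --incremental <repo>"."""
    export_cmd, import_cmd = pipe_commands(git_dir, fossil_repo, branch)
    with subprocess.Popen(export_cmd, stdout=subprocess.PIPE) as export:
        # fossil reads the fast-export stream straight from git's stdout
        subprocess.run(import_cmd, stdin=export.stdout, check=True)
    # leaving the with-block closed the pipe and waited for git to exit
    if export.returncode != 0:
        raise subprocess.CalledProcessError(export.returncode, export_cmd)
```

This re-exports the whole branch every time; git fast-export's --export-marks/--import-marks options are the usual way to limit that to new commits, though I haven't checked how fossil's incremental import handles either case.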

The script itself.

fossil_echo --help:

```
usage: fossil_echo [-h] [-1] [-s] [-c] [-b BRANCH] [--dry-run] [-x EXCLUDE]
                   [-t STAT_INTERVAL] [--debug]
                   fossil_root git_root

Tool to keep fossil and git repositories in sync. Monitors fossil_root for
changes in *.fossil files (which are treated as source fossil repositories)
and pushes them to corresponding (according to basename) git repositories.
Also has --oneshot mode to do a one-time sync between specified repos.

positional arguments:
  fossil_root           Path to fossil repos.
  git_root              Path to git repos.

optional arguments:
  -h, --help            show this help message and exit
  -1, --oneshot         Treat fossil_root and git_root as repository paths and
                        try to sync them at once.
  -s, --initial-sync    Do an initial sync for every *.fossil repository found
                        in fossil_root at start.
  -c, --create          Dynamically create missing git repositories (bare)
                        inside git-root.
  -b BRANCH, --branch BRANCH
                        Branch to sync (must exist on both sides, default:
                        trunk).
  --dry-run             Dump git updates (fast-import format) to stdout,
                        instead of feeding them to git. Cancels --create.
  -x EXCLUDE, --exclude EXCLUDE
                        Repository names to exclude from syncing (w/o .fossil
                        or .git suffix, can be specified multiple times).
  -t STAT_INTERVAL, --stat-interval STAT_INTERVAL
                        Interval between polling source repositories for
                        changes, if there's no inotify/kevent support
                        (default: 300s).
  --debug               Verbose operation mode.
```
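Without inotify/kevent, the --stat-interval fallback amounts to periodically comparing mtimes. A minimal sketch of one polling pass, assuming plain os.stat()-style polling is what's wanted; the function name and the "state" dict are my own, not taken from the script.

```python
import os

def changed_fossil_repos(fossil_root, state):
    """One polling pass: return names of *.fossil repos that are new or modified.

    state: dict of repo name -> last seen mtime, updated in place between passes.
    """
    changed = []
    for entry in os.scandir(fossil_root):
        if not (entry.is_file() and entry.name.endswith(".fossil")):
            continue
        name = entry.name[: -len(".fossil")]
        mtime = entry.stat().st_mtime
        if state.get(name) != mtime:
            state[name] = mtime
            changed.append(name)
    return sorted(changed)
```

A loop around it would be something like `while True: ...; time.sleep(300)`, calling a (hypothetical) per-repo sync function for each returned name and skipping anything passed via -x.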

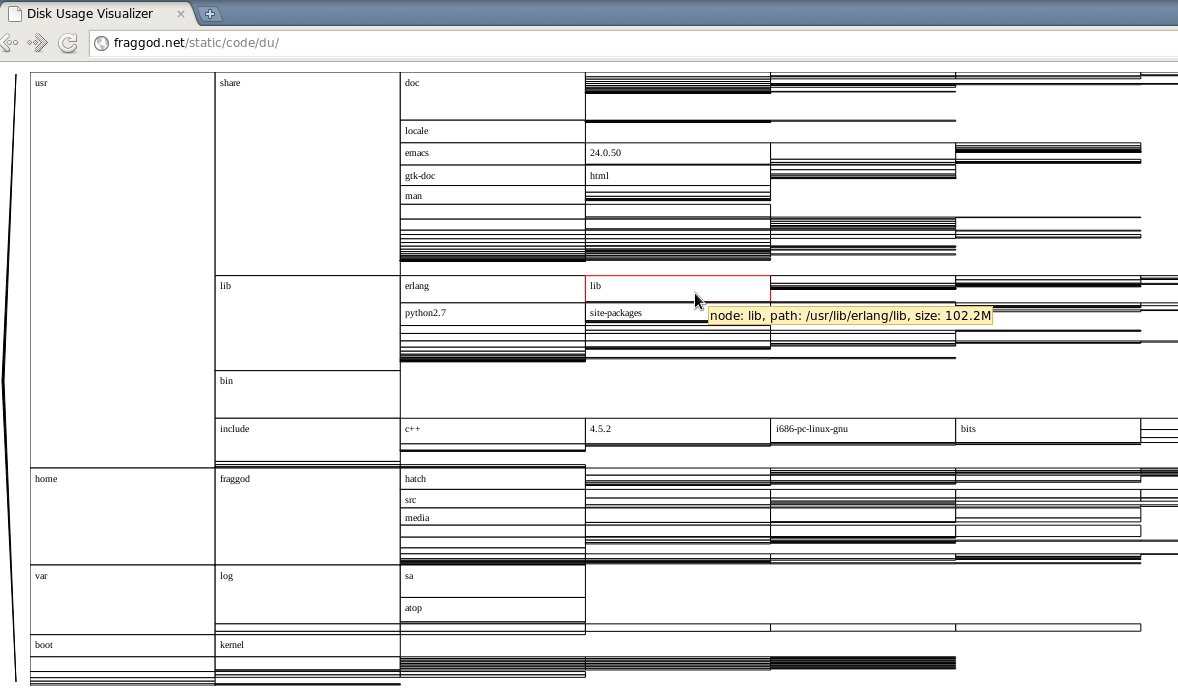

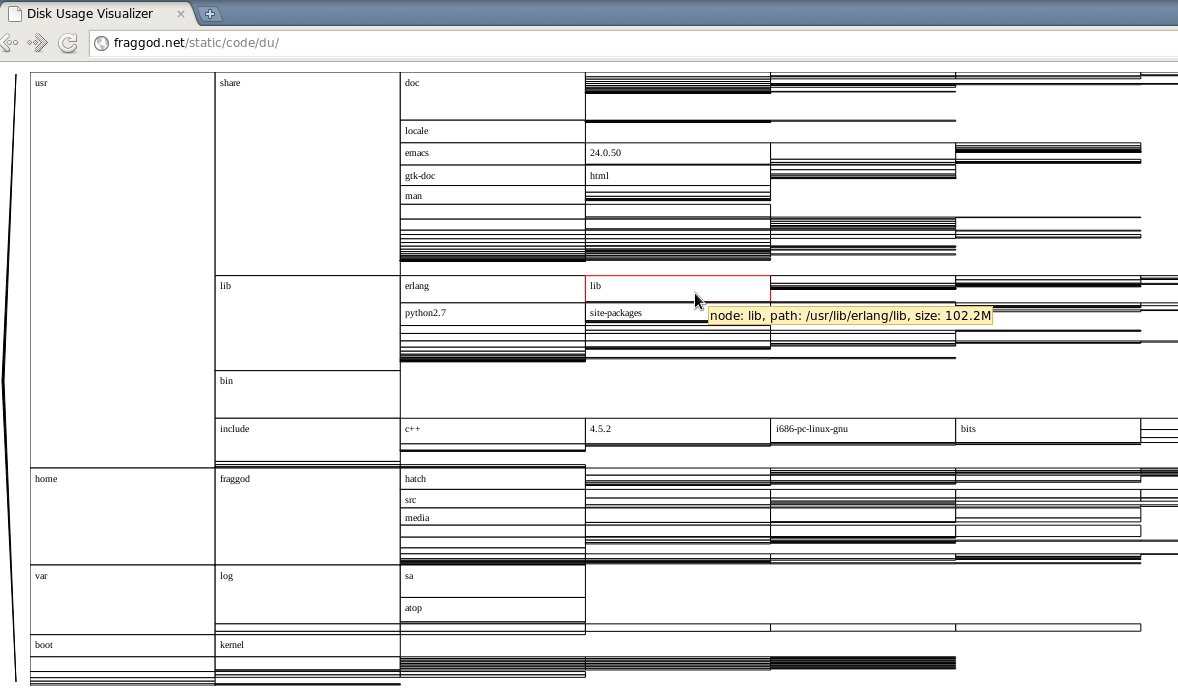

xdiskusage(1) is a simple and useful tool to visualize disk space usage (a must-have thing in any admin's toolkit!).

Probably the best thing about it is that it's built on top of the "du" command, so if there's a problem with free space on a remote X-less server, just run "ssh user@host 'du -k' | xdiskusage", and in a few moments you'll get an idea of where the space has gone.
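Since everything starts from plain "du -k" output ("<size>\t<path>" lines, with sizes already cumulative per directory), turning it into a tree takes only a few lines. Here's a Python sketch of that parsing step; my actual tool does this in coffeescript, and the node layout here is illustrative.

```python
import os

def parse_du(lines):
    """Build a tree from "du -k" output lines ("<kbytes>\t<path>").

    Returns the list of root nodes; each node is a dict with "name",
    "size" (KiB) and "children" (sorted largest first).
    """
    nodes = {}
    for line in lines:
        size, path = line.rstrip("\n").split("\t", 1)
        nodes[path] = {"name": os.path.basename(path) or path,
                       "size": int(size), "children": []}
    roots = []
    for path, node in nodes.items():
        parent = nodes.get(os.path.dirname(path))
        # "/" is its own dirname, so guard against linking a node to itself
        if parent is not None and parent is not node:
            parent["children"].append(node)
        else:
            roots.append(node)
    for node in nodes.values():
        node["children"].sort(key=lambda n: n["size"], reverse=True)
    return roots
```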

Lately, though, I've had problems building fltk, and noticed that xdiskusage is the only app that uses it on my system, so I just got rid of both, in the hope of finding some lightweight gtk replacement (I don't have qt either).

Maybe I do suck at googling (or just give up too early), but filelight (kde util), baobab (gnome util) and philesight (ruby) are pretty much the only alternatives I've found. The first one drags in half of kde, the second one half of gnome, and I don't really need ruby on my system either.

And for what? xdiskusage seems to be totally sufficient and much easier to interpret (apparently it's a lot easier for me to compare lengths than angles) than the stupid round graphs that filelight and its ruby clone produce, plus it looks like a no-brainer to write.

There are some CLI alternatives as well, but this task is definitely outside the CLI domain.

So I wrote this tool. The real source is actually coffeescript (here); the JS is compiled from it.

Initially I wanted to do this in python, but then took a break to read some reddit and blogs, which just happened to push me in the direction of the web. Good thing they did, too, as it turned out to be simple and straightforward to work with graphics there these days.

I didn't use the (much-hyped) html5 canvas though, since svg seems to fit much better into the html world, plus it's much easier to make it interactive (titles, events, changes, etc).

Aside from the intended stuff, the tool also exposes performance shortcomings in the firefox and opera browsers - both are horribly slow at pasting large text into a textarea (or an iframe in "design mode") and just slow at rendering svg. Google chrome is fairly good at both tasks.

Not that I'll migrate all my firefox addons/settings and habits to chrome anytime soon, but it's certainly something to think about.

Also, the JS calculations can probably be made a hundred times faster by caching the size of traversed subtrees (right now they're recalculated a gazillion times over, and that's basically all the work).

I was just too lazy to do it initially, and textarea pasting is still a lot slower than the JS, so it doesn't seem to be a big deal, but I guess I'll do that eventually anyway.
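The caching idea is just memoizing each subtree's total, so it's computed once per node instead of on every traversal. A Python sketch of it (the coffeescript code isn't reproduced here; the node layout with an "own" size and a "children" list is an assumption):

```python
def subtree_sizes(root):
    """Compute the total size of every subtree in one post-order pass.

    Nodes are dicts with an "own" size and a "children" list. Returns a dict
    keyed by id(node), so each total is computed exactly once and then reused.
    """
    totals = {}
    stack = [(root, False)]
    while stack:
        node, children_done = stack.pop()
        if children_done:
            # all children were finished earlier, so their totals are cached
            totals[id(node)] = node["own"] + sum(totals[id(c)] for c in node["children"])
        else:
            stack.append((node, True))
            stack.extend((child, False) for child in node["children"])
    return totals
```

An explicit stack instead of recursion keeps deep directory trees from hitting recursion limits.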

Working with a number of non-synced servers remotely (via fabric) lately, I've found the need to push updates to a set of (fairly similar) files.

It's a slightly different story for each server, of course: crontabs for a web backend with a lot of periodic maintenance, data-shuffle and cache-related tasks, firewall configurations, common html templates... well, you get the idea.

I'm not the only one who makes changes there, and without any change/version control for these sets of files, the state of each file/server combo is essentially unique, and an accidental change can only be reverted from a weekly backup.

Not really a sensible infrastructure as far as I can tell (or am used to), but since I'm a total noob here, having worked for only a couple of weeks, global changes are out of the question, plus I've got my hands full with other tasks as it is.

So, I needed to change files, keeping the old state of each one in case a rollback is necessary, and actually check the remote state before updating files, since someone might've introduced either the same or a conflicting change while I was preparing mine.

The problem of conflicting changes can be solved by keeping some reference (local) state and just applying patches on top of it. If the file in question is important enough, having such state is doubly handy, since you can pull the remote state in case of changes there, look through the diff (if any) and then decide whether the patch is still valid or not.
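That check-then-patch flow is easy to sketch with Python's difflib: compare the remote file against the locally kept reference copy, push the new version only if nothing drifted, and otherwise hand back a diff for review. The function and its return convention are hypothetical, not taken from my fabric code.

```python
import difflib

def prepare_update(reference, remote, desired, name="file"):
    """Decide whether `desired` can safely replace `remote`.

    All three arguments are file contents as strings. Returns (ok, diff):
    ok=True means the remote still matches the reference and `diff` is the
    change about to be applied; ok=False means someone changed the remote
    file, and `diff` shows their change (reference -> remote) for review.
    """
    def udiff(a, b, a_name, b_name):
        return "".join(difflib.unified_diff(
            a.splitlines(True), b.splitlines(True), a_name, b_name))

    if remote != reference:
        return False, udiff(reference, remote, name + ".ref", name + ".remote")
    return True, udiff(reference, desired, name + ".ref", name + ".new")
```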

The problem of rollbacks was solved long ago by various versioning tools.

Combined, the two issues kinda beg for some sort of storage with a history of changes for each value there, and since it's basically text, diffs and patches between any points of this history would also be nice to have.

That's the domain of SCMs, but my use-case is a bit more complicated than their usual usage, in that I need to create new revisions non-interactively - ideally via something like a key-value api (set, get, get_older_version), with the usual interactive interface to the history at hand in case of any conflicts or complications.
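To make the desired interface concrete, here's a minimal in-memory Python sketch of it: set/get/get_older_version plus a diff between any two revisions. It only illustrates the API shape I had in mind; it's not backed by git and isn't gitshelve's interface.

```python
import difflib

class VersionedStore:
    """Toy key-value store that keeps every revision of every value."""

    def __init__(self):
        self._history = {}  # key -> list of values, oldest first

    def set(self, key, value):
        revs = self._history.setdefault(key, [])
        if not revs or revs[-1] != value:  # skip no-op revisions
            revs.append(value)

    def get(self, key):
        return self._history[key][-1]

    def get_older_version(self, key, steps_back=1):
        """Value as it was `steps_back` revisions ago (0 is the current one)."""
        revs = self._history[key]
        if not 0 <= steps_back < len(revs):
            raise KeyError("%s has no revision %d steps back" % (key, steps_back))
        return revs[-1 - steps_back]

    def diff(self, key, old=1, new=0):
        """Unified diff between two revisions, given as steps back from current."""
        a = self.get_older_version(key, old).splitlines(True)
        b = self.get_older_version(key, new).splitlines(True)
        return "".join(difflib.unified_diff(
            a, b, "%s@-%d" % (key, old), "%s@-%d" % (key, new)))
```

With a git-backed store, each set() would become a commit and get_older_version() a lookup of the value at some older revision, which is where the history, diff and merge tooling comes in for free.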

Being most comfortable with git, I looked for non-interactive db solutions on top of it, and the simplest one I've found was gitshelve.

GitDB seems to be more powerful, but unnecessarily complex for my use-case.

Then I just implemented patch (update key by a diff stream) and diff (generate diff stream from key and file) methods on top of gitshelve, plus a writeback operation, and thus got a fairly complete implementation of what I needed.

Looking at such storage from a DBA perspective, it's looking pretty good - integrity and atomicity are assured by git locking, all sorts of replication and merging are possible in a quite efficient and robust manner via git-merge and friends, and the cli interface and transparency of operation are just superb. Regular storage performance is probably far below db level, but that's not an issue in my use-case.

Here's gitshelve and state.py, as used in my fabric stuff. The fabric imports can just be dropped there without much problem (I use the fabric api to vary keys depending on host).

Pity I'm far more used to git than to pure-py solutions like mercurial or bazaar, since it'd probably have been much cleaner and simpler to implement such storage on top of them - they probably expose a python interface directly.

Guess I'll put rewriting the thing on top of hg on my long todo list.

I've had an IPv6 tunnel from HE for a few years now, but since I changed ISPs about a year ago, I've been unable to use it, because the new ISP dropped sit tunnel packets for some weird reason.

A quick check yesterday revealed that this limitation seems to have been lifted, so I re-enabled the tunnel at once.

All the IPv6-enabled stuff immediately started using AAAA-provided IPs, and that resulted in some problems.

A particularly annoying thing is that the ZNC IRC bouncer managed to lose its connection to freenode about five times in two days, interrupting conversations and missing some channel history.

Of course, the problem can be easily solved by making znc connect to IPv4 addresses, as it was doing before, but since there's no option like "connect to IPv4" and "irc.freenode.net" doesn't seem to have an alias like "ipv4.irc.freenode.net", that'd mean either specifying a single IP in znc.conf (instead of the DNS-provided list of servers) or filtering AAAA results, while leaving A records intact.

The latter solution seems better in many ways, so I decided to look for something that can override AAAA RRs for a single domain (irc.freenode.net in my case) or a configurable list of them.
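Whatever ends up doing the filtering, the "configurable list of domains" part is just a suffix match on DNS labels. A small Python sketch (a hypothetical helper, not part of any resolver):

```python
def strip_aaaa_for(name, domains):
    """True if AAAA answers for `name` should be suppressed.

    Matches each listed domain and all of its subdomains, comparing whole
    labels case-insensitively, so "irc.freenode.net" matches "freenode.net"
    but "notfreenode.net" doesn't.
    """
    labels = name.lower().rstrip(".").split(".")
    for domain in domains:
        suffix = domain.lower().rstrip(".").split(".")
        if labels[-len(suffix):] == suffix:
            return True
    return False
```

Comparing label lists rather than using str.endswith() is what keeps "notfreenode.net" from matching.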

I use the dead-simple dnscache resolver from the djbdns bundle, which doesn't seem to be capable of such filtering by itself.

ISC BIND seems to have a "filter-aaaa" global option to provide A-only results to a list of clients/networks, but that's also not what I need, since it would make IPv6-only mirrors (which I seem to stumble upon more and more lately) inaccessible.

The rest of the recursive DNS resolvers don't seem to have even that capability, so some hack was needed here.

A useful feature that most resolvers do have, though, is the ability to query specific DNS servers for specific domains. Even dnscache is capable of doing that, so putting BIND with AAAA resolution disabled behind dnscache and forwarding the freenode.net domain to it should do the trick.

But installing and running BIND just to resolve one domain (or maybe a few more in the future) looks like overkill to me, so I thought of twisted and its names component, which implements DNS protocols.

And all it took with twisted to implement such a no-AAAA DNS proxy, as it turns out, was these five lines of code:

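```python
from twisted.names import client, dns, server

class IPv4OnlyResolver(client.Resolver):
    # Answer every AAAA query with the AAAA lookup for a name that has no
    # IPv6 records, so clients only ever end up with usable A records.
    def lookupIPV6Address(self, name, timeout=None):
        return self._lookup('nx.fraggod.net', dns.IN, dns.AAAA, timeout)

protocol = dns.DNSDatagramProtocol(
    # client.Resolver needs upstream servers (or resolv=...); 192.0.2.1 is a placeholder
    server.DNSServerFactory(clients=[IPv4OnlyResolver(servers=[('192.0.2.1', 53)])]) )
# then bind it, e.g. reactor.listenUDP(53, protocol, interface=...), and reactor.run()
```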

Meh, should've skipped the search for an existing implementation altogether.

That script plus "echo IP > /etc/djbdns/cache/servers/freenode.net" solved the problem, although dnscache doesn't seem to be capable of forwarding queries to a non-standard port, so the proxy has to be bound to a specific localhost address on the standard DNS port, not just some wildcard:port socket.

The code, with a trivial CLI, logging, dnscache forwarders-file support and caching of redirected AAAA answers, is here.

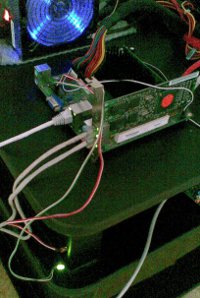

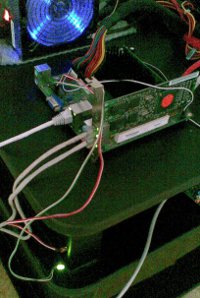

I'd heard about how easy it is to control stuff with a parallel port, but recently I was asked to write a simple script to send repeated signals to some hardware via lpt, and I really needed some way to test whether the signals were coming through or not.

Googling around a bit, I found that it's trivial to plug leds right into the port, and did just that to test the script.

Since it's trivial to control these leds and they provide quite a simple means of visual notification for otherwise headless servers, I've put together another script to monitor system resource usage and indicate extra-high load with various blinking rates.

Probably the coolest thing is that the parallel port on mini-ITX motherboards comes as a standard "male" pin group like usb or audio, with "ground" pins exactly opposite the "data" pins, so it's easy to take a few leds (power, ide, and they usually come in different colors!) from an old computer case and plug these directly into the board.

Making leds blink actually involves actively switching data bits on the port in an infinite loop, so I've forked one subprocess to do that, while another one checks/processes the data and feeds blinking interval updates to the first one via a pipe.
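A rough Python sketch of the blinking side of that split, using a plain multiprocessing Pipe for interval updates instead of twisted; in real use "write" would be something like pyparallel's Parallel().setData, and the message convention (a half-period in seconds, 0 or None for "off") is my assumption, not the script's actual protocol.

```python
def blink_loop(conn, write, max_toggles=None):
    """Blink LEDs by flipping parallel-port data bits at a rate read from `conn`.

    conn: receiving end of a multiprocessing Pipe; each message is a new
        half-period in seconds, 0 or None meaning "LEDs off until told otherwise".
    write: callable taking a byte value, e.g. pyparallel's Parallel().setData.
    max_toggles: stop after this many writes (only meant for testing).
    """
    interval, state, writes = None, 0x00, 0
    while max_toggles is None or writes < max_toggles:
        # Wait one half-period, but wake up at once if a new rate arrives;
        # with blinking off, poll(None) blocks until the next update.
        if conn.poll(interval or None):
            interval = conn.recv()
            if not interval and state:
                state = 0x00  # switch everything off
                write(state)
                writes += 1
            continue
        state ^= 0xFF  # flip all eight data lines at once
        write(state)
        writes += 1
```

Run it as `multiprocessing.Process(target=blink_loop, args=(child_conn, port.setData))`, and have the monitoring side call `parent_conn.send(0.2)` whenever the load level changes.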

System load data is easy to acquire from "/proc/loadavg" for cpu, and as the "%util" percentage from "sar -d" reports for disks.

And the easiest way to glue several subprocesses and a timer together into an eventloop is twisted, so the script is basically 150 lines of sar output processing, checks and blinking rate settings.
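The cpu part of those checks is trivial. Here's a sketch that parses /proc/loadavg and maps the 1-minute load per cpu onto a blink half-period to feed to the blinking subprocess; the thresholds and intervals are made up for illustration and aren't the ones my script uses.

```python
import os

def read_loadavg(text=None):
    """Return the (1, 5, 15)-minute load averages from /proc/loadavg contents."""
    if text is None:
        with open("/proc/loadavg") as src:
            text = src.read()
    return tuple(float(v) for v in text.split()[:3])

# (load per cpu, LED half-period in seconds); illustrative thresholds only
BLINK_LEVELS = [(4.0, 0.05), (2.0, 0.2), (1.0, 0.5)]

def blink_interval(load1, ncpus=None):
    """Pick a blink half-period for a given 1-minute load, or None for "off"."""
    per_cpu = load1 / (ncpus or os.cpu_count() or 1)
    for threshold, interval in BLINK_LEVELS:
        if per_cpu >= threshold:
            return interval
    return None
```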

Obligatory link to the source. Deps are python-2.7, twisted and pyparallel.

I guess mail notifications could've been just as useful, but quickly-blinking leds are more spectacular, and kind of a way to utilize legacy hardware capabilities that these motherboards still have.

Systemd does a good job of monitoring and restarting services. It also keeps track of "failed" services, which you can easily see in systemctl output.

The problem for me is that services that should be running on the machine don't always "fail".

I can stop them and forget to start them again, a .service file can be broken (e.g. reload may actually terminate the service), they can be accidentally or deliberately killed, or they can just exit with code 0 due to some internal event, just because they think it's okay to stop now.

Most often such "accidents" seem to happen on boot - some services just perform sanity checks, see that some required path or socket is missing and exit, sometimes with code 0.

As a good sysadmin, you take a peek at systemctl, see no failures there and think "ok, successful reboot, everything is started", and well, it's not, and systemd doesn't reveal that fact.

What's needed here is a kind of "dashboard" of what is enabled, and thus should be running, with a clear indication if something is not. The best implementation of such a thing I've seen is in the openrc init system, which comes with baselayout-2 on Gentoo Linux ("unstable" or "~" branch atm, but I guess it'll be stabilized one day):

```
root@damnation:~# rc-status -a
Runlevel: shutdown
 killprocs        [ stopped ]
 savecache        [ stopped ]
 mount-ro         [ stopped ]
Runlevel: single
Runlevel: nonetwork
 local            [ started ]
Runlevel: cryptinit
 rsyslog          [ started ]
 ip6tables        [ started ]
...
 twistd           [ started ]
 local            [ started ]
Runlevel: sysinit
 dmesg            [ started ]
 udev             [ started ]
 devfs            [ started ]
Runlevel: boot
 hwclock          [ started ]
 lvm              [ started ]
...
 wdd              [ started ]
 keymaps          [ started ]
Runlevel: default
 rsyslog          [ started ]
 ip6tables        [ started ]
...
 twistd           [ started ]
 local            [ started ]
Dynamic Runlevel: hotplugged
Dynamic Runlevel: needed
 sysfs            [ started ]
 rpc.pipefs       [ started ]
...
 rpcbind          [ started ]
 rpc.idmapd       [ started ]
Dynamic Runlevel: manual
```

Just "grep -v started" and you see everything that's "stopped", "crashed", etc.

The implementation uses the extensive dbus interface provided by systemd to get the set of all .service units loaded by systemd, then gets the "enabled" stuff from symlinks on the filesystem. The latter are easily located at /{etc,lib}/systemd/system/*/*.service, and systemd doesn't seem to keep track of these, using them only at boot time.
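The filesystem half of that is a simple glob over those symlink dirs, and the dbus half just asks systemd's Manager object for its unit list. A sketch of both, assuming dbus-python for the latter (ListUnits is part of systemd's org.freedesktop.systemd1.Manager interface); unlike the real tool, it doesn't filter out Type=oneshot units or do anything else fancy.

```python
import glob
import os

def enabled_services(roots=("/etc/systemd/system", "/lib/systemd/system")):
    """Names of .service units symlinked into some subdir (i.e. "enabled")."""
    found = set()
    for root in roots:
        for path in glob.glob(os.path.join(root, "*", "*.service")):
            if os.path.islink(path):
                found.add(os.path.basename(path))
    return found

def active_services():
    """Map of loaded .service units to their ActiveState, via systemd's dbus API."""
    import dbus  # dbus-python; imported here so the rest works without it
    bus = dbus.SystemBus()
    manager = dbus.Interface(
        bus.get_object("org.freedesktop.systemd1", "/org/freedesktop/systemd1"),
        "org.freedesktop.systemd1.Manager")
    # ListUnits() entries start with (name, description, load_state, active_state, ...)
    return {str(u[0]): str(u[3]) for u in manager.ListUnits()
            if str(u[0]).endswith(".service")}

def not_running(enabled, states):
    """Enabled services that are loaded but not active, plus unknown (not loaded) ones."""
    down = sorted(s for s in enabled if s in states and states[s] != "active")
    unknown = sorted(s for s in enabled if s not in states)
    return down, unknown
```

Typical use would be `not_running(enabled_services(), active_services())`, printing the first list by default and the second one for something like an "unknown" mode.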

Having some experience with the rc-status tool from openrc, I also fixed its main annoyance - there's no point in showing "started" services, ever! I've only ever cared about "not enabled" or "not started", and the shitload of "started" crap it dumps is just annoying and always has to be grepped out.

So, meet the systemd-dashboard tool:

```
root@damnation:~# systemd-dashboard -h
usage: systemd-dashboard [-h] [-s] [-u] [-n]

Tool to compare the set of enabled systemd services against currently running
ones. If started without parameters, it'll just show all the enabled services
that should be running (Type != oneshot) yet for some reason they aren't.

optional arguments:
  -h, --help         show this help message and exit
  -s, --status       Show status report on found services.
  -u, --unknown      Show enabled but unknown (not loaded) services.
  -n, --not-enabled  Show list of services that are running but are not
                     enabled directly.
```

Simple invocation will show what's not running while it should be:

```
root@damnation:~# systemd-dashboard
smartd.service
systemd-readahead-replay.service
apache.service
```

Adding the "-s" flag will show what happened there in more detail (by the grace of the "systemctl status" command):

```
root@damnation:~# systemd-dashboard -s
smartd.service - smartd
      Loaded: loaded (/lib64/systemd/system/smartd.service)
      Active: failed since Sun, 27 Feb 2011 11:44:05 +0500; 2s ago
     Process: 16322 ExecStart=/usr/sbin/smartd --no-fork --capabilities (code=killed, signal=KILL)
      CGroup: name=systemd:/system/smartd.service

systemd-readahead-replay.service - Replay Read-Ahead Data
      Loaded: loaded (/lib64/systemd/system/systemd-readahead-replay.service)
      Active: inactive (dead)
      CGroup: name=systemd:/system/systemd-readahead-replay.service

apache.service - apache2
      Loaded: loaded (/lib64/systemd/system/apache.service)
      Active: inactive (dead) since Sun, 27 Feb 2011 11:42:34 +0500; 51s ago
     Process: 16281 ExecStop=/usr/bin/apachectl -k stop (code=exited, status=0/SUCCESS)
    Main PID: 5664 (code=exited, status=0/SUCCESS)
      CGroup: name=systemd:/system/apache.service
```

Would you have noticed that readahead fails on a remote machine because the kernel is missing fanotify, and the service apparently thinks "it's okay not to start" in this case? What about the smartd you killed a while ago and forgot to restart?

And you can check whether you forgot to enable something with the "-n" flag, which will show all the running stuff that was not explicitly enabled.

The code is under a hundred lines of python, with the dbus-python package as its only dep. You can grab the initial version (probably not updated much, although it's probably finished as it is) from here, or a maintained version from the fgtk repo (yes, there's an anonymous login form to pass).

If anyone else finds the thing useful, I'd appreciate it if you'd raise awareness of the issue within the systemd project - I'd much rather see such functionality in the main package than hacked up around it on an ad-hoc basis.

Update (+20d): the issue was noticed and will probably be addressed in systemd. Yay!

Update 2019-10-02: This still works, but only for the old cgroup-v1 interface, and is deprecated as such, plus largely unnecessary with modern systemd - see the "cgroup-v2 resource limits for apps with systemd scopes and slices" post for more info.

Linux control groups (cgroups) rock.

If you've never used them at all, you bloody well should.

"git gc --aggressive" of a linux kernel tree killing your disk and cpu? Background compilation makes desktop apps sluggish? Apps step on each other's toes? Disk activity totally kills performance?

I've lived with all of the above on the desktop in the (not so distant) past, and cgroups just make all this shit go away - even forkbombs and super-multithreaded i/o can just be isolated in their own cgroup (from which there's no way to escape, not with any amount of forks) and scheduled/throttled as necessary (cpu hard-throttling - w/o cpusets - seems to be coming soon as well).

There do seem to be some problems with process classification and management of these limits, though.

Systemd does a great job of classifying everything outside of the user session (i.e. all the daemons) - any rc/cgroup can be specified right in the unit files or set by default via system.conf.

And it also makes all this stuff neat and tidy, because cgroup support there is not even optional - it's the basic mechanism systemd is built on, used to isolate all the processes belonging to one daemon or another in place of the (hopefully gone for good) crappy and unreliable pidfiles. No renegade processes, leftovers, pid mixups... etc, ever.

Bad news however is that all the cool stuff it can do ends right there.

Classification is nice, but there's little point in it from a resource-limiting perspective without setting the actual limits, and systemd doesn't do that (recent thread on systemd-devel).

Besides, no classification for user-started processes means that desktop users are totally on their own, since all resource consumption there branches off the user's fingertips. And the desktop is where responsiveness actually matters for me (as in "me the regular user"), so clearly something is needed to create cgroups, set limits there and classify processes into these cgroups.

The libcgroup project looks like the remedy at first, but since I started using it about a year ago, it's been nothing but a source of pain.

The first task that stands before it is to actually create the cgroup tree, mount all the resource controller pseudo-filesystems and set the appropriate limits there.

The libcgroup project has cgconfigparser for that, which is probably the most brain-dead tool one can come up with. Configuration is a stone-age pain in the ass, making you clench your teeth, fuck all the DRY principles and write N*100 lines of crap for even the simplest tasks, with as much punctuation crammed in as possible w/o making your eyes water.

Then that cool toy parses the config, giving no indication of where you messed up beyond a dreaded message like "failed to parse file". Maybe it's not any harder to get right by hand than XML, but at least XML-processing tools give some useful feedback.

Syntax aside, the tool still sucks hard when it comes to applying all the stuff there - it either does every mount/mkdir/write w/o error or just gives you the same "something failed, go figure" indication. Something already being mounted counts as a failure as well, so it doesn't play along with anything, including systemd.

Worse yet, when it inevitably fails, it starts a "rollback" sequence, unmounting and removing all the shit it was supposed to mount/create.

After killing all the configuration you could've had, it will fail anyway. strace will show you why, of course, but if that's the feedback mechanism the developers had in mind...

Surely, the classification tools there can't be any worse than that? Wrong, they certainly are.

Maybe the C API is where the project shines, but I have no reason to believe that, and luckily I don't seem to have any need to find out.

Fortunately, cgroups can be controlled via regular filesystem calls, and are thoroughly documented (in Documentation/cgroups).
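To show what "regular filesystem calls" means in practice (cgroup-v1 layout, as used throughout this post), here's a Python sketch that creates a group under a mounted controller, sets knobs and moves a task into it; the paths and values are just examples.

```python
import os

def ensure_cgroup(rc_root, group, knobs=None):
    """Create `group` (e.g. "tagged/cave") under a controller mount and set knobs.

    rc_root: mounted controller dir, e.g. /sys/fs/cgroup/cpu.
    knobs: dict like {"cpu.shares": 100}; each value goes into its own file.
    Returns the cgroup's directory path.
    """
    path = os.path.join(rc_root, group)
    os.makedirs(path, exist_ok=True)  # mkdir inside cgroupfs creates the group
    for knob, value in (knobs or {}).items():
        with open(os.path.join(path, knob), "w") as dst:
            dst.write("%s\n" % value)
    return path

def attach(cgroup_path, pid):
    """Move a task into the cgroup by writing its pid to the "tasks" file.

    In cgroup-v1, "tasks" takes thread ids; "cgroup.procs" moves a whole process.
    """
    with open(os.path.join(cgroup_path, "tasks"), "w") as dst:
        dst.write("%d\n" % pid)
```

For example, `attach(ensure_cgroup("/sys/fs/cgroup/cpu", "tagged/cave", {"cpu.shares": 100}), os.getpid())` puts the calling process into that group.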

Anyway, my humble needs (for the purposes of this post) are:

- isolate compilation processes, usually performed by the "cave" client of the paludis package mangler (exherbo) and occasionally by shell-invoked make in a kernel tree, from all the other processes;
- insulate specific "desktop" processes like firefox and occasional java-based crap from the rest of the system as well;
- create all these hierarchies in a freezer and have a convenient stop-switch for these groups.

So, how would I initially approach it with libcgroup? Ok, here's the cgconfig.conf:

```
### Mounts

mount {
  cpu = /sys/fs/cgroup/cpu;
  blkio = /sys/fs/cgroup/blkio;
  freezer = /sys/fs/cgroup/freezer;
}

### Hierarchical RCs

group tagged/cave {
  perm {
    task {
      uid = root;
      gid = paludisbuild;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  cpu {
    cpu.shares = 100;
  }
  freezer {
  }
}

group tagged/desktop/roam {
  perm {
    task {
      uid = root;
      gid = users;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  cpu {
    cpu.shares = 300;
  }
  freezer {
  }
}

group tagged/desktop/java {
  perm {
    task {
      uid = root;
      gid = users;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  cpu {
    cpu.shares = 100;
  }
  freezer {
  }
}

### Non-hierarchical RCs (blkio)

group tagged.cave {
  perm {
    task {
      uid = root;
      gid = users;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  blkio {
    blkio.weight = 100;
  }
}

group tagged.desktop.roam {
  perm {
    task {
      uid = root;
      gid = users;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  blkio {
    blkio.weight = 300;
  }
}

group tagged.desktop.java {
  perm {
    task {
      uid = root;
      gid = users;
    }
    admin {
      uid = root;
      gid = root;
    }
  }
  blkio {
    blkio.weight = 100;
  }
}
```

Yep, it's huge, ugly and stupid.

Oh, and you have to do some chmods afterwards (more wrapping!) to make the "group ..." lines actually matter.

So, what do I want it to look like? This:

```yaml
path: /sys/fs/cgroup

defaults:
  _tasks: root:wheel:664
  _admin: root:wheel:644
  freezer:

groups:

  base:
    _default: true
    cpu.shares: 1000
    blkio.weight: 1000

  tagged:
    cave:
      _tasks: root:paludisbuild
      _admin: root:paludisbuild
      cpu.shares: 100
      blkio.weight: 100

    desktop:
      roam:
        _tasks: root:users
        cpu.shares: 300
        blkio.weight: 300
      java:
        _tasks: root:users
        cpu.shares: 100
        blkio.weight: 100
```

It's parseable and readable YAML, not some parenthesis-semicolon nightmare of a C junkie (btw, you may think that because of these, spaces don't matter there... well, think again!).

Didn't have to touch the parser again or debug it either (especially with - god forbid - strace), everything just worked as expected, so I thought I'd dump it here just in case.

The configuration file above (YAML) consists of three basic definition blocks:

"path" to where cgroups should be initialized.

Names for the created and mounted rcs are taken right from the "groups" and "defaults" sections.

Yes, that doesn't allow mounting the "blkio" resource controller to the "cpu" directory; guess I'll go back to using libcgroup when I want to do that... right after seeing a psychiatrist to have my head examined... if they let me go back to society afterwards, that is.

"groups" with the actual tree of group parameter definitions.

Two special nodes here - "_tasks" and "_admin" - may contain ownership/modes for all the cgroup knob-files ("_admin") and for the "tasks" file ("_tasks"), with the stuff from "defaults" used otherwise. These can be specified as "user[:group[:mode]]" (brackets indicating optional parts, of course), with unspecified optional parts taken from the "defaults" section.
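The "user[:group[:mode]]" fallback logic fits in a few lines. A Python sketch of it (a hypothetical helper, not cgconf's actual code), with modes taken as octal strings like the "664" above:

```python
def parse_owner(spec, default):
    """Parse "user[:group[:mode]]", filling missing or empty parts from `default`.

    `default` must be a complete "user:group:mode" spec, e.g. "root:wheel:664".
    Returns (user, group, mode), with mode as an int.
    """
    fallback = default.split(":")
    if len(fallback) != 3:
        raise ValueError("default must be user:group:mode, got %r" % default)
    parts = spec.split(":") if spec else []
    if len(parts) > 3:
        raise ValueError("too many fields in %r" % spec)
    user, group, mode = parts + fallback[len(parts):]
    return user or fallback[0], group or fallback[1], int(mode or fallback[2], 8)
```

So "_tasks: root:paludisbuild" with the defaults above would resolve to `("root", "paludisbuild", 0o664)`, ready for os.chown/os.chmod.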

Limits (or any other settings for any kernel-provided knobs there, for that matter) can either be defined on a per-rc-dict basis, like this:

```yaml
roam:
  _tasks: root:users
  cpu:
    shares: 300
  blkio:
    weight: 300
    throttle.write_bps_device: 253:9 1000000
```

Or just with one line per rc knob, like this:

```yaml
roam:
  _tasks: root:users
  cpu.shares: 300
  blkio.weight: 300
  blkio.throttle.write_bps_device: 253:9 1000000
```
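Both notations can be normalized into the same flat "rc.knob" mapping before anything gets written. A Python sketch of that step, assuming the group node is already loaded as a dict (e.g. by a YAML parser), that rcs are recognized from a fixed list of controller names (KNOWN_RCS is my assumption), and that any other non-"_" key is a child group:

```python
KNOWN_RCS = {"cpu", "cpuacct", "cpuset", "blkio", "memory", "devices", "freezer", "net_cls"}

def split_node(node, rcs=KNOWN_RCS):
    """Split a group node into ({rc: {knob_file: value}}, {subgroup: node}).

    Accepts both per-rc dicts ({"blkio": {"weight": 300}}) and dotted keys
    ({"blkio.weight": 300}); an empty rc entry yields an empty knob dict,
    meaning "create the group in this rc, touch nothing".
    """
    knobs, subgroups = {}, {}
    for key, value in node.items():
        if key.startswith("_"):
            continue  # _tasks, _admin, _default are handled elsewhere
        rc = key.split(".", 1)[0]
        if "." in key and rc in rcs:  # one-line form: "blkio.weight: 300"
            knobs.setdefault(rc, {})[key] = value
        elif key in rcs:  # per-rc dict form (or empty)
            per_rc = knobs.setdefault(key, {})
            for knob, knob_value in (value or {}).items():
                per_rc["%s.%s" % (key, knob)] = knob_value
        else:
            subgroups[key] = value
    return knobs, subgroups
```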

Empty dicts (like "freezer" in "defaults") will just create the cgroup in the named rc, but won't touch any knobs there.

And the "_default" parameter indicates that every pid/tid listed in the root "tasks" file of the resource controllers specified in this cgroup should belong to it. That is, the cgroup acts as the default one for any tasks not classified into any other cgroup.

"defaults" section mirrors the structure of any leaf cgroup. RCs/parameters here will be used for created cgroups, unless overridden in the "groups" section.
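In cgroup-v1 terms, the "_default" flag boils down to moving every pid listed in a controller's root "tasks" file into the default group, one pid per write. A Python sketch of that; some kernel threads can't be moved and the kernel rejects those writes, so errors are collected rather than treated as fatal (my choice of handling, not necessarily cgconf's):

```python
import os

def adopt_root_tasks(rc_root, default_group):
    """Move all tasks from a controller's root cgroup into `default_group`.

    rc_root: mounted controller dir, e.g. /sys/fs/cgroup/cpu.
    Returns (moved, failed) lists of pids.
    """
    with open(os.path.join(rc_root, "tasks")) as src:
        pids = [int(line) for line in src if line.strip()]
    dst_path = os.path.join(rc_root, default_group, "tasks")
    moved, failed = [], []
    for pid in pids:
        try:
            # cgroupfs accepts one pid per write(), hence reopening each time
            with open(dst_path, "w") as dst:
                dst.write("%d\n" % pid)
            moved.append(pid)
        except OSError:  # e.g. unmovable kernel threads, or tasks that exited
            failed.append(pid)
    return moved, failed
```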

The script to process this stuff (cgconf) can be run with --debug to dump a shitload of info about every step it takes (and why it does that), and with the --dry-run flag to just dump all the actions w/o actually doing anything.

cgconf can be launched as many times as needed to get the job done - it won't unmount anything (what for? out of fear of data loss on a pseudo-fs?), and will just create/mount missing stuff, adjust defined permissions and set defined limits without touching anything else, so it will work alongside everything else that might be using these hierarchies - systemd, libcgroup, ulatencyd, whatever... just set what you need adjusted in the .yaml and it will be there after a run, no side effects.

The cgconf.yaml (.yaml, generally speaking) file can be put alongside cgconf or passed via the -c parameter.

Anyway, -h or --help is there, in case of any further questions.

That handles the limits and the initial classification part (default cgroup for all tasks), but then the chosen tasks also need to be assigned to dedicated cgroups.

libcgroup has the pam_cgroup module and cgred daemon, neither of which can sensibly (re)classify anything within a user session, plus the cgexec and cgclassify wrappers, which basically do "echo $$ >/.../some_cg/tasks && exec $1" or just "echo", respectively.

These are dumb-simple, with nothing done to make them any easier to use than echo, so even when using libcgroup I had to wrap them.

Since I knew exactly which (few) apps should be confined to which groups, I just wrote simple wrapper scripts for each, putting these in a separate dir at the head of PATH. Example:

```
#!/usr/local/bin/cgrc -s desktop/roam
/usr/bin/firefox
```

The cgrc script here is a dead-simple wrapper that parses the cgroup parameter, puts itself into the corresponding cgroup within every rc where it exists (making a special conversion for the not-yet-hierarchical blkio, though there's a patchset for that: http://lkml.org/lkml/2010/8/30/30), and then execs the specified binary with all the passed arguments.
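Stripped of option parsing, the core of such a wrapper could look like the Python sketch below: join the group in every mounted controller that has it, using a dotted name for the flat blkio hierarchy (matching the tagged.desktop.roam naming in the libcgroup config above), then exec the target. The /sys/fs/cgroup layout, the "tagged" prefix and the function names are assumptions.

```python
import os

def join_cgroup(group, cg_root="/sys/fs/cgroup", prefix="tagged", flat_rcs=("blkio",)):
    """Put the current process into `group` in every rc where that group exists.

    group: slash-separated name like "desktop/roam"; looked up as
        <rc>/tagged/desktop/roam, or <rc>/tagged.desktop.roam for flat rcs.
    Returns the list of rcs that were joined.
    """
    parts = ([prefix] if prefix else []) + group.strip("/").split("/")
    joined = []
    for rc in sorted(os.listdir(cg_root)):
        name = ".".join(parts) if rc in flat_rcs else os.path.join(*parts)
        tasks = os.path.join(cg_root, rc, name, "tasks")
        if os.path.isfile(tasks):  # skip rcs where this group wasn't created
            with open(tasks, "w") as dst:
                dst.write("%d\n" % os.getpid())
            joined.append(rc)
    return joined

def cg_exec(group, argv, **kwargs):
    """Join `group`, then replace this process with argv (never returns on success)."""
    join_cgroup(group, **kwargs)
    os.execvp(argv[0], argv)
```

A shebang-style wrapper would then boil down to something like `cg_exec("desktop/roam", ["/usr/bin/firefox"] + sys.argv[1:])`.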

All the parameters after the cgroup (or "-g <cgroup>", for the sake of clarity) go to the specified binary. The "-s" option indicates that the script is used in a shebang, so it'll read the command from the file specified in argv after that and pass all the further arguments to it.

Otherwise the cgrc script can be used as "cgrc -g <cgroup> /usr/bin/firefox <args>" or "cgrc. /usr/bin/firefox ", so it's actually painless and effortless to use right from the interactive shell. Amen for the crappy libcgroup tools.

Another special use-case for cgroups I've found useful on many occasions is the "freezer" - no matter how many processes compilation (or whatever other cgroup-confined stuff) forks, they can be instantly and painlessly stopped and resumed afterwards.

The cgfreeze dozen-liner script addresses this need in my case: "cgfreeze cave" will stop the "cave" cgroup, "cgfreeze -u cave" will resume it, and "cgfreeze -c cave" will just show its current status; see -h there for details. No pgrep, kill -STOP or ^Z involved.
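The freezer is driven through a single file, freezer.state, which accepts "FROZEN" or "THAWED". Here is a sketch of a cgfreeze-like tool in Python, assuming the cgroup v1 freezer hierarchy is mounted at /sys/fs/cgroup/freezer (FREEZER_ROOT is an assumption); only the -u and -c options come from the description above, and the function names are hypothetical.

```python
import argparse
import os

# Assumption: the cgroup v1 freezer hierarchy is mounted here.
FREEZER_ROOT = "/sys/fs/cgroup/freezer"


def state_path(cgroup, root=FREEZER_ROOT):
    return os.path.join(root, cgroup.strip("/"), "freezer.state")


def get_state(cgroup, root=FREEZER_ROOT):
    """State as reported by the kernel: THAWED, FREEZING or FROZEN."""
    with open(state_path(cgroup, root)) as src:
        return src.read().strip()


def set_state(cgroup, frozen, root=FREEZER_ROOT):
    """Stop or resume every task in the cgroup at once."""
    with open(state_path(cgroup, root), "w") as dst:
        dst.write("FROZEN" if frozen else "THAWED")


def run(argv, root=FREEZER_ROOT):
    parser = argparse.ArgumentParser(prog="cgfreeze")
    mode = parser.add_mutually_exclusive_group()
    mode.add_argument("-u", dest="thaw", action="store_true", help="resume (thaw) the cgroup")
    mode.add_argument("-c", dest="check", action="store_true", help="only show the current state")
    parser.add_argument("cgroup")
    opts = parser.parse_args(argv)
    if not opts.check:
        set_state(opts.cgroup, frozen=not opts.thaw, root=root)
    return get_state(opts.cgroup, root)
```

On a real system the state read back right after a freeze request may still be "FREEZING" until the kernel has stopped every task, so a caller that needs certainty should poll until it reads "FROZEN".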

Guess I'll direct the next poor soul struggling with libcgroup here, instead of wasting time explaining how to work around that crap and facing the inevitable question "what else is there?" *sigh*.

All the mentioned scripts can be found here.

I rarely watch footage from various conferences online, usually because I have some work to do, and video takes much more dedicated time than the same thing written on a webpage, making it basically a waste of time, but sometimes it's just fun.

Watching the familiar "desktop linux complexity" holy war right on the stage of the "Desktop on the Linux..." presentation at 27c3 (here's the dump, available atm; a better one should probably appear in the Recordings section) certainly was, and since there are a few other interesting topics on the schedule (like DJB's talk about high-speed network security) and I have some extra time, I decided not to miss the fun.

The problem is, "watching stuff online" is even worse than just "watching stuff": either you pay attention or you just miss it, so I set up recording as a sort of "fs-based cache", at the very least to watch the recorded streams right as they get written, being able to pause or rewind should I need to.

The natural tool for the job is mplayer, with its "-dumpstream" flag.

It works well up until some network (remote or local) error or just an mplayer glitch, which seems to happen quite often.

That's when mplayer crashes with the funny "Core dumped ;)" line, and if you're watching the recorded stream atm, you'll certainly miss it at the time, noticing the fuckup only when whatever you're watching ends abruptly and the real-time talk is already finished.

Somehow, I managed to forget about the issue and got bitten by it soon enough.

So, mplayer needs to be wrapped in a while loop, but then you also need to give dump files unique names to keep mplayer from overwriting them, and actually run several such loops for the several streams you're recording (different halls, different talks, same time), and then, probably because of strain on the streaming servers, mplayer tends to reconnect several times in a row, producing lots of small dumps which aren't really good for anything, and you'd also like to get some feedback on what's happening, and... so on.

Well, I just needed a better tool, and it looks like there aren't many simple non-gui dumpers for video+audio streams, and not many libs to connect to http video streams from python, the existing one being the vlc bindings, which probably aren't any better than mplayer, given that all I need is to dump a stream to a file, and they come without any reconnection, rotation or multi-stream handling mechanism either.

To cut the story short, I ended up writing a slightly more complicated eventloop script to control several mplayer instances, aggregating their output (marking each line accordingly), restarting failed ones, discarding failed dumps and making up sensible names for the produced files.

It was a quick ad-hoc hack, so I thought to implement it straight through signal handling and a poll loop for the output, but thinking about all the async calls and state-tracking it'd involve, I quickly reconsidered and just used twisted to shove all this mess under the rug, ending up with a quick and simple 100-liner.
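The real script drives several mplayer instances from a twisted event loop; the sketch below swaps that for plain blocking subprocess calls on a single stream, just to show the restart, naming and discard logic. The file naming scheme, the 2 MiB "too small to keep" threshold and the rule that a zero exit code means the stream is really over are all assumptions, as are the function names; "-dumpstream" and "-dumpfile" are mplayer's raw-dump options.

```python
import os
import subprocess
import time


def mplayer_cmd(url, path):
    """mplayer invocation that dumps the raw stream from url into path."""
    return ["mplayer", "-dumpstream", "-dumpfile", path, url]


def dump_path(dump_dir, stream, attempt):
    """Unique name per stream and attempt, so a restart never overwrites older dumps."""
    stamp = time.strftime("%Y%m%d-%H%M%S")
    return os.path.join(dump_dir, "%s.%s.%02d.dump" % (stream, stamp, attempt))


def record(stream, url, dump_dir, make_cmd=mplayer_cmd, min_size=2 * 2**20,
           max_attempts=50, retry_delay=1.0, log=print):
    """Restart the dumper until it exits cleanly, dropping dumps below min_size bytes."""
    kept = []
    for attempt in range(max_attempts):
        path = dump_path(dump_dir, stream, attempt)
        code = subprocess.call(make_cmd(url, path))
        size = os.path.getsize(path) if os.path.exists(path) else 0
        if size >= min_size:
            kept.append(path)
            log("[%s] kept %s (%d bytes, exit %d)" % (stream, path, size, code))
        else:
            if os.path.exists(path):
                os.unlink(path)
            log("[%s] discarded %d-byte dump (exit %d)" % (stream, size, code))
        if code == 0:
            break  # assumption: a clean exit means the stream actually ended
        time.sleep(retry_delay)
    return kept
```

Recording several halls at once would take one such loop per stream (a thread each, say), with the "[stream]" prefix in each log line keeping the aggregated output readable; that juggling is exactly where an event loop starts to pay off.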

And now, back to the ongoing talks of day 3.

Some time ago I decided to check out the pulseaudio project, and after a few docs it was controlling all the sound flow in my system; since then I've never really looked back to pure alsa.

At first I just needed the sound-over-network feature, which I'd used extensively a few years ago with esd, and pulse offered full transparency here, not just limited support. Hell, it even has simple-client netcat'able stream support, so there's no need to look for a client on alien OSes.
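To show what "netcat'able" means here: pulse's simple protocol module (module-simple-protocol-tcp) takes raw PCM over a plain TCP connection, with no client library involved. A minimal sketch, assuming the module is loaded on the server with a sample format matching the one generated below (signed 16-bit little-endian, 44100 Hz, stereo); the host and port in the usage line are hypothetical and must match wherever the module is actually listening.

```python
import math
import socket
import struct


def sine_pcm(freq, seconds, rate=44100, channels=2, amplitude=0.3):
    """Raw s16le interleaved PCM for a sine tone, the same sample in every channel."""
    frames = int(rate * seconds)
    out = bytearray()
    for i in range(frames):
        sample = int(amplitude * 32767 * math.sin(2 * math.pi * freq * i / rate))
        out += struct.pack("<h", sample) * channels
    return bytes(out)


def send_pcm(host, port, data):
    """Push raw PCM at the server, just like piping a raw file through netcat."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(data)
```

For example, send_pcm("192.168.0.1", 4711, sine_pcm(440, 2.0)) would push a two-second 440 Hz tone; any machine with a TCP stack can do the same, which is the whole point.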

Controllable and centralized resampling was the next nice feature, because some apps (notably audacious and aqualung) seemed to waste quite a lot of resources on it in the past, either because of unreasonably high quality or just suboptimal algorithms; I've never really cared to check. Alsa should be capable of doing that as well, but for some reason it failed me in this regard before.

One major annoyance, though, was the absence of a simple tool to control volume levels.

pactl seems to be only good for muting the output, while the rest of the pa-stuff on the net seems to be based on either gtk or qt, and I needed something to bind to hotkeys and quickly run inside a readily-available terminal.

Maybe it's just an old habit of using alsamixer for this, but replacing it with heavy gnome/kde tools for such a simple task seems unreasonable, so I thought: since it's a modern daemon with a lot of well-defined interfaces, why not write my own?

I considered writing a simple hack around pacmd/pacli, but they aren't very machine-oriented and regex-parsing is not fun, and then I found that newer (git or v1.0-dev) pulse versions have a nice dbus interface to everything.

The only problem there is that it doesn't really work, crashing pulse on any attempt to get some list from properties. I had to track down the issue (good thing it's fairly trivial to fix, just a simple revert), and then hacked up a simple non-interactive tool to adjust sink volume by some percentage, specified on the command line.
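The dbus plumbing is left out here, since it depends on pulse's dbus module being loaded; this hypothetical helper only shows the arithmetic of "adjust by some percentage". It assumes the sink volume arrives as a list of per-channel integers on pulse's native volume scale, where PA_VOLUME_NORM (0x10000) means 100%, and it treats the percentage as a fraction of that value, clamping at 100% by default so a hotkey can't push the sink into amplification.

```python
PA_VOLUME_NORM = 0x10000  # pulse's "100%" volume value
PA_VOLUME_MUTED = 0


def adjust_volume(channels, percent, ceiling=PA_VOLUME_NORM):
    """Shift every channel by `percent` of PA_VOLUME_NORM, clamped to [muted, ceiling]."""
    step = int(round(PA_VOLUME_NORM * percent / 100.0))
    return [max(PA_VOLUME_MUTED, min(ceiling, v + step)) for v in channels]


def as_percent(channels):
    """Average channel level as a percentage of PA_VOLUME_NORM, for display."""
    return 100.0 * sum(channels) / (len(channels) * PA_VOLUME_NORM)
```

A hotkey bound to "+5" or "-5" then just maps to adjust_volume(current, int(arg)), and writing the result back as the sink's volume property finishes the job.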

It was good enough for hotkeys, but I still wanted some nice alsamixer-like bars, and thought it might be a good place to implement per-stream sound level control as well, which is another really nice feature, but only as long as there's a way to actually adjust these levels, which there wasn't.

A few hours of python and learning curses, and there we go:

ALSA plug-in [aplay] (fraggod@sacrilege:1424) [ #############---------- ]
MPlayer (fraggod@sacrilege:1298) [ ####################### ]
Simple client (TCP/IP client from 192.168.0.5:49162) [ #########-------------- ]
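The bars above are just text, so rendering them can be kept separate from curses entirely. A sketch of one way to produce rows in that format; the 23-character bar width matches the output above, while the 80-column row, the padding and truncation rules and the function names are assumptions.

```python
def render_bar(level, width=23):
    """Render a 0..1 level as an alsamixer-style '[ ###--- ]' bar."""
    level = max(0.0, min(1.0, level))
    filled = int(round(level * width))
    return "[ %s%s ]" % ("#" * filled, "-" * (width - filled))


def render_row(name, level, columns=80, width=23):
    """Pad or truncate the stream name so every bar ends at the same column."""
    bar = render_bar(level, width)
    room = max(0, columns - len(bar) - 1)
    return name[:room].ljust(room) + " " + bar
```

In the curses loop each row would then go out through something like stdscr.addstr(y, 0, row), with the arrow keys changing the level of the selected stream before a redraw.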

The result was quite responsive and solid, which I kinda didn't expect from any sort of interactive interface.

Guess I may not be the only person in the world looking for a cli mixer, so I'll probably put the project up somewhere; meanwhile, the script is available here.

The only deps are python-2.7 with curses support and dbus-python, which should come out of the box on any decent desktop system these days anyway. The list of command-line parameters to control the sink level is available via the traditional "-h" or "--help" option, although interactive stream level tuning doesn't need any of them.